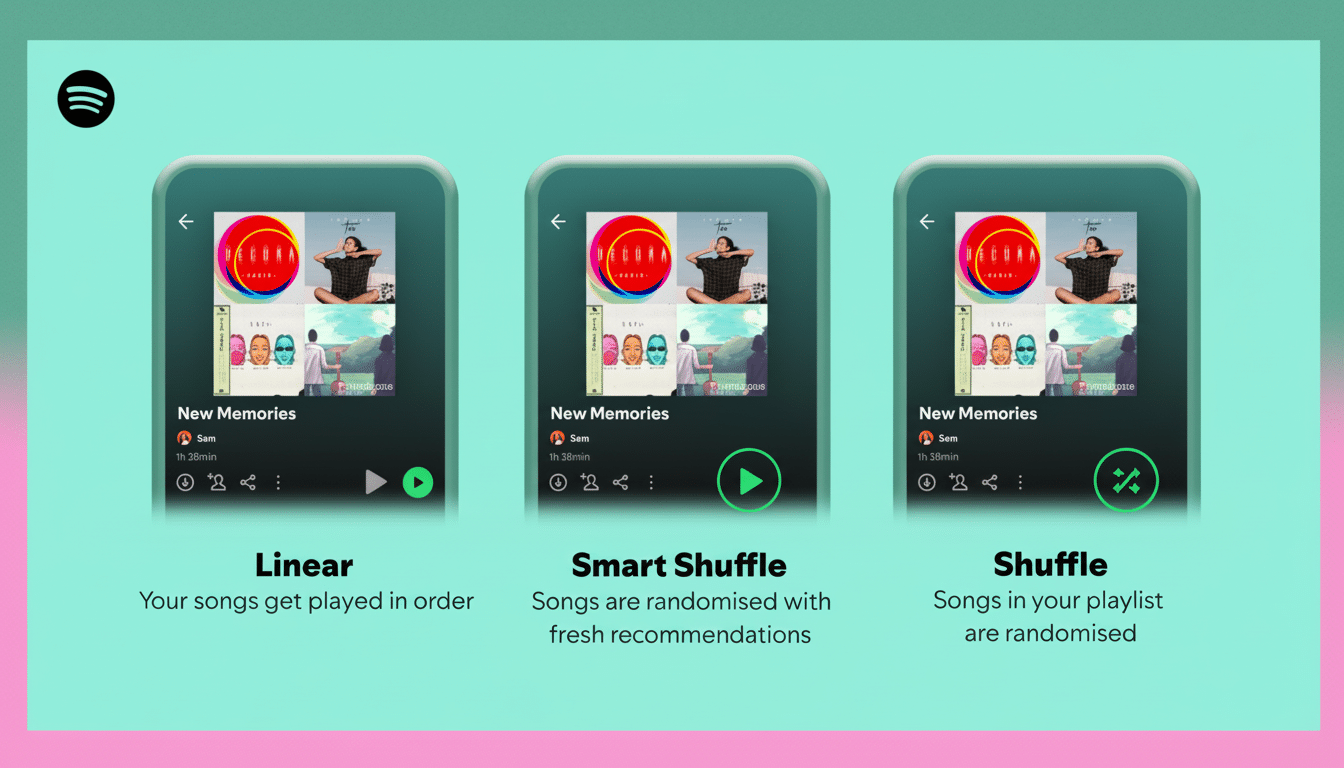

Spotify’s Smart Shuffle has quietly started mixing AI-generated tracks in with human-made ones, raising new questions about transparency and consent, and about how algorithmic recommendations shape what we listen to.

A Surprise in a Seamless Mix Within Smart Shuffle

In testing, a playlist seeded with classic funk (think The Gap Band and Cameo) transitioned early on into a track called “I’m Letting Go Of The Bullsh*t” by Nick Hustles. It fit sonically: rubbery basslines, vintage drums, a silky vocal. But the lyrics were unmistakably of the moment.

A quick look at the artist profile showed real traction: hundreds of thousands of monthly listeners and seven-figure play counts on several songs. Public materials and community reporting describe it as an AI-generated project, but there was no obvious in-app notice that the voice or composition might not be human.

This is not a fringe detection problem. According to research from Deezer and Ipsos, 97% of listeners can’t tell the difference between an AI-generated track and one made by humans. When even attentive fans can’t tell, labels and context aren’t nice-to-haves; they’re table stakes.

How Smart Shuffle Probably Arrived at It

Smart Shuffle builds out from a selected song or playlist with vibe-matched additions. Behind the scenes, Spotify pairs collaborative filtering (what similar listeners like) with audio analysis of tempo, timbre, energy and instrumentation, plus real-time signals such as streaming activity, buzz on social platforms like Twitter, and press coverage.

If an AI-generated track is trending and its audio fingerprint clusters closely with your seed song, the system has every reason to slot it in. The point is that the system optimizes for fit and engagement; nothing in that objective requires the singer to be a human being.
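To make that mechanism concrete, here is a minimal Python sketch of a hybrid recommender of the kind described above. It is not Spotify’s algorithm: the `Track` fields, the feature vectors, the weights (`w_cf`, `w_audio`, `w_trend`) and the toy numbers are all hypothetical. It blends collaborative-filtering similarity, audio-feature similarity and a trend signal, and it illustrates the point: a track’s `ai_generated` flag never enters the score.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Track:
    title: str
    audio: tuple[float, ...]  # hypothetical normalized audio features (tempo, timbre, energy)
    cf: tuple[float, ...]     # hypothetical collaborative-filtering embedding
    trend: float              # hypothetical recent-popularity signal in [0, 1]
    ai_generated: bool = False


def cosine(a: tuple[float, ...], b: tuple[float, ...]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def score(seed: Track, cand: Track,
          w_cf: float = 0.5, w_audio: float = 0.35, w_trend: float = 0.15) -> float:
    """Weighted blend of listener similarity, sound similarity and popularity.

    cand.ai_generated is never read: provenance plays no part in the score.
    """
    return (w_cf * cosine(seed.cf, cand.cf)
            + w_audio * cosine(seed.audio, cand.audio)
            + w_trend * cand.trend)


def rank(seed: Track, pool: list[Track], k: int = 3) -> list[Track]:
    """Return the k highest-scoring candidates for the seed."""
    return sorted(pool, key=lambda c: score(seed, c), reverse=True)[:k]


# Toy data: a funk seed, a human funk track, a trending AI funk track, and a ballad.
seed = Track("Funk Seed", audio=(0.8, 0.6, 0.9), cf=(1.0, 0.2), trend=0.5)
pool = [
    Track("Human Funk", audio=(0.75, 0.55, 0.85), cf=(0.9, 0.3), trend=0.3),
    Track("AI Funk", audio=(0.8, 0.6, 0.88), cf=(0.95, 0.25), trend=0.9, ai_generated=True),
    Track("Ballad", audio=(0.2, 0.9, 0.1), cf=(0.1, 1.0), trend=0.5),
]

for track in rank(seed, pool):
    print(f"{track.title:<10} {score(seed, track):.3f}")
```

With these toy numbers, both funk tracks match the seed almost perfectly on sound, so the AI track pulls ahead mostly on its trend signal, and the ballad trails far behind.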

Spotify says it doesn’t penalize responsible use of AI but does remove impersonators and content farms. Recommendation systems are reactive by nature, though: if a synthetic track takes off on TikTok, it can just as easily vault into mainstream rotation.

Labeling Lags the Listening Experience on Spotify

Spotify has rolled out AI disclosures in track metadata, working with the Digital Data Exchange (DDEX) standards body. That is an important industry move: it gives AI credits a common home that travels through the entire supply chain.

The problem is visibility. Today, disclosures mostly live in credits or back-end metadata fields. There is no consistent, front-and-center badge on track or queue screens, and Smart Shuffle does not flag when a recommendation is AI-generated. In other words, the platform knows more than the listener sees.

Listeners want clarity. Eighty percent want AI music to be clearly labeled on streaming services, 72% want to know when a platform is recommending an AI-generated song or playlist, close to half want the option to filter AI out completely, and just over four in 10 say they’d skip such tracks if they came up.

Trust and the Artist Economy Under New AI Pressures

Trust is fragile in music discovery. Listeners are happy to experiment right up until they feel tricked. That risk is heightened by past controversies, including reports of AI-generated tracks appearing on the pages of deceased artists, and by the viral rise of AI “bands” with fictitious backstories that racked up big streams before admitting they weren’t real.

For creators, the stakes are material. Surveys have found up to 80% support for legislation against using musicians’ work to train AI without their permission and credit, 77% who regard uncredited AI-derived music as theft, and 83% who believe an artist’s creative “personality” should be legally protected from AI copying. Analyses from music trade bodies and creator groups suggest that, left unregulated, AI could cut earnings for some roles by double-digit percentages within a few years.

Lawmakers are paying attention. In the UK, legislators have called for tougher safeguards on training data and deepfake voices, along with transparent consumer labeling. The tide is moving toward transparency and consent as the defaults.

What Spotify Can Do Now to Improve AI Transparency

First, make disclosures visible: a uniform “AI-generated” badge on track pages, in the now-playing bar and in Smart Shuffle. Second, give users control: a settings toggle to include or exclude AI-generated tracks in playlists, Autoplay and Smart Shuffle, plus filter options in search and recommendations.
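The badge-plus-toggle proposal is simple to express in code. The sketch below assumes each queue item carries a hypothetical boolean `ai_generated` field populated from an AI disclosure in the track metadata; `QueueItem` and `build_queue` are illustrative names, not a Spotify API. AI-generated items are always badged, and dropped entirely when the toggle is off.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class QueueItem:
    title: str
    ai_generated: bool  # hypothetical flag, assumed to come from an AI disclosure in track metadata


def build_queue(items: list[QueueItem], include_ai: bool = True) -> list[str]:
    """Return display strings for a queue.

    AI-generated items always carry a visible badge; when include_ai is False
    (the hypothetical settings toggle is off), they are dropped instead.
    """
    shown = []
    for item in items:
        if item.ai_generated:
            if not include_ai:
                continue  # listener opted out of AI-generated tracks
            shown.append(f"{item.title} [AI-generated]")
        else:
            shown.append(item.title)
    return shown


queue = [
    QueueItem("Funk Classic", ai_generated=False),
    QueueItem("Synthetic Groove", ai_generated=True),
    QueueItem("Another Classic", ai_generated=False),
]
print(build_queue(queue))                    # AI track kept, with a badge
print(build_queue(queue, include_ai=False))  # AI track filtered out
```

Keeping the badge on by default and making exclusion opt-in mirrors the order of the proposal: disclosure first, control second.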

Third, require transparent AI credits as a condition of upload, and enforce them with audits and penalties for noncompliance or impersonation. Fourth, create dedicated spaces where fans of synthetic music can find it, rather than having it slipped into human-centric mixes.

Lastly, recommendation cards should state explicitly when an item features a synthetic voice or a fully AI-generated composition. Context does more than inform; it builds trust.

What Listeners Can Do to Control AI in Smart Shuffle

Check track credits for AI disclosures. Use tools such as “Exclude from your taste profile” on anything you don’t want shaping your recommendations. If you’d rather have human-only discovery, stick to curated playlists and turn Autoplay off. Flag suspected impostors and follow verified artists to strengthen your signal.

The test here isn’t complex: if an AI track can slip undetected into a heritage funk set, the platform’s labeling isn’t serving listeners, or creators.

Discovery should delight, not deceive. Clear signals and real user choice would make Smart Shuffle live up to its name.