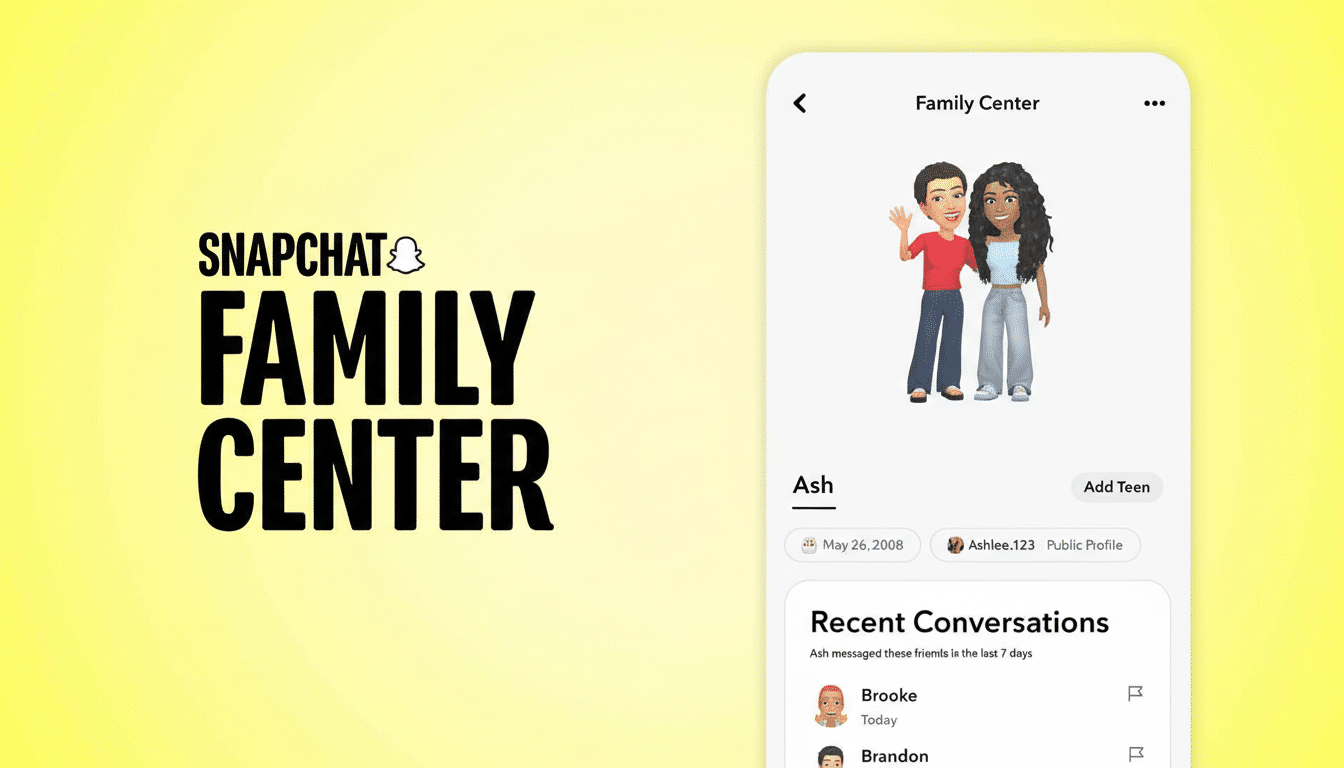

Snapchat is rolling out expanded parental controls for teen accounts, introducing new visibility into screen time and contact activity just after settling a high-profile lawsuit over youth mental health and allegedly addictive design. The move puts the app’s Family Center at the heart of a renewed push to show measurable safeguards for younger users without exposing private message content.

Within Family Center, parents and guardians linked to a teen’s account can now view a weekly breakdown of time spent on Snapchat and see the kinds of activities driving that use, such as chatting, taking photos, or browsing Snap Map. The company says the content of Snaps and chats remains private, while caregivers gain tools to guide settings and conversations at home.

Snap is also adding more context around new connections. When a teen adds a contact, parents can review mutual friends and the Snap communities that person belongs to, offering a quick way to assess whether a connection appears familiar, school-related, or a potential red flag.

What Parents Can See and Do in Snapchat Family Center

The refreshed dashboard surfaces average weekly usage, activity categories, and trends over time, helping families spot spikes in late-night scrolling or heavy chat sessions. Parents can confirm or adjust key safety settings—like who can contact their teen or view their location—without reading messages. The controls live in Family Center, which Snap launched in 2022 to reflect “real-world” oversight: visibility into behavior rather than surveillance of content.

These updates layer onto existing protections designed to reduce harmful interactions, including stricter friend recommendation rules for minors, expanded content and AI restrictions, friend list visibility options, and location alerts. Snap’s safety team has collaborated with organizations such as the National Center for Missing and Exploited Children and the National Center on Sexual Exploitation, and the company has voiced support for emerging policies like the Take It Down Act aimed at combating nonconsensual intimate imagery and deepfakes.

Why Snap Is Expanding Parental Controls Now

The timing follows a settlement with a 19-year-old plaintiff who alleged that Snapchat’s design—including feed and recommendation mechanics—contributed to compulsive use and mental health harms, a critique that has also been leveled at Meta, YouTube, and TikTok in parallel lawsuits. The case was resolved before a jury trial, and while terms weren’t disclosed, filings highlighted internal concerns raised by employees about youth well-being, adding urgency to the platform’s safety posture.

Public health data underscores the stakes. The U.S. Surgeon General’s 2023 advisory warned that excessive social media use can correlate with sleep disruption, body image issues, and anxiety, and called for stronger parental controls and data access for independent researchers. CDC surveys show sustained increases in reports of persistent sadness among teens, with girls and LGBTQ+ youth disproportionately affected. Meanwhile, Pew Research Center estimates about 60% of U.S. teens use Snapchat, and roughly 14% say they’re on it “almost constantly”—a usage pattern that heightens the importance of guardrails.

How This Fits Into Wider Regulatory Scrutiny

Regulators and lawmakers are sharpening expectations for “safety by design.” State attorneys general have sued large platforms over youth harms, the United Kingdom’s Online Safety Act imposes child protection duties, and the European Union’s Digital Services Act requires risk assessments and mitigation for systemic harms, including risks to minors. In response, tech companies are leaning more heavily on teen protections and parental oversight tools comparable to Apple’s Screen Time and Google’s Family Link.

Snap’s approach emphasizes metadata—not message content—to balance privacy with accountability. A practical example: a parent sees their teen spent several hours on Snapchat primarily chatting and browsing Snap Map, then notices a new contact with no mutual friends who belongs to adult-oriented communities. That context can trigger a timely conversation and a review of contact and location settings, without handing parents a transcript of private messages.

Safety advocates note that dashboards are a start, but defaults matter. Common Sense Media has long argued that features like time nudges, nighttime quiet hours, and friction around unknown adult contact requests are most effective when they’re on by default rather than optional. Researchers also want greater data access to evaluate whether interventions reduce problematic use or exposure to harmful content.

What Comes Next for Snapchat’s Family Center Tools

The expanded controls are rolling out now, and Snap is likely to face pressure to show real-world impact through transparency reporting and third-party audits. Expect continued scrutiny of recommendations, messaging features, and location tools—areas frequently cited by advocates and regulators alike. As lawsuits and policy proposals evolve, platforms will be measured not just by the presence of controls, but by how consistently they’re adopted, how effective they are for families, and how well they protect teen privacy while reducing risk.

For caregivers, the update adds actionable context without crossing into surveillance. For Snap, it’s a visible response in a climate demanding proof that product design prioritizes teen mental health—and that safety features are more than a settings page few families ever find.