Powerful voices in Silicon Valley are dialing up the rhetoric against AI safety groups, accusing them of self-serving agenda-pushing and stealth efforts at regulatory capture. Though it is happening on the level of research, not policy or business, the backlash signals a crucial turning point in the conversation about A.I.: the debate is no longer confined to worst-case scenarios and headline-grabbing statements that even many worriers, let alone tech skeptics, consider far-fetched.

What Triggered the Backlash Against AI Safety Groups

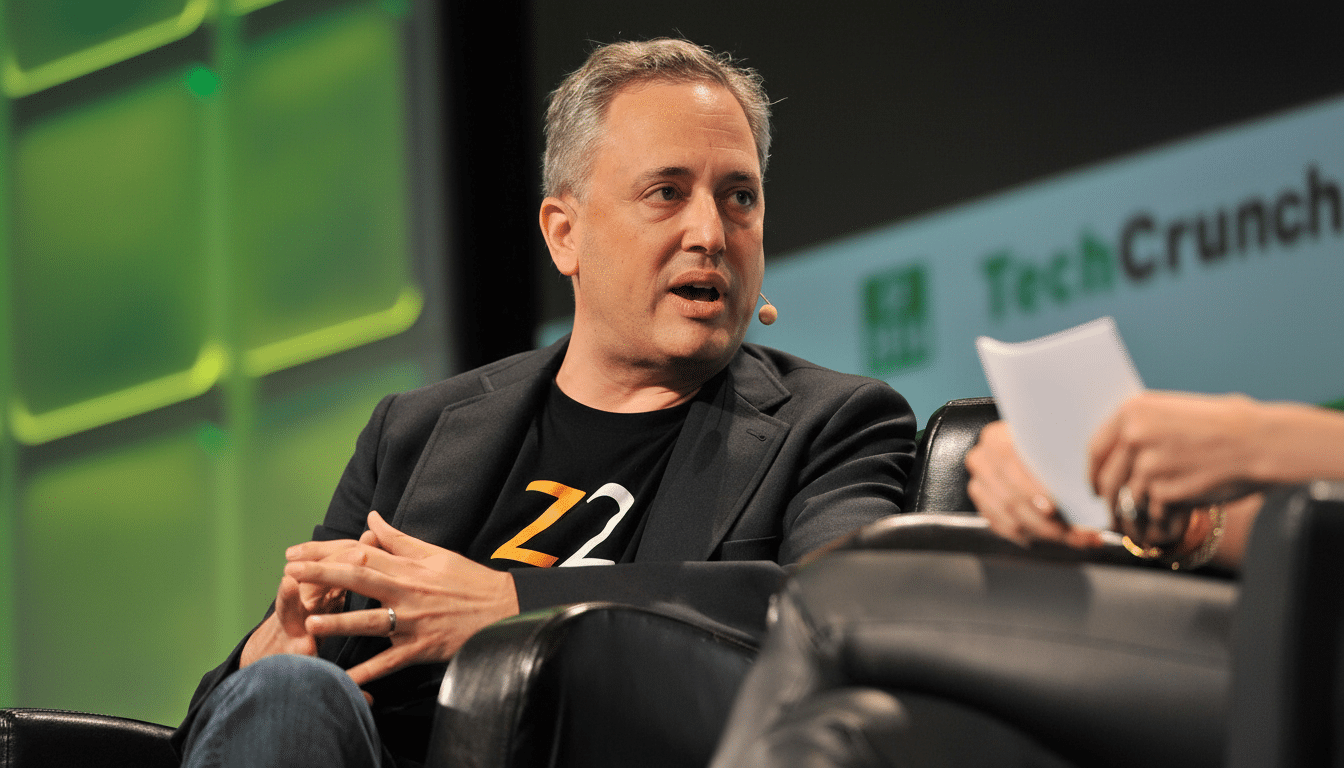

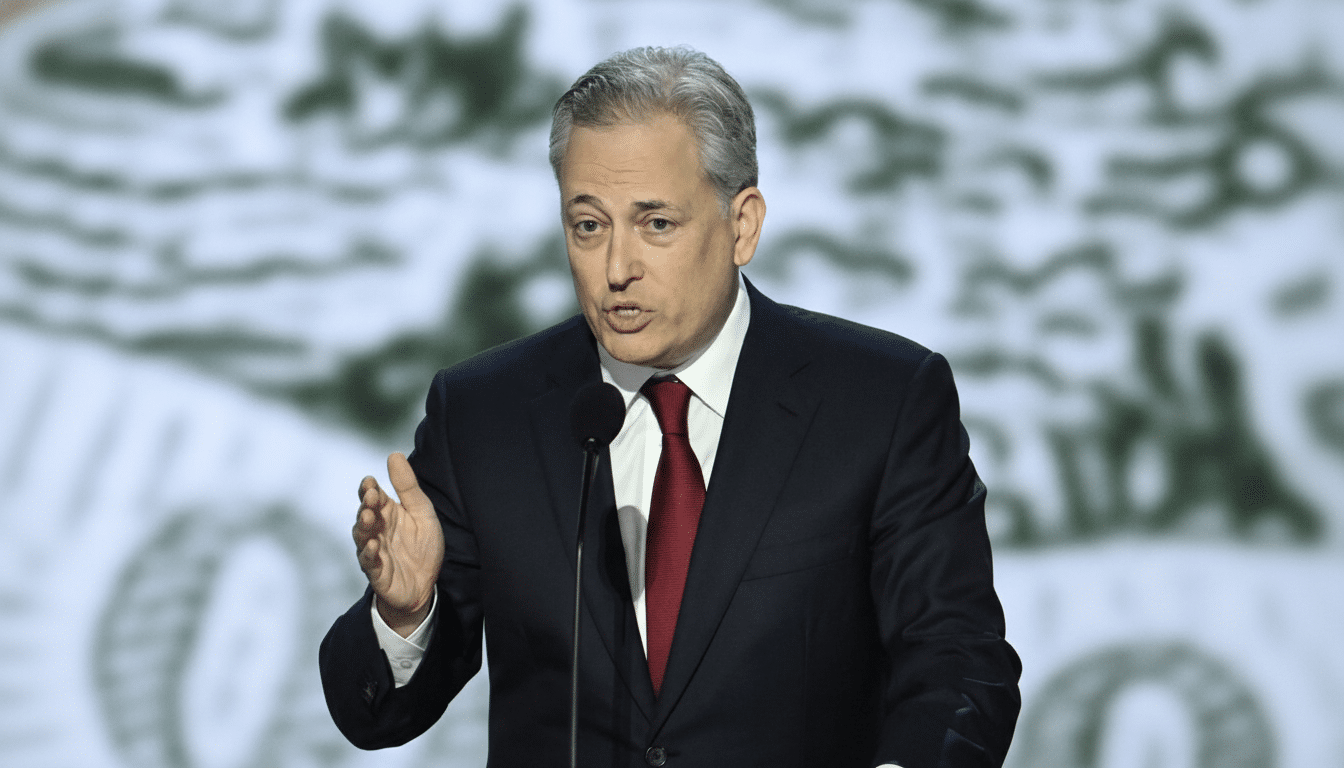

Two flashpoints sparked the latest tempest. Investor David Sacks publicly bashed Anthropic, saying the company is promoting rules that would benefit incumbents while suffocating startups in red tape. At the same time, OpenAI’s chief strategy officer Jason Kwon defended the company’s choice to subpoena AI safety nonprofits after Elon Musk sued OpenAI, and questioned whether there was coordinated opposition behind the scenes.

Anthropic, which has warned of AI’s potential for cyberattacks, job displacement and misuse, has backed new guardrails on developers of large models in California. The concerns co-founder Jack Clark laid out in a widely shared essay and talk read as the sober reflections of a builder confronting unintended consequences. Sacks interpreted them differently, describing them as an effort to tilt the regulatory landscape in Anthropic’s favor.

Accusations of Regulatory Capture in AI Safety Policy

The critique that the industry has captured regulation makes sense in a market where compliance can work like a fixed tax. If the paperwork calls for full-time policy teams and expensive evaluations, the fear is that only the largest labs, those with billionaire backers, will have a shot. This is not a theoretical concern: the Stanford AI Index has reported sustained growth in private investment in AI, with most of it flowing to a small number of firms that have made extremely large investments in compute and reach. The taller the barrier, the more entrenched today’s leaders become.

But treating all safety policy as capture risks ignoring genuine needs. The NIST AI Risk Management Framework, the UK’s push toward publicly published model evaluations, and nascent AI incident databases all point to a middle path: mandate more testing for high-capability systems but avoid kneecapping small, low-risk projects. A more pragmatic strategy is to scale rules with capability and impact rather than company size, so that capture can be limited while the safety floor is lifted.

Subpoenas Test the Limits of Debate Over AI Nonprofits

Kwon’s defense of the subpoenas centers on transparency: who funds the groups that received them, and whether those groups coordinated. The recipients included Encode, according to NBC News.

But the decision raised alarms among advocates, who said it could have a chilling effect on dissent. A strikingly blunt public comment by Joshua Achiam, OpenAI’s head of mission alignment, implied that the tactic didn’t go down well inside the organization either.

It’s not unheard-of for legal discovery to take place in corporate disputes, but applying it to nonprofits working in the policy space risks blurring the line between watchdogs and litigants. If that line blurs, the AI field might end up with less of what it needs to be trustworthy: amicus briefs, whistleblowers and independent analysis, exactly the inputs that produce credible safety oversight.

Public Opinion and Political Crosscurrents

Public sentiment tilts toward caution. Pew Research Center has repeatedly found that about half of Americans are more concerned than excited about A.I. Polling by organizations such as the AI Policy Institute suggests that voters are more concerned about proximate harms (deepfakes, fraud, job losses) than about speculative catastrophic scenarios. This gap is the source of the “out-of-touch” critique of some AI safety rhetoric, and it is what motivates industry figures like Sriram Krishnan to call for more engagement between advocates and the practitioners and end users of the technology.

Meanwhile, policymakers are moving from principles to playbooks: targeted rules for high-risk systems, safety reporting on frontier models and transparency requirements for AI-generated media. The European Union takes a tiered approach to obligations based on risk, and U.S. agencies are testing out model evaluations, procurement rules and incident reporting. These are incremental steps, but they go beyond press-release governance.

The Stakes for Startups and Big Labs in AI Safety

Startups worry about compliance drag at precisely the time when product cycles are getting compressed. Where cash is tight, fixed costs for evaluations, red-teaming and security controls can be existential. Big labs argue that proper safety testing — adversarial evaluations, alignment research, secure deployment and post-release monitoring — can’t be optional for models that support code synthesis, bio-design assistance or realistic voice cloning.

A workable compromise is coming into focus: match obligations to capability and distribution. For frontier systems, independent audit, safety incident reporting and verification of data harvesters could act as guardrails. For smaller models, lighter-touch requirements and standardized tooling lower friction. This reflects best practice in safety-critical industries, where oversight follows risk.

What Real Progress on AI Safety Actually Looks Like

Concrete wins are within reach.

- Uniform red-teaming guidelines overseen by neutral labs.

- Standards for measuring risk, generalization, and autonomy.

- Signed provenance and watermarking for synthetic media, supported by cross-industry coalitions.

- Third-party audits linked to deployment thresholds.

- Transparent pathways to report and disclose, akin to aviation’s safety culture.

Such measures do not require picking winners. They do require ways to measure risk, publish methods and demonstrate that controls work outside the ivory tower of the lab. If Silicon Valley’s loudest critics of AI safety really want to make it as hard as possible for AI to subjugate or destroy us, they’d be better off arguing for capability-based rules, open evaluations and interoperable reporting, not blanket friction.

The sudden frost toward safety advocates may discourage some watchdogs. It may also show that oversight is finally starting to bite. In any event, the next stage of AI governance will not be determined by who shouts louder but by who can translate principled concern into testable, independent and scalable safeguards.