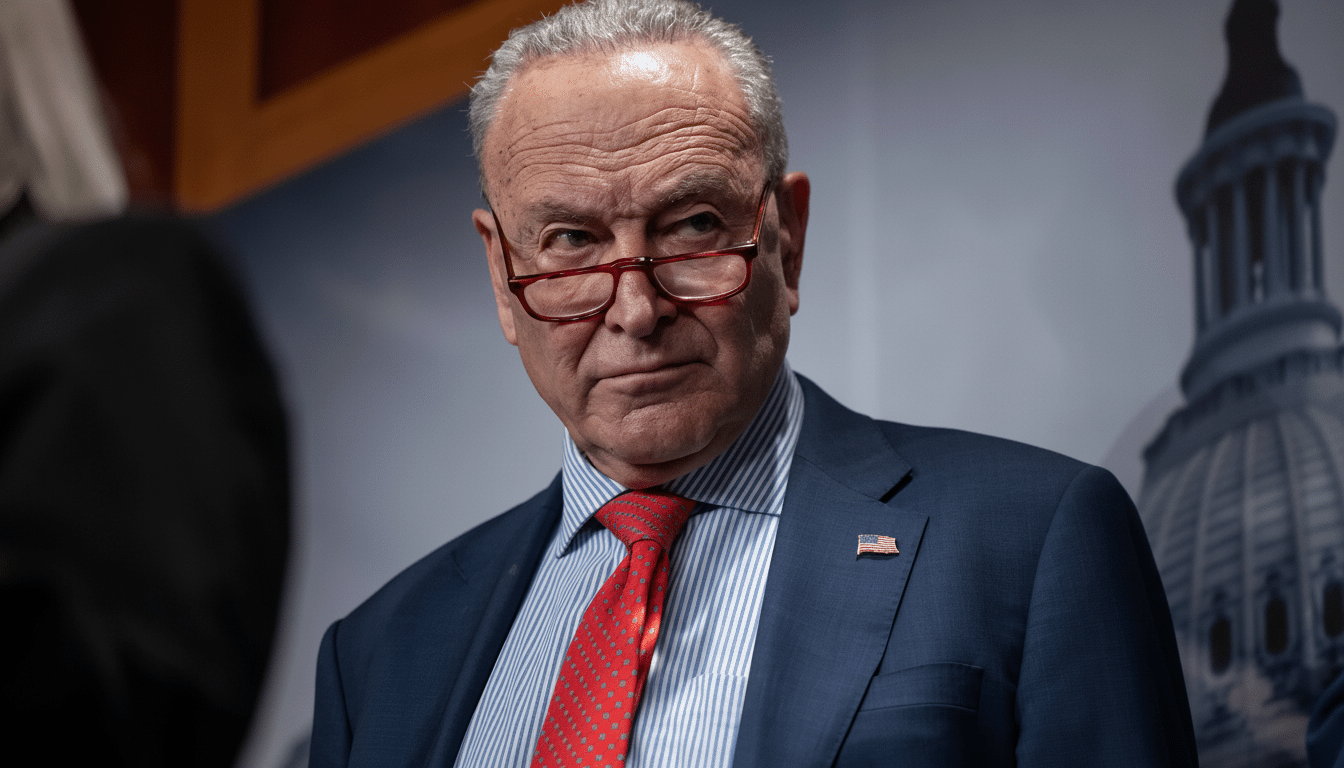

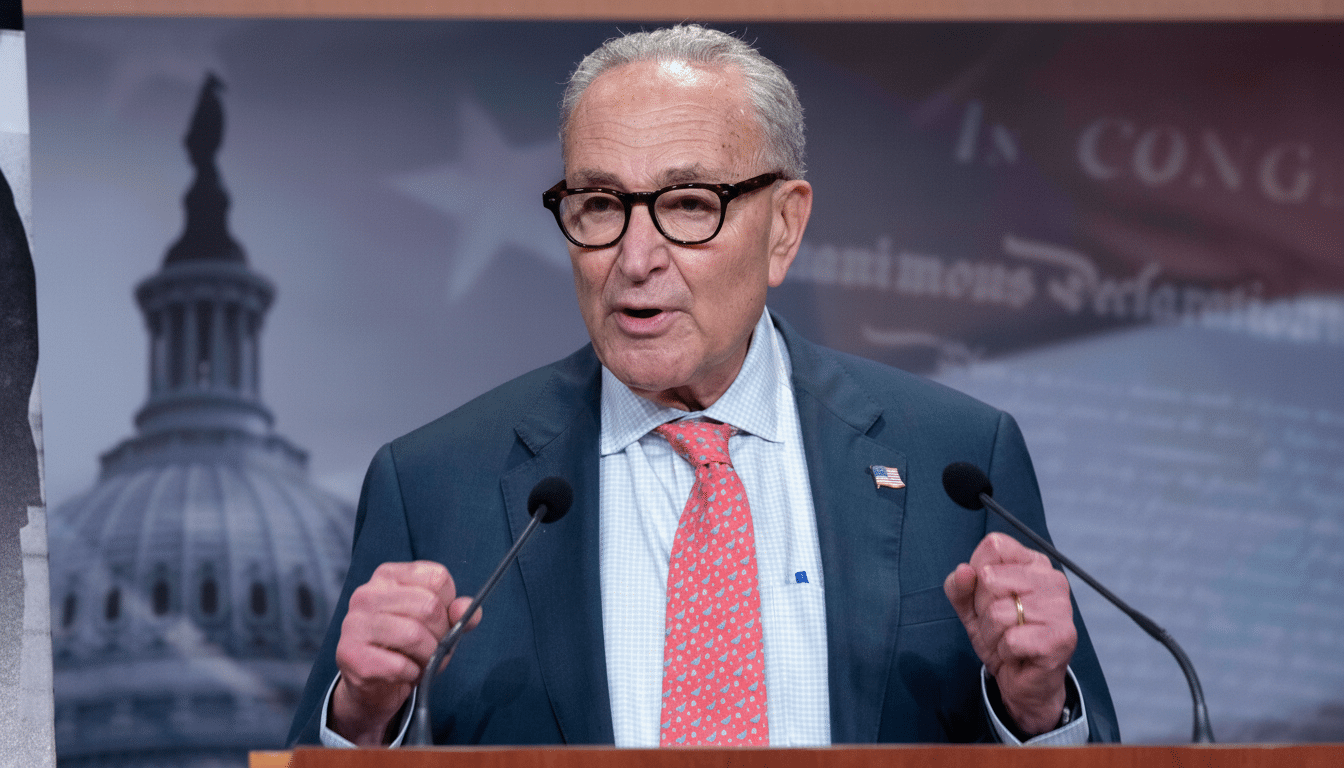

Senate Republicans have shared an AI-edited video of Senate Minority Leader Chuck Schumer that makes him appear to cheer on a prolonged government shutdown. Despite the clip's synthetic origins and questionable intent, X has neither taken it down nor added a warning label. That has drawn fresh scrutiny to how the platform enforces its own rules on manipulated media.

What the Video Shows in the Senate GOP Deepfake of Schumer

The video, posted by the official Senate Republicans account on X, shows a convincing AI-generated Schumer repeatedly saying "every day gets better for us." The line comes from a Punchbowl News report, but it has been stripped of its original context: Schumer was discussing strategy around healthcare policy and GOP pressure tactics. In the AI clip, the remark is recast as a rebuke of Democrats, who are said to "want to own shutdown over 'this president.'"

The post carries a watermark indicating that it was made with AI tools. That disclosure, however, is small and easy to miss. Neither the party nor the platform has put a label on the video explaining its origin, so casual viewers are apt to take it at face value, doubly so when it carries the imprimatur of an official party account.

Policy Gaps on X Regarding Synthetic and Manipulated Media

X’s policy on synthetic and manipulated media covers images, audio and video that have been generated or substantially altered with AI, as distinct from routine enhancements such as filters. It calls for action against deceptively shared media that could cause real-world harm, for example by leading people to believe false things about what a public figure said or did in a public debate. The enforcement options it lists include removal, warning labels, reduced visibility and added context on a post.

But as of publication, the Schumer deepfake was still easy to find, and the platform had not applied any warning to it. This isn’t the first time X has let a political deepfake stay up. Ahead of the last election cycle, X owner Elon Musk promoted a doctored video of then–Vice President Kamala Harris. It set off a debate over what separates political spin from actionable misinformation.

X’s crowdsourced fact-checks, known as Community Notes, can help, but they often lag behind viral posts. Researchers at institutions such as MIT and the University of Washington have found that labels and notes do lower belief in false content. The effect can be modest, though, and it depends heavily on how they are deployed. Timing, placement and whether users trust fellow users more than professional fact-checkers all play a role.

How the Parties Are Framing the Schumer Deepfake Incident

Democrats say the clip is a textbook case of context-stripping and voice synthesis, designed to inflame tensions during a crucial budget impasse. Republican operatives, by contrast, describe AI as an inevitable campaign tool. The communications director for the National Republican Senatorial Committee has brushed off the criticism as pearl-clutching and urged the party to adapt quickly to new media dynamics.

The broader political backdrop is this: Democrats are pushing to save health insurance subsidies for millions of people, reverse Medicaid cuts and shield public health agencies from cuts. Casting Schumer as exulting in a shutdown reframes those policy fights as cynical gamesmanship. That distortion is precisely what makes manipulated political media so corrosive.

The Law Is Playing Catch-Up to Political Deepfake Risks

State lawmakers have acted more quickly than Washington. More than two dozen states have passed measures targeting political deepfakes, especially around elections, according to tracking by the National Conference of State Legislatures and legal analyses by the Brennan Center for Justice. Laws in California, Minnesota and Texas, for instance, target AI-generated media designed to deceive voters or harm a candidate within specific pre-election windows.

Federal rules remain piecemeal. The Federal Election Commission has considered whether its existing prohibitions on fraudulent misrepresentation extend to AI-generated content. Still, there is no comprehensive federal standard. Without coherent federal guardrails, enforcement largely depends on what platforms are willing to do, and that has varied widely.

Why Disclosures and Watermarks Are Not Enough on Platforms

Watermarks and small “AI” stamps don’t hold up well in the real world. Reposts crop or shrink videos, attribution disappears and context goes up in smoke. Research published by the University of Washington's Center for an Informed Public suggests that small labels can nudge audiences slightly toward skepticism. That effect fades, however, as content circulates through polarized networks.

Technologists are trying to close the trust gap with content provenance standards such as C2PA, which cryptographically bind a piece of media to a record of how it was created and edited. That could help, but only if platforms display provenance prominently and add real friction to misleading posts. In the Schumer case, a barely perceptible watermark is no substitute for a prominent manipulated-media label or an authoritative note that restores the original context.

A Pattern of Deepfakes With Growing Electoral Misinformation Risks

This is far from an isolated case. In recent weeks, deepfakes of Schumer and House Minority Leader Hakeem Jeffries have proliferated on Truth Social, carrying false claims about immigration and voter fraud. The repetition matters. People who see the same kind of story again and again are more likely to remember it and believe it, even after they encounter corrections. This is a well-documented finding in political communication research.

X’s Next Move on Enforcing Manipulated Media Policies

The fix is to enforce the policy as written: apply a manipulated-media label, add prominent context that includes the original quote and reduce algorithmic amplification. Timing is critical: “Get there fast, because your relevance is dependent on your timeliness.” X should pair that with clearer guidance for official political accounts and a fast-track process for high-salience disputes in the run-up to elections.

Without strict enforcement, bad actors will treat AI watermarks as hall passes. The Schumer deepfake is a test of whether X can balance speech with responsibility when the stakes are nothing less than public understanding of government action. So far, the platform is failing that test in broad daylight.