A hobbyist’s weekend project to steer a robot vacuum with a PS5 controller accidentally cracked open a door to a company’s entire fleet of smart vacuums and power stations, exposing sensitive data from every customer using the platform. The episode underscores a recurring flaw in connected-device ecosystems: systems that authenticate who you are but never check what you’re allowed to see.

How A Fun Hack Turned Into A Full-Scale Exposure

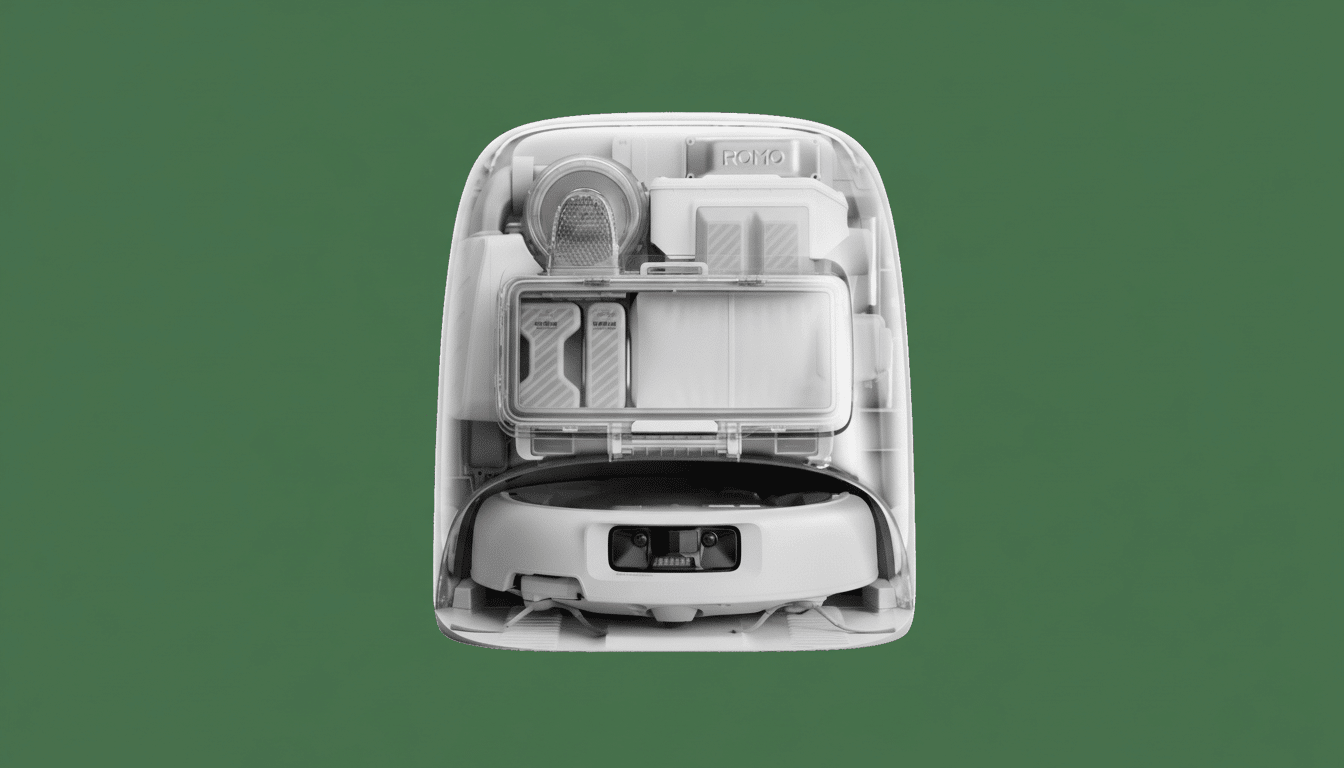

Software tinkerer Sammy Azdoufal set out to control his DJI Romo vacuum via a gamepad, enlisting an AI coding assistant to analyze the companion app and reverse engineer its communications. In the process, he discovered that the devices relied on MQTT, a lightweight publish–subscribe protocol frequently used in IoT, and that once he obtained a valid app token, the system failed to limit access to a single device.

With one token in hand, he could subscribe to topics for any vacuum or compatible power station on the platform, viewing data streams that should have been walled off per customer. Azdoufal shared details with journalists and the vendor, prompting the company to close the broad cross-account visibility. But he also found lingering weaknesses, including ways to bypass PIN protections for viewing vacuum camera feeds.

What Was Exposed And Why It Matters For Privacy

The accessible data reportedly included live or near-live vacuum camera feeds and detailed floor maps. That combination is highly sensitive: floor plans reveal room layouts, doorways, and furniture placement, while periodic activity suggests occupancy patterns. In privacy terms, the data resembles a blueprint for the interior of a home—exactly the kind of information threat actors covet for surveillance or targeted crime.

Smart-home privacy lapses are not theoretical. Test images from robot vacuum cameras circulated online in 2020 after being shared with external data-labeling contractors, and multiple camera brands have suffered server-side authorization bugs that exposed feeds to strangers. A notable cloud misconfiguration at a popular camera service in 2022 and a caching error at another provider that surfaced thumbnails to unrelated users highlight how cloud back-ends, not just devices, can be the soft underbelly.

The Technical Root Cause Behind The Data Exposure

This exposure was less about cracked encryption and more about authorization gone wrong. MQTT brokers organize data into topics; clients publish to topics and subscribe to them. Properly implemented, access tokens should be device-scoped and topic-scoped, enforcing least privilege so a user authenticated for Vacuum A cannot subscribe to Vacuum B’s topics. Here, a single token allegedly unlocked the entire namespace, the kind of failure MITRE catalogs as CWE-285 (Improper Authorization) and CWE-862 (Missing Authorization).
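To make the topic-scoping idea concrete, here is a minimal Python sketch of how MQTT wildcard filters match topics and how a broker-side check could refuse subscriptions outside a user's own devices. The topic layout `devices/<device_id>/<channel>`, the function names, and the device IDs are assumptions for illustration only; the vendor's real topic scheme has not been published. The matcher is also simplified and ignores the special handling of topics that begin with `$`.

```python
def filter_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic filter matches a concrete topic name.

    '+' matches exactly one level; '#' matches all remaining levels.
    """
    f_parts = topic_filter.split("/")
    t_parts = topic.split("/")
    for i, part in enumerate(f_parts):
        if part == "#":
            # Multi-level wildcard is only valid as the last level.
            return i == len(f_parts) - 1
        if i >= len(t_parts):
            return False
        if part != "+" and part != t_parts[i]:
            return False
    return len(f_parts) == len(t_parts)


def subscribe_allowed(owned_device_ids: set, topic_filter: str) -> bool:
    """Hypothetical broker-side ACL: allow only filters pinned to an owned device.

    Assumes topics are laid out as devices/<device_id>/<channel>.
    """
    parts = topic_filter.split("/")
    if len(parts) < 3 or parts[0] != "devices":
        return False
    device_id = parts[1]
    # A wildcard in the device position would span other customers' devices.
    if device_id in ("+", "#"):
        return False
    return device_id in owned_device_ids


if __name__ == "__main__":
    owned = {"vac-A"}
    for requested in ("devices/vac-A/#", "devices/+/map", "devices/vac-B/camera", "#"):
        print(requested, "->", "allow" if subscribe_allowed(owned, requested) else "deny")
```

Without a check like `subscribe_allowed`, a filter such as `#` or `devices/+/map` matches every device's topics, which is consistent with the behavior described in the reports.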

Best practice in IoT would layer controls: mutually authenticated TLS with per-device certificates, short-lived tokens bound to device IDs, broker-side access control lists per topic, and rigorous server-side checks that never trust client-supplied identifiers. OWASP’s IoT Top 10 calls out exactly these pitfalls, and baseline documents such as ETSI EN 303 645 and NIST’s NISTIR 8425 urge manufacturers to bake in such safeguards from the outset.
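One of those layers, a short-lived token bound to a device ID, can be sketched with Python's standard library alone. This is an illustrative design, not the vendor's token format: the claim names (`sub`, `dev`, `exp`), the five-minute default lifetime, and the hard-coded secret are all assumptions, and a production service would more likely use an established format such as JWT with keys held in a key store.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Assumption: demo-only key; a real service would load this from a key store.
SECRET = b"demo-only-secret"


def issue_token(user_id: str, device_id: str, ttl_seconds: int = 300,
                now: Optional[float] = None) -> str:
    """Issue an HMAC-signed token bound to one device with a short lifetime."""
    issued = time.time() if now is None else now
    claims = {"sub": user_id, "dev": device_id, "exp": issued + ttl_seconds}
    payload = json.dumps(claims, sort_keys=True).encode()
    sig = hmac.new(SECRET, payload, hashlib.sha256).digest()
    return (base64.urlsafe_b64encode(payload).decode() + "."
            + base64.urlsafe_b64encode(sig).decode())


def verify_token(token: str, device_id: str, now: Optional[float] = None) -> bool:
    """Accept the token only if the signature, device binding, and expiry all hold."""
    current = time.time() if now is None else now
    try:
        payload_b64, sig_b64 = token.split(".")
        payload = base64.urlsafe_b64decode(payload_b64)
        sig = base64.urlsafe_b64decode(sig_b64)
    except ValueError:
        # Wrong number of parts or invalid base64.
        return False
    expected = hmac.new(SECRET, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(sig, expected):
        return False
    claims = json.loads(payload)
    return claims["dev"] == device_id and claims["exp"] > current


if __name__ == "__main__":
    token = issue_token("user-1", "vac-A")
    print("own device:", verify_token(token, "vac-A"))
    print("other device:", verify_token(token, "vac-B"))
```

The point of the binding is that the device ID is checked against a signed claim rather than against whatever the client sends, so a token issued for one vacuum is useless for another even before it expires.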

What The Company Fixed And What Security Gaps Linger

Following disclosure, the vendor restricted the excessive access so customers could no longer view other users’ devices. That closes the most damaging avenue, but reports of remaining gaps—such as the ability to override or bypass PIN gating on camera access—suggest that authorization logic still needs hardening across all endpoints, not just the MQTT broker.

Security teams typically follow a coordinated vulnerability disclosure process, rolling out patches in stages. The real test will be whether the company issues device-bound tokens, tight per-topic ACLs, and comprehensive server-side validation, and whether it launches a public bug-bounty program to encourage independent testing.

Implications For AI And The IoT Attack Surface

AI-assisted coding didn’t create the flaw—but it did lower the barrier to discovering it. Large language models can rapidly map APIs, decode obfuscated logic, and generate working clients, accelerating both good-faith research and malicious probing. With an estimated 15+ billion connected devices in use globally, according to market analysts, even a small fraction with similar authorization bugs can translate into massive exposure.

Enterprise telemetry backs up the risk. Past assessments by security researchers have found widespread use of unencrypted or weakly protected IoT traffic in real networks, and agencies like CISA and ENISA routinely warn about lax identity, credential, and access management in consumer IoT. The lesson is plain: assume adversaries have powerful automation and design controls accordingly.

What Users Can Do Now To Protect Their Privacy

- Update the vacuum’s firmware and app immediately and reauthenticate to force fresh tokens.

- Reset any camera PINs and disable remote viewing features you don’t need.

- Place smart-home devices on a separate Wi-Fi network or VLAN; block unnecessary outbound connections at the router.

- Review the vendor’s privacy settings and opt out of cloud data retention where possible.

What Vendors Should Change To Fix Authorization Flaws

- Enforce device-scoped, short-lived tokens and broker-level topic ACLs; implement mutual TLS with per-device certificates and certificate pinning.

- Validate all authorization on the server side; never trust client-supplied IDs for access decisions.

- Instrument robust logging and anomaly detection to flag cross-tenant access patterns in real time.

- Align with ETSI EN 303 645 and NISTIR 8425, and launch a public vulnerability disclosure or bug-bounty program.
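Two of the recommendations above, server-side authorization and detection of cross-tenant access, can be combined in a small Python sketch. Everything here is hypothetical: the ownership table, the account and device names, the `AccessMonitor` class, and the alert threshold are illustrative assumptions, and a real deployment would back them with a database and a proper alerting pipeline.

```python
from collections import Counter

# Hypothetical server-side ownership records: device ID -> owning account.
OWNERS = {"vac-A": "alice", "vac-B": "bob", "station-C": "bob"}


class AccessMonitor:
    """Authorize requests from server-side records and count denied attempts."""

    def __init__(self, owners: dict, alert_threshold: int = 3):
        self.owners = owners
        self.alert_threshold = alert_threshold
        self.denials = Counter()

    def authorize(self, session_account: str, requested_device: str) -> bool:
        """Allow access only if the server's own records show the account owns the device.

        The requested device ID comes from the client, so it is treated purely
        as a lookup key and never as proof of ownership.
        """
        if self.owners.get(requested_device) == session_account:
            return True
        self.denials[session_account] += 1
        return False

    def flagged_accounts(self) -> list:
        """Return accounts whose denied requests reached the alert threshold."""
        return sorted(acct for acct, count in self.denials.items()
                      if count >= self.alert_threshold)


if __name__ == "__main__":
    monitor = AccessMonitor(OWNERS, alert_threshold=2)
    for device in ("vac-A", "vac-B", "station-C"):
        print("alice ->", device, monitor.authorize("alice", device))
    print("flagged:", monitor.flagged_accounts())
```

Counting denials per account is a deliberately crude signal; it is meant only to show that one account probing many devices it does not own is cheap to detect once every access decision runs through a single server-side check.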

The bottom line: a playful experiment revealed a systemic access-control failure that turned one customer’s token into a master key. The company has shut the biggest gap, but until the remaining issues are fixed and defenses are modernized end to end, smart-home privacy will continue to rest on weak locks.