Roblox is adding facial age verification and age tiers in a wide-ranging safety crackdown aimed at limiting contact between young players and adult users. The shift follows increased scrutiny of the platform over child safety, along with legal threats, at a time when close to 40% of its community is under 13. The question is whether such tools can significantly reduce risk without introducing new privacy and accuracy problems.

What Roblox Is Changing in Its New Safety Update

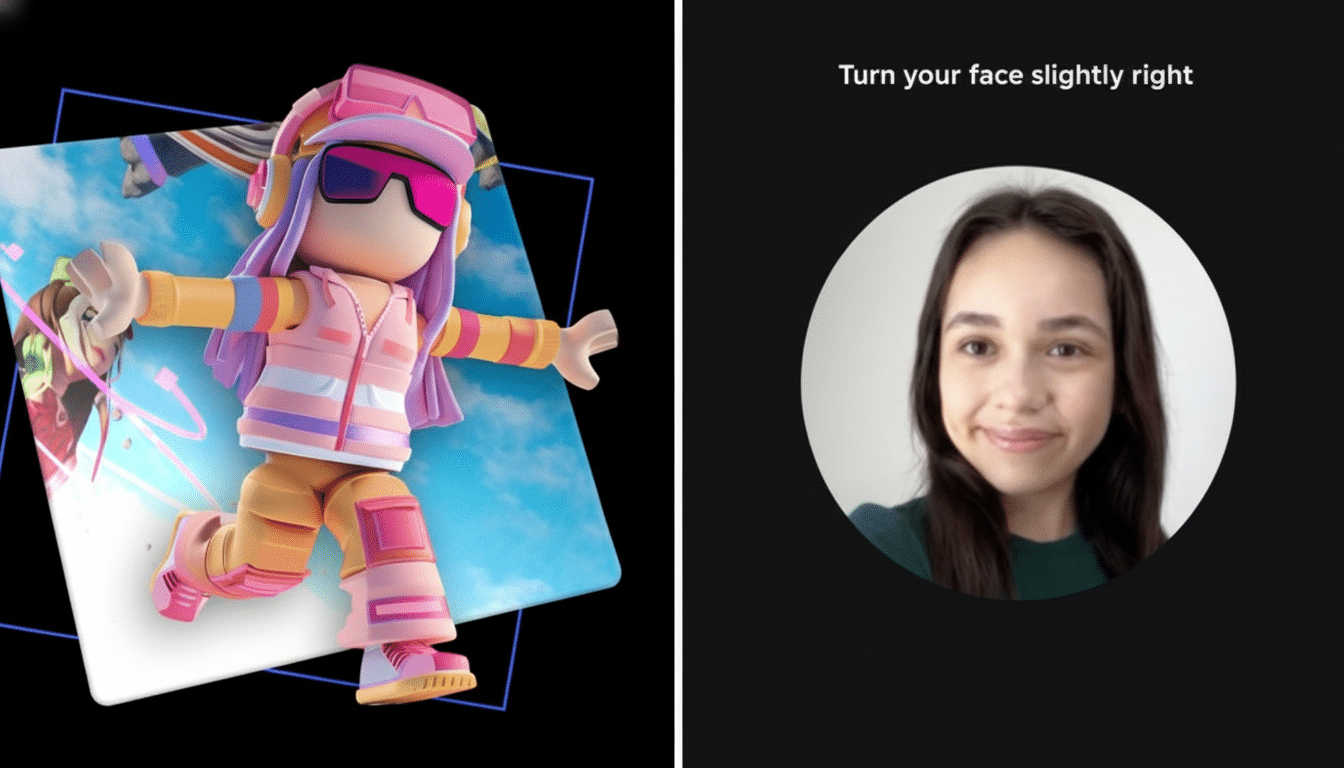

The company will have users verify their age with a government ID or a brief video selfie processed by its Facial Age Estimation feature. The processing is done by Persona, a third-party verification provider, and Roblox says images are deleted after the check. The checks will initially be optional in many areas but will become mandatory for some features and in certain markets, with a gradual global rollout planned.

Once a user’s age is verified or estimated, the account is placed into one of six bands: under 9, 9–12, 13–15, 16–17, 18–20, and 21+. Users can chat within their own band and with adjacent, age-appropriate bands. Contact beyond those boundaries requires a mutual “Trusted Connection,” which is designed to support real-life relationships, such as siblings, while restricting unsolicited contact.

How the New Roblox Age Bands Work to Limit Contact

Age bands narrow the social graph. For instance, a 12-year-old can chat with users in the 13–15 band but not with anyone 16 or older. An 18-year-old can chat with users who are at least 16 and can add a younger sibling as a Trusted Connection to stay in contact. The goal is to shrink the pool for opportunistic outreach, which is often a precursor to grooming.
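The band rules can be sketched in a few lines of Python. This is a minimal illustration, not Roblox’s implementation. It assumes chat is allowed within a user’s own band and the bands directly next to it, which is consistent with the 18-year-old example, and it treats a Trusted Connection as a simple override. The names `band_index`, `can_chat`, and `trusted` are hypothetical.

```python
from bisect import bisect_right

# Band labels as listed in the article; the lower bounds are inferred from them.
BANDS = ["under 9", "9-12", "13-15", "16-17", "18-20", "21+"]
LOWER_BOUNDS = [9, 13, 16, 18, 21]  # first age of each band after "under 9"


def band_index(age: int) -> int:
    """Return the position in BANDS of the band that contains `age`."""
    if age < 0:
        raise ValueError("age must be non-negative")
    return bisect_right(LOWER_BOUNDS, age)


def can_chat(age_a: int, age_b: int, trusted: bool = False) -> bool:
    """Assumed rule: same band or an adjacent band is allowed.

    `trusted` stands in for a mutual Trusted Connection (hypothetical flag),
    which lifts the band restriction entirely in this sketch.
    """
    if trusted:
        return True
    return abs(band_index(age_a) - band_index(age_b)) <= 1
```

Under these assumptions, `can_chat(18, 16)` is allowed, `can_chat(12, 16)` is blocked, and `can_chat(18, 10, trusted=True)` is allowed because the sibling link overrides the band check.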

Developers also gain clearer audience segmentation. Experiences can be targeted to specific age bands, and moderation can be adjusted to the age level a developer specifies for the audience. That could limit exposure to age-inappropriate content over time while still allowing social play with same-age peers.

Privacy and Verification Trade-Offs for Age Assurance

Facial age estimation is different from identity verification. It estimates a likely age from a video selfie without tracking IDs in a database, but it raises questions of accuracy. Independent testing, including research by NIST’s Face Recognition Vendor Test program, has found that face analysis performance can differ across age, gender, and skin tone, which raises fairness concerns for any system that gates access based on a machine-generated estimate.

Roblox has said the media used for checks is deleted by its vendor once the check is complete, an important reassurance to families concerned about biometric risk. Still, privacy advocates will be looking for clarity on data retention policies, information sharing between Roblox and Persona, and how the company prevents function creep. Regulators around the world, from the FTC in the United States to data protection authorities in the UK and EU, are increasingly focused on proportional, privacy-respecting “age assurance.” Roblox will be judged by those standards.

Will These New Roblox Measures Actually Keep Kids Safer?

Age stratification and contact restrictions are established forms of friction. They lower the likelihood that a 9–12-year-old will receive unsolicited messages from much older users with whom they have no prior connection, a pattern that groups like the National Center for Missing & Exploited Children warn is a common precursor to online enticement. Restricting cold contact, along with better audience targeting for experiences, should make a difference.

But that is no silver bullet. Determined offenders may still try to befriend young people in public lobbies, move conversations off-platform to external apps, exploit misclassification and shared devices, or target kids who accept Trusted Connections from people they don’t actually know. The safeguards will work best in concert with strict defaults, responsive reporting, active human and automated moderation, and faster enforcement against repeat offenders.

Context matters: Roblox has reported more than 70 million daily active users in recent letters to shareholders, and a large youth population raises the stakes. Meanwhile, lawsuits from state attorneys general in Texas, Louisiana, and Kentucky have accused the platform of not doing enough to protect minors. The rollout of age checks and grouped chat is a safety upgrade as well as a response to this legal and regulatory pressure.

What Parents and Developers Can Do Right Now

Practical steps parents can take today

- Review account settings and restrict who can chat or send friend requests.

- Enable two-factor authentication for added account security.

- Start a conversation with kids about Trusted Connections: who they are and why they matter.

- Encourage kids to keep communication within the game, and use in-experience ratings and content filters.

- Report any suspicious or concerning behavior.

Practical steps developers can take today

- Tag experiences with the intended age band.

- Avoid mechanics that can be used for private contact.

- Implement session-level protections, like default muting for new connections and proactive moderation prompts.

- Be transparent about how data will be used and how safety is designed.
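As one way to picture the default-muting idea from the developer list, here is a minimal Python sketch. It is an illustration under stated assumptions, not a Roblox API: `ChatSession`, `unmute`, and `deliver` are hypothetical names, and a real experience would implement the equivalent logic with the platform’s own chat tooling.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class ChatSession:
    """Hypothetical per-session chat state; not a Roblox API."""

    # IDs the player has explicitly chosen to hear from in this session.
    unmuted: Set[str] = field(default_factory=set)

    def is_muted(self, user_id: str) -> bool:
        # Default-deny: anyone not explicitly unmuted stays muted.
        return user_id not in self.unmuted

    def unmute(self, user_id: str) -> None:
        self.unmuted.add(user_id)

    def deliver(self, sender_id: str, text: str) -> Optional[str]:
        # Drop messages from muted senders instead of displaying them.
        return None if self.is_muted(sender_id) else text
```

The design choice is that silence is the default and hearing from a new connection requires a deliberate action by the player, rather than the other way around.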

Bottom line: Roblox’s face checks and age bands are meaningful guardrails, and they echo a broader industry trend toward verifying ages. Whether they work will depend on accuracy, privacy safeguards, robust defaults, and swift enforcement. The tools lower risk; sustained safety will depend on how reliably they are used and on how fast the platform closes the remaining loopholes.