Consumers are more likely to open their wallets for artificial intelligence tools that feel responsible and transparent, according to new research from Deloitte. The company’s new Connected Consumer Survey, which polled 3,524 US consumers, shows that trust and innovation have become critical to whether people pay for generative AI services, a development that could change how vendors build and market AI offerings.

Trust, Innovation Are Measures For Willingness To Pay

Four in 10 generative AI users already report that someone in their household pays for such tools and services, a substantially higher figure than previous research, which found willingness to pay for AI in the single digits, Deloitte reports. The difference, Deloitte says, comes down to which providers are perceived as delivering both innovative new features and responsible data stewardship.

To interpret the spending profile, Deloitte classified tech providers into four groups (Trusted Trailblazers, Fast Innovators, Data Stewards, and Slow Movers) based on consumer perceptions of innovation velocity, personalization speed, and core data responsibility (protection, transparency, and user control). Consumers aligned with Trusted Trailblazers spend 62% more annually on devices and 26% more per month on services than those aligned with Slow Movers, and are more likely to increase spending in the coming year.

The lesson is simple: when consumers believe the provider is innovative and trustworthy, willingness to pay increases. As lead author Steve Feinberg explains, people are getting savvier about the trade-offs — and rewarding companies that treat safety, privacy, and clarity as features, not fine print.

Usage Is Soaring as Consumer Expectations Mature

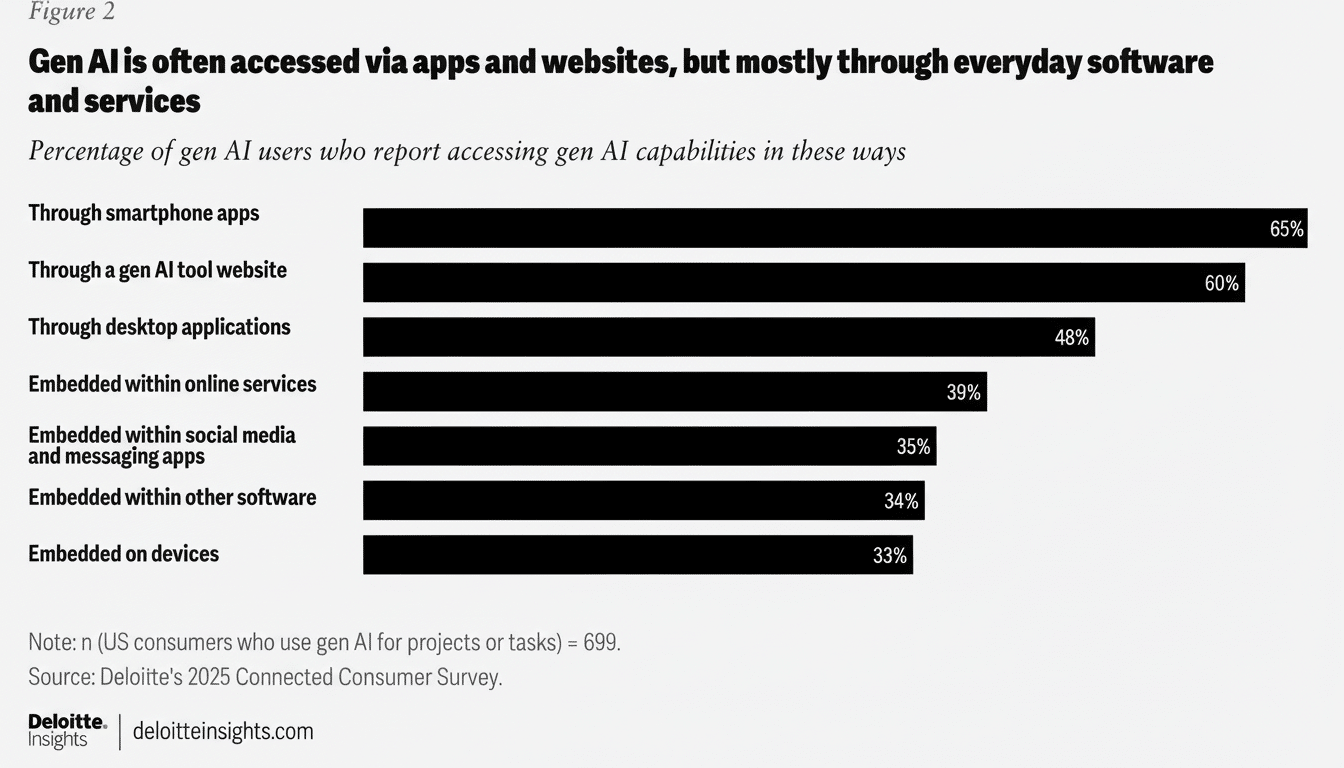

AI is increasingly a part of daily life. Generative AI is no longer just a research topic; Deloitte finds 53% of consumers are already dabbling in or using generative AI, compared to 38% last year. Roughly half of people interact with it at least daily, and 38% engage with AI on a weekly basis or more. Among everyday users, 42% say the technology has a very positive effect on their lives — a higher share than the ratings they give to devices and apps more generally.

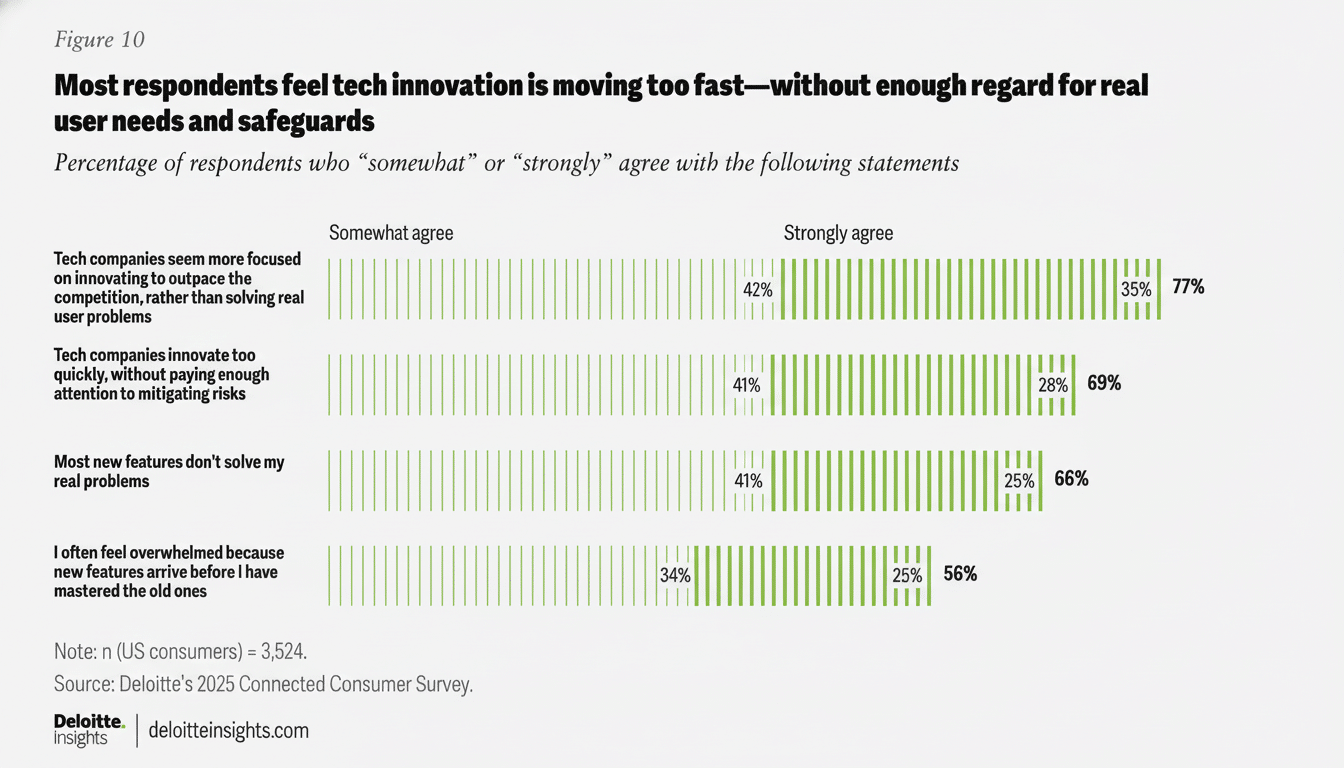

But growing usage has not automatically turned into paid subscriptions. Those who don’t pay are held back by utility and price: half say free tools are adequate, one in five say they don’t use AI frequently enough to merit a subscription, and 17% name cost. That calculus changes when products demonstrate responsible design, for instance through strong privacy controls, transparent data practices, and trustworthy outputs.

Risk Awareness Is Rising Among Everyday AI Users

With adoption comes concern. Eighty-two percent of users and experimenters think generative AI could be used unethically, up from 74%, according to Deloitte. Seventy-four percent fret about the erosion of critical thinking. One-third said they have encountered incorrect or misleading information while using the apps, and almost a quarter described problems with data privacy.

These anxieties are not limited to bad actors; they increasingly involve the providers themselves. That nuance echoes a broader trend in policy and standards, from the US National Institute of Standards and Technology (NIST) AI Risk Management Framework to comparable obligations under the EU AI Act. Consumers are learning the language of model transparency, provenance, and user control, and they expect vendors to speak it fluently.

What Responsible AI Really Means to Today’s Buyers

Responsibility is increasingly something end users experience directly in the product. Some features that stand out include:

- Explainable outputs

- Clear provenance metadata on generated content

- Easy-to-find options for erasing and opting out of data usage

- Stringent age protections

- Protection against hallucinations

Enterprise buyers are increasingly requesting:

- Isolation of customer data

- Audit trails

- Policy enforcement layers

Real-world examples are shaping expectations. Privacy-focused strategies, such as supporting on-device processing for select AI capabilities, training on licensed or company-owned data sets, and publishing model cards detailing limitations, are emerging as trust indicators. Vendors who focus on these aspects are well placed to defend subscriptions and premium tiers.

The Competitive Advantage of Trust in AI Offerings

Deloitte’s breakdown points to a business upside for responsibility. Trusted Trailblazers not only over-index on current spending but also stand to capture future wallet share as consumers upgrade devices and add services. By contrast, providers that are slow to catch up on transparency and data protection risk getting stuck in “good enough” territory, where free tools dominate and pricing power shrivels.

For product leaders, that implies a playbook:

- Make trust measurable in the experience, not simply in policy pages.

- Create transparency dashboards.

- Default to data minimization.

- Watermark AI outputs and explain model improvements and limitations in simple terms.

- Package safety reviews and red-teaming as salable features.

- Align internal practices with recognized standards and translate responsibility into a consumer feature.

The Bottom Line for Growing AI Subscription Revenue

Consumers are not buying AI in the abstract; they are buying reliable end results. As use cases expand and awareness of risk grows, providers that pair smart, tailored innovation with strong data stewardship will have the clearest route to subscription revenue and to loyalty. In a crowded market of competent free products, responsibility is becoming the killer feature that sells.