A confidential report has rekindled debate over how far automation should reach inside cloud operations, alleging that artificial intelligence tools helped trigger an Amazon Web Services disruption in December. Amazon contests the claim, attributing the incident to human error and emphasizing that the impact was tightly contained and short-lived.

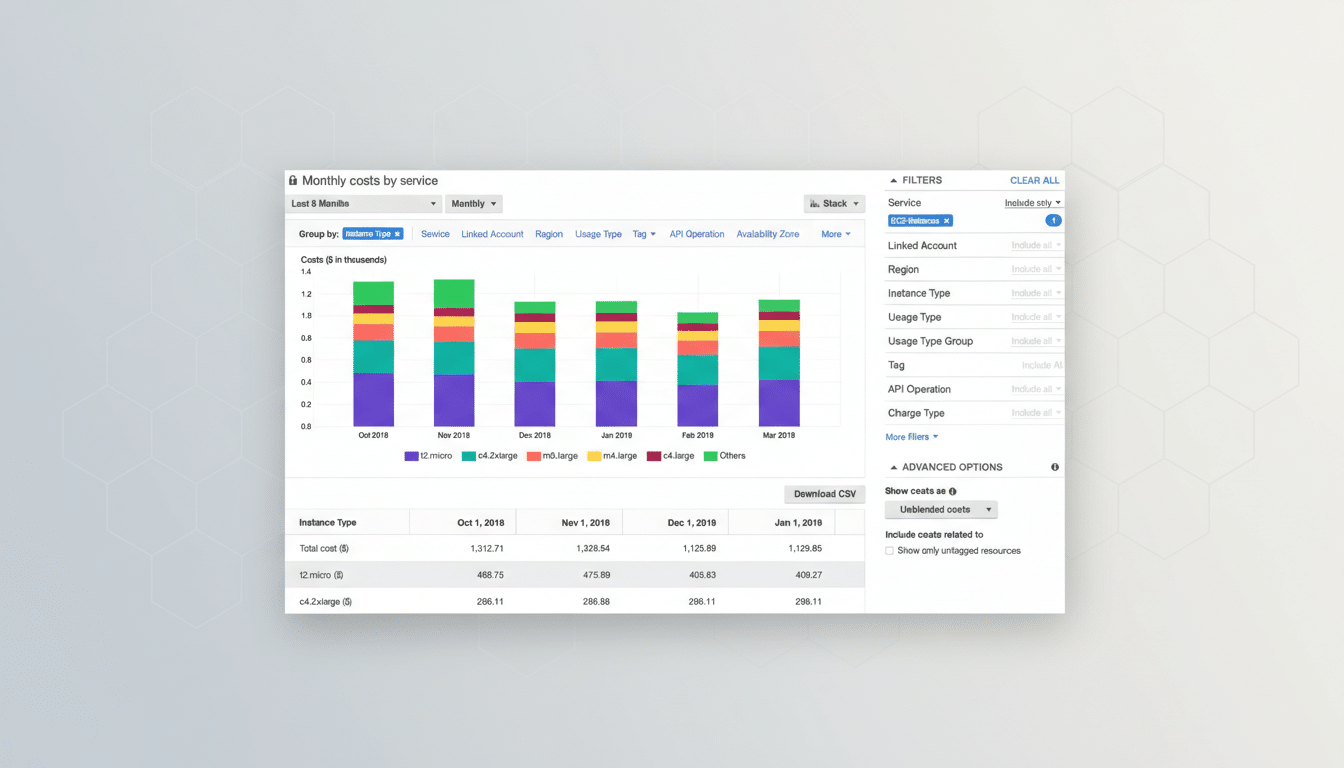

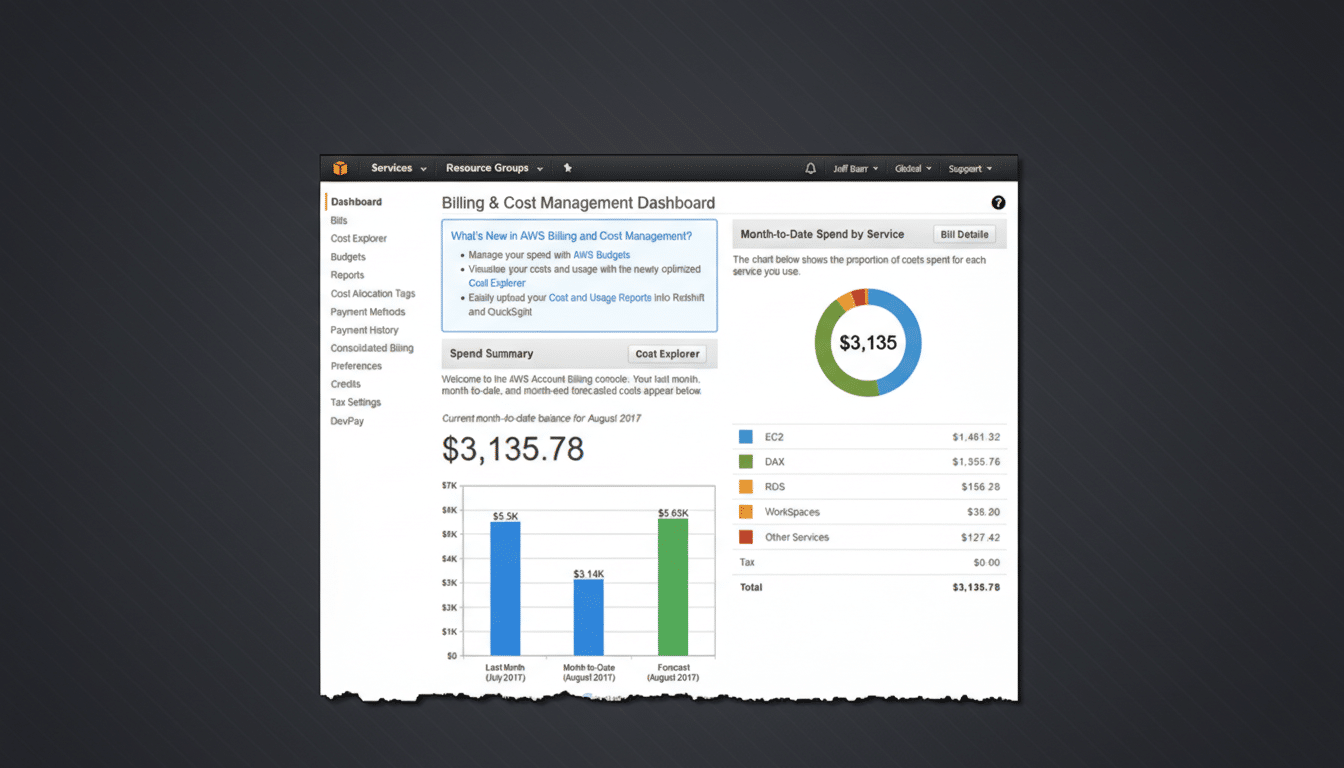

According to reporting by the Financial Times, an internal AI-assisted agent played a role in the chain of events that interrupted AWS Cost Explorer—Amazon’s billing analytics service—for customers in one of the company’s Mainland China regions. The interruption lasted about 13 hours. Amazon, however, says the root cause was misconfigured access controls put in place by engineers, not a failure of AI.

What the Confidential Report Alleges About AWS Outage

The Financial Times described an internal AI troubleshooting assistant that can recommend or enact certain operational steps as part of an ongoing push to accelerate incident response. In this account, the tool escalated or facilitated a configuration change that led to the service disruption, an example of how even well-intentioned automation can compound risk if permissions and guardrails are not perfectly tuned.

The idea tracks with a broader industry shift toward AIOps, where machine learning augments or automates reliability tasks. Used well, these systems speed detection and resolution. Used carelessly, they can amplify small mistakes—especially when access policies are overly permissive or human approval steps are too lax.

Amazon’s Account and Mitigations After December Incident

Amazon disputes that AI was the culprit. The company said the event affected only Cost Explorer in a single Mainland China region and did not touch compute, storage, databases, AI technologies, or other core services. It also said it received no customer support surge linked to the interruption.

In statements to journalists and a subsequent explainer, AWS framed the root cause as user error—specifically, a misconfiguration of access controls by engineers. The company added that its internal assistant, known internally as Kiro, operates with explicit authorization checks by default and that engineers control which actions it may take. Following the incident, AWS said it deployed additional safeguards: mandatory peer review for production access, added training on AI-assisted troubleshooting, and resource protection measures to reduce blast radius.

That posture aligns with established reliability practices: keep humans in the loop for sensitive changes, require multiple approvals, and restrict automated actions to the minimum necessary scope. It also acknowledges a hard truth of modern infrastructure: most outages are change-related, whether initiated by people, scripts, or AI-augmented tools.

The Broader Stakes for Cloud Reliability and Customers

Even a narrowly scoped outage matters when it happens inside the world’s largest cloud provider. Analyst firms have consistently estimated AWS’s global market share at roughly one-third of public cloud spend, with Microsoft and Google following. That concentration means that minor blips can ripple into thousands of downstream dashboards, billing tools, or developer workflows—even when headline services stay up.

This is why change management remains the discipline to watch. High-profile disruptions across the industry—from BGP mishaps to flawed firewall rules—often start with a permissions or configuration slip. Introducing AI into that workflow can reduce toil and speed recovery, but it also raises the bar for guardrails: least-privilege access, explicit human approvals, canary rollouts, automated policy linting, and real-time anomaly detection should be standard for any AI-initiated action in production.

Why AI in Cloud Operations Is a Double-Edged Sword

Cloud operators are racing to embed AI in everything from incident triage to capacity planning. The business case is strong: faster mean time to resolution, fewer on-call escalations, and better forecasting. But the operational math only works if safety systems are equally sophisticated. That means audit trails for every AI recommendation and action, reversible changes by design, and policies that limit autonomous steps to low-risk tasks unless a person signs off.

In practice, that looks like AI writing a runbook or proposing a rollback, not flipping a production switch without oversight. It also implies targeted deployment: start with read-only analysis, then add tightly scoped writes, and require multi-party approval before anything touches core authentication, networking, or billing paths.

Limited Impact but Lasting Questions for AIOps Adoption

Whether you accept the Financial Times account or Amazon’s explanation, the December episode highlights a pivotal moment for AIOps. The technology is moving from proof-of-concept to everyday operator—and the governance around it has to keep pace. AWS’s added safeguards suggest the company recognizes that reality.

For customers, the takeaway is pragmatic: ask providers and internal teams how AI systems are authorized, what they can change, and how those changes are reviewed and rolled back. As automation accelerates, transparency and rigorous change controls—not just smarter algorithms—will determine how resilient the cloud really is.