Reflection AI has raised $2 billion at an $8 billion valuation — enough to make a year-old startup one of the leading lights in frontier model research and to establish it as a U.S. counterpart to China’s DeepSeek. The company’s pitch is simple and audacious — build state-of-the-art models and agents at open, usable scales, then let any enterprise or government download and deploy them on their own terms.

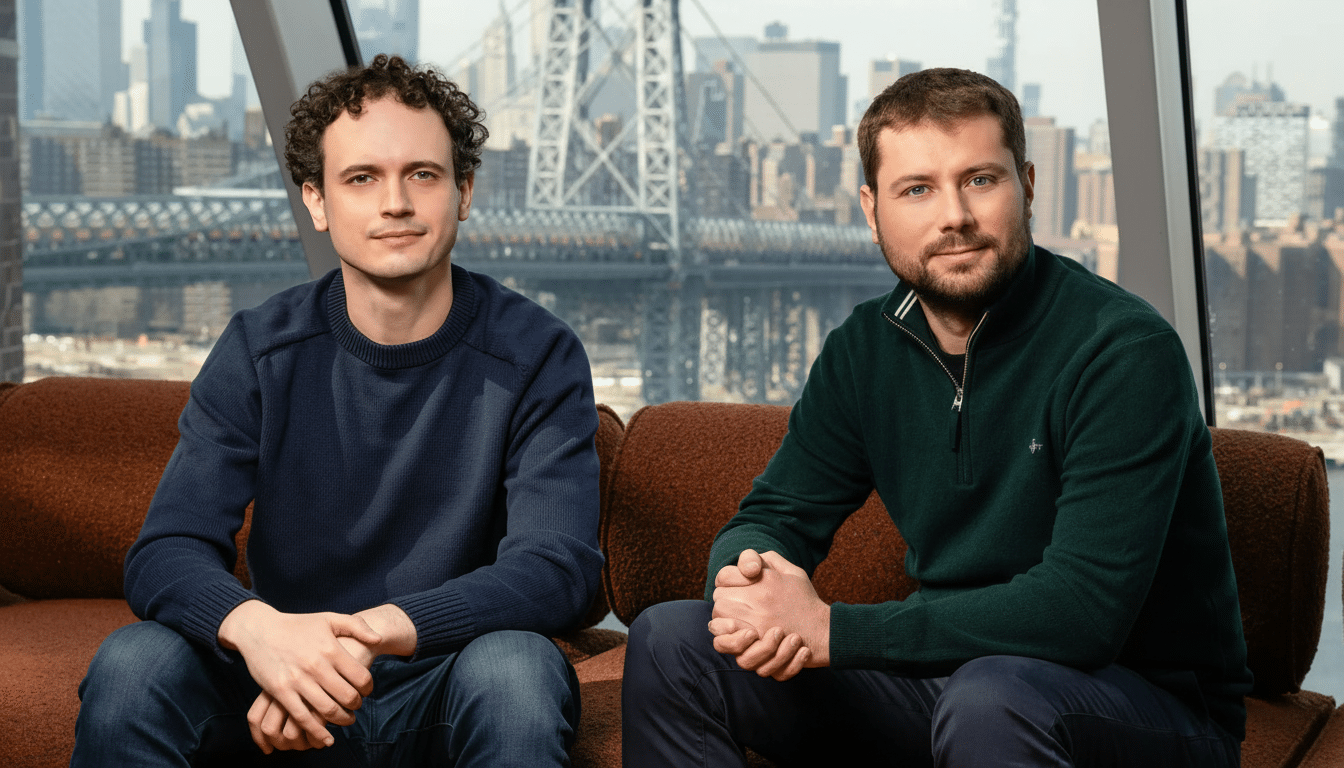

The round marks a more-than-tenfold jump in the company’s valuation from earlier this year, reflecting investors’ conviction that open-weight frontier models are becoming a strategic pillar of the AI economy. Reflection was founded by former Google DeepMind leaders Misha Laskin and Ioannis Antonoglou, whose backgrounds in reinforcement learning research and large-scale training underpin the strategy.

Why This Investment Matters For Open Frontier AI

The center of gravity for cutting-edge AI has tilted toward a few closed labs, which concentrate an elite group of scientists as the industry demands ever more specialized expertise. Reflection is a bet that there’s a lasting market for frontier-grade models released with open weights, allowing users to manage cost, customization and deployment. That strategy mirrors how Meta’s Llama and Mistral seeded large developer ecosystems while keeping their data sets and tooling proprietary.

There’s also a geopolitical subtext. DeepSeek’s swift advancement, along with models from Qwen and Kimi, has been a wake-up call for U.S. researchers concerned that the next “default” intelligence stack could be built offshore. Enterprises and public-sector buyers are increasingly talking about “sovereign AI” (much like sovereign cloud) and regulatory exposure, says Tippett, adding that many favor models they can run privately or for which they can audit source code.

Technical Bet On Mixture-Of-Experts And Agentic Reasoning

Reflection claims to have developed a large-scale LLM and RL stack capable of training very large Mixture-of-Experts models. MoEs route tokens to specialized “experts,” increasing compute efficiency by activating only parts of the network for a given token. In practice, that can deliver frontier-level quality at lower inference cost, a trade-off recently popularized by DeepSeek’s models.
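To make the routing idea concrete, here is a minimal sketch of top-k expert routing for a single token. It is illustrative only and is not Reflection’s or DeepSeek’s implementation: the function names, the toy “experts” and the router scores are all assumptions chosen for the example.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_logits, top_k=2):
    """Pick the top_k experts for one token and renormalize their gate weights."""
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]

def moe_layer(token, router_logits, experts, top_k=2):
    """Run only the selected experts and mix their outputs by gate weight."""
    out = [0.0] * len(token)
    for idx, weight in route_token(router_logits, top_k):
        expert_out = experts[idx](token)
        out = [o + weight * e for o, e in zip(out, expert_out)]
    return out

# Hypothetical toy experts: each one just scales the token by a fixed factor.
experts = [lambda t, s=s: [s * x for x in t] for s in (1.0, 2.0, 3.0, 4.0)]
token = [1.0, -1.0]
router_logits = [0.1, 2.0, 0.3, 1.0]  # made-up router scores for this token

routes = route_token(router_logits, top_k=2)
output = moe_layer(token, router_logits, experts, top_k=2)
print(routes)
print(output)
```

Only two of the four experts run for this token, which is where the compute saving comes from. Production systems add details this sketch omits, such as load balancing across experts and batched dispatch.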

The team’s initial emphasis on autonomous coding agents serves a dual purpose. Code is an unforgiving domain, and iterative RL methods such as reward modeling and outcome-based fine-tuning transfer naturally from program synthesis to a broader class of “agentic” workflows, including planning, tool use and multi-step reasoning. If Reflection can generalize those gains, we may soon get products that chain tasks together, check their own work and call APIs with less human orchestration.

Commercial Spine and the Open Weights Strategy

Reflection’s concept of “open” means releasing model weights for wide use while keeping control of its data sets and the full training pipeline. That approach has become de rigueur among leading open-weight efforts because weights unlock practical adoption (fine-tuning, on‑prem deployment, domain-specific customization) without requiring the lab to give up expensive IP such as its training infrastructure and data curation.

The business model targets two customer groups: researchers, who receive generous access; and enterprises and governments, who pay for support, SLAs, compliance tooling and large-scale deployments. For larger customers, total cost of ownership is as important as raw capability. Open weights enable cost control through fine-grained quantization, flexible placement of instances on cloud or on‑prem GPUs, and adapters that reduce inference spend.
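As a rough illustration of why quantization matters for cost, the sketch below estimates how much memory a model’s weights occupy at different precisions. The 70-billion-parameter figure is a hypothetical example, not a Reflection model size, and the estimate ignores activations, the KV cache and the small overhead of quantization scales.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

# Hypothetical model size, chosen only for illustration.
num_params = 70e9

footprints = {
    name: weight_memory_gb(num_params, bits)
    for name, bits in [("fp16", 16), ("int8", 8), ("int4", 4)]
}

for name, gb in footprints.items():
    print(f"{name}: ~{gb:.0f} GB of weights")
```

Halving the bits per weight halves the weight footprint, which can determine how many GPUs an on‑prem deployment needs, though lower precision can also cost some accuracy.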

Challenging DeepSeek And The New Competitive Map

DeepSeek demonstrated that open, sparse models can narrow the performance gap with closed peers by squeezing more out of training compute. Other Chinese efforts such as Qwen and Kimi have intensified that race with increasingly rapid releases and active community benchmarking. Reflection wants to be the Western lab that keeps up with that pace while also conforming to U.S. regulatory and corporate demands.

Success will depend on three things: training efficiency, scalable safety alignment and credible evaluation. Industry groups like MLCommons and academic centers like Stanford’s CRFM have been advocating for clear, task-related benchmarks that go beyond mere leaderboards. Reflection’s models will be judged on resistance to jailbreaks, reliability of tool use and the trade-offs between latency and cost in real workloads.

Money, Talent and Compute for Frontier AI Ambitions

Leveraging capital from strategic and financial investors such as Nvidia, DST, B Capital, Lightspeed, GIC, Sequoia, CRV and others, the company has attracted talent from DeepMind and OpenAI.

The money will go toward compute reservations, data pipelines and training runs, with the aim of bringing the first model (text-first, with multimodal follow-ons to come later) to market in short order.

Compute remains the limiting resource for any frontier lab. Release cadence will be determined by the availability of high-bandwidth interconnects, GPU memory footprints suitable for sparse experts and scalable RL infrastructure. If Reflection’s MoE stack pays off, it could reach competitive quality with fewer FLOPs per token, an advantage that compounds across both training and inference.
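The “fewer FLOPs per token” argument can be sketched with back-of-the-envelope arithmetic. The example below uses the common rule of thumb that a forward pass costs about 2 FLOPs per active parameter per token, ignoring attention over long contexts. Every number in the configuration is hypothetical and does not describe any Reflection or DeepSeek model.

```python
def total_params(shared, per_expert, num_experts):
    """All parameters stored in the model, including every expert."""
    return shared + per_expert * num_experts

def active_params(shared, per_expert, experts_per_token):
    """Parameters actually used for one token: shared layers plus routed experts."""
    return shared + per_expert * experts_per_token

def forward_flops_per_token(active):
    """Rule-of-thumb estimate: about 2 FLOPs per active parameter per token."""
    return 2 * active

# Hypothetical MoE configuration, for illustration only.
shared = 10e9          # attention, embeddings and other always-on parameters
per_expert = 2e9       # parameters in each expert
num_experts = 64
experts_per_token = 4  # experts the router activates for each token

total = total_params(shared, per_expert, num_experts)
active = active_params(shared, per_expert, experts_per_token)

moe_flops = forward_flops_per_token(active)
dense_flops = forward_flops_per_token(total)  # a dense model of the same total size

print(f"total parameters:  {total / 1e9:.0f}B")
print(f"active parameters: {active / 1e9:.0f}B")
print(f"FLOPs per token vs. same-size dense model: {moe_flops / dense_flops:.2f}x")
```

Under these assumed numbers, the sparse model touches only a small fraction of its parameters per token, which is the source of the efficiency claim. All the experts still have to sit in GPU memory, which is why memory footprint and interconnect bandwidth appear on the list of constraints.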

What to Watch Next as Reflection AI Scales Up

Watch for licensing terms on the pre-trained weights, alignment red-teaming results and early enterprise pilots in code and analytics. Also pay attention to whether Reflection can develop a sustainable contributor ecosystem — Hugging Face model cards, community evaluations and third-party adapters often portend staying power for open-weight releases.

If Reflection succeeds, it will give U.S. developers and policymakers something they have been asking for: a domestic, open-weight frontier lab that competes directly with DeepSeek while offering the flexibility and control that heavy users increasingly demand.