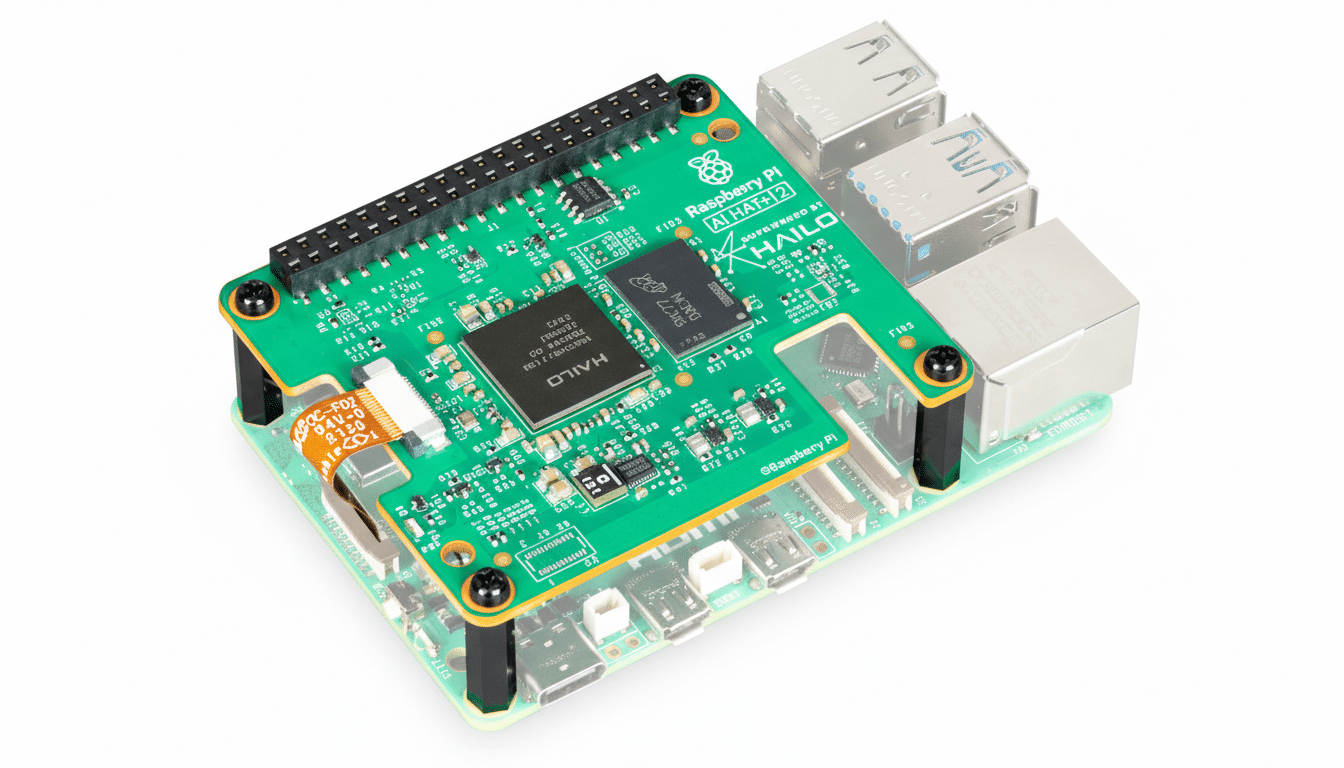

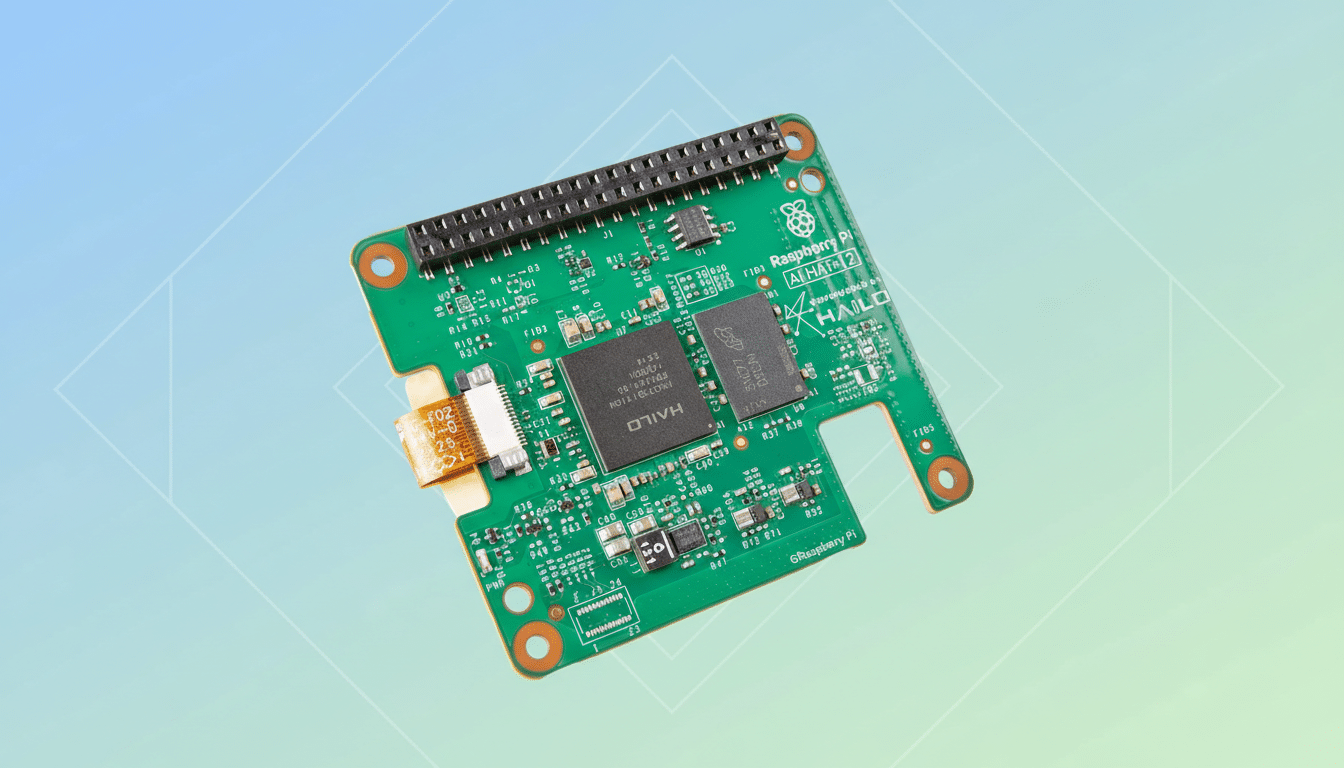

Your Raspberry Pi 5 just picked up a serious new trick. The new AI HAT+ 2 add-on arrives with a 40 TOPS accelerator and 8GB of onboard RAM, pushing local large language models, multimodal inference, and advanced vision tasks onto dedicated silicon instead of the Pi’s CPU and GPU. For makers and deployers who care about privacy, latency, and cost, this is a meaningful step forward for edge AI on the Pi 5.

What Changed With the New AI HAT+ 2 Upgrade

The original AI HAT+ shipped in two trims: 13 TOPS with Hailo-8L and 26 TOPS with Hailo-8. The AI HAT+ 2 moves to the Hailo-10H accelerator, delivering up to 40 TOPS and adding 8GB of local memory. That memory matters as much as the compute uplift: LLMs and VLMs are often constrained by memory capacity, and 7B-parameter models quantized to 4-bit typically need around 4GB of dedicated memory just to run smoothly.
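The arithmetic behind that figure is simple. Multiply the parameter count by the bits per weight and divide by eight to get bytes. The sketch below is a rough estimate that assumes decimal gigabytes and counts weights only. The KV cache, activations, and runtime overhead come on top, which is why roughly 3.5GB of 4-bit weights rounds up to about 4GB in practice.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Memory for model weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    # Each weight occupies bits_per_weight / 8 bytes.
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for bits in (16, 8, 4):
        print(f"7B parameters at {bits}-bit: {weight_memory_gb(7e9, bits):.1f} GB")
```

At 16-bit, the same 7B model would need about 14GB for its weights alone. That is well beyond the board's 8GB, which is why quantization is central to running LLMs on the HAT.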

Vision pipelines such as object detection and pose estimation deliver throughput roughly comparable to the earlier 26 TOPS board. Meanwhile, the new headroom lets heavier generative workloads run locally on the accelerator rather than tying up the Pi 5’s CPU. It’s a shift from “accelerated CV” to “practical on-device generative AI.”

Why 40 TOPS Matters For Raspberry Pi 5 Users

TOPS isn’t the whole story. MLCommons and industry benchmarks routinely note that memory bandwidth, model optimization, and I/O shape real performance. Even so, offloading to a dedicated accelerator transforms the Pi 5 from a controller with occasional AI into a credible edge inference node. Instead of sending data to the cloud, you can answer queries, classify frames, or summarize text locally, with low latency and without the data leaving the device.
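The roofline model is one common way to see why TOPS alone can mislead. Attainable throughput is the lower of two ceilings: peak compute, and memory bandwidth multiplied by arithmetic intensity (operations per byte moved). The sketch below uses a hypothetical 50 GB/s bandwidth purely for illustration. That number is not a published Hailo-10H specification, and the intensity values are likewise assumptions.

```python
def attainable_tops(peak_tops: float, bandwidth_gb_s: float, ops_per_byte: float) -> float:
    """Roofline bound in TOPS: the lower of the compute ceiling and the memory ceiling."""
    # GB/s * ops/byte = giga-ops/s; divide by 1000 to express it in tera-ops/s.
    memory_ceiling_tops = bandwidth_gb_s * ops_per_byte / 1000.0
    return min(peak_tops, memory_ceiling_tops)


def decode_tokens_per_s_ceiling(bandwidth_gb_s: float, weight_gb: float) -> float:
    """Upper bound for batch-1 token generation: every weight is read once per token."""
    return bandwidth_gb_s / weight_gb


if __name__ == "__main__":
    PEAK_TOPS = 40.0        # advertised peak for the AI HAT+ 2
    BANDWIDTH_GB_S = 50.0   # HYPOTHETICAL memory bandwidth, for illustration only

    # Batch-1 LLM decode at 4-bit: ~2 ops (multiply + add) per 0.5-byte weight -> ~4 ops/byte.
    print("LLM decode:", attainable_tops(PEAK_TOPS, BANDWIDTH_GB_S, 4.0), "TOPS")
    # A convolution that reuses each weight across many pixels (assumed 1000 ops/byte).
    print("Vision conv:", attainable_tops(PEAK_TOPS, BANDWIDTH_GB_S, 1000.0), "TOPS")
    # Hypothetical 1.5B-parameter model at 4-bit = 0.75 GB of weights.
    print("Tokens/s ceiling:", round(decode_tokens_per_s_ceiling(BANDWIDTH_GB_S, 0.75), 1))
```

Under these assumptions, single-stream token generation is bandwidth-bound and uses a small fraction of peak TOPS. Weight-reusing vision layers, by contrast, can approach the compute ceiling. Batching several requests raises the ops-per-byte figure for LLMs, which is one reason batch size changes real-world results.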

In practical terms, expect snappier token generation for compact LLMs, better concurrency for multimodal apps, and steadier frame rates for CV tasks under load. The 8GB on-module RAM also reduces reliance on the Pi’s shared system memory, which keeps the desktop and other services responsive.

Compatibility and setup for Raspberry Pi AI HAT+ 2

The AI HAT+ 2 connects over the Raspberry Pi 5’s PCIe interface and works across all Pi 5 RAM configs from 1GB to 16GB. It integrates directly with the libcamera-based camera stack, which means less fiddling to tap into camera feeds for inference. According to Raspberry Pi Senior Principal Engineer Naush Patuck, “transitioning to the AI HAT+ 2 is mostly seamless and transparent,” so existing AI HAT+ projects should carry over with minimal changes.
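Because the HAT sits on PCIe, a quick sanity check after installation is to confirm that the accelerator has enumerated. The sketch below scans Linux sysfs for devices that match a PCI vendor ID. It assumes Hailo's vendor ID is 0x1e60, so compare this against the output of lspci -nn on your own board. The helper find_pci_devices is a hypothetical name, not part of Hailo's or Raspberry Pi's tooling.

```python
from pathlib import Path

# ASSUMPTION: Hailo devices enumerate with PCI vendor ID 0x1e60; verify with `lspci -nn`.
HAILO_VENDOR_ID = "0x1e60"


def find_pci_devices(vendor_id: str = HAILO_VENDOR_ID,
                     sysfs_root: str = "/sys/bus/pci/devices") -> list[str]:
    """Return the PCI addresses whose sysfs 'vendor' file matches vendor_id."""
    root = Path(sysfs_root)
    if not root.is_dir():
        return []
    matches = []
    for dev in sorted(root.iterdir()):
        try:
            vendor = (dev / "vendor").read_text().strip().lower()
        except OSError:
            continue  # unreadable or not a device directory
        if vendor == vendor_id.lower():
            matches.append(dev.name)
    return matches


if __name__ == "__main__":
    found = find_pci_devices()
    print("Hailo device(s) at:", ", ".join(found) if found else "none found")
```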

For software, Hailo’s maintained repositories provide turnkey demos and reference pipelines for generative AI and vision. At launch, you can load models such as Llama 3.2, DeepSeek-R1-Distill, and Qwen2, with more arriving as community support grows. Quantization and model pruning tools are available to help fit applications within the 8GB envelope without sacrificing too much quality.
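To make the quantization idea concrete: symmetric 4-bit quantization stores each weight as a small signed integer, plus one floating-point scale shared by a group of weights. This is a generic illustration in plain Python, with an assumed group size and a [-7, 7] code range. It is not the algorithm used by Hailo's toolchain, which also relies on calibration data and hardware-specific choices.

```python
def quantize_int4_symmetric(weights: list[float], group_size: int = 32) -> tuple[list[int], list[float]]:
    """Map each weight to an integer code in [-7, 7] with one float scale per group."""
    codes: list[int] = []
    scales: list[float] = []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        max_abs = max(abs(w) for w in group)
        # Choose the scale so the largest magnitude in the group maps to code 7.
        scale = max_abs / 7 if max_abs > 0 else 1.0
        scales.append(scale)
        # Round to the nearest code and clamp to the symmetric 4-bit range.
        codes.extend(max(-7, min(7, round(w / scale))) for w in group)
    return codes, scales


def dequantize_int4(codes: list[int], scales: list[float], group_size: int = 32) -> list[float]:
    """Reconstruct approximate weights: each code times its group's scale."""
    return [code * scales[i // group_size] for i, code in enumerate(codes)]


if __name__ == "__main__":
    weights = [0.7, -0.35, 0.1, 0.0, 1.4, -1.4, 0.2, 0.05]
    codes, scales = quantize_int4_symmetric(weights, group_size=4)
    restored = dequantize_int4(codes, scales, group_size=4)
    for w, c, r in zip(weights, codes, restored):
        print(f"{w:+.3f} -> code {c:+d} -> {r:+.3f}")
```

Group size is the main trade-off here. Smaller groups track outliers better but store more scales. With a group size of 32 and a 16-bit scale, storage works out to 4.5 bits per weight.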

What You Can Build Now With AI HAT+ 2 on Pi 5

- A private smart camera that runs object and pose detection at the edge, then summarizes scenes with a compact VLM—no cloud dependency, reduced bandwidth, and improved privacy.

- A voice assistant kiosk that transcribes audio, queries a local LLM, and generates responses in real time. With careful quantization, you can keep latency low and throughput consistent, even with multiple users.

- A robotics brain that fuses camera input with on-device policy models for navigation and manipulation. The accelerator handles perception and language grounding, freeing the Pi 5 to manage control loops and I/O.
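The robotics split described in the last item can be sketched as a "latest value" handoff. A perception thread publishes results as fast as inference returns them, and a fixed-rate control loop reads whatever is newest without ever waiting. The perception function here is a hypothetical stand-in that sleeps instead of calling the accelerator. LatestResult, fake_perception, and control_loop are illustrative names, not part of any Hailo or Raspberry Pi API.

```python
import threading
import time
from typing import Any, Optional


class LatestResult:
    """Thread-safe slot holding only the newest perception result."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._value: Optional[Any] = None
        self._seq = 0  # counts publishes so readers can detect fresh data

    def publish(self, value: Any) -> None:
        with self._lock:
            self._value = value
            self._seq += 1

    def snapshot(self) -> tuple[int, Optional[Any]]:
        with self._lock:
            return self._seq, self._value


def fake_perception(slot: LatestResult, stop: threading.Event) -> None:
    # HYPOTHETICAL stand-in for accelerator inference, producing ~10 results/s.
    frame = 0
    while not stop.is_set():
        time.sleep(0.1)
        frame += 1
        slot.publish({"frame": frame, "obstacle_ahead": frame % 5 == 0})


def control_loop(slot: LatestResult, ticks: int, period_s: float = 0.02) -> int:
    """Fixed-rate loop (~50 Hz by default) that never blocks on inference."""
    stops_issued = 0
    for _ in range(ticks):
        _, result = slot.snapshot()
        if result is not None and result["obstacle_ahead"]:
            stops_issued += 1  # a real robot would command its motors here
        time.sleep(period_s)
    return stops_issued


if __name__ == "__main__":
    slot, stop = LatestResult(), threading.Event()
    worker = threading.Thread(target=fake_perception, args=(slot, stop), daemon=True)
    worker.start()
    print("stop commands:", control_loop(slot, ticks=100))
    stop.set()
    worker.join()
```

Plain threads are a reasonable fit in Python here because the heavy work runs on the accelerator, and a thread blocked waiting on it typically releases the GIL. Overwriting stale results, rather than queuing them, keeps the control loop acting on current data.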

Thermals, power, and caveats for sustained performance

The board comes with a heatsink that is optional to fit, and it’s worth installing. Generative workloads keep the accelerator busy and warm, so sustained performance depends on proper cooling and ambient airflow. A quality power supply is also essential: the official 27W USB-C PSU for Raspberry Pi 5 is recommended when you pair the Pi with power-hungry peripherals and continuous AI workloads.
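During long runs it helps to check whether the Pi itself is throttling or seeing under-voltage. The vcgencmd get_throttled command reports this as a bitmask, and the sketch below decodes it. The command covers the Pi 5's SoC and power input, not the Hailo-10H's own temperature. Treat the result as a check on cooling and the power supply, not on the accelerator.

```python
import subprocess

# Bit meanings for `vcgencmd get_throttled`, per the Raspberry Pi documentation.
THROTTLE_FLAGS = {
    0: "under-voltage detected",
    1: "ARM frequency capped",
    2: "currently throttled",
    3: "soft temperature limit active",
    16: "under-voltage has occurred",
    17: "ARM frequency capping has occurred",
    18: "throttling has occurred",
    19: "soft temperature limit has occurred",
}


def decode_throttled(output: str) -> list[str]:
    """Turn output such as 'throttled=0x50000' into a list of readable flags."""
    value = int(output.strip().split("=", 1)[1], 16)
    return [desc for bit, desc in sorted(THROTTLE_FLAGS.items()) if value & (1 << bit)]


if __name__ == "__main__":
    raw = subprocess.run(["vcgencmd", "get_throttled"],
                         capture_output=True, text=True, check=True).stdout
    flags = decode_throttled(raw)
    print("\n".join(flags) if flags else "no throttling or under-voltage recorded")
```

An "under-voltage has occurred" flag after a long inference session usually points to the power supply. Throttling flags without under-voltage point toward cooling.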

As with any accelerator, your mileage will vary based on model choice and optimization. TOPS ratings are peak theoretical numbers; real-world results hinge on operators, batch size, and I/O limits. That said, the added memory and compute make a tangible difference in the kinds of projects that are now feasible on a $70-class single-board computer.

The bottom line on Raspberry Pi AI HAT+ 2 upgrade

The AI HAT+ 2 meaningfully upgrades Raspberry Pi 5 capabilities with 40 TOPS of dedicated AI compute and 8GB of local RAM, without upending existing software stacks. For developers who value on-device privacy, predictable latency, and low operating cost, it’s a promising path to run LLMs, VLMs, and demanding vision workloads on the edge—no datacenter required.