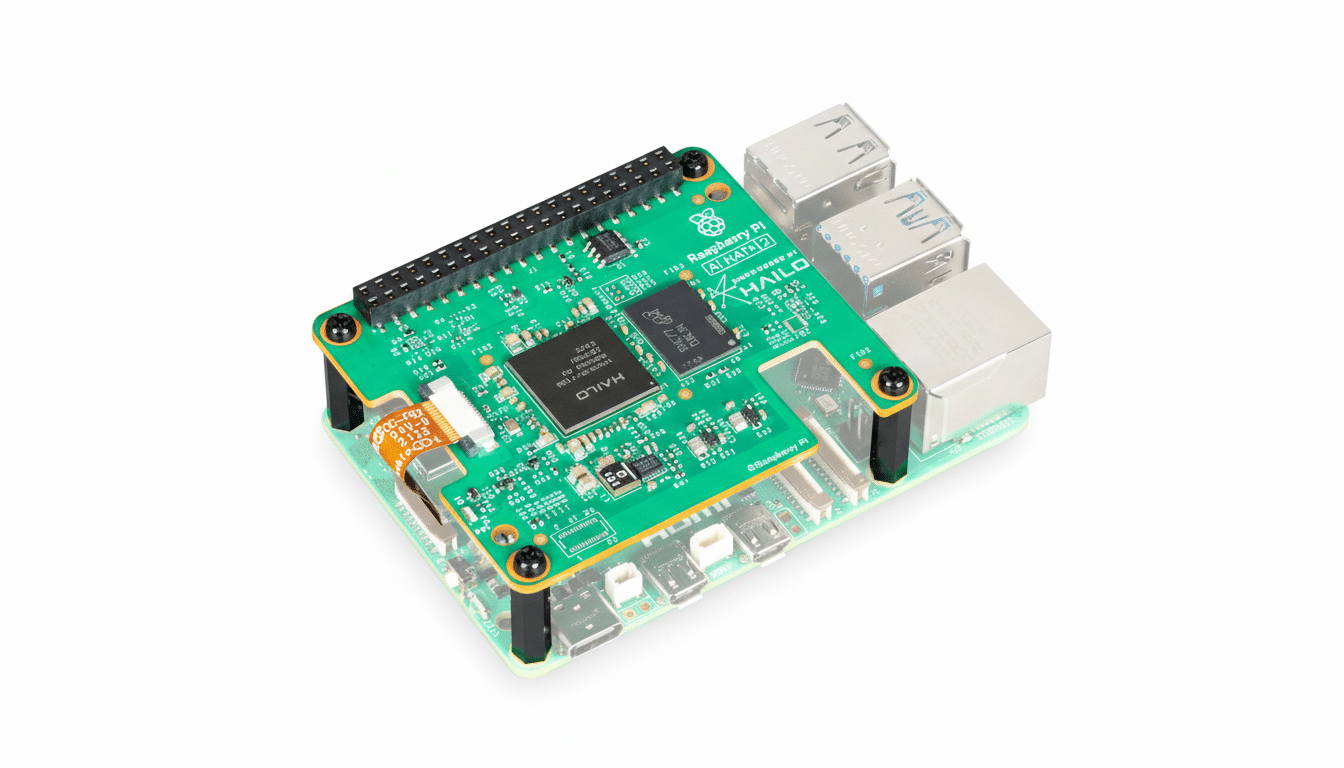

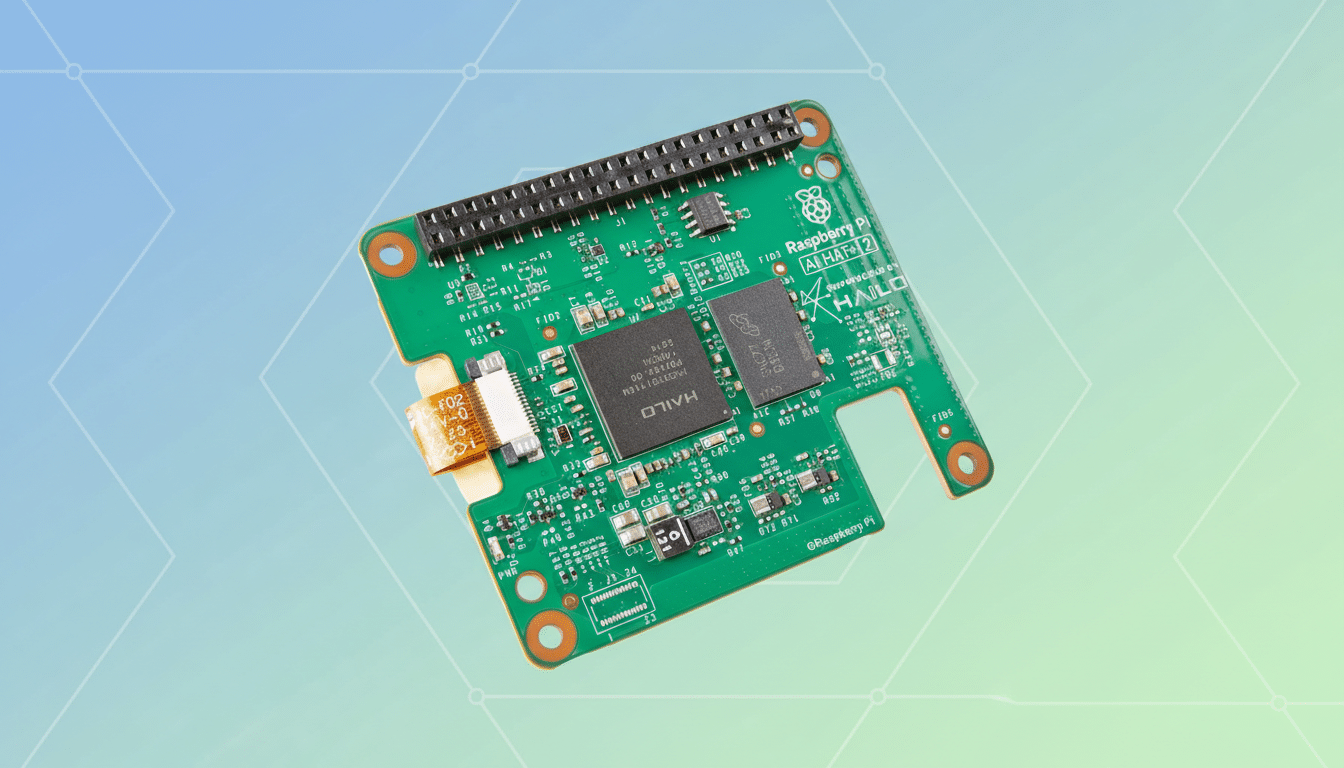

Your Raspberry Pi 5 just picked up a serious new trick. The AI HAT+ 2 adds a dedicated Hailo-10H accelerator rated at up to 40 TOPS, paired with 8GB of onboard memory, unlocking local large language models, vision-language pipelines, and high-speed computer vision without relying on the cloud.

What the New AI HAT+ 2 Adds to Raspberry Pi 5

The original AI HAT+ arrived in two flavors: 13 TOPS using Hailo-8L and 26 TOPS with Hailo-8. The AI HAT+ 2 jumps to 40 TOPS with Hailo’s newer 10H silicon, plus 8GB of RAM on the board for models and intermediate tensors. On headline figures, that’s roughly a 54% compute uplift over the 26 TOPS version and about 208% over the 13 TOPS model (just over three times the rated throughput), expanding what’s feasible at the edge on the Pi 5.
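The uplift percentages follow directly from the quoted TOPS ratings. A minimal sketch of the arithmetic, where `percent_uplift` is a helper name introduced here purely for illustration:

```python
def percent_uplift(new_tops: float, old_tops: float) -> float:
    """Return the percentage increase of new_tops over old_tops."""
    return (new_tops / old_tops - 1.0) * 100.0


# Headline TOPS ratings quoted in the article.
previous_boards = {"AI HAT+ (Hailo-8L)": 13, "AI HAT+ (Hailo-8)": 26}

for name, tops in previous_boards.items():
    print(f"AI HAT+ 2 vs {name}: +{percent_uplift(40, tops):.0f}%")
```

These are ratios of peak ratings only; real-world speedups depend on the model and how it is compiled for the accelerator.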

Crucially, the performance boost is aimed at generative workloads: compact LLMs, VLMs, and diffusion-style tasks now run locally, while classic vision jobs like detection, segmentation, and pose estimation retain strong throughput similar to the prior Hailo-8 class.

Why 40 TOPS Matters on the Raspberry Pi 5 Today

Raspberry Pi 5 brought a modern CPU and a PCIe 2.0 lane but no built-in NPU, so inference has had to run on the CPU or an add-on. Offloading to a 40 TOPS accelerator changes the equation. Developers can keep data on-device, cut inference latency by avoiding round trips to cloud APIs, and deploy applications that remain functional offline, which is a big deal for robotics, smart cameras, kiosks, and privacy-first assistants.

The onboard 8GB of RAM reduces host memory pressure, which matters most on Pi 5 variants with only 1GB or 2GB of system RAM. It also helps sustain token generation or frame processing without aggressive swapping, a common bottleneck when inference has to run out of host memory.
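To get a rough feel for what fits in 8GB of onboard memory, a back-of-the-envelope estimate of weight size is parameters times bits per weight, divided by eight. The sketch below is only that: the parameter counts are illustrative, the 25% reserve for KV cache and activations is an assumption rather than a Hailo figure, and compiled model formats may add overhead this ignores.

```python
def weight_footprint_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def fits_onboard(num_params: float, bits_per_weight: int,
                 onboard_gb: float = 8.0, reserve_fraction: float = 0.25) -> bool:
    """True if the weights fit after reserving a share of memory for
    KV cache and activations (reserve_fraction is an assumed value)."""
    budget_gb = onboard_gb * (1.0 - reserve_fraction)
    return weight_footprint_gb(num_params, bits_per_weight) <= budget_gb


# Illustrative model sizes, not measurements of any specific release.
for params, bits in [(1.5e9, 4), (3e9, 8), (8e9, 16)]:
    size = weight_footprint_gb(params, bits)
    verdict = "fits" if fits_onboard(params, bits) else "does not fit"
    print(f"{params / 1e9:.1f}B params @ {bits}-bit: ~{size:.2f} GB, {verdict}")
```

The point of the estimate is that quantization width matters as much as parameter count: the same model can be comfortable at 4-bit and out of reach at 16-bit.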

Compatibility and Setup for Raspberry Pi 5 Users

The board connects via the Pi 5’s PCI Express interface and works across the entire Pi 5 line (1GB through 16GB). It plugs neatly into the existing camera stack, so CV pipelines can tie directly into libcamera-based apps without wrestling with extra middleware.
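Because the board sits on the PCIe bus, one quick sanity check after installation is to see whether Linux has enumerated it. The sketch below scans sysfs for devices with a given PCI vendor ID. It assumes `0x1e60` is the vendor ID Hailo hardware reports (worth confirming with `lspci -nn` on your own system), and `find_pci_devices` is a hypothetical helper, not part of any Hailo tooling.

```python
from pathlib import Path

# Assumption: Hailo devices report PCI vendor ID 0x1e60; verify with `lspci -nn`.
ASSUMED_HAILO_VENDOR_ID = "0x1e60"


def find_pci_devices(vendor_id: str,
                     sysfs_root: str = "/sys/bus/pci/devices") -> list[str]:
    """Return PCI addresses of devices whose sysfs `vendor` file matches vendor_id."""
    root = Path(sysfs_root)
    if not root.is_dir():
        return []  # not Linux, or sysfs is not mounted
    matches = []
    for dev in sorted(root.iterdir()):
        try:
            vendor = (dev / "vendor").read_text().strip().lower()
        except OSError:
            continue  # entry without a readable vendor file
        if vendor == vendor_id.lower():
            matches.append(dev.name)
    return matches


if __name__ == "__main__":
    found = find_pci_devices(ASSUMED_HAILO_VENDOR_ID)
    print("Matching PCIe devices:", found or "none found")
```

An empty result only means nothing with that vendor ID was enumerated; it does not say whether the cause is seating, firmware settings, or a different ID on newer silicon.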

For makers with projects built on the first AI HAT+, Raspberry Pi engineering says migration should feel largely transparent: software changes are minimal, and the handoff of workloads to the new accelerator follows the established toolchain.

Software and Model Support at Launch and Beyond

Hailo’s developer resources on GitHub provide starter projects, model compilers, and reference pipelines tuned for the 10-series silicon. At launch, there’s support for popular compact models, including Llama 3.2 variants, DeepSeek-R1-Distill, and Qwen2, with more arriving over time as the model zoo expands.

Expect a mix of quantized LLM chat, vision-language captioning and grounding, and efficient real-time detection to run side by side, something that was harder to juggle on CPU alone. The combination of the Pi 5’s improved I/O and the accelerator’s throughput means robotics stacks can pair perception with on-device reasoning without handing context to external services.

Thermals, Power, and Reliability Considerations and Tips

The AI HAT+ 2 ships with an optional heatsink, and it’s worth installing if you plan to stress the accelerator. Sustained generative workloads can push temperatures high; adequate case ventilation and a quality power supply make a noticeable difference. The official Raspberry Pi 27W USB-C supply gives the headroom needed for the Pi 5 plus accelerator and peripherals.
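For keeping an eye on heat during long runs, one simple option is to poll the Linux thermal zone that the Pi exposes in sysfs. Note the limits of this sketch: it reads the host SoC temperature, not the accelerator's own sensor, and the 80°C warning threshold is an assumed default chosen for illustration rather than a figure from Raspberry Pi or Hailo.

```python
from pathlib import Path
from typing import Optional


def read_soc_temp_c(path: str = "/sys/class/thermal/thermal_zone0/temp") -> Optional[float]:
    """Return the SoC temperature in degrees Celsius, or None if unavailable.

    The kernel reports this value in millidegrees Celsius.
    """
    try:
        return int(Path(path).read_text().strip()) / 1000.0
    except (OSError, ValueError):
        return None


def is_running_hot(temp_c: Optional[float], warn_at_c: float = 80.0) -> bool:
    """True if a reading exists and meets the (assumed) warning threshold."""
    return temp_c is not None and temp_c >= warn_at_c


if __name__ == "__main__":
    temp = read_soc_temp_c()
    if temp is None:
        print("No thermal zone reading available")
    else:
        status = "hot, check cooling" if is_running_hot(temp) else "ok"
        print(f"SoC temperature: {temp:.1f} C ({status})")
```

Run in a loop alongside a sustained workload, this gives a rough indication of whether case airflow is adequate.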

What It Means for Edge AI on Raspberry Pi 5 Projects

For hobbyists and professionals alike, this is a capability leap. A 40 TOPS edge module with 8GB RAM on a $60–$80-class computer rewrites the price-performance math: smart cameras that summarize scenes, private voice or vision assistants, QA inspection on a conveyor, even educational kits that teach generative AI without exposing student data.

The earlier AI HAT+ brought acceleration to the Pi 5; the AI HAT+ 2 broadens the workload envelope into generative territory while keeping classic vision fast. With support from Raspberry Pi and Hailo’s tooling, and a generally drop-in upgrade path, this looks like a timely and promising step for anyone building serious edge AI on the Pi.