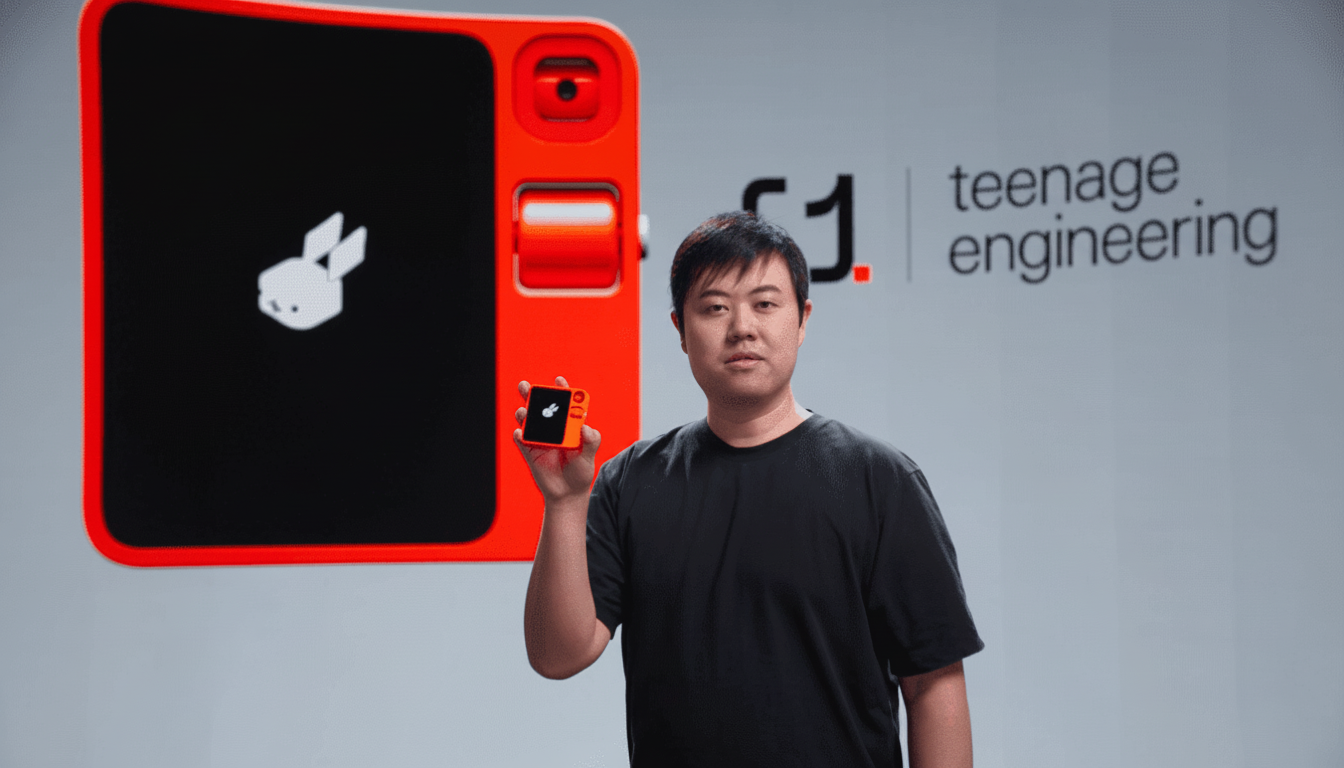

Rabbit’s next move in dedicated AI hardware is starting to take shape. CEO Jesse Lyu has teased a “three-in-one” device concept and suggested the company is testing multiple prototypes, signaling a more ambitious follow-up to the voice-first R1. Lyu also hinted that major entrants could crowd the category soon, saying that “OpenAI is releasing hardware, and others will follow.”

Although he didn’t specify what the three would be, the natural extrapolation is a single product that combines several modes of interaction and utility. The likeliest version is a compact device with voice control, a visual interface, and a camera or sensor array (think assistant, display, and smart camera in one) that can handle not only hands-free commands but also richer tasks like translation, shopping, or visual search. Another possibility is a modular package that works as a standalone gadget, a wearable clip, and a desktop dock, letting users switch contexts throughout the day with minimal friction.

Crucially, Lyu acknowledged the elephant in the room: almost nobody needs two devices that both resemble a smartphone. Carrying two devices works only when they play clearly different roles, with one in your pocket and the other in your ear. A three-in-one will need an unusually strong case to earn its place.

Rabbit tests prototypes for a more ambitious successor

Rabbit’s R1 was one of the more visible experiments in screen-light AI access. For $199, it let you call up your usual services by voice through a simple OS layer, backed by a small touchscreen and a camera. In practice, according to accounts from a half-dozen early adopters online, the experience was inconsistent and slower than simply pulling out the phone already in your pocket.

The R1’s pitch of less complexity and more immediacy was undercut by a simple reality: whenever the assistant stumbled, you reverted to your smartphone anyway. It wasn’t alone. The broader slate of “AI companions,” including wearable assistants from Fossil and others, hammered home how hard it is to beat a mature smartphone platform on latency, app access, and battery life.

The lesson: a device cannot succeed merely as a sequel to the phone. A would-be successor must be just as reliable, hold a clear advantage in specific tasks such as hands-free capture, persistent context, and automation, and be genuinely better at them. A three-in-one approach could help here by bundling the best inputs for each job instead of routing every action through voice.

Smartphone advances raise the bar for AI devices

Lyu joked that smartphones will be effectively “agentic” in about 18 months, a nod to assistants that can interpret goals, carry out multi-step actions, and adapt without constant input. Given the industry’s pace, that timeline looks plausible. Google has started weaving multimodal Gemini more deeply into Android, Apple has been rolling out system-wide AI features that work across apps, and chipmakers are pushing neural compute to tens of TOPS (trillions of operations per second) for on-device reasoning. As phones gain more persistent memory, contextual awareness, and app-control APIs, the bar for a standalone AI device rises each year.

What Rabbit must deliver to compete with incumbents

This reality defines the brief for Rabbit. Rather than finishing second to a rapidly improving smartphone at everything, the next device will have to win at specific, high-frequency jobs: live transcription and summarization, visual assistance, lightning-fast task automation across accounts, or secure hands-free actions while the phone stays in a pocket or bag.

Privacy will also be essential. The less the device relies on the cloud, the more likely it is to attract consumers who worry about always-on microphones streaming private data to remote servers.

Lyu said: “To the best of my knowledge, OpenAI is about to release their own hardware.” The Financial Times and The Information have published similar reports. If OpenAI launches a purpose-built hardware product, it could reset expectations around latency, multimodal capabilities, and ecosystem lock-in.

And then, of course, there are the incumbents. Meta is building AI capabilities into smart glasses, a form factor that can feel more natural than an entirely new kind of device. Smartphone makers are racing to build AI into the core of their operating systems. All of this makes Rabbit’s roadmap increasingly urgent.

For the three-in-one concept to gain traction on Rabbit’s timeline, it needs a strong, measurable case for shipping: beating the phone in several scenarios, performing consistently in noisy places, and a battery that lasts a full day. Pricing will decide adoption. The R1 sold for under $200, and customers will accept a higher price only if performance rises with it, not just for a different shape.

Watch input versatility, autonomy, and ecosystem reach

- Input versatility: A genuinely multimodal interface should hand off fluidly between voice, touch, and vision.

- Agentic autonomy: Assistants must be demonstrably useful, completing multi-step tasks across services without constant confirmation.

- Ecosystem reach: Partnerships with commerce, travel, productivity, and other platforms will make or break real-world usefulness.

Rabbit earned attention with the R1’s ambition. The teased three-in-one suggests the company has learned that form factor is strategy, not packaging. If the next device reduces device overload, nails the basics, and leverages agentic AI where phones still falter, it can carve out a lane before bigger players lock down the category.