In Palo Alto, that role is being filled by a select group of builders and investors who are meeting to sketch out the next decade in practical terms. It is an unusually concrete agenda: less preaching, more plumbing. The event is put on by Playground Global, whose general partner and former Intel chief Pat Gelsinger is steering the conversation toward the hard problems that actually move the frontier.

The draw is not hype. It’s that the people on stage are already constructing the infrastructure of our very near future — new chipmaking tools, novel human-computer interfaces and bioelectronics that can restore lost senses and might even unlock new ones. Here’s what that future looks like from the front row.

Breaking the Chipmaking Bottleneck in the AI Era

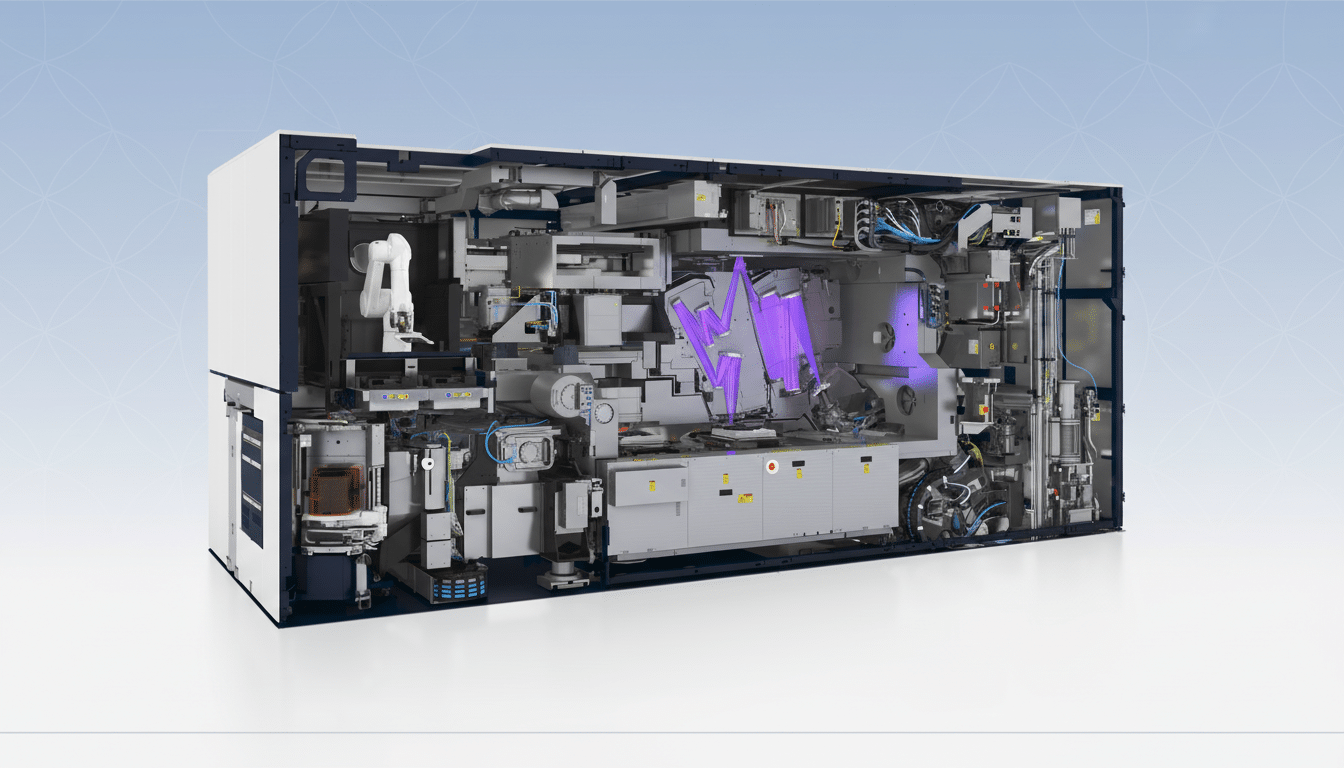

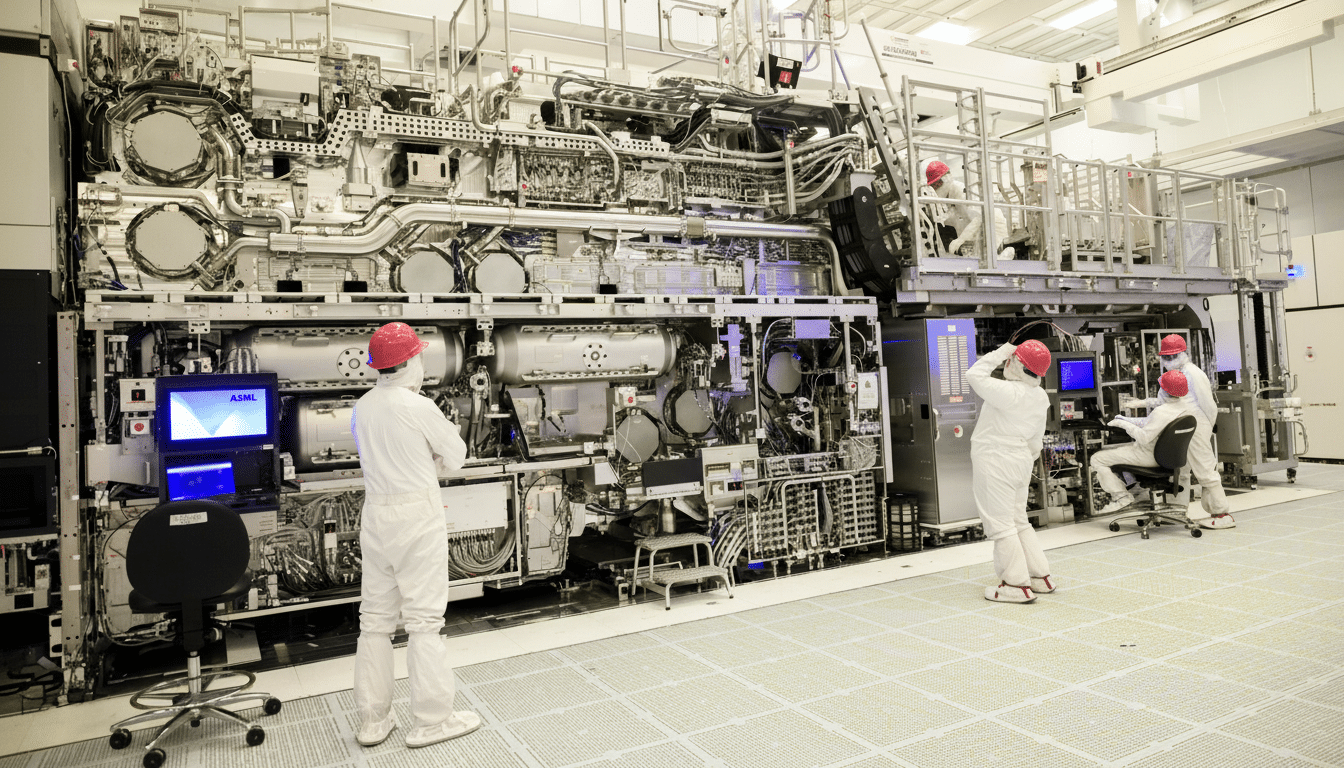

The most advanced chips made today depend on extreme ultraviolet (EUV) lithography machines that can cost about $400 million each and are available from just one manufacturer, the Dutch company ASML. That concentration has become a strategic choke point as AI demand soars and geopolitical tensions tighten export controls. The U.S. share of global semiconductor manufacturing capacity has slipped to about 12%, according to the Semiconductor Industry Association, underscoring the supply risk.

Enter Nicholas Kelez, a particle accelerator physicist who spent two decades working for the Department of Energy. He is developing, in the United States, a next-generation approach to patterning silicon with accelerator-based light sources, an effort to reduce the industry's dependence on a single toolmaker. If it works, it could significantly cut lead times, improve yields and, alongside CHIPS Act incentives, bring parts of the value chain back onshore.

The stakes are clear: IDC forecasts that global AI spending will top $300 billion within a few years, and each new generation of models demands more compute. Even marginal changes in lithography supply, whether from existing machines or new ones, carry geopolitical weight and shape capacity, pricing and who gets to innovate next.

Whisper Tech and Cognitive Wearables Reshape Interfaces

On the consumer side, Mina Fahmi is demonstrating a ring that can transcribe whispered thoughts, or subvocalized speech, as text. He and co-founder Kirak Hong also worked on neural and interface projects at Meta after their startup was acquired, giving them unusual experience in both hardware and machine learning applied to human input.

With longtime operator and investor Toni Schneider of True Ventures backing them, their company, Sandbar, is betting that wearable interfaces can be more than a shinier reflection of today's phone.

The bet rests on established habits: Pew Research has found that roughly 1 in 5 U.S. adults uses a wearable device, and data.ai tracks multiple hours of daily mobile use worldwide. Low-latency, high-accuracy whisper-based interfaces could compress tasks into seconds and open up quiet, private modes of computing for work and accessibility.

The open questions onstage: word error rate in noisy settings, privacy guarantees for always-on microphones and whether on-device models can avoid cloud round trips without sacrificing accuracy. Expect real-world demos to count for more than slides.

Biohybrid Brain Interfaces Advance Toward Clinic

Max Hodak, a Neuralink co-founder and its former president who now leads Science Corp, believes the most important user interface could be biological. Science is investigating “biohybrid” brain-computer interfaces, implants seeded with stem cells and designed to integrate with the brain's own neural tissue, to restore function for people living with paralysis and, eventually, to open new channels of communication between people and machines.

The field is shifting from demos to durability. Over the past decade, the NIH BRAIN Initiative has driven advances in neural recording density and materials, the FDA has granted Breakthrough Device designations to several BCI systems, and peer-reviewed studies have shown patients using implanted arrays to move cursors, type or control robotic limbs. Some clinical programs have already restored partial vision to blind patients with retinal implants, offering a credible near-term path to impact while longer-term biointegration matures.

What matters next: time to first clinical endpoints, safety across multi-year implants and manufacturability at a viable cost. Look for details on trial design, sample size and methodology, the things that separate moonshots from milestones.

The Consumer Shift Everyone Is Missing in AI

Amid the investment rush into enterprise AI, investors Chi-Hua Chien of Goodwater Capital and Elizabeth Weil of Scribble Ventures are making a contrarian case for consumer. Their resumes include early bets on Twitter, Spotify, TikTok, SpaceX, Slack, Figma and Coinbase, portfolios built on spotting inflection points before the metrics look tidy.

The thesis: AI-native consumer apps will not look like thin AI wrappers. They will build generative models into creation, identity and social graphs, with unit economics improved by on-device inference and smarter acquisition loops. Goodwater's own analysis has documented rapid shifts in consumer behavior when friction falls away, and the next wave of interfaces (voice-first, camera-first and context-aware) could lower friction once more. Look for frameworks on retention, trust and monetization that go beyond monthly active users.

Why Palo Alto’s Culture Helps Shape the Future

The region's edge is not just capital density; it is cross-pollination. Hardware founders sit beside policy thinkers, AI researchers and operators who have helped scale products to hundreds of millions of users. At an earlier gathering here, OpenAI CEO Sam Altman famously said the organization would build AGI and then ask it how to make money. What felt like a joke at the time sounds like an operating thesis now.

That mix of audacity and pragmatism is also what animates this event. Chip tools, neural wearables and bioelectronics are not slides; they are manufacturing and clinical challenges with clear endpoints. The discussion aims to translate frontier work into timelines that executives and policymakers can act on.

Onstage: What to Watch Across Chips, Wearables and BCIs

- For semiconductors: concrete specs on the light source, wafer throughput and a path to resolution parity with EUV; how U.S. suppliers might plug into the toolchain; and whether CHIPS incentives can help de-risk an initial pilot.

- For cognitive wearables: latency compared with smartphone voice input, the size of on-device model footprints and privacy-by-design choices that regulators and consumers can trust.

- For BCIs: enrollment targets, clinical endpoints for vision or limb restoration, long-term biocompatibility and the reimbursement story that will determine access in the real world.

If you want a working definition of what’s next, it is being written in Palo Alto by the people building it, not just predicting it.