A showy comparison intended to prove that OxygenOS 16 is faster than Samsung’s One UI is under scrutiny, with eagle-eyed fans noting that the “slower” phone doesn’t appear to have been operated properly in the demo.

Far from being a victory for OnePlus, the clip is an uncomfortable reminder of how these speed tests are often run and how little they can actually prove.

The Demo That Raised Suspicions

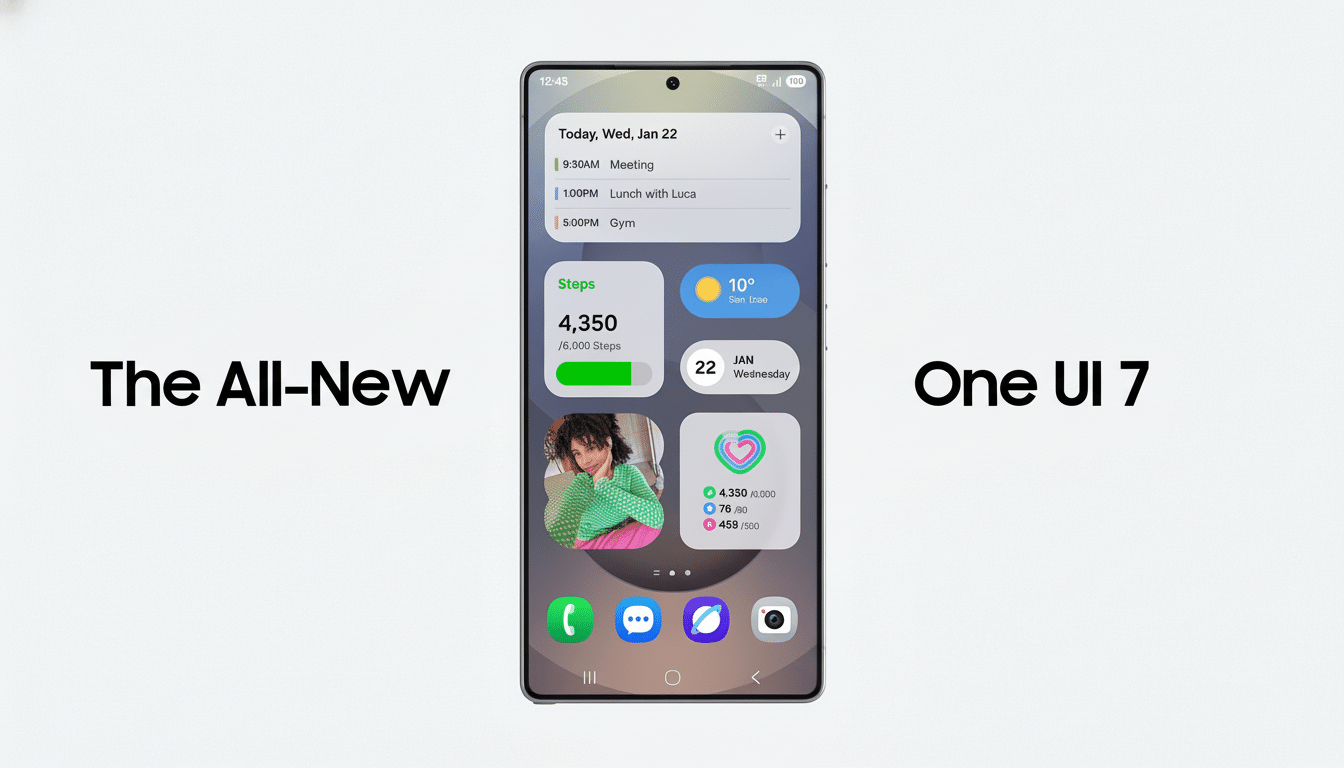

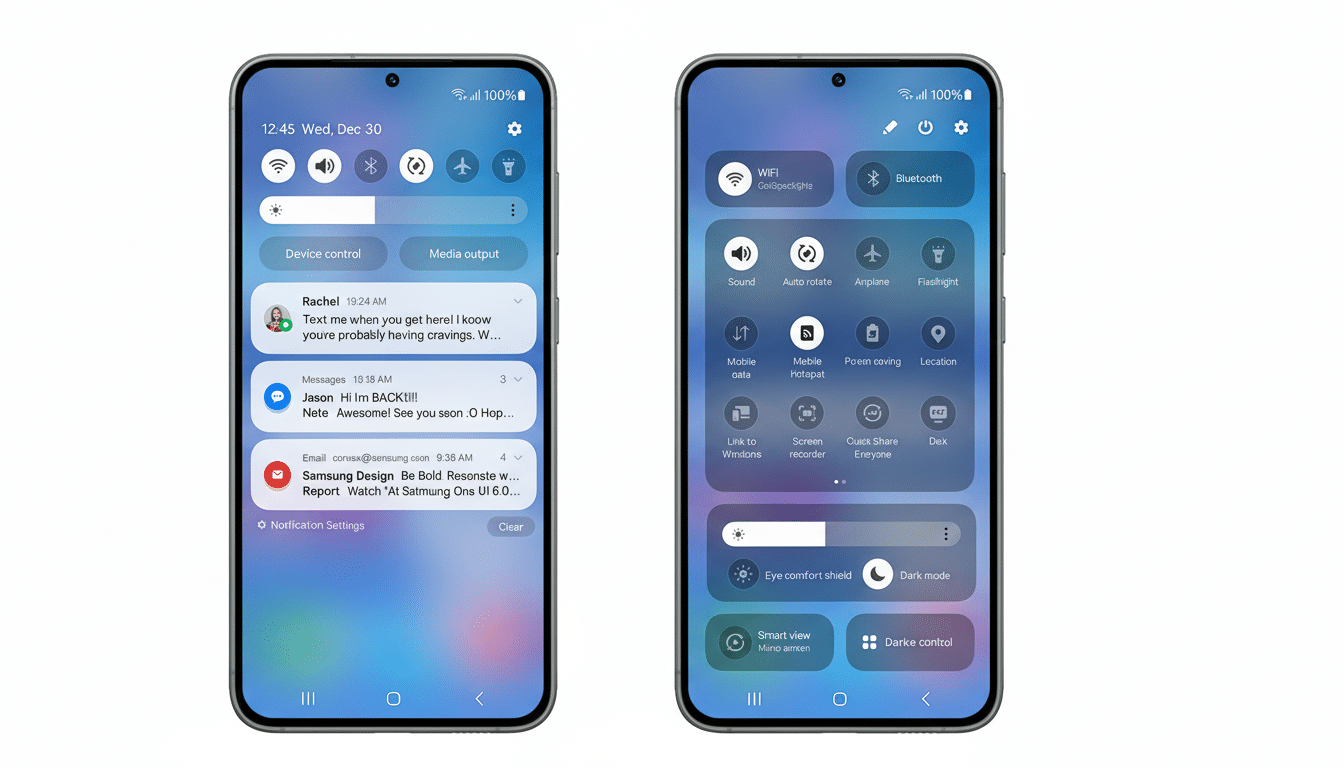

OnePlus gave us a sneak peek at OxygenOS 16 with a segment showcasing “Parallel Processing” enhancements meant to make opening and switching apps feel instant. The video split the screen between a OnePlus handset and an “Other Flagship UI” device (presumably a Samsung phone running One UI), and the host jumped in and out of several apps and one mobile game on both devices. The contrast was immediately apparent: the OnePlus flew while the Samsung lagged, if only for a second or two.

But the apparent stumble wasn’t a rendering or frame-rate issue. It appeared to stem from how the Samsung phone was being operated. The presenter opened a full-screen game and repeatedly tapped where the Home button sits in three-button navigation, even though the game had hidden that bar. Without the swipe needed to bring the system navigation back, those taps would land inside the game rather than reaching the OS.

The sequence was flagged by a Threads user, uyagoda_, and later picked apart by independent Android analysts, including Mishaal Rahman, who pointed to the telltale cues: a full-screen app, no visible nav bar, and taps that never reached Home.

The on-screen disclaimer warning viewers not to try this at home didn’t do the optics any favors.

What Likely Went Wrong During the One UI Demo

On modern Android, full-screen apps can hide the system navigation whether the phone uses gesture navigation (introduced in Android 10) or the traditional three-button bar. To get Home, the user must first swipe in from the screen edge to reveal the hidden bars, then either tap Home (three-button navigation) or complete the Home gesture (gesture navigation). Without that first swipe, tapping the bottom of the screen does nothing at the system level, and if the game handles touches in that area, it simply treats them as in-game input.

That nuance is not a nitpick; it turns what was billed as a head-to-head “speed” moment into a case of operator error. If one device is driven properly and the other isn’t, the clip says nothing useful about scheduler tuning, animation timelines, or parallelism, because it is measuring the difference between a real gesture and a mis-tap.
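To make the routing problem concrete, here is a deliberately simplified Python model of a full-screen game with hidden system bars. The class name, screen dimensions, and return strings are hypothetical, and this is not how Android’s input pipeline is actually implemented; it only captures the rule described above, that a tap on the Home position counts as a system action only after the bars have been revealed.

```python
from dataclasses import dataclass


@dataclass
class ImmersiveScreen:
    """Toy model of a full-screen game with the system bars hidden.

    Hypothetical dimensions; this is not Android's real input dispatch logic.
    """
    height_px: int = 2400      # assumed screen height
    nav_bar_px: int = 130      # assumed height of the three-button nav bar
    bars_visible: bool = False # immersive mode starts with the bars hidden

    def in_nav_area(self, y: int) -> bool:
        # True if the touch falls in the strip where the nav bar would sit.
        return y >= self.height_px - self.nav_bar_px

    def swipe_from_bottom_edge(self) -> str:
        # The edge swipe temporarily reveals the hidden system bars.
        self.bars_visible = True
        return "system: bars revealed"

    def tap(self, y: int) -> str:
        if self.bars_visible and self.in_nav_area(y):
            self.bars_visible = False  # going Home leaves the game's bars hidden again
            return "system: Home"
        # Bars hidden: the game owns the whole screen, including the strip
        # where the Home button would normally sit.
        return "game: in-game touch"
```

Tapping the Home position three times in a row, as in the demo, produces three in-game touches; calling `swipe_from_bottom_edge()` first makes the very same tap return `"system: Home"`.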

Why Speed Demos Can Be So Hard to Trust and Verify

Even with perfect inputs, side-by-side videos are easy to skew, whether by design or by accident. Many variables can tilt the results:

- Chipset variant (Snapdragon or Exynos)

- Thermals and ambient temperature

- Display refresh rate and animation scales

- Health and speed of storage

- Background services and app load

- Network conditions during game or app loading

Without controls for these variables, the “fastest” phone might simply be the one in a cooler room or with fewer background tasks.

Google’s Android developer guidance distinguishes between cold, warm, and hot starts. A hot start can be nearly instant because the activity is already in memory, while a cold start requires creating the app process and loading code and resources from storage, and games add shader compilation and asset loading on top of that. Other settings skew the picture too: a 120 Hz display makes the same animation look slightly smoother than it does at 60 Hz, and aggressive RAM policies can “win” quick-switch tests by evicting background apps you might actually want to keep.
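The three launch categories can be summarized as a small decision function. This is a simplified Python sketch: the boolean inputs are hypothetical stand-ins for state a test harness would have to observe, and it ignores the case Google’s guidance also counts as warm, where a killed process is recreated from saved state. The takeaway for benchmarking is that two phones should only be compared when both launches fall into the same category.

```python
from enum import Enum


class StartType(Enum):
    COLD = "cold"  # process must be created; code and resources load from storage
    WARM = "warm"  # process is alive, but the activity has to be recreated
    HOT = "hot"    # activity is still in memory and is simply brought forward


def classify_start(process_running: bool, activity_in_memory: bool) -> StartType:
    """Simplified mapping of app state to Android's launch categories."""
    if activity_in_memory and not process_running:
        raise ValueError("an activity cannot be in memory without its process")
    if not process_running:
        return StartType.COLD
    if not activity_in_memory:
        return StartType.WARM
    return StartType.HOT


def comparable(a: StartType, b: StartType) -> bool:
    """Launch times from two phones are only comparable within one category."""
    return a is b
```

If one phone’s memory management has already evicted the app, that phone pays for a cold start while the other gets a hot one, and the comparison ends up measuring RAM policy as much as raw speed.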

Both companies have software tricks. OnePlus leans on system-level tuning, with recent devices touting its “Trinity Engine” and parallel-processing claims, while Samsung ships App Booster, which optimizes apps after installation, and RAM Plus, which sets aside storage as virtual memory to add effective RAM. These choices shape how fast a phone feels, but their benefits are best proven with repeatable methods rather than ad hoc showpieces.

What Real, Trustworthy Speed Tests Would Look Like

Credible comparisons require a test plan: the same hardware or at least the same chip family, matched refresh rates, the same navigation mode, identical animation scales, and the same app versions on each phone. Inputs should be automated with Android’s UI Automator or ADB scripts so that timing and touch placement don’t depend on a human operator.
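A minimal Python sketch of that setup step follows, assuming ADB is installed and each phone is addressed by its serial number. The settings keys are the standard global animation-scale settings behind Developer options; the device-profile field names and the serial values are hypothetical placeholders. By default it only prints the commands (a dry run) so the plan can be reviewed before anything on a phone changes.

```python
import shlex
import subprocess

# Hypothetical device-profile fields from the test plan; not an Android API.
CONTROLLED = ("chip_family", "refresh_rate_hz", "nav_mode",
              "animation_scale", "app_version")

# Global settings behind the three animation-scale options in Developer options.
ANIMATION_KEYS = ("window_animation_scale", "transition_animation_scale",
                  "animator_duration_scale")


def mismatches(a: dict, b: dict) -> list[str]:
    """List the controlled variables that differ between two device profiles."""
    return [key for key in CONTROLLED if a.get(key) != b.get(key)]


def setup_commands(serial: str, scale: float = 1.0) -> list[list[str]]:
    """Build ADB commands that pin animation scales and return to Home."""
    adb = ["adb", "-s", serial, "shell"]
    cmds = [adb + ["settings", "put", "global", key, str(scale)]
            for key in ANIMATION_KEYS]
    # A scripted Home key event is handled by the system rather than passed to
    # the full-screen app, so it cannot be swallowed the way the demo's taps were.
    cmds.append(adb + ["input", "keyevent", "KEYCODE_HOME"])
    return cmds


def run(cmds: list[list[str]], dry_run: bool = True) -> None:
    """Print the commands by default; execute them only when dry_run=False."""
    for cmd in cmds:
        if dry_run:
            print(shlex.join(cmd))
        else:
            subprocess.run(cmd, check=True)
```

For example, `mismatches(profile_a, profile_b)` returning `["nav_mode"]` flags a difference to fix before any timing starts, and `run(setup_commands("SERIAL1"))` previews the commands for one phone without executing them.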

For app launches, ADB’s “am start -W” command reports launch timings, and Perfetto (or the older Systrace) captures frame timelines. High-speed 240 fps video can then confirm visual smoothness. Broader suites such as UL’s PCMark Work 3.0 and web workloads like Speedometer round out the picture, but for app switching, purpose-written scripts that cycle through a fixed set of apps are more informative. Publish the method, repeat every run several times, and report medians alongside a measure of variance. That’s how you build trust.
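As a sketch of the reporting step, the Python below times repeated launches with “am start -W” and summarizes them with the median, interquartile range, and standard deviation. It assumes the Android 10+ output format, which includes a “TotalTime:” line in milliseconds; the serial, package, and activity names are hypothetical, and “am force-stop” runs before each launch so every sample is a cold start.

```python
import re
import statistics
import subprocess

# Matches the "TotalTime: <ms>" line printed by `am start -W`.
TOTAL_TIME = re.compile(r"^TotalTime:\s*(\d+)\s*$", re.MULTILINE)


def parse_total_time(output: str) -> int:
    """Extract the launch time in milliseconds from `am start -W` output."""
    match = TOTAL_TIME.search(output)
    if match is None:
        raise ValueError("no TotalTime line in am start -W output")
    return int(match.group(1))


def cold_launch_ms(serial: str, package: str, component: str) -> int:
    """Force-stop the app, launch it, and return TotalTime (needs a device)."""
    adb = ["adb", "-s", serial, "shell"]
    subprocess.run(adb + ["am", "force-stop", package], check=True)
    result = subprocess.run(adb + ["am", "start", "-W", "-n", component],
                            check=True, capture_output=True, text=True)
    return parse_total_time(result.stdout)


def summarize(times_ms: list[int]) -> dict:
    """Median plus two spread measures; needs at least two runs."""
    q1, _, q3 = statistics.quantiles(times_ms, n=4)
    return {
        "runs": len(times_ms),
        "median_ms": statistics.median(times_ms),
        "iqr_ms": q3 - q1,
        "stdev_ms": statistics.stdev(times_ms),
    }
```

Collecting, say, ten samples per phone with `cold_launch_ms("SERIAL1", "com.example.app", "com.example.app/.MainActivity")` and comparing the two `summarize()` results shows both typical speed and run-to-run noise; a gap smaller than the spread deserves skepticism.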

What We Want to See Next From the OxygenOS 16 Release

That’s not to say OxygenOS 16 isn’t fast. The build on display looked very snappy, and OnePlus clearly cares about touch latency and animation responsiveness. But a side-by-side clip that hinges on a misapplied navigation gesture is not convincing evidence that it is “strikingly ahead” of One UI.

For buyers, the smart play is to wait for independent testing on retail firmware. If OnePlus’s parallel-processing gains are as impressive in everyday use as they were in the pitch, real-world testing will show it. And if Samsung’s One UI is a real contender, controlled tests will reveal that as well. Either way, transparent methodology, not a dramatic clip, should settle this debate.