OpenAI is pressing ahead with a major infrastructure expansion that brings five new U.S. data center sites into its Stargate program through partnerships with Oracle and SoftBank. The expansion raises Stargate’s planned capacity to roughly 7 gigawatts, far more electricity than tens of thousands of homes would draw, and it underscores the scale of compute the company says it needs for next-generation AI training and inference.

Oracle will help build three of the sites: in Shackelford County, Texas; Doña Ana County, N.M.; and an unannounced location in the Midwest. SoftBank is supporting two more campuses, in Lordstown, Ohio, and Milam County, Texas. Together, the projects reflect a multi-partner approach aimed at diversifying risk, shortening timelines, and leveraging regional strengths in power, land, and fiber connectivity.

Why these locations, and why these infrastructure partners

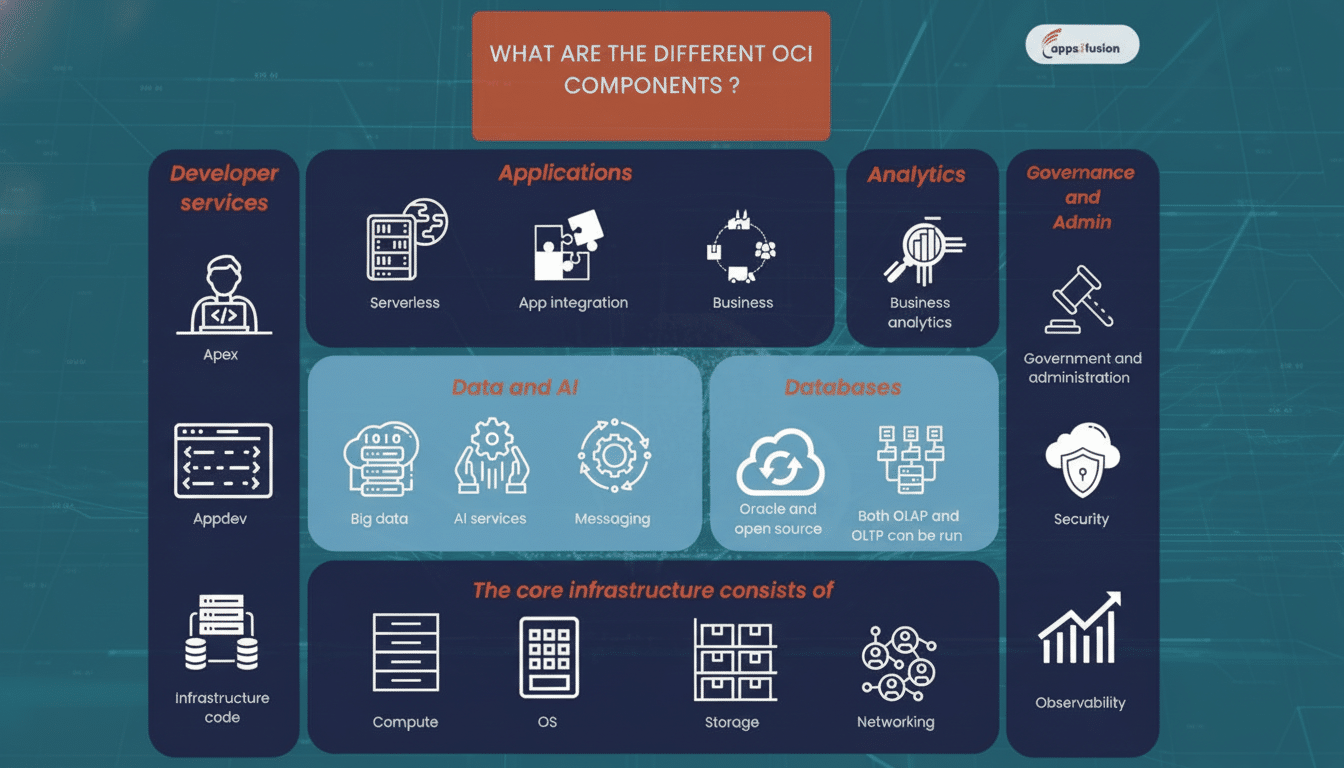

Oracle brings an established hyperscale footprint in Oracle Cloud Infrastructure, along with an existing collaboration with OpenAI to host and optimize large model workloads. SoftBank, meanwhile, has talked about building upstream and downstream AI infrastructure around its chip investments, so these locations can be read as part of a broader platform bet. Using two separate backers also spreads procurement, construction, and long-term operations risk.

Texas and New Mexico offer abundant land, favorable siting jurisdictions, and access to high-capacity transmission. ERCOT’s increasingly mature wind and solar fleet, along with rapidly growing battery storage, makes sites in West and Central Texas attractive for power-hungry campuses. New Mexico’s proximity to major long-haul fiber routes and its interties to neighboring grids are another draw. The Midwest and Ohio offer heavy-industry corridors with existing substations, rail and road access, an available workforce, and communities looking to repurpose legacy manufacturing infrastructure for digital-era jobs.

Power, chips and the economics of scale

Seven gigawatts is an enormous amount of power by data center standards, about equal to the output of a handful of large power plants. Today’s state-of-the-art AI models require tens of megawatts for weeks or months to train, and deploying them worldwide creates a constant, always-on load for inference. The International Energy Agency has predicted that overall data center power use could almost double from 2022 to the end of 2026. Much of that increase is expected to come from AI and crypto, with the latter projected as the largest growth driver in established global markets.
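To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. It converts a planned capacity into annual energy, expresses that as a household equivalent, and works out the yearly growth rate implied by a doubling. Several inputs are assumptions rather than figures from the announcement: a 100 percent load factor (real campuses run below nameplate), an average U.S. household use of about 10,500 kWh per year, and a four-year window for the 2022 to 2026 doubling. The function names are illustrative only.

```python
HOURS_PER_YEAR = 8760  # non-leap year


def annual_energy_twh(capacity_gw: float, load_factor: float = 1.0) -> float:
    """Annual energy in TWh for a given capacity in GW.

    load_factor is an assumption: 1.0 means the full capacity is drawn
    around the clock, which overstates real-world consumption.
    """
    gwh = capacity_gw * load_factor * HOURS_PER_YEAR
    return gwh / 1000.0  # 1 TWh = 1,000 GWh


def household_equivalents(energy_twh: float,
                          kwh_per_home_per_year: float = 10_500) -> float:
    """Number of homes using the same energy in a year.

    The per-home figure is an assumed round number for an average
    U.S. household, not a value taken from the article.
    """
    return energy_twh * 1e9 / kwh_per_home_per_year  # 1 TWh = 1e9 kWh


def implied_annual_growth(multiple: float, years: float) -> float:
    """Compound annual growth rate that yields `multiple` after `years`."""
    return multiple ** (1.0 / years) - 1.0


if __name__ == "__main__":
    energy = annual_energy_twh(7.0)
    homes = household_equivalents(energy)
    growth = implied_annual_growth(2.0, 4)
    print(f"7 GW at full load: {energy:.2f} TWh per year")
    print(f"Household equivalent: {homes / 1e6:.2f} million homes")
    print(f"Doubling in 4 years implies {growth:.1%} growth per year")
```

Under these assumptions, 7 GW running flat out works out to about 61 TWh a year, or several million homes rather than tens of thousands, and a doubling over four years implies growth of roughly 19 percent a year. Lowering the load factor scales the first two results down proportionally.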

At this scale, substation build-outs, power purchase agreements, and on-site backup generation are as strategic as GPU procurement. Chip supply remains tight: high-bandwidth memory, advanced packaging capacity, and next-generation accelerator supply have been gating factors for many operators. Analysts also point out that operators are designing for liquid cooling and higher rack densities to maximize performance per square foot, which shapes power distribution and floorplate choices from day one.

Grid bottlenecks and the sustainability squeeze ahead

Interconnection queues are a major barrier. Research from Lawrence Berkeley National Laboratory counts more than 2,000 gigawatts of generation and storage waiting for a grid connection across the United States, which translates to multi-year timelines for new capacity. The North American Electric Reliability Corporation has also cautioned that fast-growing data center clusters can stress local reliability if load growth outpaces transmission upgrades.

That context helps explain the site mix: areas offering stronger transmission capacity, access to renewables, and cooperative utilities can shave months off schedules. Look for long-term contracts for wind, solar, and storage, paired with efficiency measures such as warm-water liquid cooling, heat reuse wherever possible, and increasingly sophisticated demand management. Policymakers and communities are also pushing for transparency around water use, emissions accounting, and local hiring, all of which have become core considerations in enterprise AI procurement.

What the Stargate build means for AI development

The five new sites are a clear indication that OpenAI is planning for multiple generations of model growth as well as lower-latency inference. Spreading capacity across several regions ought to cut down on congestion, increase availability, and protect against supply shocks in semiconductors, transformers, or grid access. It also reflects a larger trend: the biggest AI developers are no longer just customers of the cloud; they are, in effect, co-designers of the underlying infrastructure.

Campuses of this size are usually built in two- to three-year phases with incremental power and network additions. The community impact of the new sites will become more apparent as local permitting, workforce needs, and vendor selection move forward. At the same time, competitors from hyperscale cloud providers to specialized AI clouds are ramping up their own builds, setting the stage for a capacity race where siting strategy, supply chain execution, and energy economics may prove as important as model architecture.

If delivered on its proposed timeline, Stargate’s 7 GW of planned capacity would make it one of the most ambitious AI data center projects in the world to date. The lesson for businesses and developers is simple: the infrastructure to train and serve larger, more powerful models is being put in place today. Its characteristics, including what it is built from, where it draws power, and whether that power comes from renewables or fossil fuels, will shape what AI can do, and for whom, for some time.