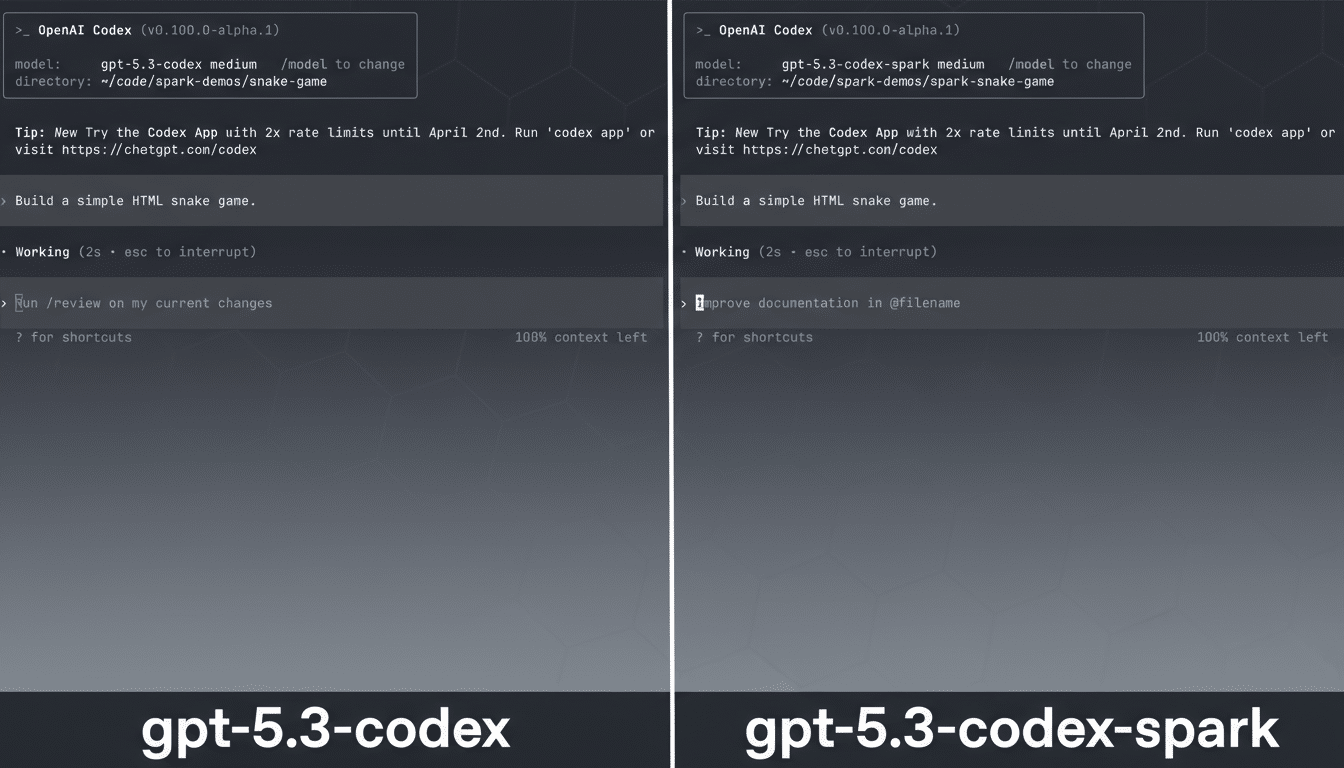

OpenAI’s latest research preview, GPT 5.3 Codex Spark, is built for real-time coding—and it’s fast. The company says Spark generates code up to 15x faster than the flagship GPT 5.3 Codex, delivering snappier edits, live refactors, and immediate feedback inside the Codex environment. The catch is that speed comes with measurable trade-offs in reasoning depth and security capability.

What Spark changes for coders in daily workflows

Spark is designed for tight, conversational loops rather than long agentic runs. Instead of issuing a request and waiting minutes for an agent to reason, plan, and execute, developers can nudge Spark through rapid micro-iterations—adjust a function signature, rewrite a loop, rename variables, then immediately see proposed edits. It favors lightweight, targeted changes over big-bang rewrites, and it won’t auto-run tests unless asked.

OpenAI attributes the responsiveness to end-to-end latency cuts. Reported improvements include an 80% reduction in client–server round-trip overhead, a 30% reduction in per-token overhead, and a 50% reduction in time-to-first-token, credited to optimizations in session initialization and response streaming. A persistent WebSocket connection keeps the session open between requests, so iterative edits avoid the cost of renegotiating a connection each time.
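To see how those percentages might compound during an edit loop, here is a back-of-the-envelope model. The baseline figures (round trips per edit, milliseconds of overhead, token counts) are hypothetical placeholders and are not OpenAI measurements. The model also assumes that the three reported reductions apply independently and add up within a single request.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class LatencyProfile:
    """Per-request latency components in milliseconds (hypothetical values)."""
    round_trip_overhead_ms: float  # client-server overhead per round trip
    round_trips: int               # round trips needed for one edit
    per_token_overhead_ms: float   # overhead added to each streamed token
    tokens: int                    # tokens in the response
    time_to_first_token_ms: float  # wait before the first token arrives

    def total_ms(self) -> float:
        # Simple additive model: connection overhead + first-token wait + streaming overhead.
        return (
            self.round_trip_overhead_ms * self.round_trips
            + self.time_to_first_token_ms
            + self.per_token_overhead_ms * self.tokens
        )


def apply_reported_cuts(p: LatencyProfile) -> LatencyProfile:
    # Percentages as reported: 80% round-trip overhead, 30% per-token overhead,
    # 50% time-to-first-token.
    return replace(
        p,
        round_trip_overhead_ms=p.round_trip_overhead_ms * (1 - 0.80),
        per_token_overhead_ms=p.per_token_overhead_ms * (1 - 0.30),
        time_to_first_token_ms=p.time_to_first_token_ms * (1 - 0.50),
    )


# Hypothetical baseline for a small edit; not measured data.
baseline = LatencyProfile(
    round_trip_overhead_ms=100.0,
    round_trips=3,
    per_token_overhead_ms=2.0,
    tokens=200,
    time_to_first_token_ms=400.0,
)
improved = apply_reported_cuts(baseline)
print(f"baseline: {baseline.total_ms():.0f} ms, improved: {improved.total_ms():.0f} ms")
# prints: baseline: 1100 ms, improved: 540 ms
```

With these made-up inputs, the fixed costs (round trips plus first-token wait) drop from 700 ms to 260 ms. Streaming cost only falls from 400 ms to 280 ms. That is consistent with short, chatty edits feeling the speedup most.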

For everyday tasks—scaffolding components, producing boilerplate, patching small bugs—this can feel less like batch processing and more like pair programming. Think “fix this null check” or “split this function and add type hints,” answered in seconds instead of minutes.

The speed trade-off: faster output with less reasoning

Performance gains don’t come free. On SWE-Bench Pro and Terminal-Bench 2.0—benchmarks that stress agentic software engineering—OpenAI reports Spark underperforms GPT 5.3 Codex, even though it completes tasks much faster. In plain terms, it can move quickly, but it is more likely to miss steps or misinterpret complex intent when compared with the full model.

Security is another explicit limitation. OpenAI’s Preparedness Framework labels GPT 5.3 Codex as its first “high capability” model for cybersecurity. Spark does not meet that threshold and is not expected to. If you’re touching sensitive code paths, auth flows, or cryptography, treat Spark as a drafting assistant, not a final authority.
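One way to enforce that advice mechanically is to flag any Spark-drafted change that touches sensitive areas before it can merge. The sketch below matches changed file paths against glob patterns. The pattern list and the function name `needs_strict_review` are hypothetical, and a real repository would need its own patterns.

```python
from fnmatch import fnmatchcase

# Hypothetical patterns for code paths that warrant a stronger model or human review.
SENSITIVE_PATTERNS = (
    "*auth*",
    "*crypto*",
    "*secrets*",
    "*/security/*",
    "*.pem",
)


def needs_strict_review(changed_paths: list[str]) -> list[str]:
    """Return the changed paths that match any sensitive pattern (case-insensitive)."""
    flagged = []
    for path in changed_paths:
        lowered = path.lower()  # lowercase once, then match case-sensitively
        if any(fnmatchcase(lowered, pattern) for pattern in SENSITIVE_PATTERNS):
            flagged.append(path)
    return flagged


changes = ["src/ui/button.tsx", "src/auth/session.py", "lib/crypto/hmac.go", "README.md"]
print(needs_strict_review(changes))
# prints: ['src/auth/session.py', 'lib/crypto/hmac.go']
```

Substring globs such as `*auth*` also catch names like `author.py`, so expect some false positives. Over-flagging is the safer failure mode for a gate like this.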

The practical takeaway: use Spark to accelerate the small stuff and to explore options quickly, then promote important changes to a slower, more meticulous model—or to human review with tests and static analysis—before merging. Think of it as a turbocharged editor, not an autonomous senior engineer.

Who gets access and how it runs during preview

During the preview, access is limited to Pro users at $200 per month, with dedicated rate limits and the possibility of queuing under heavy demand as OpenAI balances reliability. If past rollouts are a guide, broader availability could follow, but for now, Spark is a premium feature aimed at power users who value time-to-answer over deep deliberation.

Under the hood, Spark is the first public milestone in OpenAI’s partnership with Cerebras. The model runs on the Cerebras Wafer-Scale Engine 3, a single wafer-sized processor that keeps compute tightly coupled for high-throughput inference. Conventional chips are diced from a wafer into many separate dies. Cerebras instead keeps the whole wafer as one enormous die, which reduces interconnect bottlenecks and helps explain the responsiveness developers are seeing.

When to use Spark and when not to in real projects

Good fits for Spark include quick refactors, codebase navigation questions, snippet generation, writing scaffolds for tests, and UI logic tweaks. It also shines when you need to interrupt and redirect mid-task—“stop, revert that change, and add memoization instead”—without resetting the entire session.

Poor fits include multi-file architectural changes, complex migrations, intricate performance tuning, and anything that demands careful threat modeling or compliance context. For those jobs, graduate to GPT 5.3 Codex or another high-reasoning model, and pair the output with robust guardrails: unit and integration tests, SAST/DAST scans, dependency checks, and code review. Speed is an accelerant—use it where blast radius is low.
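The good-fit and poor-fit lists above amount to a routing decision based on blast radius. Below is a minimal sketch that assumes a team sets its own thresholds. The `ChangeRequest` fields, the threshold values, and the tier names are all hypothetical and are not part of any OpenAI API.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    FAST = "fast model (e.g. Spark) for drafting"
    DEEP = "high-reasoning model plus full review"


@dataclass
class ChangeRequest:
    files_touched: int
    lines_changed: int
    touches_sensitive_code: bool  # auth, crypto, compliance-relevant paths
    is_migration: bool = False


# Hypothetical thresholds; tune to your codebase.
MAX_FAST_FILES = 2
MAX_FAST_LINES = 80


def route(change: ChangeRequest) -> Tier:
    """Send low-blast-radius edits to the fast tier and everything else to the deep tier."""
    if change.touches_sensitive_code or change.is_migration:
        return Tier.DEEP
    if change.files_touched > MAX_FAST_FILES or change.lines_changed > MAX_FAST_LINES:
        return Tier.DEEP
    return Tier.FAST


print(route(ChangeRequest(files_touched=1, lines_changed=12, touches_sensitive_code=False)))
print(route(ChangeRequest(files_touched=7, lines_changed=400, touches_sensitive_code=False)))
```

The sensitivity and migration checks run before the size checks, so a one-line change to an auth module still goes to the deep tier.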

A pragmatic workflow to try: draft rapidly with Spark, validate with a slower model, and then run CI gates before approval. You get early momentum without sacrificing quality on the merge path.
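The draft, validate, and gate loop can be expressed as an ordered list of checks, where any failure stops the change. In this sketch, the stage functions are hypothetical stand-ins. In practice, `second_model_review` would call a slower model, and `ci_gates` would wrap your test suite and scanners.

```python
from typing import Callable

# A stage inspects a proposed patch and returns (passed, message).
Stage = Callable[[str], tuple[bool, str]]


def second_model_review(patch: str) -> tuple[bool, str]:
    # Hypothetical placeholder: a real version would ask a slower, high-reasoning model.
    if "TODO" in patch:
        return False, "reviewer flagged unfinished TODO"
    return True, "review ok"


def ci_gates(patch: str) -> tuple[bool, str]:
    # Hypothetical placeholder for unit/integration tests, SAST, and dependency checks.
    if "eval(" in patch:
        return False, "static analysis flagged eval()"
    return True, "ci ok"


def promote(patch: str, stages: list[Stage]) -> tuple[bool, list[str]]:
    """Run stages in order; stop at the first failure so nothing unvalidated merges."""
    log = []
    for stage in stages:
        passed, message = stage(patch)
        log.append(f"{stage.__name__}: {message}")
        if not passed:
            return False, log
    return True, log


draft = "def add(a, b):\n    return a + b\n"  # imagine this came from a fast drafting model
ok, log = promote(draft, [second_model_review, ci_gates])
print(ok, log)
# prints: True ['second_model_review: review ok', 'ci_gates: ci ok']
```

Short-circuiting keeps the expensive gates from running on drafts that a reviewer has already rejected, and the log records where each change stopped.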

OpenAI’s vision for dual modes in future Codex tools

OpenAI frames Spark as step one toward Codex models with two complementary modes: longer-horizon reasoning and execution, plus real-time collaboration for rapid iteration. The roadmap envisions Codex keeping you in a tight loop while delegating background jobs to sub-agents or fanning tasks out in parallel when breadth matters, so developers won’t have to choose a single mode upfront.

If that vision lands, future tools could feel like working with a responsive pair partner who also manages a quiet team of specialists offscreen. For now, the choice is simpler: pick fast or pick smart. Spark makes the fast option legitimately compelling—so long as you keep your safety rails up.

Sources referenced include OpenAI’s Codex announcements and Preparedness Framework, benchmark suites such as SWE-Bench Pro and Terminal-Bench 2.0, and technical details shared by Cerebras on its Wafer-Scale Engine architecture.