OpenAI has inked a multi-year agreement with Cerebras worth a reported $10 billion, securing 750 megawatts of AI compute capacity through 2028. The tie-up is aimed squarely at real-time inference, promising faster responses across OpenAI’s products by tapping Cerebras’ wafer-scale systems instead of relying solely on GPU-based clusters.

Why Cerebras’ Wafer-Scale Systems Matter Right Now

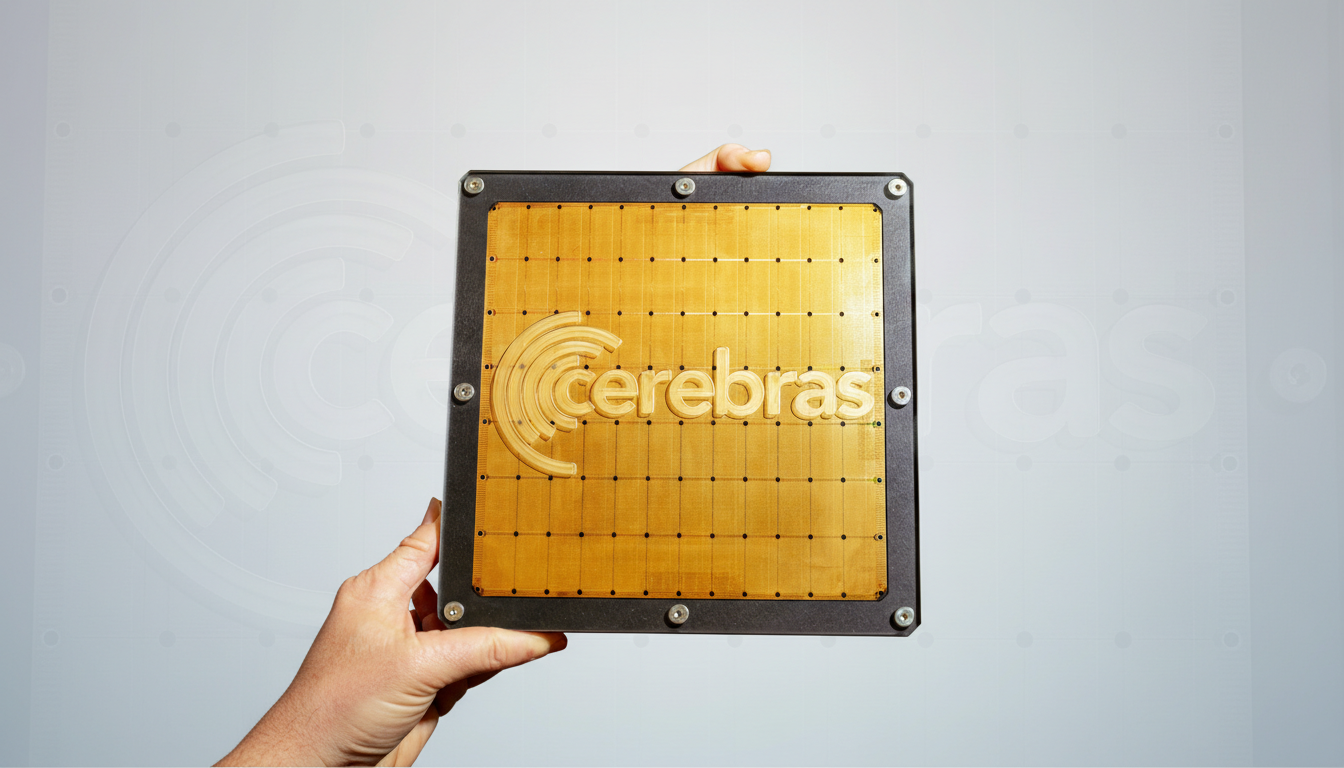

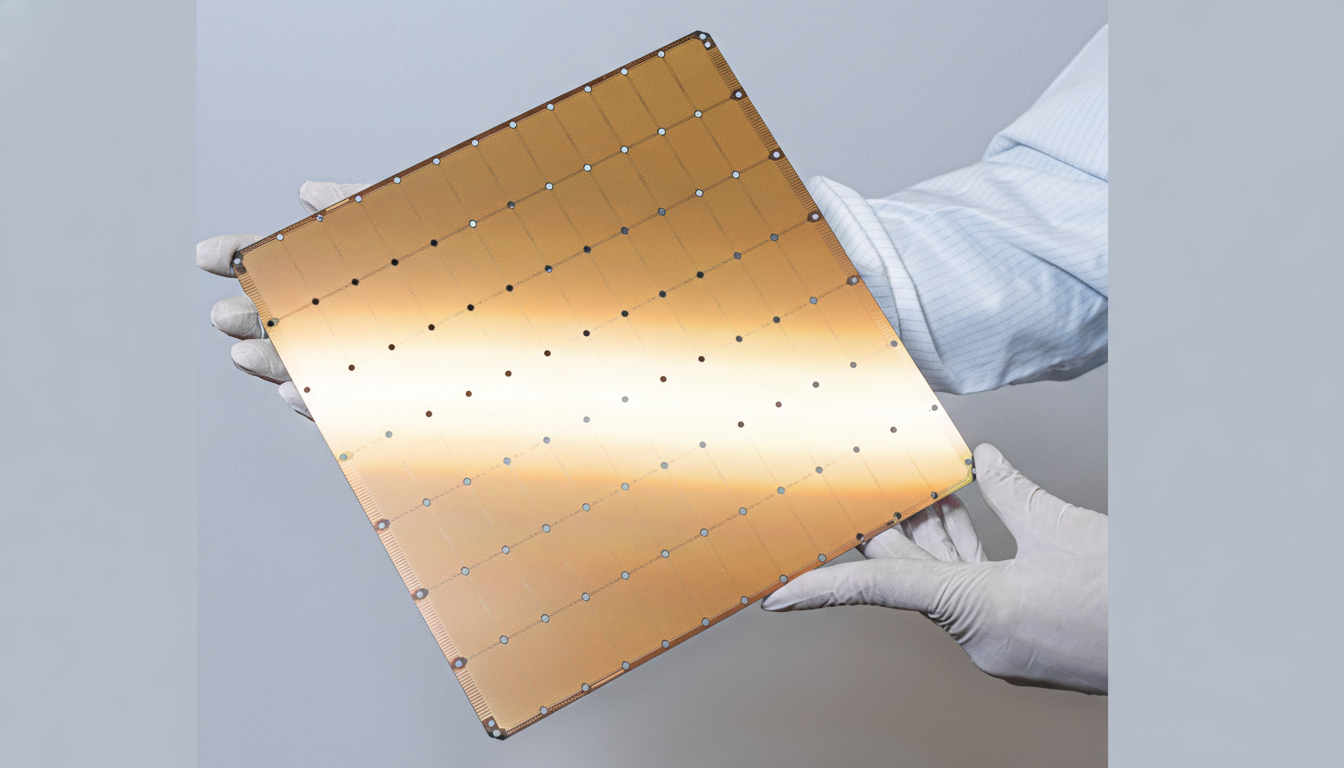

Cerebras built its reputation on the Wafer-Scale Engine, a single silicon chip the size of a full wafer with massive on-chip memory and bandwidth. The architecture minimizes communication hops and can reduce latency for inference workloads that struggle with GPU interconnect bottlenecks. In plain terms, it’s designed to keep more of a model’s computation local and fast, rather than constantly shuttling data across a network of accelerators.

OpenAI framed the deal as part of a diversified compute strategy that matches workloads to the right silicon and systems. Company leaders have emphasized that low-latency inference is now a core constraint for customer experiences, from voice agents to interactive coding tools. Cerebras, for its part, has argued since the debut of ChatGPT that the next leap in AI usability will come from cutting response times from seconds to near-instant.

The move builds on Cerebras’ recent momentum, including its Condor Galaxy network of AI supercomputers developed with partners, which showcased large-scale training and inference outside the typical GPU playbook. OpenAI’s selection validates wafer-scale computing as a credible pillar in the hyperscale AI stack.

What 750 MW of AI Data Center Power Really Means

Securing 750 megawatts is a statement about power as much as processors. Modern AI data center campuses often range from roughly 100 MW to 300 MW, so this allocation suggests multiple large sites or phases dedicated to OpenAI workloads. It also signals the growing reality that electricity, cooling, and grid access are becoming strategic inputs for AI growth, not just hardware availability.

Analysts at the Uptime Institute and the International Energy Agency have highlighted rising energy demand from AI data centers. While exact consumption will depend on utilization and efficiency, a commitment of this magnitude contemplates long-term power procurement, upgraded transmission, and careful attention to PUE, all of which can influence the total cost per query.

The GPU Squeeze And Competitive Landscape

Nvidia still dominates AI accelerators with an estimated market share above 80% according to firms such as Omdia and TrendForce, but capacity remains tight and expensive. AMD’s MI300 line is ramping, cloud providers are pushing custom silicon, and specialized inference players like Groq have entered the fray. In that context, OpenAI’s multi-vendor posture is both a hedge and a performance play.

OpenAI has said the goal is a resilient compute portfolio that places low-latency inference on dedicated systems, while training and other jobs continue on GPUs or custom accelerators. If Cerebras can consistently deliver sub-second responses at scale, it could shave infrastructure costs and open the door to richer, more conversational applications that depend on streaming responses.

Money, Governance, and Timing for the OpenAI-Cerebras Deal

Cerebras has been preparing for the public markets, having filed for an IPO and subsequently delayed those plans while continuing to raise capital. Recent reports indicated the company was in talks for additional funding at a valuation around $22 billion. The OpenAI agreement strengthens Cerebras’ revenue visibility, a useful signal to investors evaluating non-GPU alternatives.

It’s also notable that OpenAI CEO Sam Altman has previously invested in Cerebras, and past reports have suggested OpenAI once weighed an acquisition. That history puts a spotlight on governance and procurement rigor, but the sheer operational demands of delivering 750 MW over several years suggest commercial performance will be the ultimate arbiter.

What Changes For Developers And Customers

Developers care about latency, throughput, and cost. A low-latency inference tier could reduce tail latencies that frustrate real-time voice assistants, customer support agents, and live translation. It can also make multimodal interactions feel natural, sustaining conversational context without awkward pauses or token throttling.

Economically, inference becomes the dominant cost as usage scales. If Cerebras’ systems deliver higher tokens-per-second per dollar and stable queue times, OpenAI could pass on faster response tiers and new SLAs to enterprise customers. That, in turn, could broaden use cases where humans stay in the loop, such as regulated workflows that require immediate model-grounded suggestions.

Execution Risks and the Road Ahead for Both Companies

The promise is clear; execution is the test. Cerebras must stand up capacity on an aggressive schedule, secure grid access, and keep yields and uptime high. OpenAI, meanwhile, will have to optimize its models and serving stack for wafer-scale inference, integrate new compilers and runtime tooling, and manage routing across a heterogeneous fleet that includes GPUs and other accelerators.

If the rollout meets expectations, the industry could see a sharper split between training platforms and inference-first fabrics, with power planning and silicon diversity becoming as strategic as model architecture. For now, the deal marks a pivotal bet: that speed—measured in milliseconds—will define the next era of AI adoption.