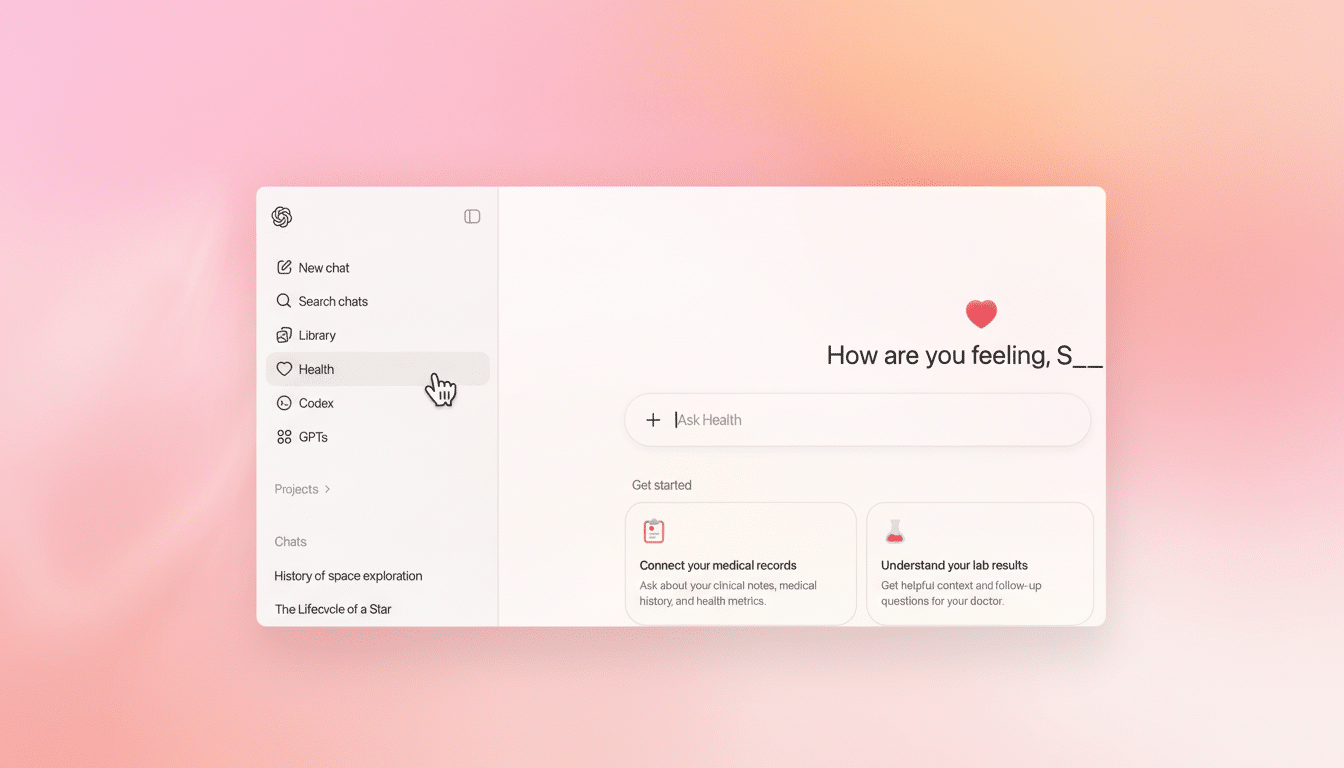

OpenAI is introducing Health Mode in ChatGPT, a dedicated experience meant to answer general health and wellness questions using your personal information, if you choose to share it. Approximately 40 million people already ask ChatGPT medical questions each day, OpenAI says, and Health Mode seeks to ground those conversations in your own records and wearable data without trying to diagnose or replace clinicians.

What ChatGPT Health Mode Does and How It Supports Users

Health Mode creates a distinct space within ChatGPT focused on medical and wellness conversations. It can draw on information you supply, including visit summaries, lab results, and activity or sleep trends, so you can prepare for a doctor’s visit, keep track of the questions you want to raise, or spot patterns across medications, symptoms, and lifestyle.

It’s like a smart notebook for your health conversations. You might ask, “Summarize the changes in my blood pressure since March,” or, “Write three questions I can ask my cardiologist about these echocardiogram notes.” It can also produce nutrition or exercise plans tailored to your characteristics, with the explicit disclaimer that it is not a diagnostic tool and should not be relied upon for treatment decisions.

Using Health Mode: Access, Setup, and Connecting Your Data

Access begins with a waitlist. OpenAI will invite a small group of early users first, with wider availability to follow as it iterates on the experience across web and iOS. Once you are logged in to your account, choosing Health from the ChatGPT menu on the left side of the screen takes you to a dedicated space.

From there, you can link sources such as patient portals, lab reports you upload, pharmacy information you submit, and supported health-tracking apps. You can keep it light, asking general questions without connecting any data, or let Health Mode refer to your records for a more customized answer.

Good starting prompts include:

- “Create a pre-appointment checklist that covers the issues in this clinic note.”

- “Explain my lipid panel in plain language and list follow-up questions.”

- “Compare last month’s sleep duration to this month’s and flag anything different.”

You can upload files to a chat, set preferences, and choose which connections are active at any given time.

Privacy and Security Promises Behind ChatGPT Health Mode

OpenAI stresses that health data is deeply personal. On top of ChatGPT’s default encryption at rest and in transit, Health Mode “layers extra protections with health in mind,” which OpenAI says adds isolation so that health conversations remain compartmentalized. Health chats, connected apps, and files live in their own memory and won’t commingle with your other conversations, although relevant context from standard chats may surface in Health if it’s helpful.

OpenAI also says Health conversations will not be used to train its base models. Users can review their connections, disconnect apps, and delete files. As with any consumer health tool, it’s wise to minimize unnecessary identifiers in your account settings, check your data-sharing settings, and consider periodically transferring or deleting anything you no longer need.

Regulators have stepped up scrutiny of health apps. The Federal Trade Commission has used the Health Breach Notification Rule to penalize companies that mishandled sensitive health data, and privacy guidance from agencies and advocacy groups calls for clear consent and transparency. Users should verify how their providers manage data shared through third-party tools, and be aware that not all consumer apps are bound by the same legal requirements as clinical systems.

Why It Matters for Organizing Your Personal Health Data

People’s health information is scattered across provider portals, wearable companies’ dashboards, medical and pharmacy records, and personal notes. According to the U.S. Office of the National Coordinator for Health IT, nearly all non-federal acute care hospitals (96%) have adopted certified electronic health records, yet patients are still forced to navigate multiple logins and formats. A single conversational layer might reduce that friction and help patients see the whole picture.

Consumer-generated data is growing, too. Pew Research Center has found that roughly 21% of American adults wear a smartwatch or fitness tracker, generating streams of activity, heart rate, and sleep data. By bringing these signals together with labs and medications, users can ask better questions and plan more efficient visits.

Still, caution is warranted. Traditional symptom checkers have fallen short on accuracy: an often-quoted BMJ study found that they listed the correct diagnosis first only 34% of the time and varied widely in triage performance, highlighting the risks of automated guidance. More recently, an analysis in JAMA Internal Medicine found that clinicians rated ChatGPT’s answers to questions from an online health forum higher in quality and empathy than physicians’ responses, though the study didn’t test safety or clinical outcomes. Health Mode’s value will depend on strong guardrails, clear scope, and how well it captures nuance and uncertainty.

Early Caveats and What You Can Expect from Health Mode

ChatGPT Health is intended for everyday questions and organization, not for urgent care or diagnosis. If you have red-flag symptoms or an emergency, contact a clinician or local emergency services. As the waitlist opens up, expect more features aimed at integrations, granular consent controls, and explainability, for example citations showing which parts of your records were used to create a summary.

If OpenAI can strike a balance among personalization, privacy, and precision, Health Mode could become a helpful intermediary between fragmented health data and the conversations that count most: those with your care team. For now, it’s a promising first step toward turning isolated information into useful, usable context.