OpenAI unveiled Prism, a free AI workspace built for scientists, positioning it as a research-first environment that blends a word processor, literature tool, and reasoning assistant. Available to anyone with a ChatGPT account, Prism centers on GPT-5.2 to help assess claims, tighten prose, and surface prior work without attempting to run experiments on its own.

The pitch is straightforward: give researchers an interface that respects scientific workflows, speaks LaTeX fluently, and keeps project context intact so the model's suggestions are specific to a paper, dataset, or line of inquiry. OpenAI executives say the goal is acceleration, not automation: a co-pilot that could reshape research the way AI coding environments reshaped software teams.

What Prism offers inside OpenAI’s research-first workspace

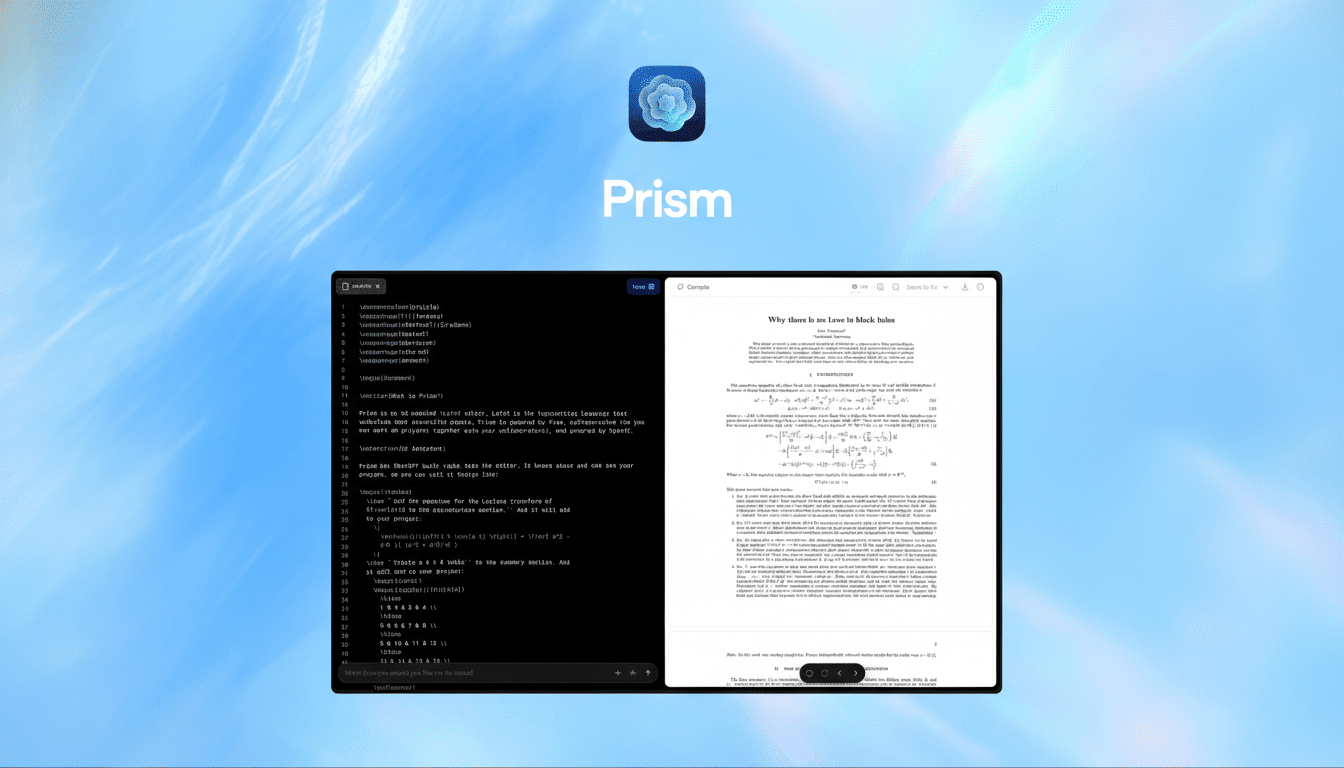

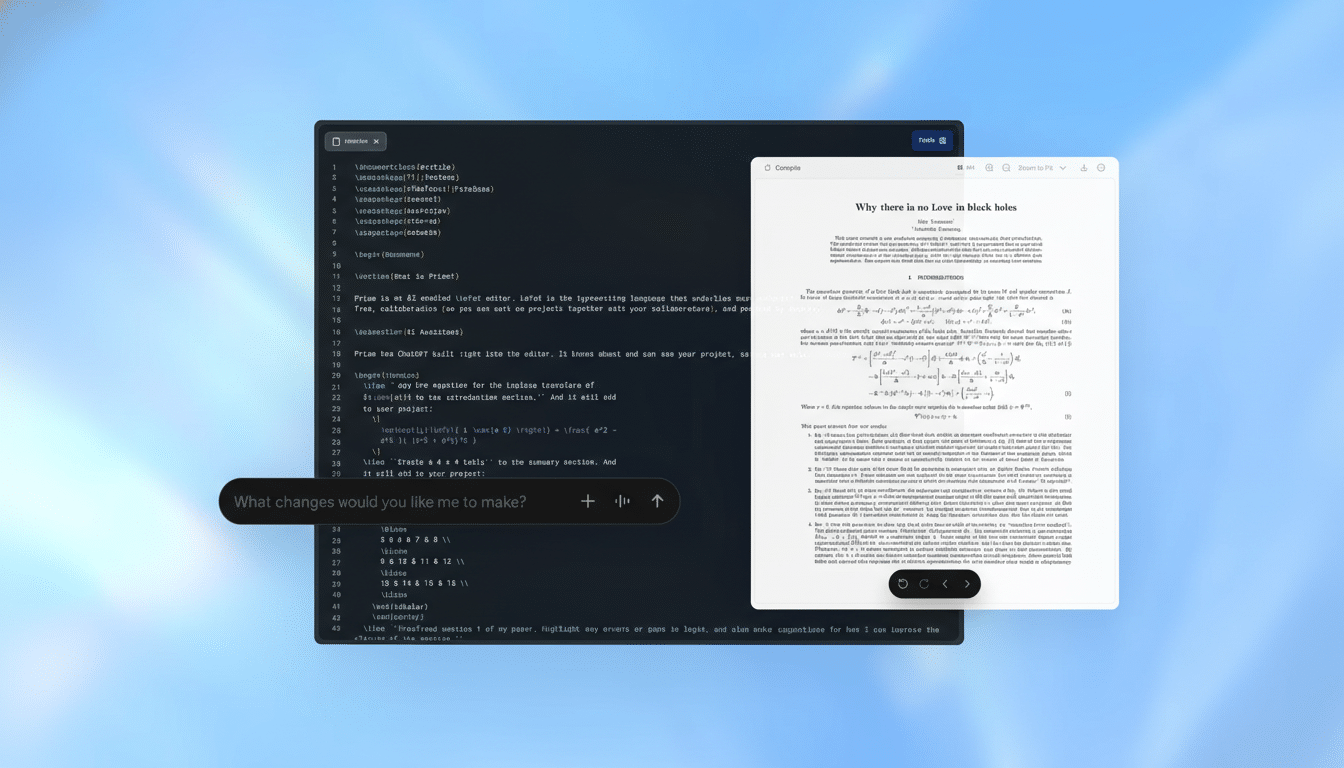

At its core, Prism is an AI-enhanced editor. Scientists can draft in LaTeX, ask GPT-5.2 to check logical consistency, and request literature pointers with citations they can verify. Prism keeps the full scope of a project in context across sessions, so its feedback is less generic and more attuned to the argument, methods, and results at hand.

Visual reasoning is part of the design. Researchers can sketch on a digital whiteboard and have the model help turn those sketches into clear diagrams for figures, a common bottleneck when moving from scratch notes to publication-grade schematics. Because Prism is a web app layered on ChatGPT, there is nothing to install, and an existing ChatGPT account is all it takes to get in.

OpenAI likens the experience to modern coding workspaces such as Cursor or Windsurf: powerful models matter, but productivity comes from integrating into the actual workflow. The aim is for inline edits, literature cross-references, and context-aware suggestions to feel native rather than bolted on.

Why Prism matters for research quality and scientific rigor

OpenAI reports that ChatGPT already fields roughly 8.4 million weekly messages on advanced topics across the hard sciences, a signal that researchers and students are pushing general-purpose tools into specialized tasks. Prism funnels that demand into a workspace designed for rigor.

The shift isn't theoretical. AI systems have started contributing to mathematical proofs, including recent progress on problems in the Erdős tradition, by proposing lemmas and mapping connections across the literature. A December statistics paper credited GPT-5.2 Pro with helping craft new proofs around a core axiom, with humans guiding and verifying each step. OpenAI has framed these results as evidence that, in axiomatic fields, frontier models can accelerate hypothesis testing and proof discovery when paired with formal verification.

Prism arrives as academia wrestles with both opportunity and risk. A landmark 2016 Nature survey of more than 1,500 researchers found widespread reproducibility concerns: more than 70% of respondents said they had tried and failed to reproduce another scientist's experiments. Tools that standardize reasoning steps, enforce citation checks, and keep context anchored could help reduce careless errors and separate signal from noise in fast-moving fields.

How Prism fits alongside existing academic tools and workflows

For drafts and collaboration, Overleaf remains the de facto LaTeX hub, while reference managers like Zotero and EndNote dominate citations. Prism doesn’t replace those ecosystems so much as it tries to sit in the authoring moment where ideas, sources, and figures come together. If it can hand off clean LaTeX and accurate bibliographies, it can complement existing pipelines rather than force a switch.

Compared with general note-taking or consumer chatbots, Prism’s differentiator is context continuity tied to a research project. That helps avoid the model “drift” researchers complain about when each query starts cold. It’s the same principle that made AI coding assistants jump from novelty to necessity: persistent state turns clever completions into actual productivity.

Early use cases for Prism and the limits researchers face

Expect early adoption in fields where text and theory dominate: mathematics, theoretical physics, econometrics, and parts of computational biology. Typical tasks include sanity-checking derivations, clarifying notation, compressing dense related-work sections, and turning whiteboard sketches into publication-ready figures.

But limits remain. Large models can hallucinate citations or oversimplify edge cases, and no assistant should substitute for domain expertise or peer review. Labs will also ask hard questions about the privacy of unpublished manuscripts, the handling of proprietary data, and auditability, concerns research offices at major universities have already flagged. Prism's impact will hinge on clear data governance and its ability to run in compliance-conscious environments.

What to watch next as Prism integrates into research work

Three signals will indicate whether Prism becomes a staple:

- Measurable cuts in time-to-submission for manuscripts

- Fewer revision cycles with journals, thanks to clearer methods and claims

- Integrations with tools scientists already use, from arXiv workflows to citation managers and code notebooks

OpenAI's science lead framed 2026 as the year AI becomes as embedded in research as it became in software engineering the year before. If Prism can marry strong models with deep workflow fit, it may turn today's sporadic prompts into a reliable part of the scientific method, not by replacing scientists but by helping them think, write, and verify faster.