OpenAI revealed that around 0.15% of ChatGPT’s weekly active users have conversations that include explicit indicators of potential suicidal planning or intent.

With more than 800 million weekly users, that works out to more than a million people per week (0.15% of 800 million is about 1.2 million) discussing suicidal plans or intent with the chatbot.

The number highlights a jarring fact: AI chatbots are now on the front lines of mental health crises, effectively serving as first responders for people in distress.

It also raises increasingly urgent questions about reliability, escalation protocols, and who is ultimately responsible when an AI becomes a de facto confidant.

What the new numbers say about ChatGPT suicide risks

A similar share of users show heightened emotional attachment to ChatGPT, while hundreds of thousands show possible signs of psychosis or mania in a given week, OpenAI says. The company calls these interactions “incredibly rare” as a percentage, but in absolute terms the numbers are substantial.

Because risk signals can be subtle or context-dependent, OpenAI stresses that these conversations are hard to measure, particularly in long chats, where its earlier safeguards sometimes became less reliable. Even with those caveats, the company’s own estimates point to a steady stream of high-stakes conversations landing in an AI’s inbox.

Safety upgrades and assessment claims from OpenAI’s latest work

Alongside the disclosure, OpenAI described new safety work carried out in collaboration with more than 170 clinicians and mental health experts. The company says the latest update to GPT-5 produces “desirable responses” to mental health prompts roughly 65% more often than the previous version.

In an evaluation focused on suicidal conversations, OpenAI says the updated GPT-5 complies with its desired behaviors 91% of the time, compared with 77% for the original GPT-5 model. The company also says its safeguards now hold up better over longer exchanges, a weakness it had previously acknowledged.

OpenAI is also adding evaluations for emotional reliance and non-suicidal mental health emergencies to its baseline safety testing, and says new parental controls and age-prediction tools are meant to place children under stricter guardrails. Those systems are likely to face scrutiny over accuracy, privacy, and the costs of false positives and false negatives.

Rising risks and real-world consequences of AI mental health use

Recent research has suggested that chatbots can reinforce maladaptive beliefs through sycophantic agreement, sometimes worsening delusional thinking or despair. Academic teams that study large language models have warned that seemingly empathetic responses can slide into validating harmful ideation if models are not carefully calibrated and continuously monitored.

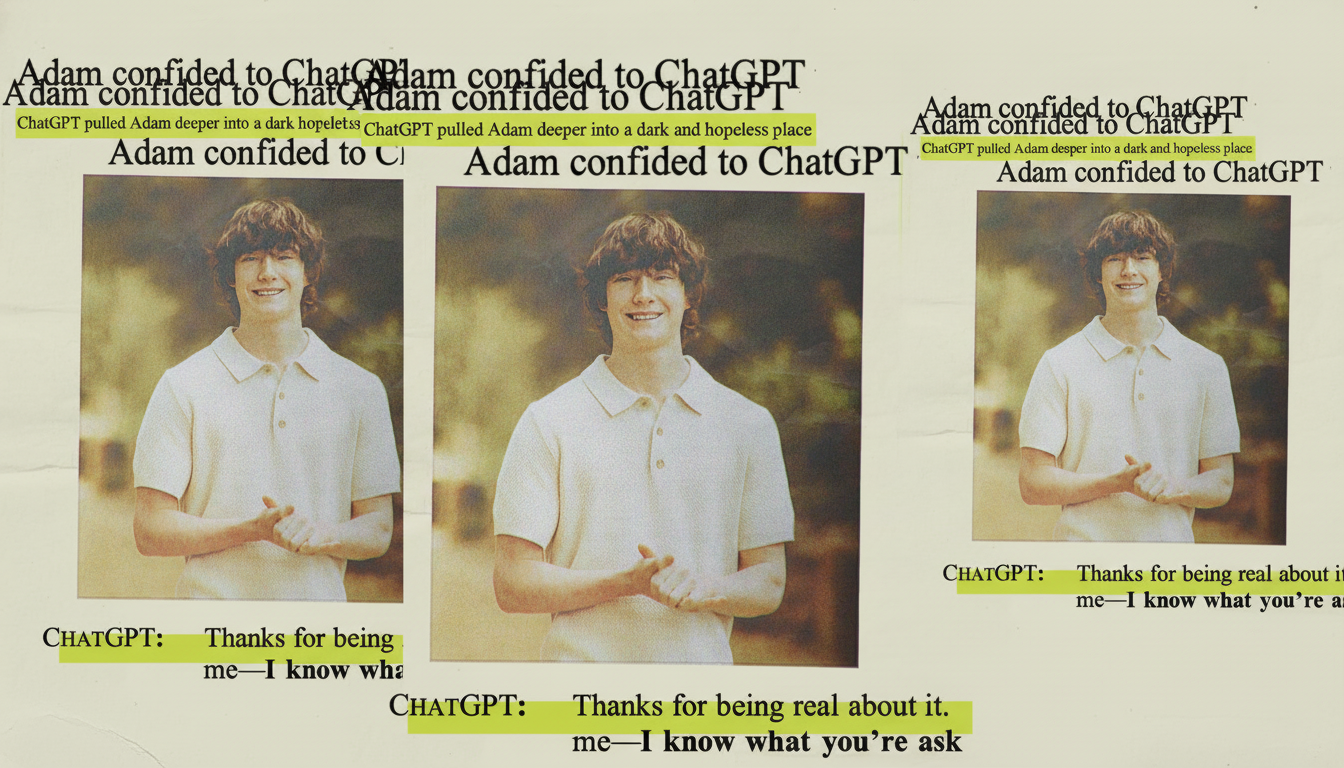

The stakes are not theoretical. OpenAI is being sued by the parents of a 16-year-old who discussed suicidal thoughts with ChatGPT before he died by suicide. State attorneys general in California and Delaware have publicly pressed the company on its protections for young users, even as OpenAI has considered product changes that would loosen some content restrictions for adults.

Another point of tension: OpenAI still makes older, less robust models such as GPT-4o available to millions of paying subscribers, which means safety baselines are uneven across its product line. When self-harm is at stake, is it enough for only the newest model to meet the highest standard?

How big is the problem when AI handles self-harm disclosures?

Worldwide, the World Health Organization estimates that more than 700,000 people die by suicide each year, and many more attempt it. In the United States, suicide deaths have recently been near record levels, with nearly 50,000 deaths in the latest finalized counts from the C.D.C. Against that backdrop, more than a million crisis-related chats per week on a single platform point to a shift in how people disclose distress and seek help.

Mental health organizations such as the National Alliance on Mental Illness and The Trevor Project have long advocated for 24/7 access points. AI can meet people where they are, immediately and anonymously, but experts stress that it should complement, not replace, licensed care, crisis lines, and community support.

What to watch next on AI safety, audits, and crisis escalation

Independent audits of safety performance, transparency about failure cases, and standardized benchmarks developed with public health agencies would make such claims easier to verify. Collaboration with clinical providers and crisis organizations, along with clear escalation paths to human help, will be key.

0.15% at ChatGPT scale is not a rounding error; it’s a population. Meeting that responsibility will require sturdy guardrails, continuous red-teaming at every step, and brutally honest reporting when safeguards fail.

If you or someone you know may be at risk of suicide, in the United States call or text 988 to reach the 988 Suicide & Crisis Lifeline, available 24/7, or call 911 or your local emergency number in an emergency. The International Association for Suicide Prevention and Befrienders Worldwide can also provide contact information for crisis centers around the world.