OpenAI is shedding light on how its AI agents steer clear of dangerous corners of the web, outlining a system designed to keep automated browsing useful without sacrificing safety. As more users delegate everyday tasks to AI that reads emails, clicks links, and fills forms, the company says it’s building defenses to keep agents from wandering into phishing traps or obeying hidden instructions planted on webpages.

Why AI Agents Need Strong Link Hygiene and Caution Online

AI agents act like power users on autopilot, which means they face the same online threats as humans—only at machine speed. Phishing, malware, and social engineering remain prolific across the open web. The FBI’s Internet Crime Complaint Center has consistently reported phishing as the most common cybercrime category, and business email compromise alone accounted for billions in annual losses. Verizon’s Data Breach Investigations Report has long found that around 68% of breaches involve the human element, a reminder that attackers thrive on tricking whoever—or whatever—does the clicking.

How an Independent Web Index Guides Safer Agent Browsing

Rather than confining agents to a rigid allowlist of “approved” websites—which would cripple utility—OpenAI describes a different gate: an independent web index. This index records public URLs known to exist broadly on the internet, built separately from any individual’s private data. If a page is in that index, the agent can open it as part of a workflow. If not, the user gets a clear warning and must grant explicit permission before the agent proceeds.

The goal is to shift the safety question from subjective trust in a domain to an objective signal about whether a specific address has appeared publicly without depending on user data. That reduces the risk of agents following bespoke, one-off links designed to exfiltrate information or lure the model into hostile territory. It also gives users a simple, understandable checkpoint when an agent encounters something that looks off the beaten path.

Imagine an agent triaging your inbox. A shipping notification includes a tracking URL that isn’t on the public index. Instead of auto-clicking, the agent pauses and asks for confirmation, explaining the risk. That friction is intentional: it’s easier to prevent a bad click than to unwind the consequences.

Guarding Against Prompt Injection in Untrusted Web Content

Links aren’t the only hazard. Webpages can contain “prompt injections”—hidden or overt instructions crafted to make the model ignore its rules, leak data, or execute unintended actions. The issue has become a top concern across the industry; the OWASP Top 10 for LLM Applications lists prompt injection as its first risk category, and security researchers have repeatedly shown how untrusted web content can coerce agents into tool misuse.

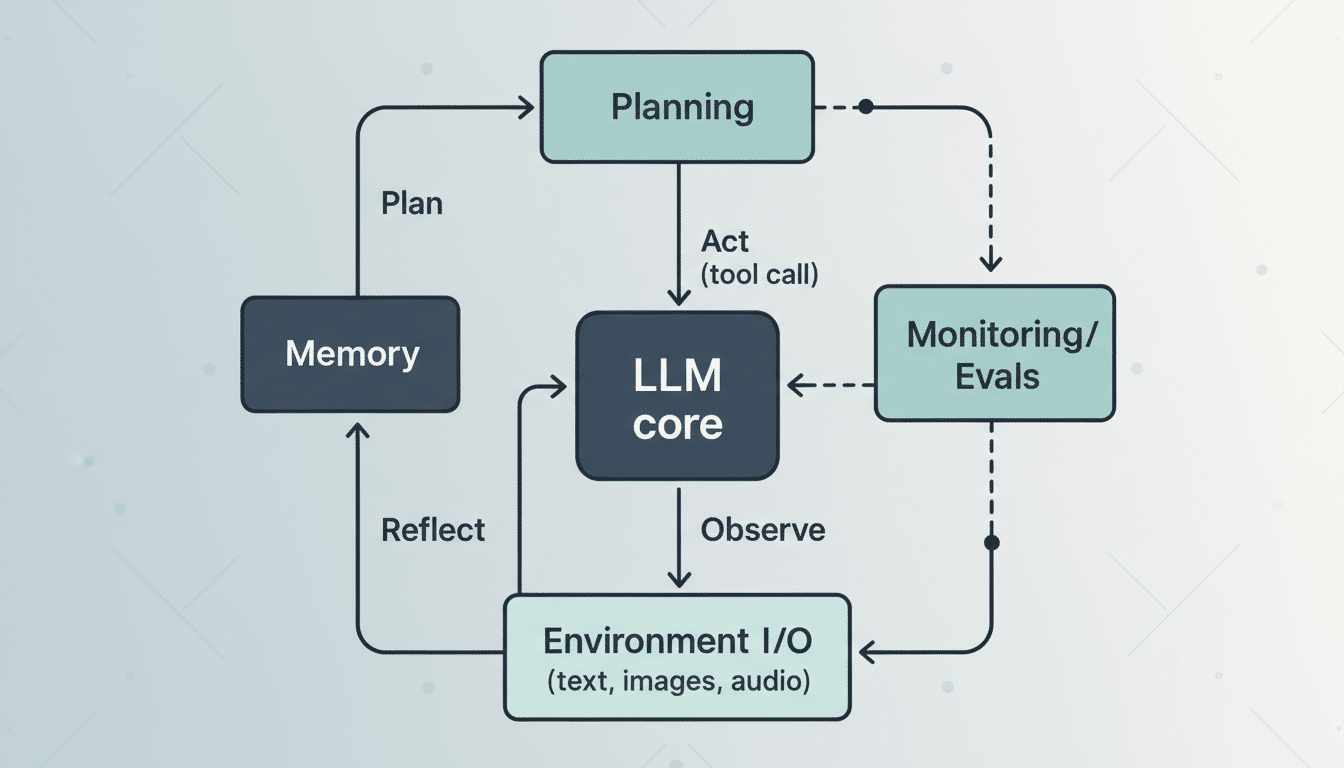

OpenAI frames the web index as one layer in a larger stack. In practice, layered defenses typically include content scanning for instruction-like patterns, restricting what tools the agent can invoke on untrusted pages, and requiring user approval for high-impact actions like downloading executables, forwarding emails, or retrieving sensitive files. Logging and sandboxed browsing add containment, while rate limits and timeouts blunt automated exploitation attempts.

OpenAI’s earlier research on agentic behavior highlights the broader challenge: an agent that reads and acts on web content can be manipulated unless it continuously questions source reliability and intent. The company emphasizes that no single mechanism—indexing included—eliminates risk; it reduces the blast radius and raises the cost for attackers.

Tradeoffs And How This Differs From Blacklists

A traditional blacklist or allowlist is easy to explain but brittle in the wild. The web changes constantly, and attackers routinely spin up fresh infrastructure. An independent index offers broader coverage with fewer false blocks, ensuring agents can still fetch legitimate documents hosted on smaller sites or new subdomains while putting brakes on obscure or ephemeral links that haven’t appeared publicly.

There are limits. A publicly indexed page can still be malicious, and sophisticated prompt injections can live on popular, legitimate domains. That’s why OpenAI stresses user-in-the-loop confirmations and multiple guardrails. The system is about reducing blind trust, not granting blanket approval to everything that’s been seen before.

What It Means for Users and Developers Building With Agents

For end users, the practical change is more transparent checkpoints: agents flag suspicious links, explain why, and ask for consent when uncertainty is high. For developers building on top of agent platforms, it underscores a security posture that treats the open web as untrusted by default and scopes what the agent can do based on context and provenance.

The bigger picture is that safety for AI agents can’t rely solely on model alignment or static blocklists. It requires infrastructure—like an independent web index—backed by real-time risk signals, clear user prompts, and conservative defaults. As more organizations roll out autonomous workflows, the companies that combine usable automation with layered defenses will be the ones users trust.

OpenAI’s message is straightforward: make agents powerful enough to be helpful, cautious enough to avoid obvious traps, and honest about the moments when a human decision still matters.