Nvidia has announced a $5 billion equity investment in Intel and an expansive plan to jointly develop multiple generations of data center and PC silicon, a move that could reshape competition and supply chains across the AI hardware industry. The investment, at $23.28 a share according to Reuters, would give Nvidia a stake of about 4 percent in Intel. Market reaction was swift, with Intel shares jumping as investors weighed the prospect of a joint CPU–GPU roadmap built for accelerated computing.

At the core of the deal is architectural integration. By pairing Intel x86 processors with Nvidia GPUs over NVLink, Nvidia’s high-bandwidth interconnect, the companies aim to remove the data-flow bottlenecks that slow large-scale AI training and inference. The partnership spans custom server CPUs optimized for Nvidia’s platforms and new PC chips that combine Intel x86 cores with Nvidia RTX graphics chiplets, products the two companies are provisionally calling “x86 RTX SoCs.”

Inside the collaboration on co-designed CPUs, GPUs and SoCs

For data centers, Intel will build a line of custom x86 CPUs designed specifically to work with Nvidia’s AI systems for hyperscalers and enterprises. Core counts, cache hierarchies, I/O and memory channels can be expected to be tailored for GPU-attached workloads and high-throughput fabrics. Rather than relying solely on standard PCI Express links, the companies plan to use NVLink interconnects to raise utilization and cut latency in multi-GPU clusters.

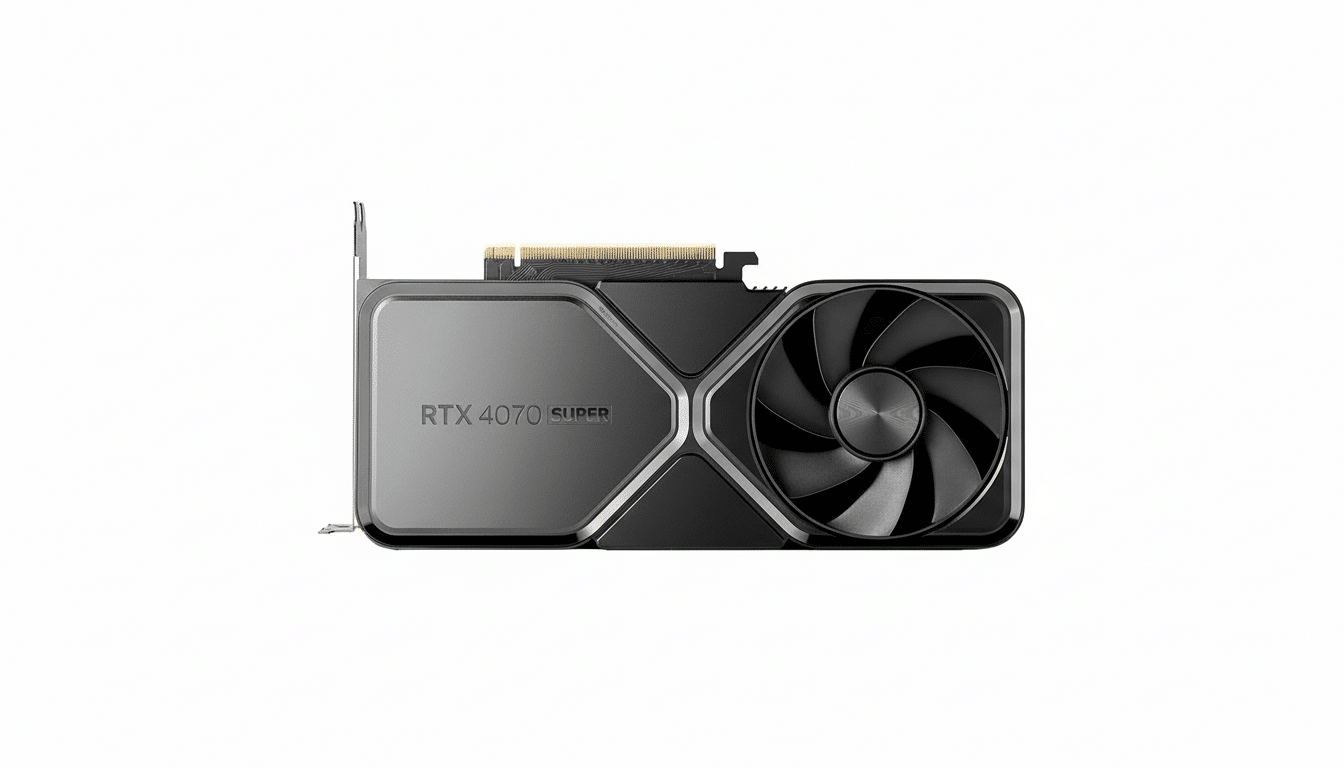

On the client side, Intel intends to build x86 SoCs that integrate Nvidia RTX chiplets through advanced packaging. That fits the industry’s broader shift toward chiplet-based processor designs, where mixing process nodes and IP blocks can deliver better performance per watt and faster time to market. It is also a direct challenge to competing PC platforms that pair x86 CPUs with integrated or add-in GPUs.

What the pact means for AI data centers and GPU clusters

Bandwidth between CPUs and GPUs is a limiting factor in AI clusters. PCIe 5.0 x16 tops out at around 64 GB/s per direction; NVLink, by comparison, aggregates hundreds of gigabytes per second to and from each GPU across multiple links. That extra headroom matters more as parameter counts and memory footprints keep growing and as operators scale out to racks of tightly coupled accelerators.
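A back-of-the-envelope calculation shows where the PCIe figure comes from and what the gap means in practice. This sketch assumes PCIe 5.0’s nominal 32 GT/s per lane with 128b/130b encoding (the often-quoted 64 GB/s is the raw rate before encoding overhead), and uses the Hopper-generation NVLink figure of 18 links at about 25 GB/s per direction each purely as one illustrative data point. The 80 GB payload is hypothetical, and the model ignores protocol overhead, latency and contention, so real throughput would be lower on both links.

```python
# Idealized link-bandwidth comparison using nominal published rates, not measurements.

def pcie_gbps_per_direction(gt_per_s: float, lanes: int) -> float:
    """Usable PCIe bandwidth in GB/s per direction, assuming 128b/130b encoding (PCIe 3.0 and later)."""
    bits_per_s = gt_per_s * 1e9 * lanes * (128 / 130)  # one bit per transfer per lane, minus encoding
    return bits_per_s / 8 / 1e9                         # bits -> bytes -> GB


def transfer_seconds(payload_gb: float, gb_per_s: float) -> float:
    """Idealized transfer time that ignores latency, protocol overhead and contention."""
    return payload_gb / gb_per_s


pcie5_x16 = pcie_gbps_per_direction(32.0, 16)  # about 63.0 GB/s
# Assumption: Hopper-generation NVLink, 18 links x 25 GB/s per direction (illustrative only).
nvlink4 = 18 * 25.0

payload_gb = 80.0  # hypothetical payload size
for name, bw in [("PCIe 5.0 x16", pcie5_x16), ("NVLink, 18 links", nvlink4)]:
    secs = transfer_seconds(payload_gb, bw)
    print(f"{name}: {bw:.1f} GB/s per direction, {secs:.2f} s for {payload_gb:.0f} GB")
```

Under these assumptions, moving 80 GB takes roughly 1.27 s over PCIe 5.0 x16 versus about 0.18 s over the aggregated NVLink links, which is the kind of gap the partnership aims to narrow.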

Custom x86 parts designed for GPU-accelerated workloads could also simplify system design considerably, with CPU core counts sized for orchestration rather than general-purpose compute and memory subsystems tailored to keeping GPUs fed with training data. Combined with CXL-based memory expansion and faster NVLink fabrics, that could reduce stalls and raise effective performance per watt, an increasingly important metric for cloud providers working within tight power envelopes.

Real-world precedent supports the strategy. Nvidia’s DGX and HGX platforms already rely on dense interconnects and sophisticated packaging to keep accelerators fully occupied. Bringing CPU design into that effort through a co-optimized roadmap could extract more efficiency from every GPU dollar, especially while demand for top-end accelerators far outpaces supply.

PC strategy: x86 RTX SoCs built with Intel packaging

On PCs, the x86 RTX SoC is a showcase for a chiplet-first approach. Intel’s packaging technologies, such as EMIB for side-by-side bridging and Foveros for 3D stacking, can combine dissimilar tiles in a single package, while Nvidia contributes graphics and AI acceleration optimized for creator workflows and on-device inference. The result could be laptops and desktops that offer discrete-class RTX performance with better thermals and battery life than traditional two-chip designs.

The move also raises the competitive stakes for consumer PCs. AMD has brought integrated graphics and AI NPUs to its latest x86 parts, while Arm-based designs continue to compete on efficiency in some form factors. A co-engineered Intel–Nvidia client roadmap signals that both companies see room to grow at the premium end of the PC and workstation markets, with performance-class GPUs and AI-driven features as reasons to buy.

Supply chain and foundry angle: capacity, packaging, HBM

The deal is as much about the supply chain as it is about product blueprints.

Nvidia has relied heavily on TSMC for leading-edge nodes and CoWoS packaging, where demand has exceeded capacity. Access to Intel’s manufacturing and advanced packaging could diversify supply over time and add long-term resilience, even if Nvidia’s highest-end GPUs continue to be built at TSMC. Industry analysts have repeatedly cited advanced packaging and high-bandwidth memory as choke points for AI hardware.

TrendForce has pointed to triple-digit annual growth in HBM bit shipments as AI training scales up, underscoring how memory and packaging set the pace for system availability. Synchronizing roadmaps for CPUs, interconnects and manufacturing capacity improves the odds that complete platforms, not just individual chips, ship on time and in volume.

Market implications and open questions for both companies

The $5 billion stake is as symbolic as it is strategic. Roughly 4 percent is not a commanding position, but it publicly ties the companies’ roadmaps together in an industry where time-to-capacity can be as valuable as raw capacity. Reuters reports that the share purchase would make Nvidia one of Intel’s larger investors, a notable move as Intel leans more on its foundry business and Nvidia races to keep up with insatiable demand for AI.

There are complications. Intel is still building its own accelerators, for instance, and Nvidia already ships Arm-based Grace CPUs in some of its servers. Clear product segmentation will be needed to manage the partner-competitor dynamics. Regulators may also examine whether the closer integration affects competition, especially in the data center. Still, the logic of the deal is simple: combine Nvidia’s leadership in accelerated computing with Intel’s CPU platforms and manufacturing to build AI systems that are more predictable and scalable.

If execution matches intent, businesses could deploy denser, better-balanced racks, and PC users could get RTX-class performance in slimmer designs, while the industry gains another way to scale the hardware that underpins generative AI. As one industry observer put it, the quickest path to more AI isn’t a miracle chip breakthrough; it’s removing bottlenecks across the entire stack.