On the desktop side, Nvidia has begun selling the DGX Spark, a small-form-factor, developer-centric mini PC for $3,999 that brings the firm’s data center DNA to your desk.

Billed as “the world’s smallest AI supercomputer,” the 2.6-pound system is designed for local model training, fine-tuning, and high-throughput inference, not for consumer gaming or everyday productivity.

DGX Spark launch details and pricing information

DGX Spark can be ordered directly from Nvidia, as well as through select retailers, with an MSRP of $3,999 before local taxes or tariffs.

This is a workstation-class mini PC aimed at AI developers, research teams, and students who want reliable on-prem compute without wrangling cloud quotas or spot-instance volatility.

Instead of Windows, the system boots to DGX OS, Nvidia’s curated Ubuntu-based platform, which comes preconfigured with the CUDA stack and container tooling, as well as access to Nvidia’s NGC catalog of optimized frameworks and model containers. The out-of-the-box experience is meant to keep setup time minimal: pull the containers, mount datasets, and start training or serving your models.

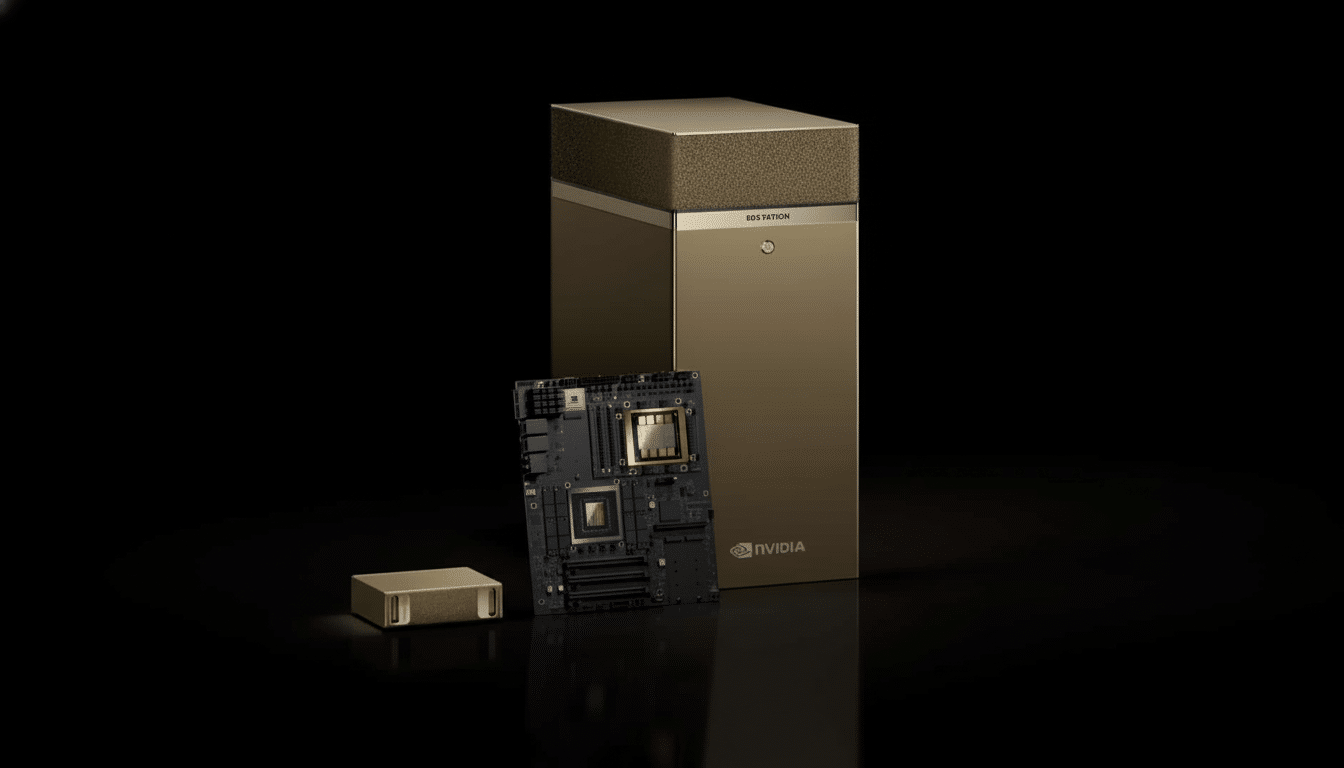

DGX Spark hardware components and software stack overview

Central to DGX Spark is Nvidia’s GB10 “superchip,” combining a 20-core Arm-based Grace CPU with a Blackwell-class GPU. Nvidia claims the GPU has a feature set and CUDA core configuration similar to a GeForce RTX 5070, but says the setup is optimized for AI workloads and not rasterized graphics. The system uses 128GB of LPDDR5X unified memory shared between the CPU and GPU, which suits the large tensor pipelines of deep learning workloads because it eliminates the PCIe memory hops seen with discrete GPU setups.
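To put the 128GB figure in perspective, here is a rough back-of-the-envelope sketch of how much memory model weights alone would occupy at different precisions. The model sizes and precisions are illustrative assumptions rather than figures from Nvidia, and the estimate ignores KV cache, activations, optimizer state, and OS overhead, so real headroom will be smaller.

```python
# Rough weights-only sizing against the 128GB unified memory pool.
# Assumptions (not from the article): the parameter counts and precisions
# below are illustrative, 1 GB is taken as 10**9 bytes, and KV cache,
# activations, optimizer state, and OS overhead are ignored.

UNIFIED_MEMORY_GB = 128  # capacity quoted for DGX Spark

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weights_gb(params_billions: float, precision: str) -> float:
    """Return the approximate size of the model weights in GB."""
    # (params_billions * 1e9 params) * bytes / (1e9 bytes per GB)
    return params_billions * BYTES_PER_PARAM[precision]


def fits(params_billions: float, precision: str) -> bool:
    """True if the weights alone fit in the unified memory pool."""
    return weights_gb(params_billions, precision) <= UNIFIED_MEMORY_GB


if __name__ == "__main__":
    for size in (8, 70, 200):  # hypothetical model sizes, in billions
        for precision in BYTES_PER_PARAM:
            gb = weights_gb(size, precision)
            verdict = "fits" if fits(size, precision) else "does not fit"
            print(f"{size}B @ {precision}: {gb:.0f} GB of weights, {verdict}")
```

Under these assumptions, a hypothetical 70-billion-parameter model needs about 140 GB at 16-bit precision, which exceeds the pool, but about 70 GB at 8-bit, which is why quantization matters on a box like this.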

With 4 terabytes of NVMe storage onboard, there is plenty of room for local datasets and checkpoints. Connectivity comprises four USB-C ports, Wi-Fi 7, and HDMI for an easy desk setup. More importantly, it plugs into a regular AC outlet, so you don’t need data center power or cooling to keep it running.

DGX OS comes with Nvidia’s AI software already in place: CUDA and cuDNN for acceleration, TensorRT and TensorRT-LLM for optimized inference, as well as Triton Inference Server for serving. The stack is designed to enable modern, containerized workflows common in industry and academia, providing PyTorch and JAX environments that can be pulled from NGC with reproducible performance baselines.

Who DGX Spark is designed for and primary use cases

DGX Spark is for teams that iterate on models locally, whether because privacy and data gravity demand it or because the cloud does not offer predictable costs. University labs, startups, and enterprise R&D groups alike can use it for rapid prototyping, customizing domain-specific LLMs, and fitting classical ML pipelines or computer vision models without having to wait for shared cluster time.

From a cost perspective, public price sheets from cloud providers show that GPU instances usually start at a couple of dollars per hour and climb into the low double digits depending on the GPU and its configuration. For continual workloads, a $3,999 desktop costs about as much as hundreds of hours of midrange GPU rental, with the added bonus that data doesn’t have to leave your premises and there are no egress fees. The other side of this: it’s a single-node box, not a replacement for multi-GPU training jobs that require H100-class throughput.
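The break-even arithmetic behind that comparison can be sketched in a few lines. The hourly rates below are hypothetical placeholders chosen to span the range described above, not quotes from any provider, and the estimate ignores electricity, maintenance, and resale value.

```python
# Break-even estimate: hours of cloud GPU rental that cost the same as the
# $3,999 purchase price. The hourly rates are hypothetical placeholders, not
# quotes from any provider; power, maintenance, and resale value are ignored.

DGX_SPARK_PRICE_USD = 3999  # MSRP from the article


def break_even_hours(hourly_rate_usd: float,
                     price_usd: float = DGX_SPARK_PRICE_USD) -> float:
    """Return the number of rental hours that equal the purchase price."""
    if hourly_rate_usd <= 0:
        raise ValueError("hourly rate must be positive")
    return price_usd / hourly_rate_usd


if __name__ == "__main__":
    for rate in (2.0, 5.0, 10.0):  # hypothetical USD-per-hour rates
        hours = break_even_hours(rate)
        days = hours / 24
        print(f"${rate:.2f}/hour -> {hours:,.0f} hours "
              f"(~{days:.0f} days of continuous use)")
```

At an assumed $5 per hour, for example, the purchase price equals roughly 800 hours of rental, or about 33 days of round-the-clock use. Whether that favors buying depends on how steadily the hardware would actually be kept busy.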

Competition landscape and the growing DGX Spark ecosystem

Nvidia gave partners a preview of the design at Computex, with ecosystem players Acer, Asus, Dell, Gigabyte, HP, Lenovo, and MSI showing their own takes on the DGX Spark concept. Acer’s Veriton GN100 AI Mini Workstation is one example of such an offering built on Nvidia’s platform. For heavier lifting, Nvidia is laying the groundwork for its larger DGX Station counterpart, a full-tower workstation based on the GB300 Grace Blackwell Ultra platform and distributed via vendors such as Asus, Boxx, Dell, HP, and Supermicro.

DGX Spark also sits above Nvidia’s Jetson portfolio. Whereas Jetson boards are optimal for low-power edge inferencing, Spark focuses on desktop-scale development with more memory headroom and a richer software stack. Versus upstart “AI PCs” from CPU vendors, Spark goes all in on CUDA and Nvidia’s enterprise tooling, prioritizing training and high-performance inference over “consumer” features.

Early takeaways and initial impressions of Nvidia DGX Spark

Built around unified memory and a tuned Blackwell GPU, the DGX Spark is well suited for fine-tuning small to mid-size foundation models, experimenting with retrieval-augmented generation, and serving quantized LLMs at low latency. For developers working on computer vision pipelines, speech models, or tabular ML with RAPIDS, the container-first approach and the minimal friction from prototype to pilot should be a good fit.

The limitations are simple: it is not a gaming rig, and it is not going to stand in for multi-node training infrastructure on huge models. But as a personal AI workstation, one that boots into a known-good stack and runs from an ordinary outlet, it fills a gap between hobbyist dev kits and the sticker shock of data center GPUs.

Nvidia’s push into desk-friendly AI hardware also points to where the market is going. The company has seen growing interest in on-device and on-prem AI development as teams look for faster iteration and tighter control over their data. With DGX Spark, Nvidia is betting that the appeal of shrinking the form factor without thinning out the software ecosystem will outweigh the downsides, and that this is exactly what a large chunk of developers have been waiting for.