In back-to-back appearances at the World Economic Forum in Davos, Nvidia’s Jensen Huang and Microsoft’s Satya Nadella pushed back on the idea that artificial intelligence is in a speculative bubble. Huang framed the wave of spending as a historic build-out of computing infrastructure, while Nadella argued the technology’s value will hinge on how fast it spreads across the real economy.

What Is Fueling Their Confidence in AI’s Long-Term Value

Huang called today’s AI investment cycle “the largest infrastructure build-out in human history,” pointing to a global pipeline of data centers, specialized GPUs, networking gear, and power upgrades that he says are already matched to workloads. Nvidia’s growth has been propelled by demand for its accelerator chips, and the company’s valuation has swelled to roughly $4 trillion on expectations that AI training and inference will keep absorbing capacity for years.

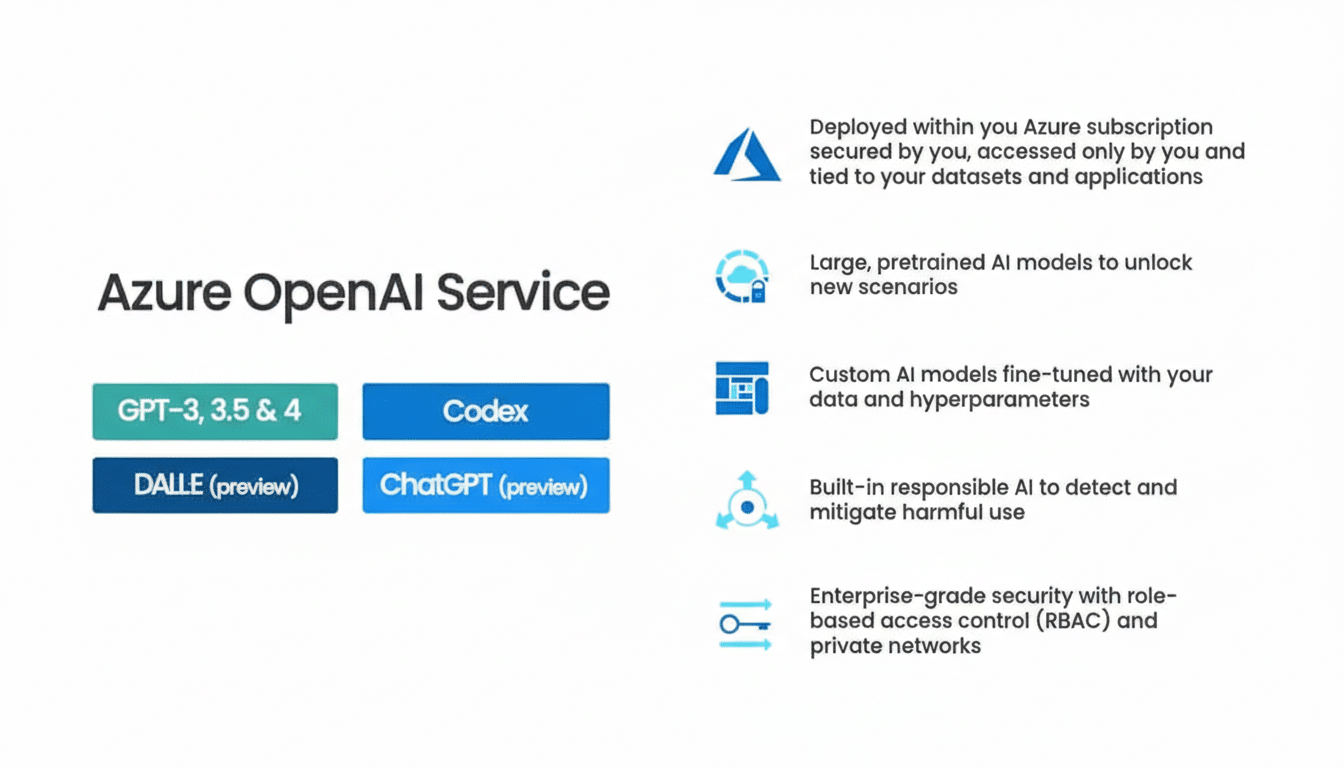

Nadella, for his part, emphasized adoption over hype. He noted that for AI to avoid bubble dynamics, productivity gains must reach sectors far beyond Big Tech. Microsoft has said that more than 50,000 organizations use its Azure OpenAI services, and its enterprise AI copilots are being rolled out across coding, productivity suites, and customer service. In recent quarters, Microsoft told investors that AI services added multiple points to Azure’s growth, an early signal that customers are paying for real utility rather than just pilots.

The Infrastructure Math And The Power Question

Follow the capex: hyperscalers have guided to eye-popping outlays as they race to build AI capacity. Company disclosures and analyst tallies put combined annual data center and AI spending by the largest cloud providers comfortably in the hundreds of billions of dollars. Meta lifted its capex plans into the $35–$40 billion range, while Microsoft, Amazon, and Google have all telegraphed elevated investment tied explicitly to AI clusters and network fabric.

That spending collides with a practical constraint: electricity. The International Energy Agency has warned that global data center power use could roughly double within a few years, approaching 1,000 terawatt-hours if current AI trends persist. Utilities are scrambling to accommodate new loads, and chipmakers are racing to improve performance per watt. Nvidia’s roadmap, which moves from H100 to H200 and on to its next-generation platforms, aims to push total cost of ownership down, but data center siting, grid capacity, and cooling remain the factors gating AI’s pace.

Signals From Markets And Skeptics On AI Valuations

Skeptics point to bubble-like signs: soaring valuations and marketing-heavy product launches that have yet to deliver commensurate profits. Supporters counter that this looks more like the broadband build-out of the 1990s than tulip mania. In their view it is expensive and uneven, but it is ultimately laying rails for decades of applications. In Davos, BlackRock’s Larry Fink captured the nuance: there will be big failures, he said, but that doesn’t mean the entire sector is a bubble.

The “returns” question is central. McKinsey, for example, estimates that generative AI could add $2.6–$4.4 trillion in annual economic value as use cases scale. Early field results are mixed but encouraging. Controlled studies of coding assistants have reported 20–50% reductions in task time, and customer support copilots show measurable gains in resolution speed and satisfaction. If those gains persist across finance, healthcare, manufacturing, and the public sector, the current capex could be justified. If not, utilization rates will fall and valuations will be marked down.

Winners, Diffusion And The Next Tests For AI Adoption

Nadella’s litmus test is diffusion. For AI to dodge bubble conditions, benefits must spread beyond early adopters and beyond a handful of platforms. That means lowering inference costs, reducing latency, simplifying deployment, and addressing data governance and safety so regulated industries can move from pilots to production. Policy clarity will also shape adoption: the EU AI Act, U.K. safety initiatives, and U.S. agency guidance are setting guardrails that large buyers increasingly require.

Key indicators to watch over the next year:

- GPU and accelerator utilization in cloud regions

- The ratio of AI capex to realized AI revenue

- Power purchase agreements tied to new data centers

- Whether unit economics improve: tokens per dollar, tasks per watt, and accuracy per parameter

Market structure will matter too. If a few platforms capture most of the value, the “rational bubble” thesis suggests that valuations elsewhere will deflate. If tools become cheaper and more open, demand may broaden even as margins compress.

The message from Davos was clear: industry leaders reject the bubble label, betting that infrastructure, power, and practical use cases will converge into a durable cycle. The burden of proof now shifts from chip shipments and demo days to measurable productivity at scale. If AI’s promised gains show up on income statements across the economy, the skeptics fade. If they don’t, the bubble talk will return with a vengeance.