A participant with ALS in Neuralink’s clinical trial has used mind control of a robotic arm to lift a cup and bring it toward his mouth so he could drink through a straw. The participant, Nick Wray, shared video of the feat and described using the system for simple-seeming tasks such as putting on a hat, opening the fridge, and feeding himself. These milestones mark a rapid evolution from “can control little cursor thing” to “actual dexterous assistive robotics.”

The brain-reading device, a neural implant, translates a user’s intention to move into computer commands that control the arm, and relays feedback from sensors in the arm’s fingers and palm. Wray has also tested the interface for low-speed wheelchair navigation in his own home and set new personal records on standardized assessments of manual dexterity. These include transferring 39 metal cylinders from a box into wells in a five-minute timed trial, and flipping plastic pegs upright, a task used to evaluate upper-extremity function after stroke.

From mind to machine: real-time decoding and control

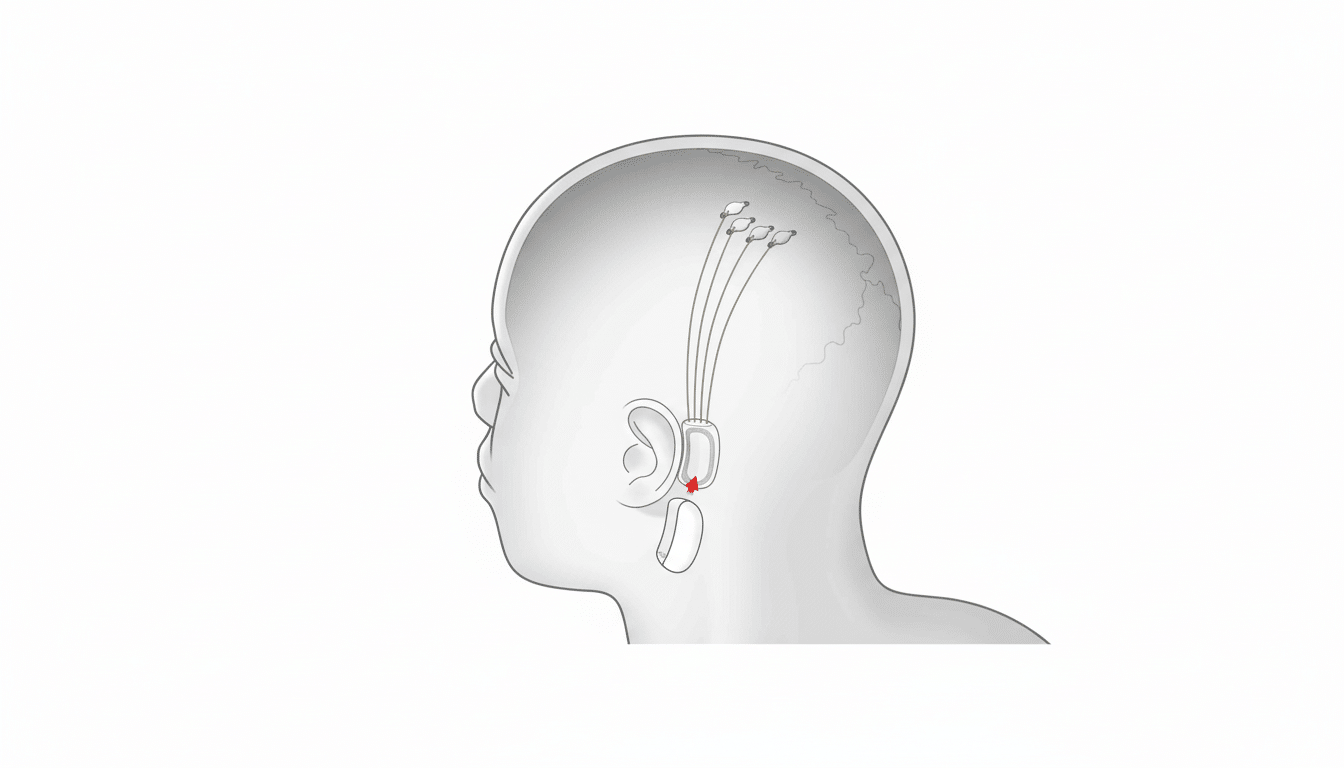

Neuralink’s system is designed to read the electrical activity that encodes intended movement in the brain’s motor regions, using ultra-thin, flexible electrodes. Onboard electronics process these signals and transmit them over a low-power wireless link to a computer, which decodes the patterns into continuous control of a robotic manipulator. The company has previously demonstrated cursor control and typing; guiding a six-degree-of-freedom arm adds the complexity of trajectory planning, grasp initiation, and force control.
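The article does not say how Neuralink’s onboard electronics reduce raw voltages to decodable features. One common approach in intracortical BCIs is to detect "threshold crossings" (samples that dip below a multiple of the channel’s RMS noise level) and count them in short time bins. The sketch below illustrates that generic technique only; the -4.5 × RMS threshold, the bin size, and the function names are assumptions chosen for illustration, not Neuralink’s published parameters.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of one channel's voltage trace."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def threshold_crossings(samples, k=-4.5):
    """Return sample indices where the trace first drops below k * RMS.

    Only the transition from above to below the threshold is counted,
    so a spike spanning several samples yields a single event.
    (k = -4.5 is an illustrative assumption.)
    """
    thresh = k * rms(samples)
    events = []
    for i in range(1, len(samples)):
        if samples[i] < thresh <= samples[i - 1]:
            events.append(i)
    return events


def bin_counts(event_indices, n_samples, bin_size):
    """Count events in non-overlapping bins of `bin_size` samples.

    Trailing samples that do not fill a whole bin are ignored.
    """
    n_bins = n_samples // bin_size
    counts = [0] * n_bins
    for idx in event_indices:
        b = idx // bin_size
        if b < n_bins:
            counts[b] += 1
    return counts


# Usage: a low-amplitude trace with two injected negative spikes.
trace = [0.1, -0.1] * 50
trace[30] = -5.0
trace[70] = -5.0
events = threshold_crossings(trace)
print(events, bin_counts(events, len(trace), 20))
```

Binned counts like these, computed per channel, are the kind of reduced feature vector a downstream decoder would consume; transmitting counts rather than raw voltages is also one way a system could keep a wireless link low-power.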

In practical terms, Wray’s video shows a closed loop: he imagines moving his hand, the decoder interprets that intention, and the robotic arm carries it out in near real time. The system is trained to map neural signals to specific motions. Early sessions are typically spent on calibration, learning which neural features correspond to which movements. With continued use, users often become faster and more accurate as the model and the user adapt to each other.
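The calibration step can be pictured as a regression problem: during calibration, the system records neural features while the intended movement is known (for example, the user imagines following a cued target), then fits a mapping from features to movement. Neuralink has not published its decoder, so the sketch below swaps in a simple ridge-regression linear decoder, a common baseline in the BCI literature. The function names, the single velocity output, and the ridge value are hypothetical choices for illustration.

```python
def solve(a, b):
    """Solve the square system a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    # Back substitution on the upper-triangular system.
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def fit_linear_decoder(features, targets, ridge=1e-3):
    """Fit w minimizing sum((y - w . [1, x])**2) + ridge * |w|**2.

    features: feature vectors recorded during calibration (e.g. binned counts)
    targets:  the intended velocity for each feature vector (hypothetical 1-D output)
    For simplicity the bias weight w[0] is regularized too.
    """
    rows = [[1.0] + list(x) for x in features]  # prepend a bias column
    d = len(rows[0])
    # Normal equations: (X^T X + ridge * I) w = X^T y.
    a = [[sum(r[i] * r[j] for r in rows) + (ridge if i == j else 0.0)
          for j in range(d)] for i in range(d)]
    b = [sum(r[i] * y for r, y in zip(rows, targets)) for i in range(d)]
    return solve(a, b)


def decode(w, x):
    """Predict intended velocity from one feature vector using fitted weights."""
    return w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))
```

A small positive `ridge` keeps the system solvable when channels are redundant or quiet during calibration. The "co-adaptation" the article mentions would correspond to periodically refitting these weights on newer data while the user, in turn, adjusts to the decoder’s behavior.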

Why this could matter for assistive tech

For people living with paralysis or severe motor impairment, even partial restoration of reach-and-grasp can transform daily life. The ALS Association says about 5,000 new cases of ALS are diagnosed each year in the U.S., and many patients ultimately lose the use of their hands. Neural-powered robotic assistance could reduce caregiving burden and restore a degree of autonomy for activities such as self-feeding, dressing, or operating household equipment.

The field is not starting from zero. Academic programs such as BrainGate, with partners including Brown University, Stanford, and the Department of Veterans Affairs, have shown that intracortical brain-computer interfaces can let people with tetraplegia control robotic limbs. The most widely publicized demonstration involved a participant who guided a robotic hand up to her mouth to feed herself. What sets Neuralink apart is a cosmetically discreet, fully implanted unit with high-channel-count electrodes and untethered operation: features that, if they prove safe and reliable outside the lab, would make home use more practical.

How the neural decoding probably works in practice

One important class of intracortical systems extracts features from spikes and local field potentials across hundreds of channels, then feeds this reduced representation into machine-learning decoders. Classical methods such as the Kalman filter estimate continuous movement velocity, while more recent approaches use deep recurrent or convolutional neural networks to better capture nonlinear dynamics and suppress noise. For robotic arms, many research teams add shared autonomy, software that stabilizes end-effector movement or helps align the grasp, so the user can focus on intent rather than micromanaging every joint angle.
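To make the two classical ideas concrete, here is a deliberately reduced sketch: a Kalman filter that tracks a single velocity component observed through many channels, plus a linear "blend" of user and assistance commands as one simple form of shared autonomy. This is not Neuralink’s decoder. The dynamics model, per-channel tuning `h`, `baseline` rates, independent noise variances `r`, and the blending rule are all illustrative assumptions; real systems track multi-dimensional state and typically learn these parameters during calibration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ScalarVelocityKalman:
    """1-D Kalman filter for a hidden velocity seen through many channels.

    Assumed (illustrative) model:
      v_t = a * v_{t-1} + process noise with variance q
      y_i = baseline_i + h_i * v_t + noise with variance r_i, independent per channel
    """
    a: float
    q: float
    h: List[float]
    baseline: List[float]
    r: List[float]
    v: float = 0.0  # current velocity estimate
    p: float = 1.0  # variance of the current estimate

    def step(self, y: List[float]) -> float:
        # Predict: push the previous estimate through the dynamics model.
        v_pred = self.a * self.v
        p_pred = self.a * self.a * self.p + self.q
        # Update: with a scalar state and independent channel noise, the
        # Kalman update equals summing each channel's information (precision).
        info = 1.0 / p_pred
        weighted = v_pred / p_pred
        for yi, hi, bi, ri in zip(y, self.h, self.baseline, self.r):
            info += hi * hi / ri
            weighted += hi * (yi - bi) / ri
        self.p = 1.0 / info
        self.v = self.p * weighted
        return self.v


def blend(user_v: float, assist_v: float, alpha: float) -> float:
    """Linear shared-autonomy arbitration: alpha=0 is pure user control,
    alpha=1 is pure automated assistance (hypothetical rule)."""
    return (1.0 - alpha) * user_v + alpha * assist_v


# Usage: three channels, noiseless observations generated by a true velocity of 1.0.
kf = ScalarVelocityKalman(a=1.0, q=0.01, h=[1.0, -0.5, 2.0],
                          baseline=[10.0, 5.0, 8.0], r=[1.0, 1.0, 1.0])
obs = [b + hi * 1.0 for b, hi in zip(kf.baseline, kf.h)]
for _ in range(50):
    est = kf.step(obs)
print(round(est, 4), blend(est, 0.0, 0.25))
```

The `q` term controls the trade-off the article alludes to: a larger process-noise variance makes the estimate track the user’s changing intent faster but lets more neural noise through, while a smaller one yields smoother but laggier motion. Raising `alpha` near a grasp target is one way shared autonomy can steady the final approach.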

Neuralink has said its implant can record from as many as 1,024 channels, a scale other neurotechnology developers are also pursuing, since more recording sites can improve signal-to-noise ratio and support richer control spaces. Wray’s reported performance on timed dexterity tasks suggests improved throughput and, possibly, more reliable performance with experience, although standardized, peer-reviewed metrics will be needed to compare different systems.
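One way to see why more recording sites can help is an idealized calculation. Assume, purely for illustration, that each of $N$ channels carries the same underlying signal $s$ plus independent noise $n_i$ with variance $\sigma^2$. Averaging the channels keeps the signal but shrinks the noise:

$$
\bar{y} = \frac{1}{N}\sum_{i=1}^{N} \left(s + n_i\right), \qquad
\operatorname{Var}(\bar{y}) = \frac{\sigma^2}{N}, \qquad
\mathrm{SNR}_N = \frac{s}{\sigma/\sqrt{N}} = \sqrt{N}\,\frac{s}{\sigma}
$$

Under this assumption, quadrupling the channel count (say, from 256 to 1,024) doubles the amplitude SNR, since $\sqrt{4} = 2$. Real electrodes record different neurons with correlated noise, so the practical gain is less tidy; much of the benefit of extra channels comes from sampling more distinct neurons, which is what enables richer control spaces.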

Safety oversight and the key open questions ahead

In the U.S., first-in-human studies like this one fall under the Food and Drug Administration’s Investigational Device Exemption (IDE) framework. Neuralink has previously acknowledged an early problem in which some electrode threads retracted, degrading signal quality in one participant; software updates and algorithmic tweaks reportedly mitigated the loss of performance. Longer-term questions remain about durability, infection risk, explant procedures, and how well signals hold up over years.

Accessibility and reimbursement are open questions as well. Even with further performance gains, integrating brain-controlled robotics into care pathways will require evidence from independent studies, clear risk-benefit profiles, and agreement with insurers and public health authorities.

What comes next for brain-controlled assistive robots

Wray’s clip offers a clear public glimpse of Neuralink’s effort to move beyond screen-based interaction. The company has reported implanting its device in a growing number of participants, and objective measures of performance on functional tasks, along with peer-reviewed publications, will be essential to vetting claims about the system’s efficacy. If the technology proves safe, durable, and reproducible outside the laboratory, brain-controlled assistive robots could move from research prototypes to practical tools for daily life.

For now, the takeaway is simple: converting neural intent into purposeful, multi-joint movement has become a reality beyond the laboratory.

For those who need it most, it is starting to look like a realistic path to greater independence.