Humanoid robots are getting sleeker, smarter and better at repeating rehearsed factory tricks, but the average home robot still botches your socks. It’s not for lack of ambition or compute. It’s Moravec’s Paradox, a defining idea in robotics from the 1980s, which holds that what humans find easy (perception, dexterous manipulation, balance) is precisely what machines find hard. Laundry, with its slippery fabrics and unruly piles, is a crash course in those “easy for us, hard for robots” skills.

Reasons Machines Struggle With Simple Chores

Roboticist Hans Moravec wrote back in 1988 that for computers, high-level reasoning is cheap while sensorimotor intelligence is fiendishly expensive. The contrast turns our intuition inside out: A robot can solve a thousand equations in the blink of an eye, but to pick up a crumpled T-shirt it must depend on sensitive tactile perception and real-time control, and on a rich world model that predicts how cloth will wrinkle, drape and catch.

Decades of work by pioneers such as Rodney Brooks showed that animal-like perception and control require layered feedback loops, not just computation in isolation. In homes, those loops must contend with clutter, reflections off stainless steel, deformable objects and endless novelty. The things we don’t think about, such as wrinkles, seams and textures, are the very things the robot has to learn to measure and model.

Laundry Is a Deformable-Object Gauntlet for Robots

Folding laundry involves a challenging trifecta of problems: occlusion-aware recognition, deformable dynamics modeling, and contact-rich manipulation without tearing or slipping. A towel can take on a nearly endless number of configurations; two corners can look identical and still not fold the same way. The robot needs tactile cues to sense edges, must grip just firmly enough to slide fabric without stretching it, and has to compensate when a sleeve conceals a sock.

That is why training data has grown into a cottage industry. In an investigation, the Los Angeles Times described offshore teams wearing head-mounted cameras and recording hours of towel folding and dish loading so that robots can learn subtleties such as finger pressure and how fabric moves. Google Robotics has released policy-learning systems such as RT-1, which was trained on more than 130K real robot episodes, and RT-2, which transfers knowledge from web-scale vision-language models to actuation. These are significant advances, but long-horizon tasks involving deformable objects remain brittle outside the lab.

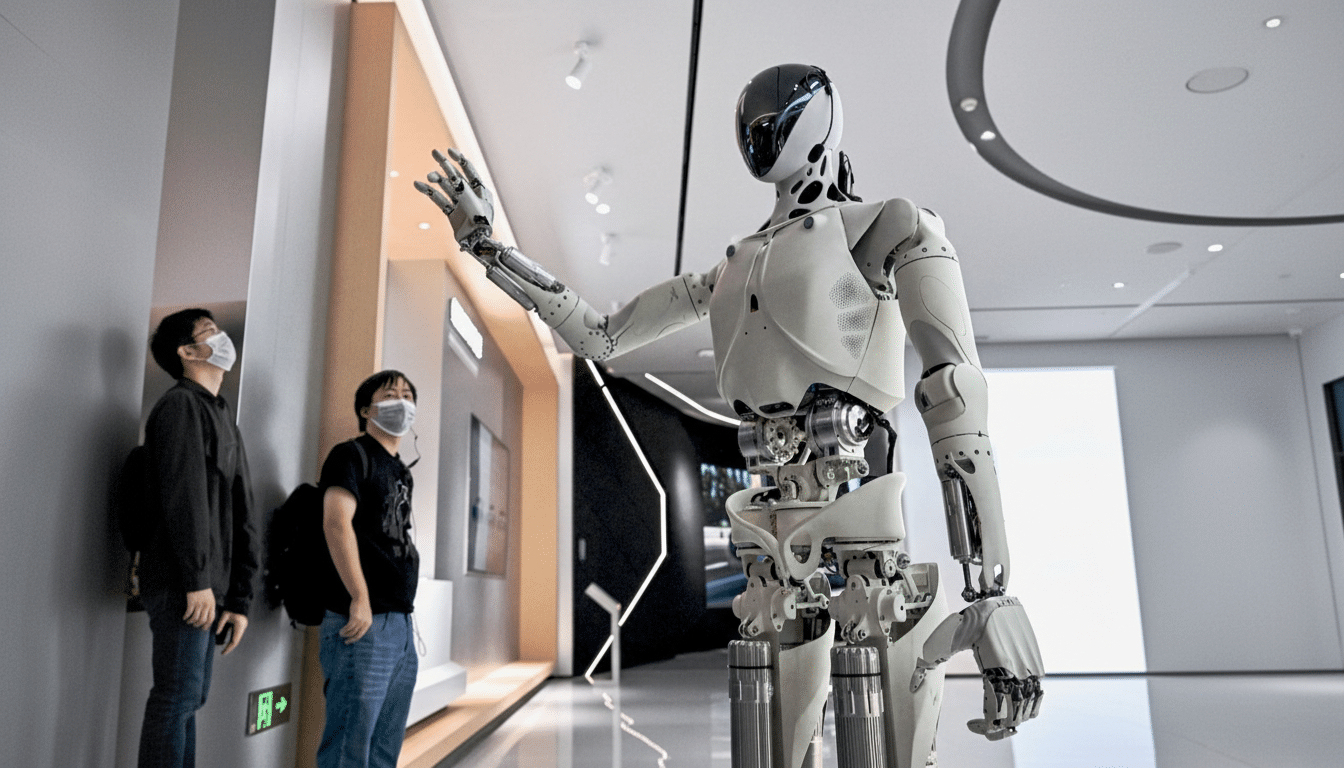

Humanoids Impress and Yet Also Struggle at Home

Demos of humanoids from big labs and startups (the electric Atlas from Boston Dynamics, Optimus from Tesla, Digit from Agility Robotics and prototypes tied to automakers) show incremental advances in locomotion and staged pick-and-place. But household chores are unforgiving. Even small errors can lead to, say, dropped plates or jammed dish racks. Most public demos are also hand-scripted, teleoperated or confined to sterile environments due to safety concerns.

Industrial robots, meanwhile, quietly excel wherever the world is controlled. Millions of them (not Roombas or Amazon Kiva systems, but machines that can cost up to half a million dollars) already work in facilities around the world, moving goods and handling tasks that range from work on huge turbines to delicate drilling. Bring that arm home, and the assumptions fall away: the lighting is different, objects are one of a kind and nothing stays where you left it.

AI Aids Robotics but Is Not a Silver Bullet

AI has narrowed the gap on perception and planning. Foundation models can tag objects, understand instructions and even generate action proposals from video. NVIDIA’s Project GR00T and similar efforts seek to give humanoids a general prior for dexterous skills. MIT CSAIL, Stanford, UC Berkeley and Toyota Research Institute have published strong results on grasping, whole-body control and cloth manipulation.

Yet three hurdles remain.

- First, sim-to-real transfer: simulations typically don’t capture the messy physics of fabric and friction.

- Second, sensing: most robot hands lack dense tactile arrays like our skin, though emerging technologies such as GelSight and SynTouch show promise.

- Third, reliability: homes demand a near-zero rate of catastrophic failure. A robot that gets it right 95% of the time still spills detergent on the floor too often.
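The reliability hurdle is easy to underestimate, and a little arithmetic shows why. The sketch below is illustrative only: it assumes a chore breaks into independent steps that each succeed with the same probability, and the 10-step load of laundry and the 100-load count are hypothetical figures chosen for the example, not measurements from any real robot.

```python
def task_success_probability(step_success: float, num_steps: int) -> float:
    """Chance that every step of a chore succeeds.

    Assumes steps are independent and equally reliable, which is a
    simplification; real failures are often correlated.
    """
    if not 0.0 <= step_success <= 1.0:
        raise ValueError("step_success must be between 0 and 1")
    if num_steps < 0:
        raise ValueError("num_steps must be non-negative")
    return step_success ** num_steps


def expected_failures(task_success: float, runs: int) -> float:
    """Average number of failed runs out of `runs` attempts."""
    return (1.0 - task_success) * runs


# Reading 1: the robot completes the whole chore 95% of the time.
# Over a hypothetical 100 loads, that is about 5 botched loads.
print(round(expected_failures(0.95, 100), 2))

# Reading 2: each step is 95% reliable and a load takes a
# hypothetical 10 steps. The whole load then succeeds only
# about 60% of the time (0.95 ** 10 is roughly 0.5987).
print(round(task_success_probability(0.95, 10), 4))
```

Under either reading, 95% is far from the near-zero failure rate a household would tolerate, and the second reading shows how quickly small per-step errors compound over a long-horizon task.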

What Really Closes the Gap Between Robots and Homes

More and more experts are looking toward a hybrid road. Part one is better robot bodies: soft, compliant grippers with fingers that can see their own tips; wrists that register shear and slip; tighter feedback loops; and world models built to predict how cloth moves. Part two is smarter environments: laundry bins that present clothes flat, appliances with fiducial markers, furniture built to accommodate a robot. We don’t expect the dexterity of a violinist from a power drill; homes may have to go partway to meet robots.

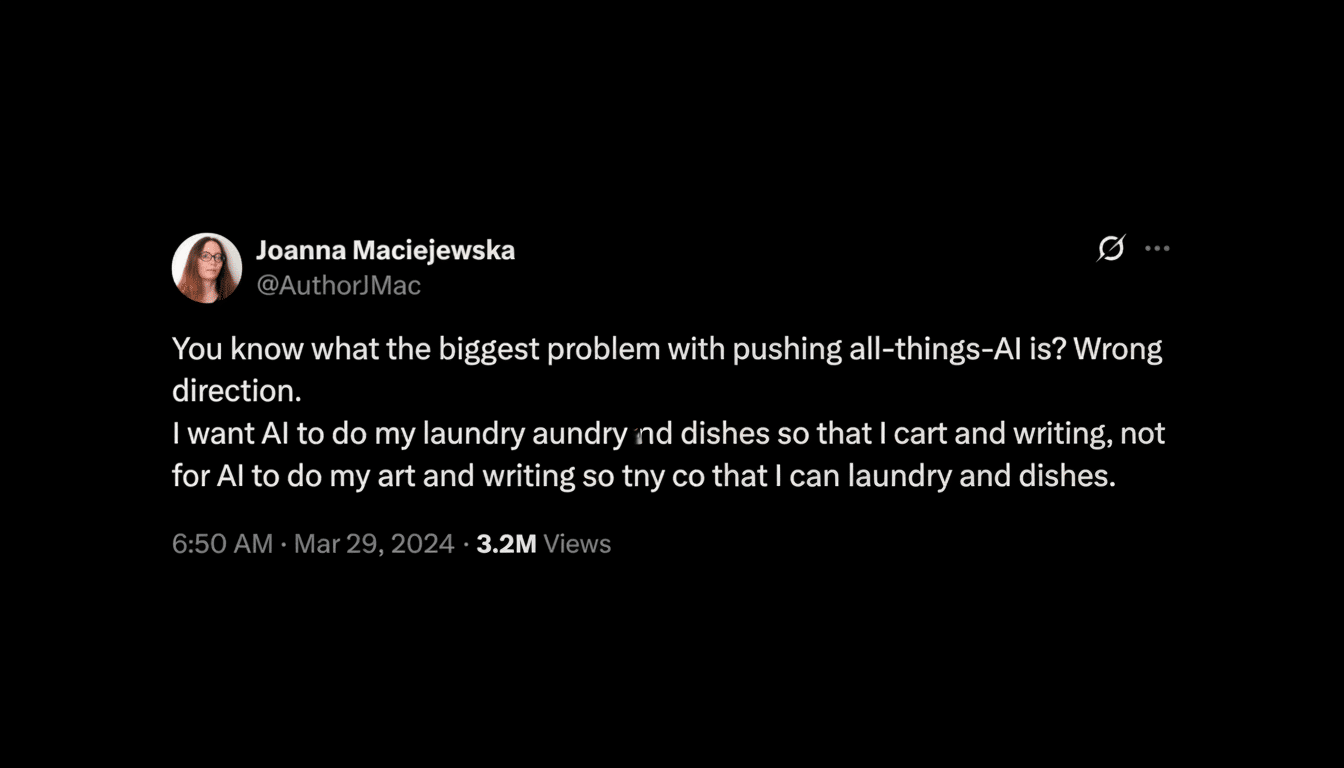

There’s also the issue of size and shape. Humanoids are appealing because our world is built for human bodies, but machines made for a specific task often win out. Robot vacuums came to rule the floor, and they did so by recasting the chore. In the short term, a more realistic scenario is probably a set of modest specialized helpers (one that sorts, another that carries laundry to and from the washer-dryer, a third that folds with help from a guided surface) working together under the coordination of a home orchestration AI.

Moravec’s Paradox hasn’t been debunked; it has been clarified. Intelligence isn’t just about making inferences; it is also perception and action in a chaotic world. Until robots achieve the sensorimotor mastery that evolution gave us for free, your laundry basket will serve as a reality check. The good news: The gap is closing, one neatly folded bath towel at a time, thanks to richer data, tactile hardware and AI that reasons over physics.