A senior Microsoft executive argues that agentic AI is reshaping startup economics as dramatically as the public cloud did, collapsing the cost and time needed to launch and scale new companies. Amanda Silver, corporate vice president for Microsoft’s CoreAI, says startups can now route whole categories of work to autonomous and semi-autonomous systems, allowing small teams to punch far above their headcount.

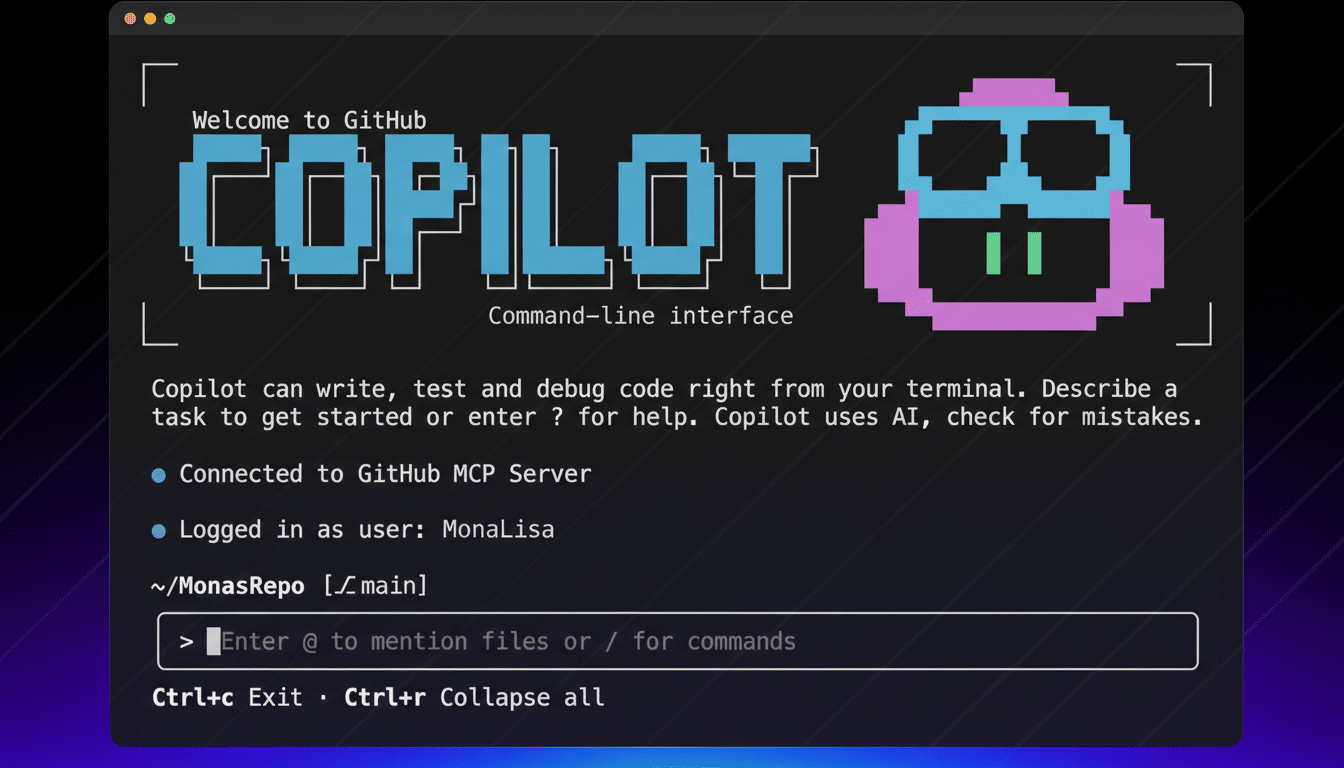

Silver, who helped shepherd GitHub Copilot and now oversees Azure AI Foundry tooling for building and governing enterprise agents, says the early winners are targeting unglamorous but expensive workflows: code maintenance, live operations, customer support and internal compliance. The result, she contends, is a leaner labor model and higher velocity without sacrificing oversight.

Agentic Systems Cut Costs And Expand Capacity

Founders have long trimmed capital outlays by renting compute in the cloud. AI agents push that logic into day-to-day operations. Repetitive, rules-based work—triaging tickets, analyzing contracts against policy, preparing marketing variants—can be handed to agents that plan multistep tasks, call tools, and ask for help only when confidence drops.

External studies and forecasts support the productivity thesis. GitHub reported in a controlled study that developers using Copilot completed a standardized coding task 55% faster. McKinsey estimates generative AI could add $2.6 trillion to $4.4 trillion in annual value across the use cases it analyzed, including functions like customer service and software engineering, while Goldman Sachs projects up to 25% of work tasks could be automated or augmented. That macro picture aligns with what Silver sees inside enterprise deployments: more output per person and a bias toward automation-first workflows.

Automating The Unsexy Work Of Software Development

One of the most immediate wins, Silver says, is automated codebase upkeep. Startups routinely lag on dependency upgrades, SDK migrations and security patches because the work is tedious and brittle. Multistep agents can now scan a repository, plan changes, run tests, propose targeted pull requests and iterate on reviewer feedback—compressing effort dramatically. In scenarios like dependency modernization, she cites time reductions on the order of 70–80% when agents are properly instrumented and allowed to operate end to end.

Operations is another hotspot. AIOps agents monitor telemetry, correlate anomalies, recommend rollbacks and even execute runbooks when guardrails permit. That shifts human attention to the genuinely novel incidents and reduces pager fatigue. Industry reports from firms like Gartner and service-management vendors point to lower mean time to resolution when AI is paired with standard operating procedures—an efficiency gain with direct revenue implications for any team selling uptime.

Why Adoption Lags And How To Unblock It At Scale

Despite clear ROI cases, agent deployments have been slower than the hype suggested. Silver says the blocker is rarely model capability; it’s product clarity. Teams rush to “add an agent” before specifying the job to be done, the decision boundary, the acceptable error rate, and the data the agent may use. Without that framing, evaluations are fuzzy and trust never materializes.

The emerging best practice looks unglamorous: pick a narrow, high-volume workflow; define success metrics and failure modes; provide the right tools and context; and build an evaluation harness with golden datasets, trace logging and human-in-the-loop checkpoints. Retrieval-based designs keep responses grounded. Policy engines and approvals govern sensitive actions like committing code or incurring contractual obligations. Frameworks such as the NIST AI Risk Management Framework help formalize these guardrails so pilots can graduate into production.
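To make the evaluation step concrete, here is a minimal sketch of a golden-dataset harness for a ticket-routing workflow. Everything in it is an illustrative assumption rather than anything Silver or Microsoft describes: the `GoldenCase` type, the `keyword_router` stand-in for an agent, the sample tickets and the 10% error budget are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class GoldenCase:
    """One labeled example: an input plus the answer a reviewer signed off on."""
    ticket: str
    expected_queue: str


def evaluate(agent: Callable[[str], str], cases: list[GoldenCase],
             max_error_rate: float) -> dict:
    """Run the agent over every golden case and compare against the error budget."""
    if not cases:
        raise ValueError("golden dataset is empty")
    failures = []
    for case in cases:
        actual = agent(case.ticket)
        if actual != case.expected_queue:
            # Keep a trace of each miss so reviewers can inspect failure modes.
            failures.append({"ticket": case.ticket,
                             "expected": case.expected_queue,
                             "actual": actual})
    error_rate = len(failures) / len(cases)
    return {"error_rate": error_rate,
            "passed": error_rate <= max_error_rate,
            "failures": failures}


def keyword_router(ticket: str) -> str:
    """Hypothetical stand-in for a real agent: routes tickets by keyword."""
    text = ticket.lower()
    if "refund" in text:
        return "billing"
    if "password" in text:
        return "account"
    return "general"


# Hypothetical golden dataset; a real one would hold many reviewed examples.
GOLDEN = [
    GoldenCase("I want a refund for my last invoice", "billing"),
    GoldenCase("Cannot reset my password", "account"),
    GoldenCase("Where is the API documentation?", "general"),
    GoldenCase("I was charged twice this month", "billing"),
]

report = evaluate(keyword_router, GOLDEN, max_error_rate=0.10)
print(report["error_rate"], report["passed"])
for failure in report["failures"]:
    print(failure)
```

In this toy run the router misses the double-charge ticket, so the harness reports a failure against the stated budget and records which case went wrong. That is the point of the exercise: the acceptable error rate is written down before the agent ships, and every miss leaves a trace a human can review.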

Human oversight is not disappearing; it is moving to the edge cases. In routine scenarios—say, visual inspection for a product return—computer vision can handle the bulk of decisions with only the ambiguous cases escalated. In critical paths like legal commitments or production deploys, human approval remains mandatory while agents automate the surrounding paperwork, testing and analysis.
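The split between routine and critical paths can be sketched as a small routing policy. This is a minimal illustration under stated assumptions, not a description of any vendor's product: the 0.90 confidence threshold, the action names and the `Decision` and `Route` types are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO = "auto"                  # the agent may act on its own
    HUMAN_REVIEW = "human_review"  # a person must approve first


# Hypothetical policy values; a real deployment would tune these per workflow.
CONFIDENCE_THRESHOLD = 0.90
ALWAYS_REVIEW = {"production_deploy", "legal_commitment"}


@dataclass(frozen=True)
class Decision:
    """A proposed action and the agent's self-reported confidence (0 to 1)."""
    action: str
    confidence: float


def route(decision: Decision) -> Route:
    """Decide whether a proposed action runs automatically or waits for a human."""
    # Critical paths always require approval, however confident the agent is.
    if decision.action in ALWAYS_REVIEW:
        return Route.HUMAN_REVIEW
    # Ambiguous routine cases are escalated instead of guessed at.
    if decision.confidence < CONFIDENCE_THRESHOLD:
        return Route.HUMAN_REVIEW
    return Route.AUTO


print(route(Decision("approve_return", 0.97)))     # clear-cut routine case
print(route(Decision("approve_return", 0.62)))     # ambiguous routine case
print(route(Decision("production_deploy", 0.99)))  # critical path
```

Checking the critical-action list before the confidence score is a deliberate choice in this sketch: a high score should never be enough to bypass a mandatory approval.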

New Unit Economics For Founders Building With AI

AI changes the cost stack. Instead of hiring for every function, startups substitute compute and orchestration for some of that labor. That shifts variable costs into model inference, vector search, and evaluation runs. Founders now tune unit economics not just with headcount and hosting, but with prompt design, model choice, context length, caching, batching and fine-tuning strategies.

Two implications stand out. First, revenue per employee becomes a more revealing metric than raw headcount growth; lean teams can credibly attack bigger markets. Second, gross margins hinge on model efficiency: swapping a general-purpose LLM for a smaller, task-specific model—or offloading to an open-source model deployed on GPUs you control—can slash inference cost while preserving quality. Azure AI Foundry and similar platforms are racing to make those trade-offs auditable, with scenario testing, cost tracking and governance integrated into the build loop.
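A back-of-the-envelope calculation shows why model choice moves gross margin so sharply. The sketch below is illustrative only: the per-token prices, token counts and the $0.10 price charged per task are hypothetical numbers, not quotes from any provider, and the caching model assumes a cache hit costs nothing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPricing:
    """Hypothetical prices in US dollars per one million tokens."""
    input_per_million: float
    output_per_million: float


def cost_per_task(pricing: ModelPricing, input_tokens: int, output_tokens: int,
                  cache_hit_rate: float = 0.0) -> float:
    """Expected inference cost of one task, assuming cache hits are free."""
    if not 0.0 <= cache_hit_rate <= 1.0:
        raise ValueError("cache_hit_rate must be between 0 and 1")
    full_cost = (input_tokens * pricing.input_per_million
                 + output_tokens * pricing.output_per_million) / 1_000_000
    return full_cost * (1.0 - cache_hit_rate)


def gross_margin(price_per_task: float, cost: float) -> float:
    """Share of revenue left after inference cost (other costs ignored)."""
    return (price_per_task - cost) / price_per_task


# All figures below are made up for illustration.
LARGE = ModelPricing(input_per_million=5.00, output_per_million=15.00)
SMALL = ModelPricing(input_per_million=0.50, output_per_million=1.50)
PRICE_PER_TASK = 0.10

large_cost = cost_per_task(LARGE, input_tokens=4000, output_tokens=1000)
small_cost = cost_per_task(SMALL, input_tokens=4000, output_tokens=1000)
cached_large_cost = cost_per_task(LARGE, 4000, 1000, cache_hit_rate=0.5)

for name, cost in [("large", large_cost), ("small", small_cost),
                   ("large + 50% cache", cached_large_cost)]:
    print(f"{name}: ${cost:.4f} per task, margin {gross_margin(PRICE_PER_TASK, cost):.1%}")
```

Under these assumed numbers, the large model costs about 3.5 cents per task and the small one about a third of a cent, which moves inference-only gross margin from roughly 65% to above 96%. The comparison only holds if the smaller model clears the same quality bar, which is why cost tracking and evaluation belong in the same loop.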

A Playbook For The Next Wave Of Agentic AI

Silver’s view amounts to a pragmatic playbook: start with narrow agents that own outcomes, not just suggestions; instrument everything; measure ROI early; and keep people in the loop where risk demands it. Prioritize workflows that are frequent, rules-bound and expensive when done manually. Treat models as components to be swapped for cost and quality, and make evaluation a first-class discipline—not an afterthought.

If the cloud unbundled hardware from ambition, agents are unbundling labor from momentum. For founders who design around that reality, the math of building a startup no longer assumes a large team. It assumes a capable one—with agents doing the busywork and humans steering the business.