Microsoft is expanding the roster of Copilot models, adding Anthropic’s Claude Opus 4.1 and Claude Sonnet 4 alongside its existing OpenAI options. The move gives enterprises a multi-model switch inside Copilot, so teams can select the best engine for research, coding, content, or agent workflows without having to leave the Microsoft ecosystem.

Why this is important for Copilot customers

Until now, the Copilot experience has been built largely on OpenAI’s models. Adding Anthropic marks a deliberate multi-model strategy: reduce single-vendor risk, broaden coverage across task types, and give buyers more control over trade-offs among cost, latency, and safety. It also fits neatly with regulatory scrutiny of dominant AI partnerships, a concern that both the U.S. Federal Trade Commission and the UK Competition and Markets Authority have raised.

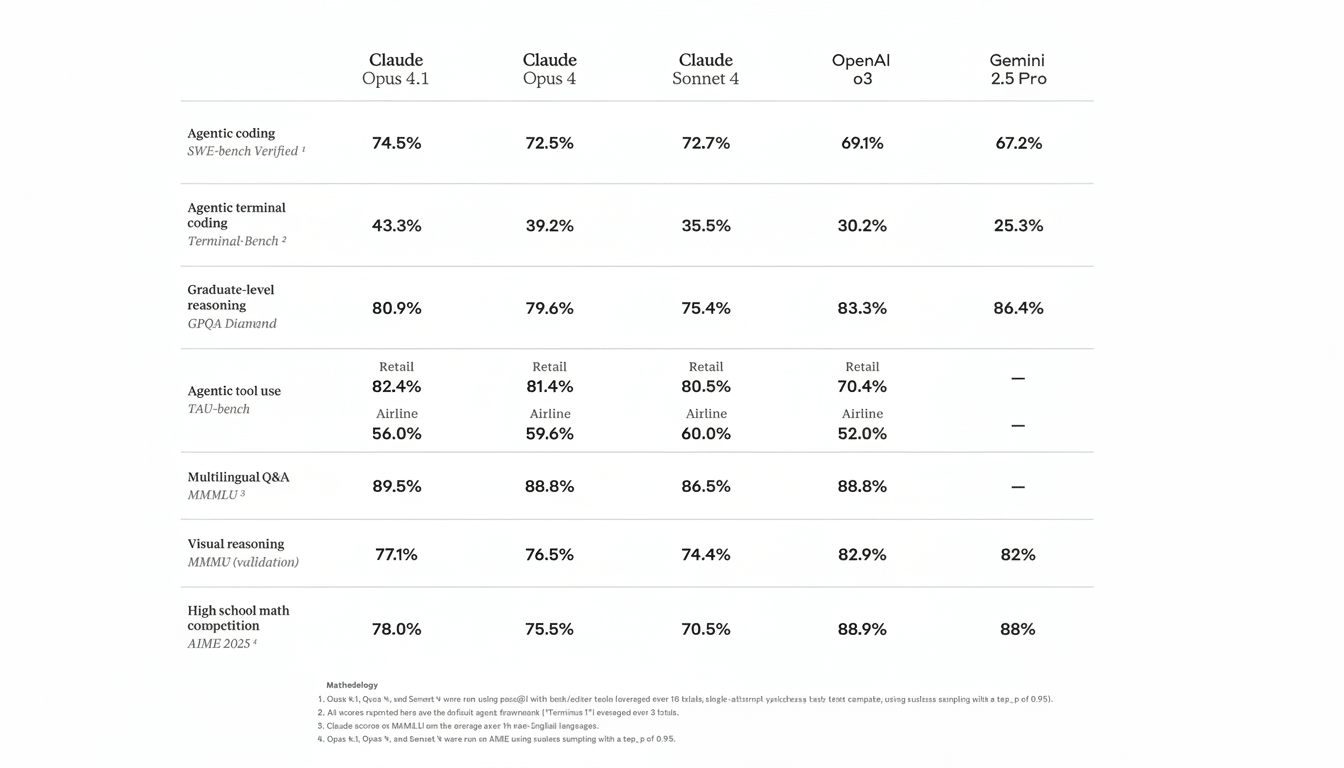

Choice here isn’t just branding. Claude Opus 4.1 targets complex reasoning, long-horizon planning, and deep code or architecture work. Claude Sonnet 4 is designed for everyday development, large-scale data processing, and content generation. OpenAI’s latest deep-reasoning models remain available, and customers can choose per project, per assistant, or even per message, depending on how Microsoft exposes the selector inside Copilot and Copilot Studio.

For a product team, that might mean using Opus for hairy refactors or threat modeling, Sonnet for batch transformations of knowledge-base content, and OpenAI’s reasoning models for quick ideation and summarization. Similarly, legal and compliance teams can route their most sensitive workloads to the model that best fits their governance posture and document that decision in policy.

Anthropic’s appeal: safety, context and tooling

Anthropic has positioned itself around “constitutional AI,” an approach in which models are trained to follow an explicit set of written principles, giving operators transparent guardrails they can steer. That framing appeals to regulated industries, where predictable refusal behavior and conservative outputs are minimum requirements rather than nice-to-haves. It also appeals to practitioners who find Anthropic’s model cards and system prompts easier to adapt to enterprise policy.

Capability-wise, recent Claude generations have performed well on long-context tasks, code comprehension, and document-heavy analysis. In practice, that means a Copilot agent combined with retrieval-augmented generation can parse large SharePoint libraries, product specs, and meeting transcripts while hallucinating or losing context less often under heavy load.

Microsoft’s value-add continues to be the wrapper: tenant-aware grounding, audit logs, data loss prevention, and role-based access inherited from Microsoft 365. According to Microsoft’s documentation, Copilot respects organizational boundaries and does not, by default, use customer data to train third-party foundation models, which is a central concern for buyers writing AI acceptable-use policies.

A multi-model turn in the platform race

The industry trend is unmistakable. Cloud platforms now compete on pluralism: Amazon Bedrock, Google’s Vertex AI, Databricks Model Serving, and Snowflake Cortex all offer menus of foundation models with policy and routing layers on top. Microsoft is now bringing that approach to its end-user-facing assistant as well, not just to its developer services.

The benefits for Copilot customers are threefold:

- Performance coverage: different models excel on different benchmarks and real-world prompts.

- Resilience: supply or policy disruptions at one provider don’t bring critical workflows to a halt.

- Economics: a team can use a heavyweight model for sophisticated reasoning and fall back to a lighter one for routine work, managing spend without compromising outcomes.
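The resilience point can be illustrated with a simple failover chain. This is a minimal sketch, not how Copilot handles provider outages: `ProviderError`, `call_with_fallback`, and the two stub functions are hypothetical names standing in for real model clients.

```python
from typing import Callable, List, Sequence, Tuple


class ProviderError(Exception):
    """Raised by a provider stub when it cannot serve a request."""


# A provider is a (name, callable) pair; the callable maps a prompt to text.
Provider = Tuple[str, Callable[[str], str]]


def call_with_fallback(prompt: str, providers: Sequence[Provider]) -> Tuple[str, str]:
    """Try providers in order; return (provider_name, response) from the first success."""
    errors: List[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            # Record the failure and move on to the next provider in the chain.
            errors.append(f"{name}: {exc}")
    raise ProviderError("all providers failed: " + "; ".join(errors))


# Hypothetical stand-ins for real model clients.
def primary_model(prompt: str) -> str:
    raise ProviderError("service unavailable")


def secondary_model(prompt: str) -> str:
    return f"[secondary] {prompt}"


if __name__ == "__main__":
    used, answer = call_with_fallback(
        "Summarize the Q3 report",
        [("primary", primary_model), ("secondary", secondary_model)],
    )
    print(used, answer)
```

The ordering of the provider list is the policy here: whichever model an administrator prefers goes first, and later entries are used only when earlier ones fail.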

Expect Microsoft to rely on evaluation tooling (prompt tests, A/B splits, cost and latency dashboards) to help admins set defaults and guardrails at the tenant or project level. The endgame is “model routing” that is largely invisible to end users, with IT dictating when Copilot should prefer Opus, Sonnet, or an OpenAI model based on task, content type, or sensitivity.
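A policy-driven router of the kind described here could look roughly like the sketch below. Everything in it is an assumption made for illustration: the model identifier strings, the `Request` fields, the rule set, and the choice of default are hypothetical and do not reflect actual Copilot or Copilot Studio settings.

```python
from dataclasses import dataclass

# Hypothetical model identifiers, not real Copilot configuration values.
OPUS = "claude-opus-4.1"
SONNET = "claude-sonnet-4"
OPENAI_REASONING = "openai-reasoning"

# Assumption: the tenant's governance policy has approved one model for restricted data.
RESTRICTED_MODEL = OPUS
DEFAULT_MODEL = SONNET


@dataclass(frozen=True)
class Request:
    task: str          # e.g. "refactor", "batch_transform", "summarize"
    content_type: str  # e.g. "code", "document", "chat"
    sensitivity: str   # "public", "internal", or "restricted"


# Ordered rules: the first predicate that matches decides the model.
RULES = [
    (lambda r: r.sensitivity == "restricted", RESTRICTED_MODEL),
    (lambda r: r.task in {"refactor", "threat_model"}, OPUS),
    (lambda r: r.task == "batch_transform", SONNET),
    (lambda r: r.task in {"summarize", "brainstorm"}, OPENAI_REASONING),
]


def route(request: Request) -> str:
    """Return the model chosen by the first matching rule, else the default."""
    for predicate, model in RULES:
        if predicate(request):
            return model
    return DEFAULT_MODEL


if __name__ == "__main__":
    print(route(Request("refactor", "code", "internal")))
    print(route(Request("summarize", "document", "restricted")))
```

Putting the sensitivity rule first means governance constraints override task-based preferences, which matches the idea of IT setting guardrails that end users never see.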

Developers and admins — what changes to expect

In Copilot Studio, builders should see Anthropic as another first-class target for custom copilots and enterprise agents. That includes controls for retrieval sources, system prompts, and evaluation sets that mirror real business documents and exceptions. The best rollouts will combine model choice with strong prompt governance, versioning, and human-in-the-loop review for higher-stakes actions.
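To make prompt versioning and human-in-the-loop review concrete, here is a minimal sketch under stated assumptions. `PromptRegistry`, `HIGH_STAKES_ACTIONS`, and `execute` are hypothetical names invented for this example and are not Copilot Studio features.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: int
    text: str


@dataclass
class PromptRegistry:
    """Append-only store: publishing never overwrites an earlier version."""
    _history: Dict[str, List[PromptVersion]] = field(default_factory=dict)

    def publish(self, name: str, text: str) -> PromptVersion:
        versions = self._history.setdefault(name, [])
        entry = PromptVersion(name, len(versions) + 1, text)
        versions.append(entry)
        return entry

    def latest(self, name: str) -> PromptVersion:
        return self._history[name][-1]


# Hypothetical set of actions that need sign-off before they run.
HIGH_STAKES_ACTIONS = {"send_external_email", "delete_records"}


def execute(action: str, run: Callable[[], str], approve: Callable[[str], bool]) -> str:
    """Run low-stakes actions directly; ask a human reviewer before high-stakes ones."""
    if action in HIGH_STAKES_ACTIONS and not approve(action):
        return "blocked: human approval required"
    return run()
```

In this sketch the `approve` callback stands in for whatever review step an organization uses, such as a ticket or a chat confirmation, while the registry keeps every published prompt so a change can be audited or rolled back.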

Procurement teams will want clear knobs: per-model SLAs, regional availability, and cost profiles. Security teams will examine how prompts, completions, and embeddings are handled in transit and at rest, and whether data residency and retention settings apply consistently across Anthropic and OpenAI endpoints in Copilot.

The competitive read on Microsoft’s Copilot shift

Rivals are already running similar playbooks. Google has been blending several Gemini tiers into Workspace, while AWS positions Amazon Q as a front door to Bedrock’s model catalog. Microsoft’s inclusion of Anthropic in Copilot keeps it competitive on that front while also answering enterprise requests for choice and control.

Copilot’s use case remains a simple one: to help people at every level of an organization make better decisions.

The takeaway is just as straightforward: Copilot is moving from an assistant powered by one family of models to a policy-driven orchestration layer across many.

For customers, that means a better fit for purpose. For Microsoft, it’s a strategic hedge, and a small step toward making AI in the enterprise less of a monolith and more of a toolkit.