Memory has become the new bottleneck in consumer tech, and Micron says relief is farther off than most buyers hoped. The company’s leadership warns that the RAM crunch squeezing PCs, consoles, and smartphones will not ease meaningfully until new capacity comes online, and that will take years, not quarters.

In a recent interview, a Micron executive said AI data centers are absorbing nearly all incremental supply, pushing the company to prioritize high-margin server memory and HBM stacks over mainstream PC and mobile DRAM. New cleanroom space in Idaho is slated to come online in 2027, but Micron does not expect meaningful output until 2028, after tool installation and customer qualification. Until then, tight supply is the baseline.

Why AI Workloads Are Absorbing Most Memory Supply

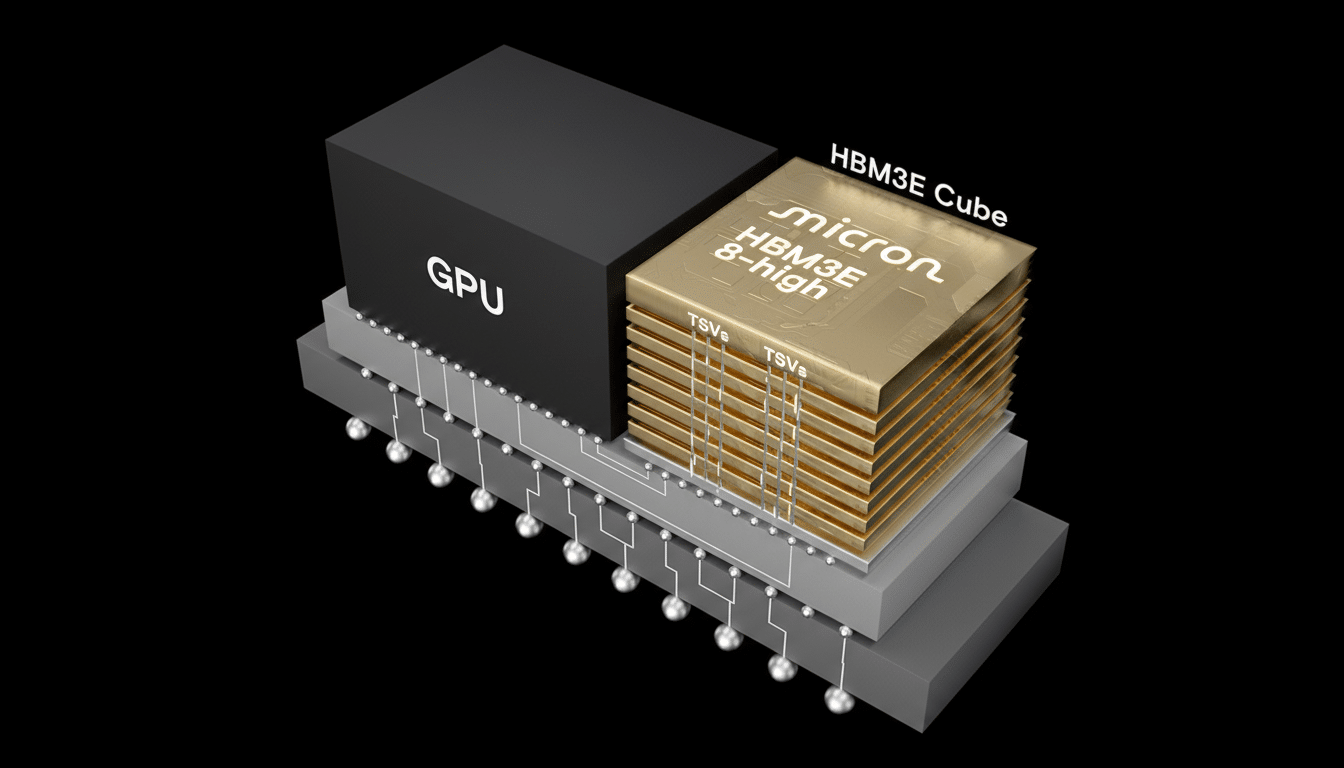

AI accelerators are ravenous for memory bandwidth and capacity. Each top-tier GPU cluster consumes vast amounts of DRAM, increasingly in the form of HBM, which stacks multiple dies with through-silicon vias and advanced packaging. That makes every wafer more valuable when pointed at AI, nudging suppliers to shift allocation away from commodity DDR5 and LPDDR5 used in consumer devices.

Analysts at TrendForce have tracked a surge in HBM orders and note that HBM’s share of DRAM revenue has been climbing rapidly, with expectations that it could approach one-third of industry revenue as AI deployments scale. Because HBM consumes considerably more wafer area per bit than standard DRAM, that mix shift tightens availability of standard RAM even if overall wafer starts do not fall.

Micron’s Capacity Roadmap Points To A Longer Slog

Building out memory supply is not as simple as flipping a switch. Micron says the critical path is cleanroom space and tool installation, followed by months of yield tuning and customer qualification on new nodes. The company has broken ground on a major Idaho facility targeting initial readiness in mid-2027, but it emphasizes that meaningful, customer-qualified bit output will arrive closer to 2028.

Compounding the delay, next-generation DRAM nodes (1-gamma and beyond) rely on more EUV layers and more complex patterning, which lengthens wafer cycle times. That slows bit growth per wafer just as demand is rebounding. After deep CapEx cuts in 2023, the industry is only now re-accelerating investment, and that spending does not convert into shippable bits overnight.

Price Outlook and Who Will Pay More for Memory

The price picture has already turned. TrendForce reported double-digit DRAM contract price increases across multiple quarters, and retail DDR5 kits for PC builders have risen notably from last year’s lows. Gartner and IDC both cited the recovery in memory average selling prices (ASPs) as a key driver of semiconductor revenue growth, a polite way of saying components cost more for everyone downstream.

Consumers feel it in multiple ways. Boutique laptop makers have publicly flagged higher bill-of-materials costs tied to memory, and modular brands like Framework have adjusted configurations and pricing to keep systems in stock. On the gaming side, industry chatter suggests console roadmaps are being stress-tested against elevated RAM costs, which can influence either launch timing or memory configurations.

Rivals Are Not Stepping In To Flood The Market

Micron is not alone in prioritizing AI. Samsung and SK hynix, the two largest DRAM suppliers, have signaled disciplined supply growth and an aggressive tilt toward HBM and server DRAM on their earnings calls. Even as these suppliers add capacity, advanced packaging constraints and HBM’s higher profitability make it unlikely that they will overproduce standard PC and mobile RAM in the near term.

Put simply, the industry has a strong incentive to keep supply and demand tightly balanced while AI demand remains red hot. Unless that demand drops suddenly, pricing should stay firm through the next several product cycles.

What To Expect If You Are Buying Memory Soon

For PC builders and IT buyers, plan for RAM to remain a premium line item. If you must buy, lock in configurations early and consider prebuilt systems from approved vendors, since OEMs sometimes secure better component pricing through their supply contracts. If you can wait, watch inventory levels and promotional windows rather than expecting a broad price collapse.

For developers and enterprises deploying AI, the calculus is different. Budget for memory as a strategic resource and explore approaches that get more out of each dollar of bandwidth and capacity, such as tiered memory, sparsity, and model compression. Every gigabyte you do not need is real money saved in today’s market.

Bottom Line: Elevated Memory Prices Are Likely to Persist

Micron’s message is blunt: the RAM shortage has structural roots in the AI boom and the long lead times of modern fabs. With new capacity not expected to deliver meaningful relief until 2028, elevated memory prices are likely to persist. Plan purchases accordingly, and do not count on a quick return to the fire-sale deals of the last cycle.