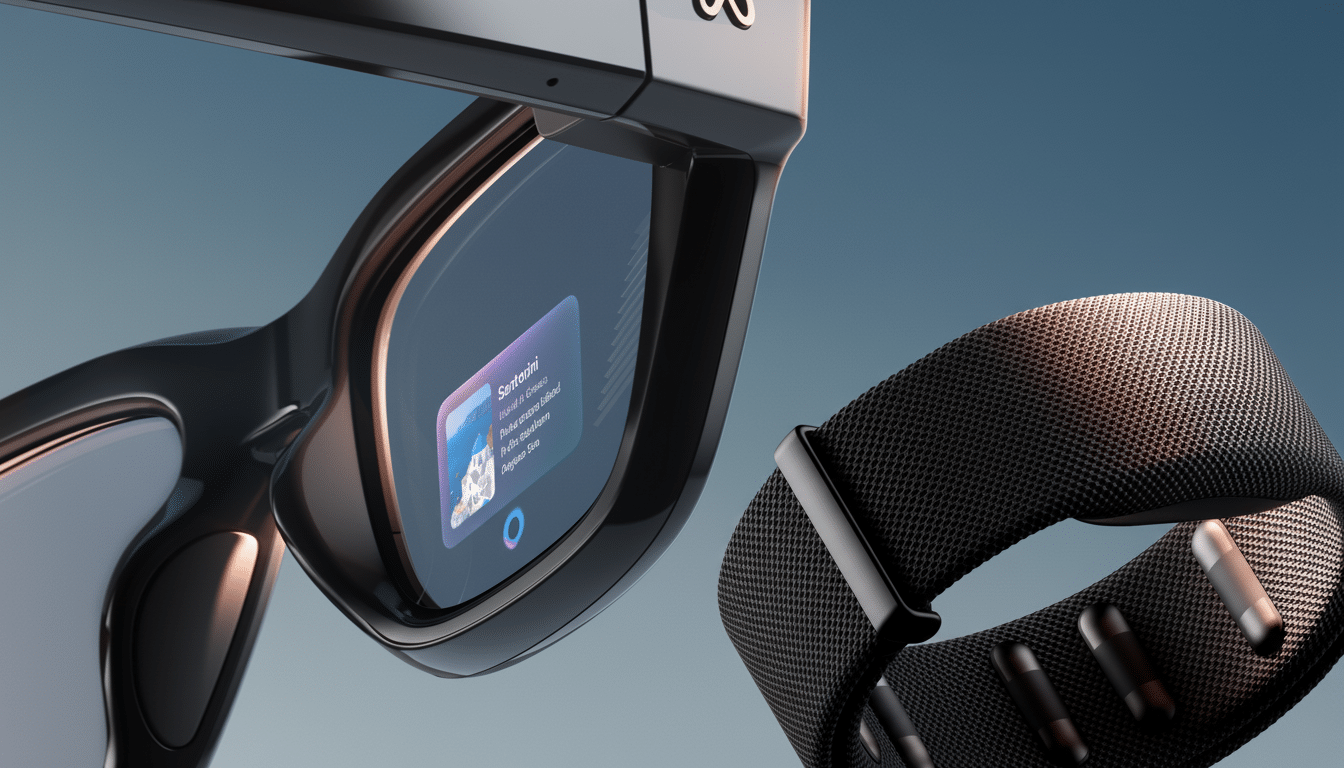

Meta has made a major move toward mainstream augmented wearables with the debut of Ray‑Ban smart glasses equipped with an in-lens display and a wrist-worn controller called the Meta Neural Band. The glasses bring apps, alerts, instructions and live translation directly into the user’s line of sight, while the band lets users control the glasses through subtle wrist gestures instead of taps or voice commands.

The system, which costs $799, is the latest step in Meta’s push into smart eyewear, which began with earlier Ray‑Ban collaborations with EssilorLuxottica that the company says have sold millions of units. This time, the headline is the display: a glanceable screen in the right lens, meant to make notifications and quick tasks feel less like checking your phone and more like a natural glance.

An at-a-glance display for apps and information

Ray‑Ban Display glasses project information into the wearer’s field of view, a new type of interface meant to enable at‑a‑glance interactions while the wearer stays engaged with the world around them. According to Meta, the glasses can surface Instagram, WhatsApp and Facebook updates, provide turn‑by‑turn directions and display real-time translations, tasks that previously would have meant pulling out a phone.

Onboard cameras, speakers and microphones return from previous models, along with Meta’s AI assistant for questions and hands‑free capture. The idea is familiar, but the execution is more fluid: instead of hearing an answer or looking down at a phone screen, the wearer sees the relevant information appear in the lens and can act on it straightaway.

Neural Band enables discreet wrist control with EMG

Controlling the experience is the Meta Neural Band, a screenless wrist-worn accessory that looks like a stripped-down fitness tracker. It relies on electromyography (EMG) to detect the small electrical signals that travel from the brain to the hand, translating slight finger and wrist movements into commands. According to Meta, the band lasts up to 18 hours and is water‑resistant, so it is designed for all-day wear.

The EMG approach builds on technology Meta acquired when it bought CTRL‑labs in 2019, and it has a practical advantage over voice or touch: micro‑gestures can be quiet and private, which is useful in meetings, on commutes and in loud environments. Done right, this could be the missing component that makes smart glasses seem less gimmicky and more like a reliable personal interface.

Positioning, price and the push into first‑party hardware

At $799, the Ray‑Ban Display costs less than many mixed‑reality headsets, but it is designed to be worn daily rather than for occasional viewing sessions. Even its understated look feels deliberate: it meets users where they already are, on their faces, without the heft of a visor. Meta has long depended on Apple’s and Google’s mobile platforms to reach consumers; shipping its own ultralightweight glasses is an attempt to control more of the stack.

The launch follows months of leaks and reports from outlets such as CNBC and Bloomberg that referred to the project by its code name, Hypernova. Its branding and commercial availability mark a shift from research demos to a product that Meta hopes everyday consumers, not just developers or early adopters, will become comfortable using.

How it stacks up against prototypes and rivals


The new Ray‑Ban Display is deliberately simpler than Meta’s Orion prototype, shown at an earlier Connect event. With Orion, Meta demonstrated full augmented‑reality lenses and eye tracking, which remain difficult to package into a stylish, lightweight form factor. The Ray‑Ban model trades raw capability for wearability and cost, a practical way to jump-start the market today.

Competition looms. Apple is still working on lightweight glasses intended to follow its mixed‑reality headset, according to a recent Bloomberg report, and Google has experimented with multiple AR eyewear projects while building visual AI features into Android. If either company ships glasses tightly integrated with its operating system, it will have a head start on notifications, payments and app integration.

What to watch: adoption, privacy and real‑world use

Combined AR/VR device shipments reached only the high single‑digit millions in 2017, according to IDC analysts, and those modest volumes underscore how early these platforms still are. Consumer “smart glasses” have struggled particularly with comfort, usability and battery life, three issues Meta is attempting to address with a fashion‑first frame and EMG control. Success will depend on whether everyday tasks such as navigation, translation, messaging and quick photo capture actually feel faster than reaching for a phone.

Privacy will remain in the spotlight. Earlier camera‑equipped eyewear from major brands prompted questions from European regulators about how visible recording indicators are to others and about bystander consent. Meta says it has learned from those rollouts; its combination of clear capture signals, on‑device controls and wrist‑based input will be judged not only by watchdogs but also by users, who must decide whether the social contract implicit in wearing a camera on their face feels acceptable.

For Meta, the Ray‑Ban Display and Neural Band are more than a gadget: they are a bet that discreet, always-available computing can leave the lab and become part of ordinary life.

If the display proves useful at a glance and the wrist interface fades into the background, this may be the moment smart glasses move from curiosity to something approaching habit.