Meta is gearing up to integrate conversations from its AI products into the vast machinery of its ad-targeting system, transforming what users say to chatbots into signals that help determine the ads they see on Facebook and Instagram.

What Meta Says Will Happen With AI Chat Ad Targeting

The company wants to use data from conversations with its AI assistant to augment the behavioral profiles it already builds from activity across its platforms. Meta says more than a billion people interact with its AI every month, frequently in long and context-rich conversations, which is exactly the kind of intent and interest data advertisers want.

Interactions with Meta AI will influence ad targeting only when the user is signed in to the same account across Meta’s services. There is no opt-out. Meta says it is still building the systems that will turn AI interactions into ad signals, but the writing is on the wall: what you ask a bot could affect which ads you see.

Where the Policy Applies and Where It Doesn’t

The rollout is worldwide, with some notable carve-outs. The exceptions are the European Union, the U.K., and South Korea, jurisdictions with stronger data privacy regulations and a history of enforcement. There, consent rules and regulatory scrutiny of behavioral advertising restrict Meta’s ability to reuse chat data for ads.

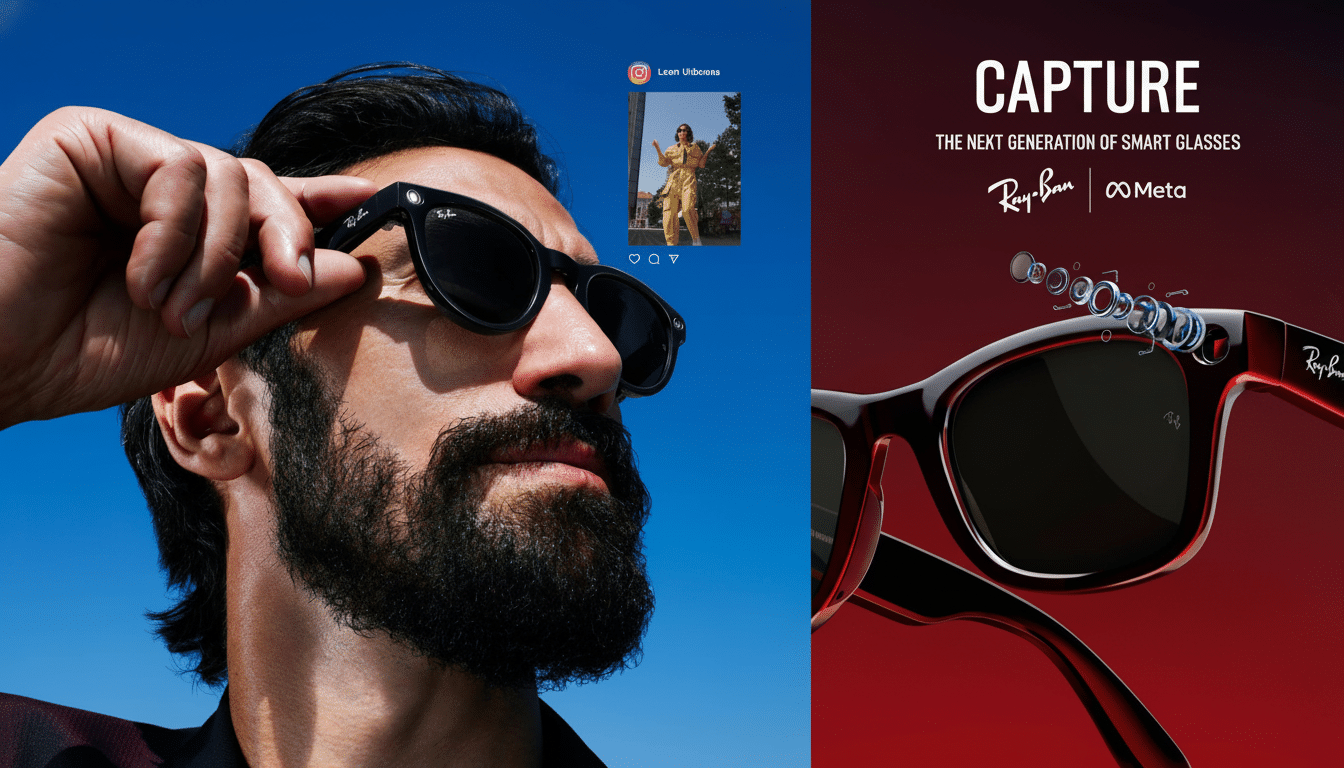

The change also captures signals from newer AI products such as Ray-Ban Meta smart glasses, where voice recordings, images, and videos processed by the AI can help target ads, as well as the AI video feed Vibes and the Imagine image generator.

Sensitive Topics and Guardrails for Ad Targeting Use

Meta says it won’t use AI chat content relating to sensitive categories for ad targeting. The list includes health, religious beliefs, political views, sexual orientation, racial or ethnic origin, philosophical beliefs, and trade union membership. That is consistent with the company’s existing advertising policies and with legal limits in many jurisdictions.

The company also says it has no immediate plan to insert ads directly into its AI products, though management has said monetizing within AI experiences could happen in the future.

Why Chat Data Could Change the Ad Game for Meta

Conventional social signals such as likes, follows, and clicks are noisy. AI chats can be explicit. If someone asks for a recommendation on trail-running shoes for muddy terrain, that is a high-intent signal likely to perform better than generic interest categories. That is the kind of precision advertisers pay a premium for.

Industry analysts estimate Meta gets about one-fifth of global digital ad spending. Adding conversational context offers Meta a new stream of first-party data at a time when third-party cookies are being deprecated and mobile identifiers are limited. Expect Meta’s ad auction to blend embeddings or summarized themes from chats with traditional behavioral and contextual signals (presumably aggregated and filtered to exclude sensitive content) before feeding them into conversion-optimized models.
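To make that speculation concrete, here is a minimal Python sketch of how summarized chat themes might be screened against the sensitive categories Meta lists and then blended with existing behavioral scores. Everything in it is an assumption for illustration: the `ChatTheme` type, the category labels, the `chat_weight` parameter, and the additive blending rule are hypothetical and do not describe Meta’s actual systems.

```python
from dataclasses import dataclass

# Sensitive categories as named in the article; the label strings are illustrative.
SENSITIVE_CATEGORIES = frozenset({
    "health", "religious_beliefs", "political_views", "sexual_orientation",
    "racial_or_ethnic_origin", "philosophical_beliefs", "trade_union_membership",
})


@dataclass(frozen=True)
class ChatTheme:
    """A summarized theme extracted from a chat (hypothetical structure)."""
    topic: str      # e.g. "trail running shoes"
    category: str   # coarse label assumed to be assigned upstream
    weight: float   # assumed signal strength in [0, 1]


def filter_sensitive(themes):
    """Drop any theme whose category is on the sensitive list."""
    return [t for t in themes if t.category not in SENSITIVE_CATEGORIES]


def blend_signals(behavioral, themes, chat_weight=0.5):
    """Add filtered chat themes to existing per-topic behavioral scores.

    `behavioral` maps topic -> score. The additive rule and `chat_weight`
    are assumptions chosen only to keep the sketch simple.
    """
    combined = dict(behavioral)
    for theme in filter_sensitive(themes):
        combined[theme.topic] = combined.get(theme.topic, 0.0) + chat_weight * theme.weight
    return combined


themes = [
    ChatTheme("trail running shoes", "fitness", 0.8),
    ChatTheme("migraine medication", "health", 0.9),  # filtered out as sensitive
]
result = blend_signals({"trail running shoes": 0.2, "travel": 0.4}, themes)
```

In this toy run the health-related theme never reaches the blended profile, while the trail-running theme strengthens an existing interest. A real system would also have to handle sensitive inferences that slip through under a non-sensitive label, which a simple category check like this does not address.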

The bet is a simple one: richer intent signals raise click-through rates and return on ad spend (ROAS), which in turn increase bid density and revenue. For Meta, that turns free AI use into measurable ad lift without charging users.

Regulatory and Legal Pressure on Behavioral Advertising

Regulators have already begun to tighten the screws on behavioral advertising. The European Data Protection Board has called for explicit consent for personalized ads, and national authorities in Europe have fined Meta over tracking-based advertising without valid consent. The U.K.’s data protection regulator has raised concerns about the use of sensitive inferences for targeting, and South Korea’s Personal Information Protection Commission is among the strictest regulators on profiling.

In the United States, the oversight patchwork is less dense, but the Federal Trade Commission has fired warning shots at platforms over deceptive data practices and the use of sensitive inferences made by AI systems. If chat-derived ad decisions seem too invasive or opaque, expect complaints and potentially enforcement under laws against unfair or deceptive practices.

What Users and Advertisers Need to Watch

Users can assume that non-sensitive AI chats could inform ad personalization when they are signed in, according to the company.

Practical steps include avoiding sensitive conversations with the AI, reviewing privacy controls across Meta accounts, managing data settings on Ray-Ban Meta smart glasses, and periodically deleting AI interactions where tools allow it. Consumer survey research indicates that most people have little trust in social platforms with their data; transparency and simple, direct controls will be essential if Meta wants to avoid blowback.

Advertisers can expect new targeting segments based on conversation themes, potentially extending reach to high-intent audiences in verticals such as travel, fitness, and home improvement. What to watch: lift in CTR, cost per action, and model-reported incrementality when chat-derived signals are turned on. Brands in regulated verticals should ensure that sensitive-inference filters are in place and that campaign categories do not violate platform policies or local law.
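For advertisers who want to track those metrics, the arithmetic is simple. The Python sketch below compares a campaign with and without chat-derived signals; the function names and all the figures are made up for illustration, and a sound comparison would need a randomized holdout rather than a simple before-and-after.

```python
def ctr(clicks, impressions):
    """Click-through rate: clicks divided by impressions."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return clicks / impressions


def cost_per_action(spend, actions):
    """Average spend per conversion (or other tracked action)."""
    if actions <= 0:
        raise ValueError("actions must be positive")
    return spend / actions


def relative_lift(control, treatment):
    """Relative change of the treatment metric versus the control metric."""
    if control == 0:
        raise ValueError("control metric must be non-zero")
    return (treatment - control) / control


# Hypothetical figures: same impressions, chat-derived signals off vs. on.
ctr_off = ctr(clicks=100, impressions=10_000)
ctr_on = ctr(clicks=120, impressions=10_000)
ctr_lift = relative_lift(ctr_off, ctr_on)          # roughly 0.20, i.e. a 20% lift

cpa_off = cost_per_action(spend=500.0, actions=20)
cpa_on = cost_per_action(spend=500.0, actions=25)
cpa_change = relative_lift(cpa_off, cpa_on)        # negative means cheaper actions
```

A positive CTR lift paired with a negative change in cost per action is the pattern advertisers would hope to see; either number alone can mislead if audience mix or spend shifted between the two periods.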

The strategic lesson is larger than any one policy revision. AI assistants are increasingly turning into data engines. As more of everyday life migrates to conversational interfaces, from shopping to troubleshooting, companies with AI chat at scale and dominant ad platforms have a structural advantage. Now Meta is testing that advantage in the open.