Meta is temporarily cutting off teens from its AI characters across its apps while it readies a new version with stronger safeguards and parental controls. The company says the pause is designed to give parents more insight into how their kids interact with AI and to ensure responses are explicitly age-appropriate when the updated experience returns.

Why Meta Is Making This Move on Teen AI Access

Meta has been under intensifying scrutiny over youth safety. The pause lands as the company faces litigation in New Mexico alleging failures to protect children from exploitation on its platforms, and amid broader lawsuits accusing social apps of fostering addictive use among minors. Wired recently reported that Meta sought to narrow discovery requests related to teen mental health impacts, underscoring the legal pressure surrounding youth protections.

In explaining the change, Meta said it heard a clear message from parents: they want more control and transparency. The company plans to gate access for anyone who has entered a birthday indicating they are a teen, and to apply its age prediction technology to identify and restrict accounts it suspects belong to minors, even if those users claim to be adults. That approach is intended to close a longstanding loophole that platforms have struggled to police: users simply self-declaring their age.

What Will Change for Teens and Parents When AI Returns

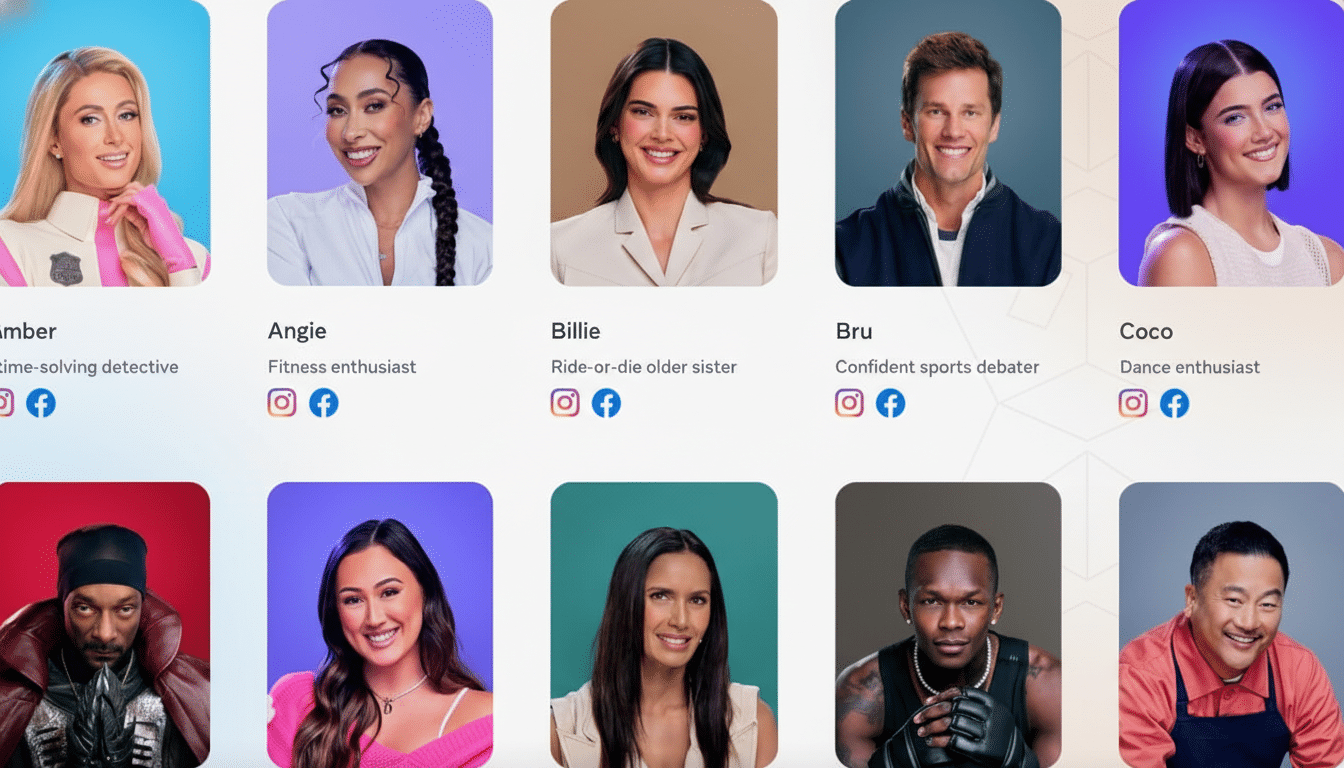

When Meta’s revamped AI characters relaunch, they are slated to ship with built-in parental controls. In an earlier preview, the company said guardians would be able to view the topics of their teens' conversations, block specific AI characters, and shut off access entirely. These controls are meant to align with the kind of expectations set by film ratings such as PG-13.

The characters themselves are expected to be tuned for age-appropriate responses and to steer toward topics such as schoolwork help, sports, and hobbies, while filtering out sensitive categories like explicit sexual content, graphic violence, and detailed drug use. Instagram has already introduced teen-focused AI limits inspired by movie-style ratings guidance, and the new system aims to extend those guardrails across Meta’s AI interfaces.

The Enforcement Challenge of Age Prediction at Scale

Reliance on age prediction technology signals a shift from purely self-reported ages to probabilistic models that infer age from user signals. That can reduce gaps in coverage but brings trade-offs: false positives can frustrate adults wrongly flagged as minors, and false negatives can leave some teens unprotected. Privacy advocates will watch how Meta calibrates these models and what data is used, particularly given regulatory regimes like COPPA and regional age-assurance expectations.

The move also reflects a wider safety consensus. The U.S. Surgeon General has warned about potential harms of social media for youth mental health, and UNICEF’s Policy Guidance on AI for Children calls for age-appropriate design and parental oversight by default. Meta’s approach tries to operationalize those principles within conversational AI, where outputs are unpredictable and context-rich.

How Rivals Are Adjusting Teen AI with New Guardrails

Meta is not alone in tightening teen access to generative tools. Character.AI barred open-ended chatbot conversations for users under 18 and later said it would build curated, interactive stories for kids. OpenAI introduced new teen safety rules for ChatGPT and began estimating user age to apply stricter content filters. Google made its chatbot available to teens with guardrails and educational prompts, while Snap added parental insights for its My AI assistant as part of its Family Center controls.

These changes are converging on a common model: default restrictions for young users, clearer parent controls, and AI tuned to avoid sensitive outputs while retaining educational and creative utility.

What to Watch Next on Meta’s Teen-Focused AI Plans

Key questions now are timing, transparency, and measurement. Parents will want clear dashboards, adjustable settings, and auditability of conversations. Policymakers will look for evidence that AI interactions actually shift toward safer topics and that risky prompts are consistently deflected.

Scale is also a factor. According to Pew Research Center, roughly 60% of U.S. teens use Instagram, and any change to AI features can ripple across classrooms and households. If Meta’s relaunch proves both useful and safer, it could become a template for teen-focused AI across the industry; if it falls short, lawmakers and courts are likely to push for stricter, enforceable standards.

For now, the company is choosing to pause rather than patch. That may be the clearest signal yet that teen-facing AI will be governed less by novelty and more by guardrails—and that the next phase of AI assistants will be shaped as much by family controls and compliance as by model size or speed.