I tried Lumus’ latest augmented reality waveguide demos on the show floor at CES and got my closest look yet at where everyday AR glasses seem to be heading, for better or worse. The company’s new optical engines push field of view, brightness efficiency and glass thinness in ways that feel meaningfully better than the status quo, culminating in a delicate but mind-blowing 70° prototype that shows off immersive AR without the weight of a headset.

What I saw through the lens on the CES show floor

Lumus’ technology is already in consumers’ hands via the Meta Ray-Ban Display, which uses a 20° geometric waveguide delivering about 5,000 nits at the eye for outdoor visibility. That mass-market endorsement paves the way for the firm’s next moves: an upgraded Z-30 engine and a preview of a svelter Z-30 2.0, both designed for all-day wear and glanceable utility.

The Z-30 unit I tested is an 11-gram optical engine optimized for efficiency and rated at roughly 8,000 nits per watt. In practice, that meant crisp, readable text across a 30° field of view with a 1:1 aspect ratio; small fonts and dense test patterns alike were sharp, with no chromatic fringing. Colors popped against see-through scenes, and the overall image was clean, with none of the rainbowing that can affect diffractive optics.
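To put the efficiency rating in perspective, here is a rough back-of-the-envelope sketch of what "nits per watt" implies for power draw. It assumes luminance scales linearly with drive power, which is a simplification, and the target brightness levels are illustrative picks of mine rather than Lumus specifications. The function name `drive_power_watts` is hypothetical.

```python
def drive_power_watts(target_nits: float, nits_per_watt: float) -> float:
    """Estimate the power needed to reach target_nits.

    Assumes brightness scales linearly with power (a simplification).
    """
    if nits_per_watt <= 0:
        raise ValueError("nits_per_watt must be positive")
    return target_nits / nits_per_watt


# Approximate efficiency rating quoted for the Z-30 in this article.
Z30_NITS_PER_WATT = 8_000

# Illustrative brightness targets (assumed, not vendor figures).
for nits in (1_000, 3_000, 5_000):
    watts = drive_power_watts(nits, Z30_NITS_PER_WATT)
    print(f"{nits:>5} nits -> {watts * 1000:.0f} mW")
```

Under that linear assumption, a 5,000-nit image would need roughly 625 mW of drive power, which helps explain why efficiency matters so much in a device meant for all-day wear.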

Two incremental upgrades stood out. First, an upgraded Z-30 that is said to be about 40% brighter than the standard version and suited to direct sunlight. Second, a sneak peek at the Z-30 2.0, which slices the glass stack down by around 40% and shaves about 30% off the weight. That is a valuable comfort win, and the slimmer stack should also simplify component manufacturing and improve yields.

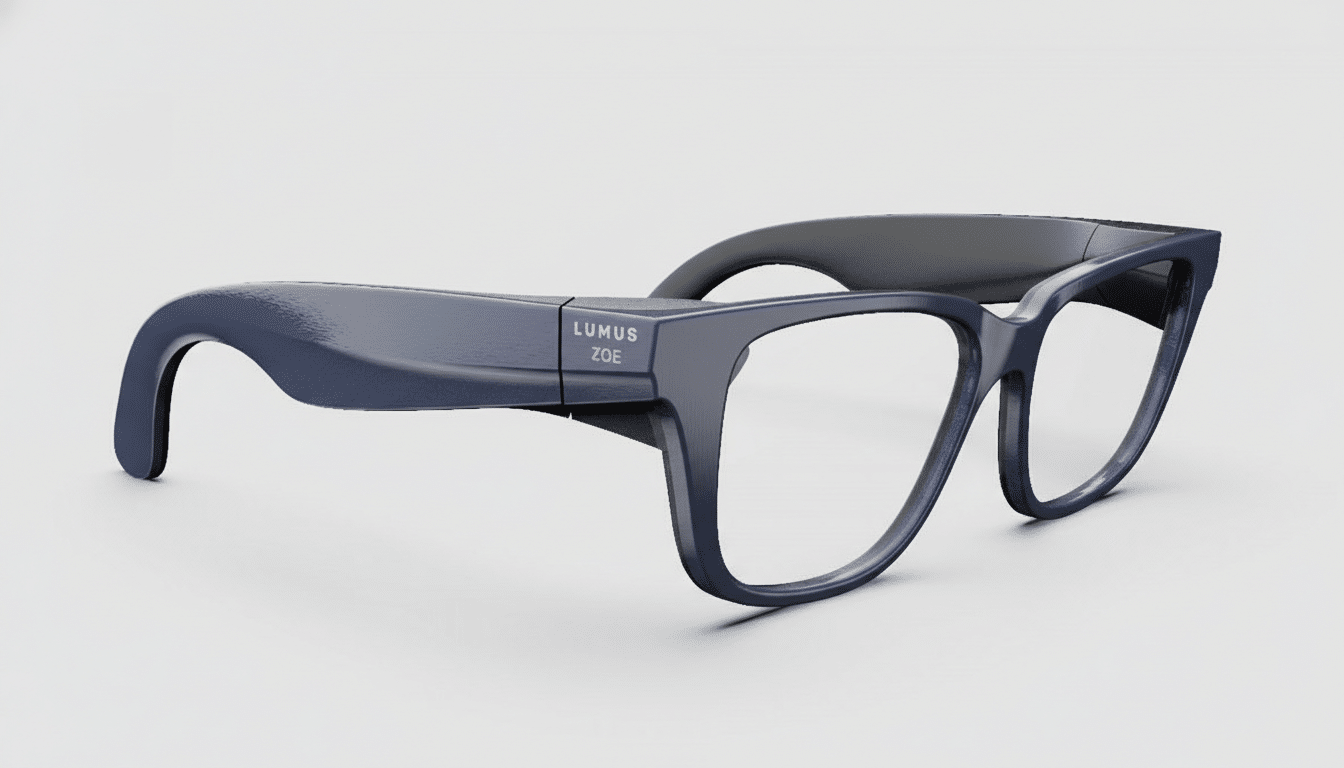

Inside the ZOE prototype with a 70-degree field of view

The star was a taped-at-the-edges proof of concept dubbed ZOE, which delivers a 70° FOV at 1080p through what is essentially ordinary spectacle glass. It’s early and fragile (engineers treated it like a museum piece), but the effect is striking. A demo scene reached deep into my peripheral vision, more like a scaled-down headset than glasses, but without giant optics or a hefty visor.
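Spreading 1080p across 70° has a cost in angular resolution, and a quick estimate makes that concrete. The sketch below assumes "1080p" means 1920×1080 and computes an average pixels-per-degree figure. Because it is not stated whether the 70° is measured horizontally or diagonally, it shows both readings. Real optics do not distribute pixels uniformly across the field, so treat these as ballpark numbers.

```python
import math


def pixels_per_degree(pixels: float, fov_degrees: float) -> float:
    """Average angular resolution: pixels spread evenly over the field of view."""
    if fov_degrees <= 0:
        raise ValueError("fov_degrees must be positive")
    return pixels / fov_degrees


# Assumption: 1080p means a 1920x1080 panel.
WIDTH_PX, HEIGHT_PX = 1920, 1080
ZOE_FOV_DEG = 70  # figure quoted for the prototype

# Reading 1: the 70 degrees is the horizontal field of view.
ppd_if_horizontal = pixels_per_degree(WIDTH_PX, ZOE_FOV_DEG)

# Reading 2: the 70 degrees is the diagonal field of view.
diagonal_px = math.hypot(WIDTH_PX, HEIGHT_PX)
ppd_if_diagonal = pixels_per_degree(diagonal_px, ZOE_FOV_DEG)

print(f"horizontal reading: {ppd_if_horizontal:.1f} px/deg")
print(f"diagonal reading:   {ppd_if_diagonal:.1f} px/deg")
```

Either way the result lands at roughly 27 to 31 pixels per degree, so a wider field at the same resolution means each pixel covers more of your view.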

Consumer AR glasses tend to sit at a 20–30° FOV in order to stay thin and discreet. Enterprise headsets such as Microsoft’s HoloLens 2 pushed well past that, with figures widely quoted at around 50° diagonal, but at the cost of size and weight. A field of view that wide is a very different proposition in a glasses-like form: it becomes a wide canvas for spatial entertainment, multitasking dashboards and heads-up data overlays, all without giving up the look of something you might wear every day.
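For a sense of how much "canvas" each field of view buys, basic trigonometry helps: a field of view θ spans a width of 2·d·tan(θ/2) at viewing distance d. The sketch below applies that to the FOV figures mentioned here, treating each as a horizontal angle (an assumption, since quoted figures are often diagonal) and using a 2 m virtual screen distance that I chose purely for illustration.

```python
import math


def canvas_width_m(fov_degrees: float, distance_m: float) -> float:
    """Width spanned by a field of view at a given distance: 2 * d * tan(fov / 2)."""
    if not 0 < fov_degrees < 180:
        raise ValueError("fov_degrees must be between 0 and 180")
    return 2 * distance_m * math.tan(math.radians(fov_degrees) / 2)


VIEW_DISTANCE_M = 2.0  # illustrative virtual screen distance (assumed)

# FOV figures mentioned in the article, treated as horizontal angles.
for fov in (20, 30, 50, 70):
    width = canvas_width_m(fov, VIEW_DISTANCE_M)
    print(f"{fov:>2} deg -> {width:.2f} m wide at {VIEW_DISTANCE_M:.0f} m")
```

Under those assumptions, 20° covers about 0.7 m at 2 m while 70° covers about 2.8 m, which is the gap between a notification strip and something closer to a wall-sized display.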

I had a small concern about safety, since much of my field of view was filled with content, but control is the key. You don’t need to illuminate every pixel all the time. With a broader canvas, systems can place graphics contextually and selectively, hugging real-world edges rather than obscuring them. Even at prototype brightness levels, text and images were easily readable, with excellent color reproduction and minimal distortion.

Why this reflection-based approach to AR matters

Lumus uses reflection-based waveguides built from geometric mirrors, as opposed to diffractive gratings.

The pitch: simple optical paths that preserve color and draw little power, which means higher perceived brightness per watt. The company applies anti-reflective (AR) coatings, too: these reduce forward light leak, which both keeps what you’re viewing private and prevents the telltale glow that can distract people around you.

Another practical advantage: prescription readiness. About 70% of the world’s population requires some form of vision correction, and these waveguides can have prescription glass bonded directly to the optical stack, eliminating dust-collecting air gaps and keeping the assembly slim. That’s key to wide adoption: comfort and clarity will beat spectacle when you’re wearing a device like this all day.

Industry momentum is real. Analysts at IDC and Counterpoint Research have pointed to consistent double-digit growth potential as the devices become lighter, brighter and more stylish. Camera-first smart glasses demonstrated an appetite for useful, low-friction wearables; adding high-quality displays without compromising on style is the next competitive battleground.

What’s next for AR glasses in everyday consumer use

The market is segmenting fast. There’s a “daily driver” tier at 20–30° FOV for notifications, turn-by-turn navigation cues, translation and quick captures; this tier is all about long battery life and socially acceptable looks. A second tier pushing higher, towards 50–70°, will make productivity and media experiences more immersive for people who want larger virtual displays and peripheral cues. Both benefit as optics get thinner, brighter and more efficient.

Computing is likely to remain largely offboard for now, hitching a ride on phones or pucks, and sensors and cameras will have to be carefully weighed against privacy regulations. Apple’s Vision Pro demonstrates what high-end spatial computing can be with passthrough video; the race in glasses is about getting enough immersion out of transparent waveguides for spatial computing to feel natural in public.

After testing Lumus’ lineup, my conclusion is simple: the technology curve is bending towards normal-looking glasses that have both daylight legibility and genuinely wide fields of view. The Z-30 family makes all-day AR look practical. The 70° prototype makes it seem inevitable.