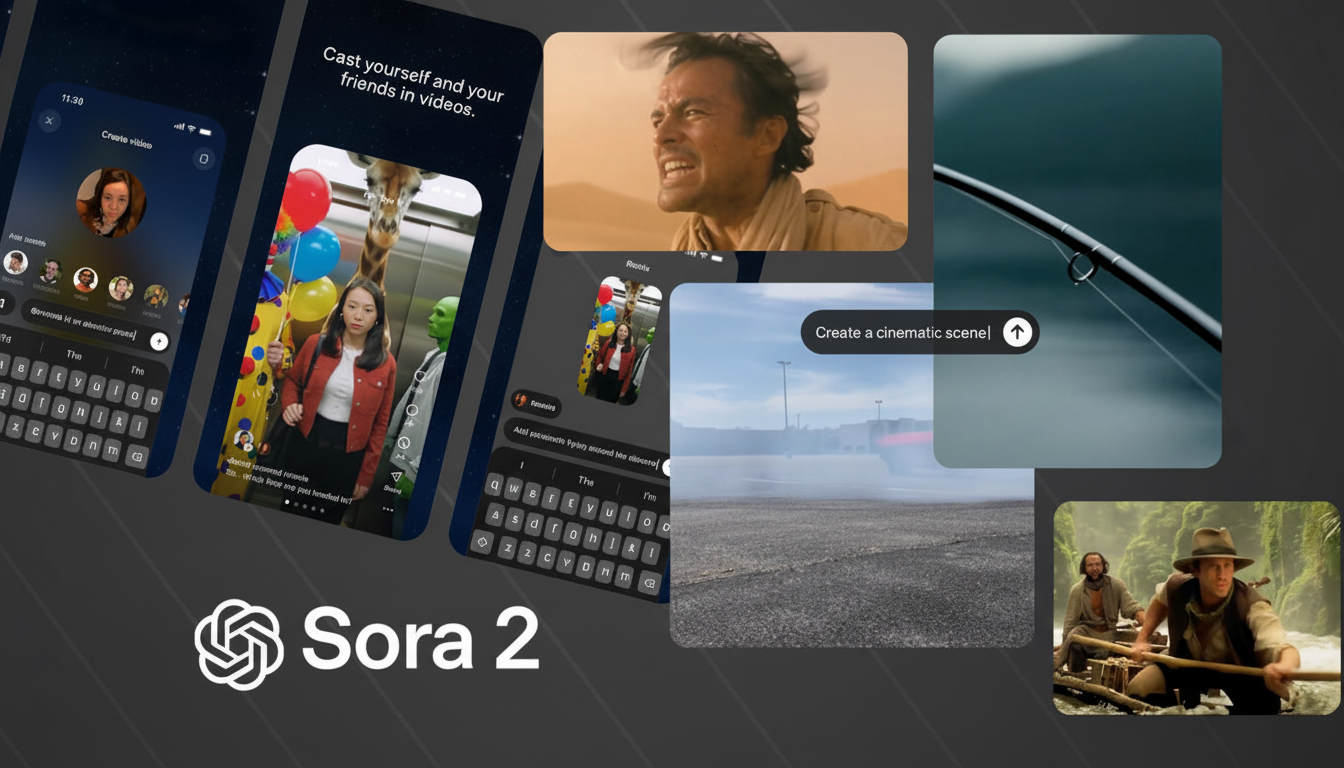

OpenAI’s Sora 2 and a fast-expanding field of AI video generators make it stunningly easy to craft realistic clips from text prompts. Alongside the creative buzz, a clear question now confronts creators, marketers, and businesses alike: how risky is it to use these tools? A legal scholar’s assessment is blunt—liability usually follows the human, and the law is closing gaps faster than many users realize.

What the Law Says About AI Video Output Today

U.S. copyright law still centers on human authorship. The U.S. Copyright Office has repeatedly said that works lacking sufficient human creativity cannot be protected, and a federal court in Thaler v. Perlmutter upheld that stance by affirming the Office's refusal to register a work that listed an AI system as its sole author. Practically, that means fully machine-generated video may be difficult to own in the traditional sense, even if you paid for the tool.

However, human contributions still matter. Storyboarding, shot selection, prompt engineering with detailed scene direction, and substantial human editing can create protectable elements. The catch is that ownership may only extend to the human-authored portions, complicating contracts, licensing, and downstream monetization.

Who Bears Liability When Things Go Wrong

Sean O’Brien, founder of the Yale Privacy Lab at Yale Law School, characterizes the risk in straightforward terms: when people or organizations deploy an AI system to produce content, they generally shoulder responsibility for how that content is used. If an AI-generated clip infringes someone’s copyright, or violates a right of publicity by replicating a recognizable face or voice, the user who created and distributed it is a likely target for claims.

Attorney Richard Santalesa, who advises on technology and IP, notes that platform terms typically prohibit infringement and place compliance duties on users. That does not immunize providers from all exposure, but it does underline a practical reality: your account, brand, and budget are on the line if your output crosses legal boundaries.

Training Data And Model Risk Are Flashpoints

Separate from output liability, training-data disputes are reshaping the landscape. Lawsuits brought by news organizations, book authors, and image libraries argue that ingesting copyrighted works without permission infringes exclusive rights. Courts will decide how fair use and transformative use apply at scale, but the litigation itself raises real business risk for vendors and enterprises relying on their tools for production.

O’Brien points to a coalescing legal view: only humans can author copyrighted works; AI-only outputs may be uncopyrightable; users remain accountable for infringements in what they generate; and using copyrighted material for training without permission is increasingly treated as actionable. Whether courts ultimately draw the line in exactly those places, the direction of travel is clear enough for risk management.

Likeness Rights And Deepfakes Raise Extra Exposure

Re-creating celebrities, brand characters, or private individuals invites right-of-publicity claims and deceptive-practices liability. Unlabeled political deepfakes compound the problem, as several U.S. states now require disclosures or restrict synthetic media in election contexts. Tennessee’s ELVIS Act expanded voice protections, and California and New York have robust publicity statutes—each with meaningful penalties.

Studios and unions are pushing back. The Motion Picture Association has criticized AI videos that mimic protected characters, while SAG-AFTRA has pressed for stronger safeguards over performers’ likeness and voice. Providers tout watermarking and provenance standards like the C2PA framework, but those measures are not foolproof and do not replace consent, licensing, or disclosures where required.

How Platforms Are Responding to Legal and Safety Risks

Major AI video platforms have added guardrails that reject prompts invoking famous characters or known trademarks, and they publish system cards describing safety policies. Reporting tools, opt-out mechanisms for rights holders, and content filters are evolving quickly. Still, enforcement is uneven, and the burden commonly falls on users to steer clear of obvious IP, secure releases, and avoid deceptive uses.

A Practical Risk Checklist For Creators And Brands

- Vet your vendor. Read model and platform terms closely, including IP warranty, indemnity, and training-data disclosures. Ask if enterprise plans offer stronger protections or content provenance tools.

- License what you can. Use cleared assets, stock media with explicit AI permissions, and commercially licensed fonts, music, and voices. For real people, obtain written releases that cover synthetic alteration.

- Avoid famous marks and characters. References to distinctive trade dress, logos, brand mascots, or well-known fictional universes invite infringement claims.

- Disclose when synthetic media is used. For ads, political content, and sensitive contexts, clear labeling reduces deception risk and aligns with emerging rules.

- Document human authorship. Keep prompt logs, edit histories, and evidence of creative choices. This supports copyright claims in your human contributions and helps with compliance inquiries.

- Plan for detection and takedowns. Use internal review, deepfake detection services, and swift response workflows if flagged material appears on your channels.
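As one illustration of the "document human authorship" item above, here is a minimal Python sketch of an append-only prompt log. It is not a legal standard or any platform's API: the function names (`sha256_of_file`, `log_generation`), the record fields, and the JSON Lines file format are all assumptions chosen for the example. Each record ties a prompt and a note on human edits to a hash of the resulting file, so the log can later be matched to a specific clip.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def sha256_of_file(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def log_generation(log_path: Path, prompt: str, output_file: Path,
                   tool: str, human_edits: str = "") -> dict:
    """Append one record describing a generation step to a JSONL log.

    All field names here are hypothetical; adapt them to whatever
    your counsel or compliance team asks you to retain.
    """
    record = {
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
        "tool": tool,                      # label you choose for the generator
        "prompt": prompt,                  # exact text submitted
        "output_sha256": sha256_of_file(output_file),
        "human_edits": human_edits,        # free-text note on manual changes
    }
    # Append mode keeps earlier records untouched.
    with log_path.open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(record, ensure_ascii=False) + "\n")
    return record
```

Calling `log_generation(Path("authorship.jsonl"), prompt, Path("clip.mp4"), tool="my-generator", human_edits="recut opening, replaced audio")` after each render would build a dated trail of creative choices. Whether such a log is sufficient evidence for any particular claim is a question for a lawyer, not something the code can settle.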

Bottom Line: Accountable Use Is Essential With AI Video

Sora 2 and its competitors are powerful, but they are not risk-free. Legal experts agree on the essentials: treat AI video like any other production pipeline. License inputs, respect likeness rights, avoid confusion with real people or brands, and assume you are accountable for what you publish. Creativity may scale with these tools, but so does the need for rigorous permissions, transparency, and legal hygiene.