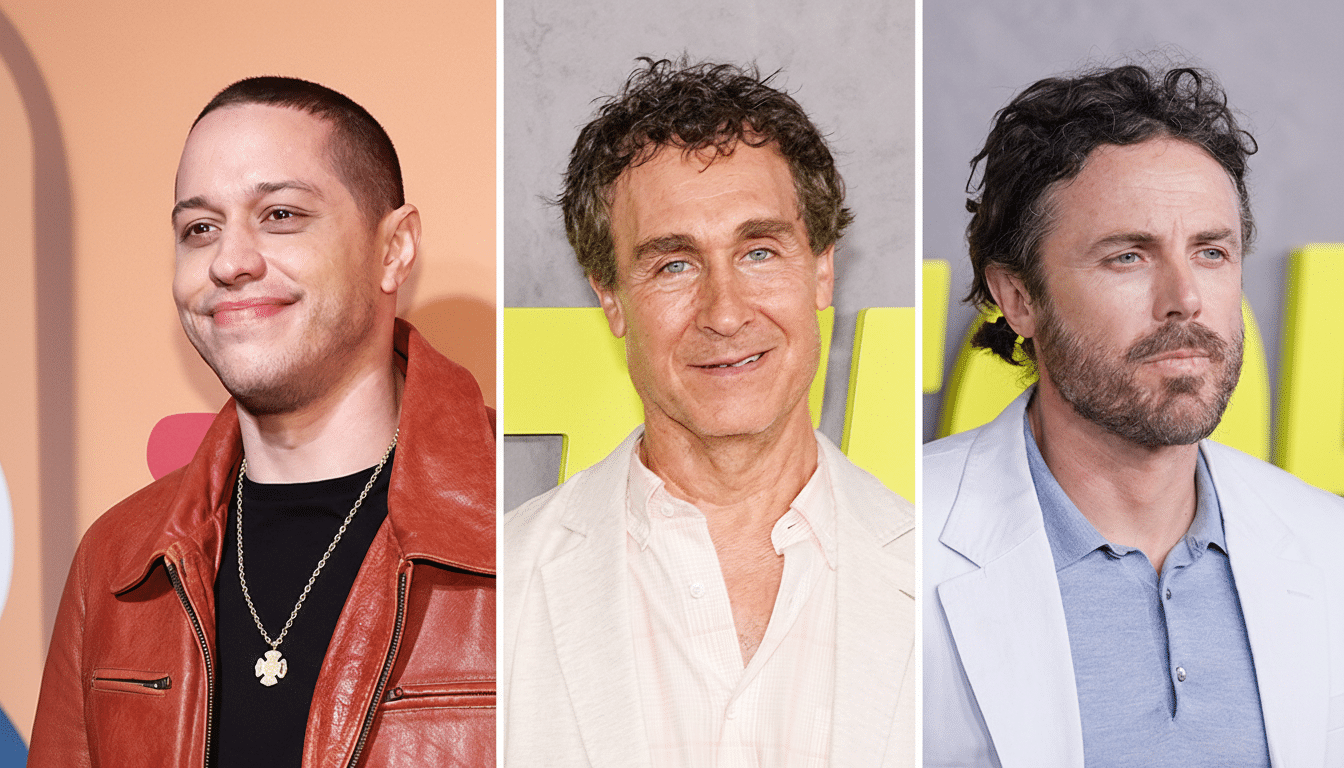

A new Bitcoin biopic is stepping directly into Hollywood’s hottest fault line. Killing Satoshi, from director Doug Liman and starring Casey Affleck and Pete Davidson, is leaning heavily on generative AI for virtual environments and post-production performance tweaks. The approach is already drawing praise for its efficiency and criticism for what it could mean for performers.

According to casting materials reported by Variety, the production reserves broad rights to alter actors’ on-camera work and stages performances on a markerless capture stage, with AI-generated environments in place of traditional locations. Producer Ryan Kavanaugh has said the team intends to use performance-capture-based tools rather than fabricate wholly synthetic actors, positioning AI as a way to speed up the workflow, not to shrink the cast and crew.

AI Jumps From Backgrounds To Performances

For years, AI quietly supported visual effects: object removal, rotoscoping, and upscaling archival footage. Killing Satoshi signals a new phase, one in which machine learning shapes the feel of the performances themselves. Markerless performance capture uses multi-camera arrays and pose-estimation models to track actors without capture suits or tracking markers, giving VFX artists and editors a high-fidelity performance mesh that can be re-lit, re-timed, or composited into AI-generated settings.

Studios have already dabbled at the edges. The thriller Fall used AI-driven lip-sync to replace profanity in its dialogue and secure a PG-13 rating. Lucasfilm’s de-aging and facial refinement of legacy characters showed how neural pipelines can convincingly reshape faces. Val Kilmer’s voice in Top Gun: Maverick was reportedly aided by a trained voice model after throat cancer damaged his speech. Killing Satoshi appears to combine these strands into a core production strategy rather than a last-resort patch.

Proponents argue this means fewer costly reshoots, faster localization, and the freedom to rebuild a scene’s emotional cadence without calling the cast back. For a story about Satoshi Nakamoto, where anonymity and myth are central, AI-crafted environments and malleable performances could create a deliberately uncertain, almost liminal aesthetic.

Actors And Guilds Push For AI Guardrails And Consent

The industry’s unease is not abstract. SAG-AFTRA’s latest theatrical and TV agreements tighten the rules around digital replicas: informed consent, limits on scope, separate compensation, and clear disclosure, including for background actors who are scanned. UK union Equity has similarly warned against “rights grabs” in boilerplate contracts that allow perpetual reuse of performances or unbounded AI training on them.

Two flashpoints tend to dominate: control and credit. Control concerns who decides when and how an actor’s likeness or line reading is altered after the fact. Credit covers residuals and authorship: if a model substantially reshaped an emotional beat, how should creative ownership be apportioned? These worries spiked as voice-cloning tools matured and high-profile disputes over vocal likenesses pushed consent into the mainstream.

Killing Satoshi could become an early test of whether producers can reconcile AI-enabled flexibility with transparent consent that puts actors first. The public messaging matters as much as the tech: clearly documenting what was captured, what was generated, and what was changed is fast becoming best practice.

Law And Policy Are Racing To Catch Up On AI In Film

Regulators are circling the issue from multiple angles. The US Copyright Office has reiterated that works produced without meaningful human authorship are not copyrightable and has launched a policy study on AI training data and attribution that drew thousands of public comments. A federal court ruling in the Thaler case affirmed that non-human authors cannot hold copyright—raising questions about how far AI can shape a scene before authorship blurs.

Across the Atlantic, the EU’s AI Act introduces labeling and transparency requirements for synthetic media, along with separate obligations for general-purpose AI models. Within the industry, standards groups such as the Coalition for Content Provenance and Authenticity (C2PA) are pushing watermarking and provenance metadata so that audiences, distributors, and guild auditors can see how a frame was made.

A Bitcoin Story With An AI-Era Production Playbook

Bitcoin’s origin myth is tailor-made for cinematic experimentation. Satoshi Nakamoto’s disappearance, the cypherpunk roots, and the rise of a trillion-dollar crypto economy invite creative staging. Generative backdrops and performance retiming could let Liman juxtapose competing “truths” about Satoshi in ways a traditional shoot would struggle to afford.

But the movie’s reception may hinge less on aesthetics than on trust. If viewers feel AI was used as a brush, not a bulldozer—enhancing continuity, enabling bolder storytelling, and respecting performers’ agency—Killing Satoshi could chart a path other productions follow. If it reads as cost-cutting at the expense of craft, expect pushback from audiences and unions alike.

Either way, this film is poised to be a bellwether. When workflows shifted this dramatically in the past, as with digital intermediate color grading or LED-stage virtual production, the winners were the shops that paired new tools with clear ethics and transparent credits. Killing Satoshi is about the most elusive figure in tech; its bigger legacy may be in defining what is acceptable when the auteur is part human, part machine.