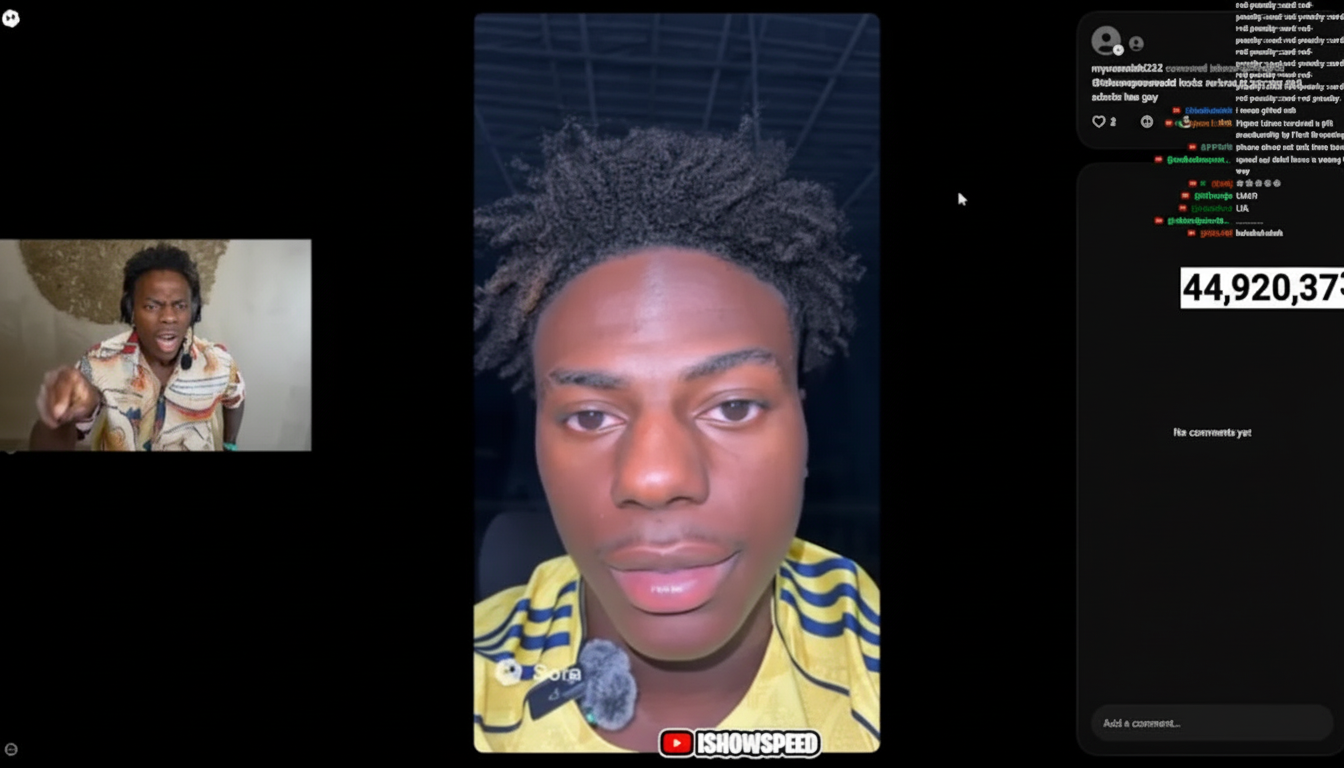

IShowSpeed has slammed a wave of hyperreal AI deepfakes after reacting on stream to Sora 2 videos of his own face and persona, turning lighthearted viewing into a stern warning about consent, safety, and reputational risk for digital creators.

The YouTube star, followed by tens of millions, scrolled through clips that showed him belly laughing in chaotic dreamscapes, making personal revelations he had never made, kissing a fan at one point, racing a cheetah, and touring abroad, even though he said he had never been to those places.

He ended the viewing as the videos became more convincing, saying the realism crossed a line. He also admitted that he had signed on to Sora 2’s celebrity-likeness program and regretted the decision, telling viewers it was not in his best interest and could create safety concerns.

Why Sora 2 Highlights New Consent Questions

OpenAI’s Sora 2 generates video from text prompts and has a consent process that requires public figures to opt in before their likeness is available. On paper, that sounds like progress. In practice, it exposes a new gray area: “opt-in” is only as protective as a creator’s ability to foresee the prompts, remix contexts, and downstream virality that will follow. For live creators who trade in authenticity and improv, even an entirely voluntary opt-in can turn into a trap once audiences or bad actors begin producing scenes that distort tone, intent, and identity.

Generative video compounds patterns that have already emerged in image and voice cloning. According to Sensity AI, more than 90% of deepfake content online is sexual in nature, and its volume has been growing year over year. Meanwhile, deepfakes have spread into politics and scams, from synthetic robocalls to forged endorsements, intensifying a crisis of confidence across platforms. The pattern is the same: a single grant of consent can grow into more iterations than the person ever anticipated.

Platform rules are playing catch-up. YouTube has added tools that let people request the removal of synthetic media impersonating them and has begun labeling some AI-generated media. Meta has expanded its “Made with AI” labels to other apps. TikTok requires disclosures for AI-generated content. These efforts are useful, but labels often arrive too late to prevent damage, and removal processes cannot keep pace with how quickly clips replicate once they go viral.

Creators Push Platforms and Lawmakers to Act

IShowSpeed’s complaint joins a growing chorus. Zelda Williams has pleaded with fans to stop sharing AI renditions of her late father, Robin Williams, calling attention to the emotional cost of synthetic resurrection. Celebrities like Steve Harvey and Scarlett Johansson have publicly backed stronger statutes targeting deepfakes, which would provide clearer routes to recourse when likenesses are misused. Their fears reflect wider public concern: polls from Pew Research Center have found large majorities of people concerned about AI-powered misinformation and the way it could distort public understanding.

Regulators are laying groundwork. The FCC voted to ban AI-cloned voices in robocalls. Legislators in states including California, Texas, and New York have proposed or passed right-of-publicity reforms and amended laws specifically to address deepfakes, on issues ranging from election integrity to nonconsensual sexual content. In Europe, the EU AI Act requires transparency for certain deepfakes. In Hollywood, SAG-AFTRA secured contract terms requiring consent and compensation for digital doubles. Each step moves the ecosystem a little closer to clearer consent rules, but enforcement and consistency across borders remain sticking points.

The Opt-In Dilemma for Sora 2 and Creator Control Online

What occurred here is an object lesson in “permission at the top, chaos at the edges.” Even when a creator like IShowSpeed does consent, the flood of prompts from fans and impersonators can quickly produce videos that suggest statements, emotions, or actions that never happened. The damage is reputational: not only brand confusion but also the erosion of trust with an audience that no longer knows whether it is seeing improv or imitation. Once a clip circulates, context is frequently lost, and the burden of defense and cleanup falls on the creator.

Meaningful safeguards from model providers would go well beyond opt-in checkboxes. Recommended measures include granular consent (yes to comedy, no to political speech; yes to brand ads, no to romantic or sexual contexts), strong default filters, auditable provenance through watermarks or cryptographic signatures, and creator dashboards that show where and how a likeness is used. Without those controls, “consent” could become a one-time switch that grants permanent permission for exploitation.
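To make the idea of granular consent concrete, here is a minimal Python sketch of a per-category check. Everything in it is an assumption for illustration: the `LikenessConsent` record, the category names, and the `check_request` helper are hypothetical and do not describe how Sora 2 or any real platform stores or enforces consent.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class LikenessConsent:
    """Hypothetical per-creator consent record (not a real Sora 2 structure)."""
    creator: str
    allowed: frozenset = field(default_factory=frozenset)
    denied: frozenset = field(default_factory=frozenset)

    def permits(self, category: str) -> bool:
        # An explicit denial always wins; anything unlisted is refused by default.
        if category in self.denied:
            return False
        return category in self.allowed


def check_request(consent: LikenessConsent, categories: list) -> tuple:
    """Return (approved, blocked) for a prompt tagged with content categories."""
    blocked = sorted(c for c in categories if not consent.permits(c))
    # A request with no categories at all is refused rather than waved through.
    approved = bool(categories) and not blocked
    return approved, blocked


# Illustrative policy mirroring the examples in the text.
consent = LikenessConsent(
    creator="example_creator",
    allowed=frozenset({"comedy", "brand_ads"}),
    denied=frozenset({"political_speech", "romance", "sexual"}),
)

print(check_request(consent, ["comedy"]))
print(check_request(consent, ["comedy", "romance"]))
```

The key design choice in this sketch is default-deny: a category the creator never considered is blocked until they approve it, which is the opposite of a one-time switch.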

What’s Next for Streamers and Fans After Sora 2 Deepfakes

Creators can act immediately: review existing opt-ins and revoke any that are unnecessary; file takedown requests under the relevant platform’s impersonation policies; watermark official, unaltered content; and make clear to viewers when a video is authentic rather than synthetic. For live talent, prewritten statements and pinned posts explaining one’s stance on AI derivatives can help head off confusion once fakes start appearing.

Fans also play a role. Be wary of sensational clips that haven’t been verified, look for signals of provenance, and respect creator boundaries when a likeness is employed without context or consent. When a person’s identity becomes a playground, the entertainment value of AI mashups is not worth the human cost.

IShowSpeed’s on-stream recoil was not just a gut reaction; it was a real-time stress test of how persuasive and disorienting AI video has become. Whether his next step is a formal opt-out, a campaign to have the platform taken down, or advocacy for tighter controls in Sora 2, the baseline message to the industry is clear: realism without robust consent isn’t progress. It’s a liability.