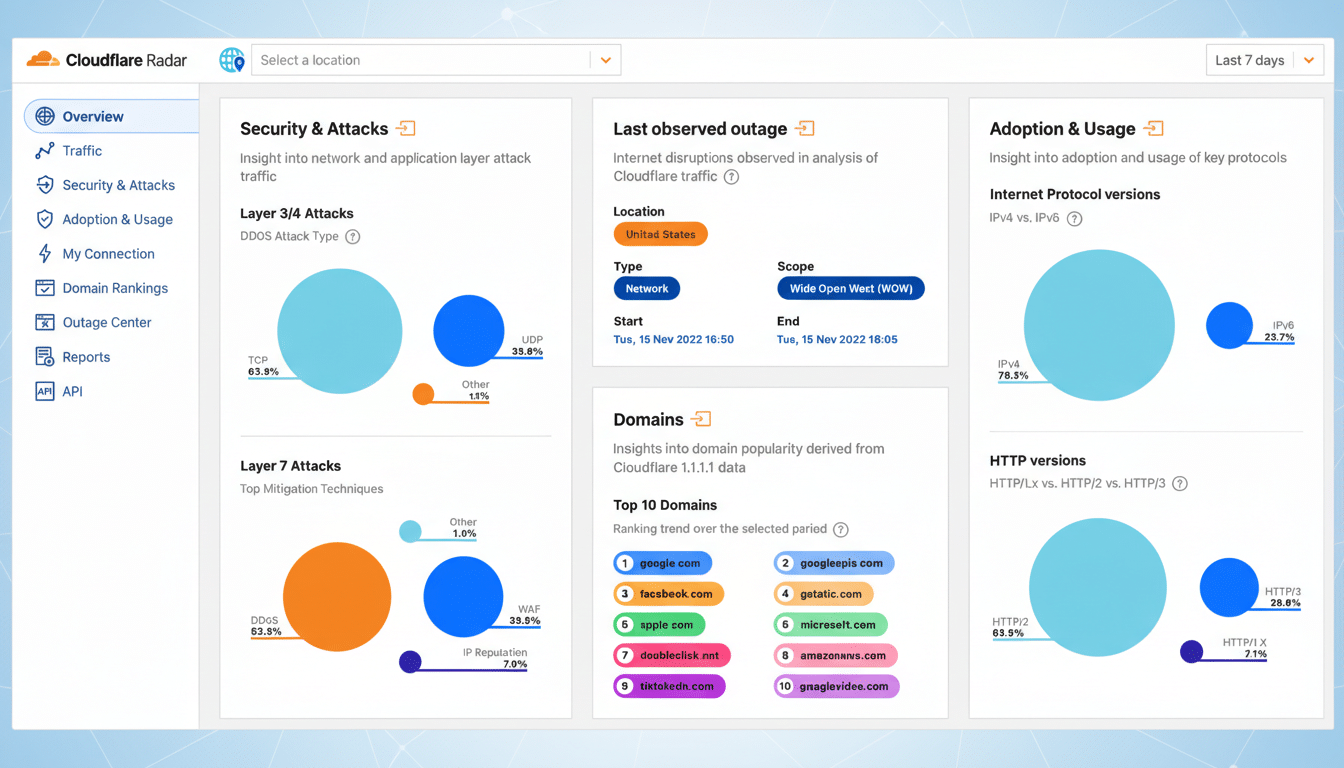

If it felt like the internet kept collapsing and taking your favorite sites with it, you weren’t imagining it. A new disruptions analysis from Cloudflare points to a year defined by brittle dependencies: DNS hiccups that cascaded globally, cloud platform incidents that rippled across thousands of apps, and physical infrastructure failures—submarine cable breaks and power grid faults—that knocked entire countries offline.

The Year the Weak Links in Global Internet Snapped

Cloudflare’s latest report tallied more than 180 notable disruptions worldwide over the year, from brief regional blips to multiday national outages. The most damaging episodes were rooted in the physical world, where redundancies are hardest to improvise on the fly.

- The Year the Weak Links in Global Internet Snapped

- DNS And The Hidden Single Points Of Failure

- Cloud Centralization Raised the Stakes for All

- Power Grids, Weather, and War Now Shape Connectivity

- How To Build Resilience When Everything Depends On Everything

- The Big Picture: Making the Internet Boring Again

In Haiti, two separate international fiber cuts to Digicel traffic drove connectivity near zero during one incident, underscoring how a few critical paths can define a nation’s internet experience. Power grid failures produced country-scale outages in the Dominican Republic—where a transmission line fault trimmed internet traffic by nearly 50%—and in Kenya, where an interconnection issue depressed national traffic by up to 18% for nearly four hours.

Conflict also left a clear fingerprint. Cloudflare’s telemetry showed a Russian drone strike in Odessa slicing throughput by 57%, a reminder that kinetic events now echo instantly across the digital realm.

DNS And The Hidden Single Points Of Failure

Seasoned network engineers have a dark joke for days like these: “It’s always DNS.” That was borne out repeatedly. A resolver meltdown at Italy’s Fastweb slashed wired traffic by more than 75%, illustrating how failures in name resolution—which maps human-readable domains to IP addresses—can functionally make the internet disappear even when links and servers are fine.

One of the year’s most disruptive cloud incidents also stemmed from DNS. When authoritative lookups, resolver caches, or load-balanced anycast clusters go sideways, the blast radius is vast. Low TTLs can amplify query storms; misconfigurations propagate at machine speed; and dependency chains—think identity providers, API gateways, CDNs—magnify the user impact. The lesson is simple: name resolution is infrastructure, not a commodity.

Cloud Centralization Raised the Stakes for All

As more of the web runs on a handful of hyperscalers, outages have become less frequent per workload but more consequential per event. Cloudflare’s new Radar Cloud Observatory tracks availability at AWS, Google Cloud, Microsoft Azure, and Oracle Cloud by region, highlighting how a hiccup in one managed service can strand countless dependent applications—even when their compute nodes remain healthy.

Even the resilience providers had rough days. Cloudflare acknowledged two incidents that made the global tally: a software failure tied to a database permissions change that broke a Bot Management feature file, and a separate change to request body parsing introduced during a security mitigation that disrupted roughly 28% of HTTP traffic on its network. The takeaway for operators is clear: blast-radius control, staged rollouts, and kill switches aren’t luxuries, they’re survival gear.

Power Grids, Weather, and War Now Shape Connectivity

Internet reliability is increasingly coupled to electricity reliability. Grid operators have warned that extreme weather and surging data center demand—driven in part by AI training and inference—are tightening margins. When the grid sneezes, the internet catches a cold. The Dominican Republic and Kenya outages were stark examples, but the risk is broader: even well-provisioned regions face heat waves, storms, wildfire smoke, and equipment fatigue.

Submarine cables remain another chokepoint. Repairs can take days to weeks as ships, permits, and weather align. Meanwhile, geopolitical tensions raise the threat of both intentional and collateral damage to critical infrastructure. Measurement firms such as Kentik and ThousandEyes, along with regional internet registries like RIPE NCC and APNIC, have documented how single cable faults can distort latency and capacity across entire continents.

How To Build Resilience When Everything Depends On Everything

For site and app operators: diversify and test. Use multi-region (or multi-cloud) architectures with explicit dependency maps; place DNS, auth, and storage in separate failure domains; and exercise failover playbooks during business hours, not just in chaos drills. Adopt RPKI to protect BGP routes, enable DNSSEC where it fits, and tune TTLs to balance agility with cache stability. Stagger deployments with feature flags and practice quick reverts. Set SLOs with honest error budgets—and honor them.

For businesses and end users: hedge your access. Keep a cellular hotspot or secondary ISP for critical work, configure more than one reputable DNS resolver, cache essential documents for offline access, and subscribe to provider status feeds. None of this eliminates risk, but it shrinks the window where an upstream problem becomes your outage.

The Big Picture: Making the Internet Boring Again

Decades ago, the internet’s decentralized design prized continuity over convenience. Today’s reality—centralized clouds, complex software supply chains, fragile power and cable infrastructure—trades simplicity for scale. The answer isn’t nostalgia; it’s engineering. More diversity, fewer hidden dependencies, and more transparent operations will make the network feel boring again—in the best possible way.