Panther Lake is Intel’s next client platform and its first on 18A, and it’s more than just another “Lake.” It is Intel’s showcase for the new node: a mobile-first family that pairs new CPU cores, an updated GPU tile, and a 50 TOPS NPU with the architectural jump to RibbonFET and PowerVia. If Intel delivers on its promises, the platform will likely shape what the AI PC generation looks like for PC makers, software partners, and the wider ecosystem.

Panther Lake is designed to combine the area and cost savings of Lunar Lake with the throughput of Arrow Lake, and it is Intel’s first platform to include 18A CPU tiles built at its U.S. fabs. That formula (a new architecture and a new node on an AI-focused platform) makes this family the company’s most significant client launch in years.

- What Intel 18A changes under the hood for performance

- A tile-first design tailored to scale across SKUs

- CPU cores, NPU capabilities, and the evolving AI mix

- Graphics featuring Xe3 and on-chip acceleration

- Memory support and I/O connectivity options by tier

- Performance claims and broader market context for 18A

What Intel 18A changes under the hood for performance

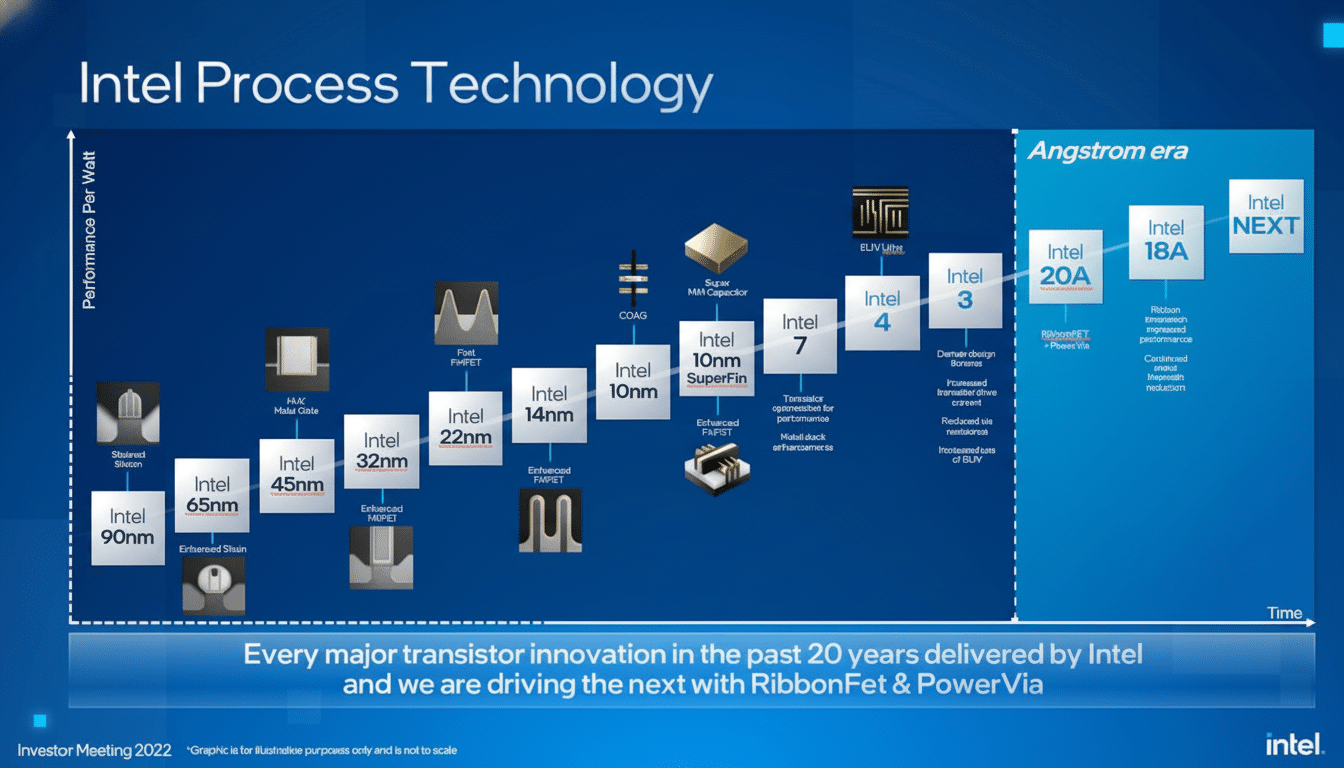

Intel 18A, the company’s “2nm-class” process, introduces two key technologies: RibbonFET (a gate-all-around transistor) and PowerVia (backside power delivery). RibbonFET gates fully surround the channel, which tightens control over it and reduces leakage compared with FinFET, where the gate wraps only three sides of the fin. PowerVia separates power routing from signal routing; delivering power through the back of the die reduces IR drop and frees top-side routing for higher performance and density.

According to Intel, the combination provides up to 20% higher performance per watt compared with Intel 3 and enables denser logic without inflating the power budget, though the company did not name an exact density figure. Just as important, Intel’s Arizona and Oregon sites are gearing up for 18A production, which fits the company’s “manufacturing-first” strategy and its broader U.S. semiconductor investment initiatives.
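
To make the quoted perf-per-watt figure concrete, the short sketch below converts it into the two ways it could be spent: more performance at the same power, or less power at the same performance. The function names are illustrative, and the arithmetic assumes the "up to 20%" gain applies uniformly, which real workloads rarely allow.

```python
def iso_power_speedup(perf_per_watt_gain: float) -> float:
    """Relative performance at unchanged power for a fractional perf/W gain."""
    return 1.0 + perf_per_watt_gain


def iso_perf_power(perf_per_watt_gain: float) -> float:
    """Relative power at unchanged performance for a fractional perf/W gain."""
    return 1.0 / (1.0 + perf_per_watt_gain)


gain = 0.20  # Intel's quoted "up to" figure versus Intel 3
print(f"Same power: {iso_power_speedup(gain):.2f}x performance")
print(f"Same performance: {iso_perf_power(gain):.1%} of the original power")
```

Note that a 20% perf-per-watt gain does not translate into 20% lower power at equal performance; the reciprocal puts the saving closer to 17%.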

A tile-first design tailored to scale across SKUs

Panther Lake uses a tiled architecture joined by Foveros-S 2.5D packaging and a new Scalable Fabric Gen 2 interconnect. The CPU compute tile is manufactured on 18A, the platform controller tile is sourced from TSMC, and the Xe3 graphics tile comes in two sizes: a smaller one built on Intel 3 and a larger one built by TSMC. Because the GPU tile is separate from the CPU tile, OEMs can dial graphics up or down without touching the CPU silicon.

CPU cores, NPU capabilities, and the evolving AI mix

There are three main client configurations planned. The entry configuration includes 8 CPU cores (4 Performance cores and 4 Low-Power Efficient cores) with an Xe3 GPU tile. The mid and high configurations scale up to 16 CPU cores (4 Performance cores, 8 Efficient cores, and 4 Low-Power Efficient cores). Intel’s new cores are Cougar Cove (P-cores) and Darkmont (E-cores). They feature larger caches (Cougar Cove increases L3 capacity) along with better branch prediction and memory scheduling to smooth out latency-sensitive workloads.
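
The core mixes above can be captured in a small data structure, which makes the totals easy to check. This is only a sketch: the `CoreConfig` class and tier labels are hypothetical names for illustration, and the mid and high tiers are collapsed into their shared 16-core maximum because the text does not break them out further.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoreConfig:
    name: str
    p_cores: int     # Performance cores
    e_cores: int     # Efficient cores
    lp_e_cores: int  # Low-Power Efficient cores

    @property
    def total(self) -> int:
        return self.p_cores + self.e_cores + self.lp_e_cores


CONFIGS = [
    CoreConfig("entry", p_cores=4, e_cores=0, lp_e_cores=4),
    CoreConfig("mid/high (maximum)", p_cores=4, e_cores=8, lp_e_cores=4),
]

for cfg in CONFIGS:
    print(f"{cfg.name}: {cfg.total} cores "
          f"({cfg.p_cores}P + {cfg.e_cores}E + {cfg.lp_e_cores}LP-E)")
```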

The platform’s NPU5 delivers 50 TOPS and adds native FP8 support for fast, memory-light inference when full precision is not required. Intel claims it doubled MAC density per square millimeter compared with the previous generation and achieves approximately 40% better TOPS per mm² compared to Lunar Lake’s NPU. The company lists a 3.8x uplift against the lightweight NPU blocks in Arrow Lake-H. Notably, it exceeds Microsoft’s Copilot+ PC baseline of 40 TOPS for on-device AI acceleration.
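
Two of these NPU claims lend themselves to quick arithmetic. The sketch below backs out the Arrow Lake-H figure implied by the 3.8x uplift, assuming that multiplier refers to peak TOPS, and shows why FP8 is "memory-light" by comparing weight storage at 16 and 8 bits. The 7-billion-parameter model is a hypothetical example, not something Intel has cited.

```python
NPU5_TOPS = 50.0        # quoted for Panther Lake's NPU5
UPLIFT_VS_ARL_H = 3.8   # quoted uplift; assumed here to mean peak TOPS

implied_arl_h_tops = NPU5_TOPS / UPLIFT_VS_ARL_H
print(f"Implied Arrow Lake-H NPU: {implied_arl_h_tops:.1f} TOPS")


def weights_gib(params_billions: float, bits_per_weight: int) -> float:
    """Approximate weight storage in GiB, ignoring activations and overhead."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


model_b = 7.0  # hypothetical 7-billion-parameter model, for illustration only
for bits in (16, 8):
    print(f"{bits}-bit weights: {weights_gib(model_b, bits):.1f} GiB")
```

Halving the bits per weight halves the storage and the bytes moved per inference pass, which is where the latency and power benefit comes from on a bandwidth-limited laptop.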

Graphics featuring Xe3 and on-chip acceleration

Xe3 comes in two fixed configurations: a 4-core design with four ray-tracing units, and a larger 12-core option with 12 RT units and up to 16 MB of L2 cache. Doubling L2 to 16 MB can reduce memory-interface traffic by up to 36 percent, Intel claims, for more sustained performance within laptop power limits. Each Xe core combines eight 512-bit vector engines and eight 2,048-bit XMX engines, and Intel is pushing Xe3 to handle more per-pixel AI work on top of its conventional rasterization and ray-tracing workloads.
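
The vector-engine widths above translate directly into FP32 lane counts. The sketch below does that conversion for both GPU configurations; it counts lanes only, and says nothing about clocks or achieved throughput, which Intel has not detailed here. The constant names are illustrative.

```python
VECTOR_ENGINE_BITS = 512
VECTOR_ENGINES_PER_BLOCK = 8  # per GPU building block, as described above
FP32_BITS = 32

lanes_per_engine = VECTOR_ENGINE_BITS // FP32_BITS
lanes_per_block = lanes_per_engine * VECTOR_ENGINES_PER_BLOCK

for blocks in (4, 12):  # the two Xe3 configurations
    print(f"{blocks} blocks: {blocks * lanes_per_block} FP32 lanes")
```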

Intel quotes up to 120 “platform TOPS” for the top integrated configuration, a measure that counts the graphics accelerator’s AI math alongside other blocks. The figure appears as a footnote in the processor slide deck, and it again marks the shift toward hybrid CPU–GPU–NPU workflows in creative, gaming, and AI-assisted applications.

Memory support and I/O connectivity options by tier

Memory support includes LPDDR5x and DDR5 with SKU-dependent ceilings:

- Entry: LPDDR5x up to 6,800 MT/s or DDR5 up to 6,400 MT/s

- Mid: LPDDR5x up to 8,533 MT/s or DDR5 up to 7,200 MT/s

- Top: LPDDR5x up to 9,600 MT/s and DDR5 up to 7,200 MT/s; capacity options include LRDIMM up to 96 GB and UDIMM 64/128 GB, with DDR5-5200 also referenced for certain UDIMM configurations
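
The transfer rates above can be turned into theoretical peak bandwidth once a bus width is chosen. The sketch below assumes a 128-bit memory interface, which is common for laptop SoCs but is not stated in the text, so treat the resulting numbers as illustrative upper bounds rather than Intel specifications.

```python
BUS_WIDTH_BITS = 128  # assumption: not stated in the text


def peak_bandwidth_gbs(mega_transfers_per_s: int,
                       bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Theoretical peak bandwidth in decimal GB/s, before real-world losses."""
    return mega_transfers_per_s * 1e6 * (bus_width_bits / 8) / 1e9


TIERS = {
    "entry": {"LPDDR5x": 6800, "DDR5": 6400},
    "mid": {"LPDDR5x": 8533, "DDR5": 7200},
    "top": {"LPDDR5x": 9600, "DDR5": 7200},
}

for tier, speeds in TIERS.items():
    for kind, rate in speeds.items():
        print(f"{tier:5} {kind:8} {rate} MT/s -> "
              f"{peak_bandwidth_gbs(rate):.1f} GB/s")
```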

PCIe and I/O scale by tier: the 8-core package offers 12 lanes; the mid-tier package steps up to 20 lanes (12 Gen5, 8 Gen4), while the top 16-core/12-GPU-core option provides 12 lanes (4 Gen5, 8 Gen4).
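
The lane splits can be checked and converted into aggregate bandwidth using the standard PCIe per-lane rates (16 GT/s for Gen4, 32 GT/s for Gen5, both with 128b/130b encoding). The sketch below is approximate: it ignores protocol overhead beyond line encoding, and it omits the entry tier because the text gives only its 12-lane total with no generation split.

```python
GT_PER_S = {"Gen4": 16.0, "Gen5": 32.0}  # per-lane signalling rates
ENCODING = 128 / 130                      # 128b/130b line encoding


def lane_gbs(gen: str) -> float:
    """Approximate one-direction bandwidth per lane in GB/s."""
    return GT_PER_S[gen] * ENCODING / 8


LANES = {
    "mid": {"Gen5": 12, "Gen4": 8},
    "top": {"Gen5": 4, "Gen4": 8},
}

for tier, split in LANES.items():
    total_lanes = sum(split.values())
    total_gbs = sum(count * lane_gbs(gen) for gen, count in split.items())
    print(f"{tier}: {total_lanes} lanes, "
          f"about {total_gbs:.1f} GB/s per direction")
```

The mid tier's larger lane budget is mostly Gen5, so on these assumptions it offers about twice the aggregate PCIe bandwidth of the top tier, where the larger GPU tile likely reduces the need for a discrete graphics link.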

Thunderbolt 4 is built in; Thunderbolt 5 can be added through a separate controller. Wireless support is headlined by Wi‑Fi 7 Revision 2 with Multi-Link Operation and Multi-Link Reconfiguration, along with an updated, higher-bandwidth STEP interface connecting the Wi‑Fi silicon to the SoC. Auracast is supported on Bluetooth 5 with LE Audio, and Intel is touting high-speed dual-antenna Bluetooth as a first, aimed at stronger connections.

Performance claims and broader market context for 18A

Intel says that at equal power, Panther Lake can offer more than 50% greater CPU and GPU performance versus Lunar Lake. At the same multithreaded performance, its power consumption would be about 30% lower than Arrow Lake’s, and single-thread throughput is estimated to be around 10% higher than Lunar Lake’s at similar power. At the SoC level, Intel quotes around 10% lower power versus Lunar Lake and roughly 40% lower versus Arrow Lake. It attributes the gains largely to the move to the more efficient, higher-density 18A node, along with additional E-core resources and platform-level efficiency tuning.

These claims should be treated with caution until independent testing lands, but early silicon is with partners. On the server side, Intel’s 18A push arrives in step with Clearwater Forest, a Xeon 6+ family based on Darkmont E-cores that will offer much higher core densities. Together, these releases show that 18A is a key driver for Intel in both the client and the data center. Competitively, the 50 TOPS NPU and new Xe3 graphics pit Panther Lake against AMD’s newest AI-enabled Ryzen chips, Qualcomm’s Snapdragon X series (which has just announced a higher NPU TOPS target), and Apple’s M-class systems, all of which aim to run AI workloads locally on new systems-on-chip with longer battery life.

The bottom line: Panther Lake is Intel’s play to reset the pace — new cores on a new node, a flexible tile strategy, and AI acceleration across the stack. If the performance-per-watt story pans out in shipping laptops, 18A’s arrival could be a true inflection point for the Windows AI PC era.