I fired up a $20 AI coding assistant inside my editor and plowed through about 24 work days’ worth of tasks in roughly 12 hours. It refactored crusty UI, squashed long-standing bugs and scaffolded new features at warp speed. But the deal came with a hidden cost: blunt usage throttles that left me stranded mid-run, opaque limits, and a productivity crash that only a more expensive plan could remedy.

How a $20 plan brought jaw-dropping speed

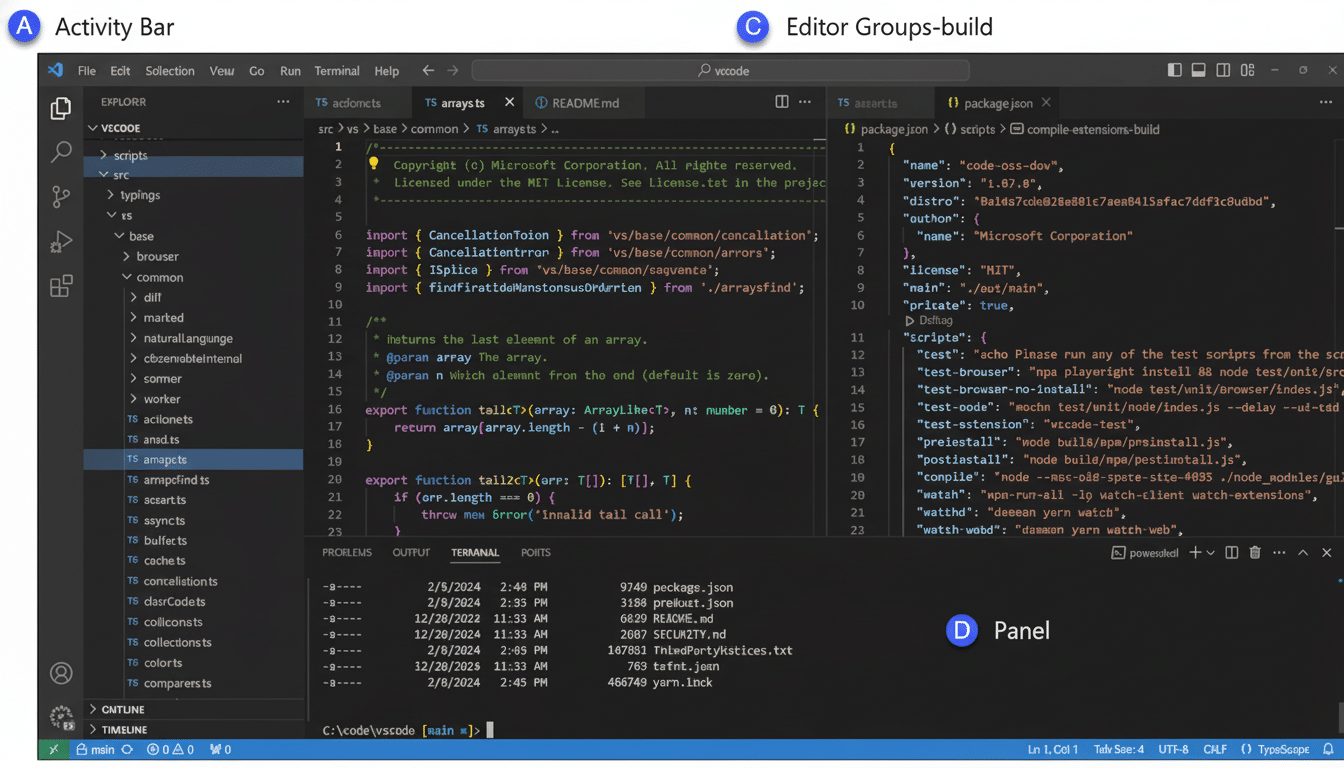

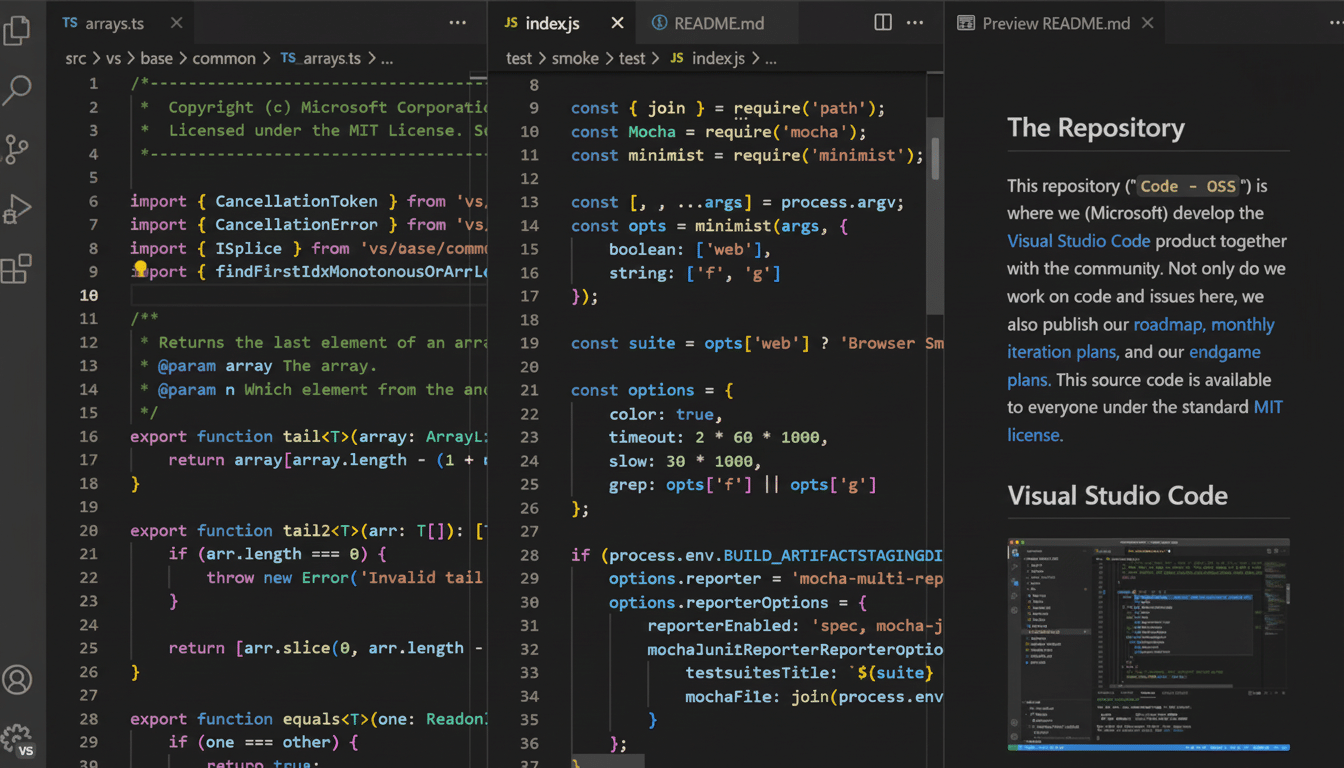

The tool was plugged into VS Code and had my repository as context, so it could reason across multiple files rather than isolated snippets. That context-awareness is where coding AIs become valuable. I handed off a full CSS refresh that I’d been dodging for months, and iterated through JavaScript fixes on a finicky sign-up flow. With targeted prompts and small diffs, the assistant generated clean, working code faster than I could check out a new branch.

The acceleration is in line with outside research. A GitHub study of developers found that participants completed a task 55 percent faster with an AI assistant. McKinsey’s analysis projects that generative AI could reduce software development effort by 20 to 45 percent, depending on the task type. In practice, the benefits come from skipping boilerplate, iterating on alternatives quickly, and offloading mechanical glue work so that human time goes to system design and verification.

That’s the upside. I cleaned up the UI, tightened validation, added settings export/import and prototyped a new monitoring module, all in half a day. On my own, that would have taken several sprints.
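The headline numbers can be sanity-checked with simple arithmetic. The sketch below assumes an 8-hour work day, which the figures imply but which is my assumption rather than something measured; the function name `speedup` is purely illustrative.

```python
# Back-of-the-envelope check of the speedup implied by the figures above.
# Assumption: one "work day" is 8 hours; adjust if your days differ.
HOURS_PER_WORK_DAY = 8


def speedup(work_days_of_output: float, hours_spent: float,
            hours_per_day: float = HOURS_PER_WORK_DAY) -> float:
    """Return how many times faster the work went than the manual estimate."""
    if hours_spent <= 0:
        raise ValueError("hours_spent must be positive")
    return (work_days_of_output * hours_per_day) / hours_spent


# 24 work days of output squeezed into 12 hours of wall-clock time.
print(speedup(24, 12))  # prints 16.0
```

Under that assumption, 24 work days is 192 hours of manual effort, and 192 divided by 12 gives the 16x multiplier.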

The big catch: throttles, opacity, and broken flow

Then came the meter. Without warning, the assistant would freeze halfway through an edit, leaving my code in a half-applied state. A timer popped up: wait a few dozen minutes before the next request. After another, heavier burst of usage, the wait stretched to more than an hour. Push harder still, and the cooldown grew to days, unless I signed up for a premium tier that cost around 10 times as much.

Two problems compound here. First, rate limits that trip mid-task interrupt developer flow and can leave incomplete work stranded. Second, the limits aren’t transparent. On many coding AIs, you can watch token budgets or request counts. Here, there was nothing resembling a meter. That opacity makes it impossible to plan sprints on the entry plan, and quietly nudges anyone serious toward higher-cost tiers.

And even when the tool did run, I paid for misfires. About half of the outputs were not useful, thanks to hallucinated APIs, a misread project layout or brittle patches that broke tests. That kind of waste is typical of current-generation models, and has to be factored into any ROI math.

Cheap vs premium: what the cost of productivity actually is

For hobbyists or weekend coders, $20 is a no-brainer — until the stopwatches come out.

Professionals who need sustained throughput stack more expensive tools: GitHub Copilot for inline completions, a reasoning-heavy assistant like Anthropic’s Claude for planning, and AI-native IDEs like Cursor or Windsurf for repo-scale refactors. The developers I’ve asked report monthly costs of $400 to $800 for these stacks, and consider it worth it because the time saved is worth more than even a junior hire would cost.
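To make that trade-off concrete, here is a minimal break-even sketch. The $400 and $800 figures come from the developers quoted above; the hourly rate is a hypothetical placeholder, not a number anyone reported to me, so swap in your own.

```python
# Break-even sketch: how many saved hours per month cover the tool bill?
# HYPOTHETICAL_HOURLY_RATE is an illustrative assumption, not a reported figure.
HYPOTHETICAL_HOURLY_RATE = 80.0  # dollars per developer hour


def break_even_hours(monthly_cost: float, hourly_rate: float) -> float:
    """Hours of developer time that must be saved each month to pay for the stack."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return monthly_cost / hourly_rate


for cost in (400, 800):
    hours = break_even_hours(cost, HYPOTHETICAL_HOURLY_RATE)
    print(f"${cost}/month breaks even at {hours:.1f} saved hours")
```

At the assumed rate, the low end of the range pays for itself after 5 saved hours a month and the high end after 10. Any honest version of this math should also discount the hours lost to unusable outputs.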

For software you ship as your day job, that bet often pays off. When you code in your spare time, unpredictable cutoffs at the entry tier can leave you high and dry exactly when you finally have time to code.

Oversight is still needed — and mistakes are expensive

None of this removes the need for a skilled human in the loop. The assistant was at its best when I kept each task atomic, constrained its scope and wrote a test for it. It broke down when I requested massive rewrites, or when an obscure domain rule came into play. That squares with what Stanford HAI and other academic groups have found: AI assistants can increase output, but defect rates go up if developers put too much faith in suggestions or skip review. Security work is particularly perilous; multiple studies show that assistants can write insecure code when not carefully directed.

The right stance is power tool, not autopilot. Keep diffs small. Use feature branches that can be rolled back. Gate merges behind linters, unit tests and code review. Maintain privacy and compliance by keeping secrets and sensitive data out of prompts. And never accept AI output as authoritative in safety-critical paths.
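One way to enforce the gating step is a small script that runs every check in order and refuses to proceed at the first failure. This is a sketch, not a prescribed setup: `run_gate` is a hypothetical helper, and the example commands are stand-ins that simply invoke the Python interpreter, so replace them with whatever linter and test runner your project actually uses.

```python
import subprocess
import sys
from typing import Sequence


def run_gate(checks: Sequence[Sequence[str]]) -> bool:
    """Run each check command in order; stop and report at the first failure."""
    for cmd in checks:
        result = subprocess.run(list(cmd))
        if result.returncode != 0:
            print(f"gate failed: {' '.join(cmd)}", file=sys.stderr)
            return False
    return True


# Placeholder checks (assumptions for illustration only). In a real project
# these would be your linter and unit-test commands.
EXAMPLE_CHECKS = [
    [sys.executable, "-c", "print('lint ok')"],
    [sys.executable, "-c", "print('tests ok')"],
]

print("merge allowed" if run_gate(EXAMPLE_CHECKS) else "merge blocked")
```

Human code review still sits on top of this; the script only guarantees that AI-generated diffs never skip the automated checks.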

What to consider before you try it

If you’re considering the $20 path, expect bursty sprints, not sustained marathons. Anticipate invisible ceilings, escalating cooldowns and, from time to time, mid-edit cutoffs. Protect your work: checkpoint frequently, save before long runs and assume some percentage of prompts will be throwaways. If uninterrupted flow is critical, plan on a higher tier or a complementary tool that exposes usage meters.
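Checkpointing is easy to automate. The sketch below assumes the project is a git repository and commits whatever has changed, so a mid-edit cutoff can be rolled back to a known state. The `checkpoint` helper and its injectable `git` parameter are hypothetical names of my own; only the underlying `git status --porcelain`, `git add -A` and `git commit -m` commands are standard.

```python
import subprocess
from typing import Callable, List


def _git(args: List[str]) -> str:
    """Run a git command in the current directory and return its stdout."""
    done = subprocess.run(["git", *args], check=True,
                          capture_output=True, text=True)
    return done.stdout


def checkpoint(message: str, git: Callable[[List[str]], str] = _git) -> bool:
    """Commit all current changes; return False if there was nothing to commit."""
    # `git status --porcelain` prints nothing when the working tree is clean.
    if not git(["status", "--porcelain"]).strip():
        return False
    git(["add", "-A"])
    git(["commit", "-m", message])
    return True
```

Calling `checkpoint("before AI refactor")` on a feature branch right before a long run gives you a commit to reset to if the assistant stalls halfway through an edit.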

My verdict is pragmatic. The $20 sidekick was a 16x force multiplier, right up until the throttle kicked in. For teams that just want to ship, premium access is the actual product: reliable capacity, no gatekeeping and time saved that more than pays for the invoice. For everyone else, the entry plan is like a jetpack with an incredibly tiny fuel tank. It has the power to take you over a mountain, but it can just as easily deposit you unceremoniously on a ledge if you don’t keep an eye on the gauge.