AI has gone from a curiosity to a must-have in the software toolchain. According to GitHub, most developers now use AI assistance in some form, and a Microsoft–GitHub study found that participants completed coding tasks up to 55% faster with Copilot. McKinsey estimates that generative AI could automate or accelerate 20% to 45% of software engineering work, changing how teams specify, plan, build, test and run software.

The big wins don’t come from sprinkle-on copilots; they come from rethinking the pipeline so that AI improves quality, compliance and speed together. Financial-services leaders at firms such as Allianz Global Investors, Lloyds Banking Group, Hargreaves Lansdown, SEB and Global Payments have started to demonstrate what this looks like in practice. Here are five tactics for translating AI’s potential into tangible results.

Codify Compliance, Using AI and Guardrails

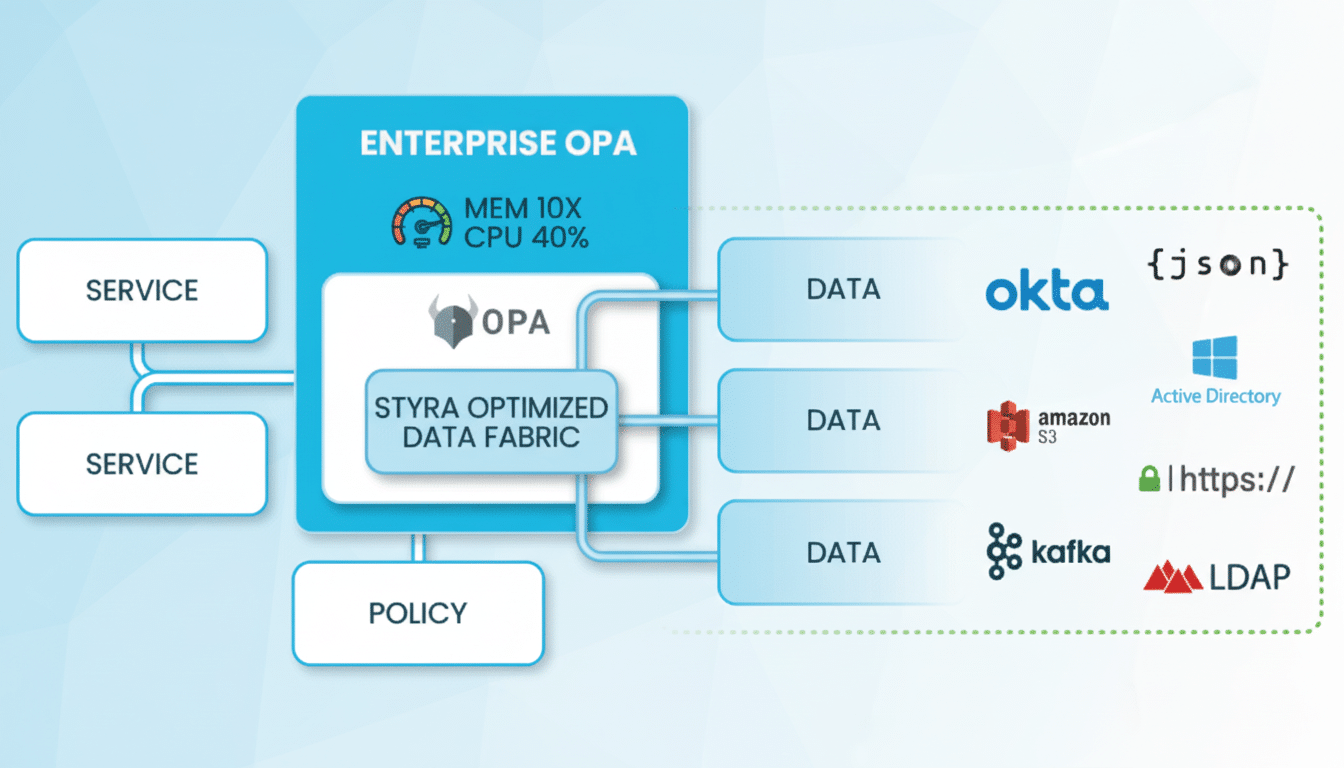

Stop treating compliance as a lengthy checklist applied after the fact. Embed standards into the developer workflow so compliance feels natural, not adversarial. Tools such as Open Policy Agent (OPA) and policy-as-code patterns can check configuration, infrastructure and pipeline actions in real time and steer developers toward compliant decisions.

Allianz Global Investors implemented policy engines to accommodate security and audit requirements while allowing for developer autonomy—providing soft “you might want to fix this” warnings instead of hard blocks. In regulated environments, these guardrails create audit-ready evidence and eliminate manual gates that impede releases. Combine that with automated testing, SAST/DAST and code-coverage targets (as Hargreaves Lansdown has done) to ship faster with fewer exceptions.
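To make the warn-versus-block idea concrete, here is a minimal Python sketch of a policy check. It is not OPA or Rego, and it is not how any of the firms above implement their guardrails; the rule names, the config keys (`storage_encrypted`, `tags`) and the `evaluate` function are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    name: str
    severity: str                     # "warn" = soft nudge, "deny" = hard block
    violated: Callable[[dict], bool]  # returns True when the config breaks the rule
    message: str


# Hypothetical rules; a real team would derive these from its own standards.
RULES = [
    Rule(
        name="encryption-at-rest",
        severity="deny",
        violated=lambda cfg: not cfg.get("storage_encrypted", False),
        message="Storage must be encrypted at rest.",
    ),
    Rule(
        name="owner-tag",
        severity="warn",
        violated=lambda cfg: "owner" not in cfg.get("tags", {}),
        message="You might want to add an 'owner' tag for audit traceability.",
    ),
]


def evaluate(config: dict, rules=RULES) -> dict:
    """Check a config against every rule and return audit-friendly findings."""
    findings = [
        {"rule": r.name, "severity": r.severity, "message": r.message}
        for r in rules
        if r.violated(config)
    ]
    # Only "deny" findings stop the pipeline; warnings are surfaced but pass.
    allowed = not any(f["severity"] == "deny" for f in findings)
    return {"allowed": allowed, "findings": findings}


if __name__ == "__main__":
    result = evaluate({"storage_encrypted": True, "tags": {}})
    print(result["allowed"], [f["rule"] for f in result["findings"]])
```

The returned findings list doubles as evidence: storing it alongside each pipeline run gives auditors a record of what was checked and what was waived.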

Extend AI Throughout the Software Lifecycle

Don’t confine AI to code completion. Use it for backlog refinement, test generation, code review, documentation, change analysis and incident response. Teams that apply AI consistently across the whole pipeline typically see their DORA metrics (lead time, change failure rate, deployment frequency and mean time to restore) improve because rework declines and feedback cycles shorten.
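For teams that want to see whether those metrics actually move, the four DORA measures can be computed from basic deployment records. The sketch below is one simple interpretation, not an official DORA formula; the `Deployment` record and its field names are assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime            # when the change was committed
    deployed_at: datetime             # when it reached production
    failed: bool = False              # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def dora_metrics(deployments: list[Deployment], window_days: int) -> dict:
    """Summarize the four DORA metrics over a reporting window."""
    if not deployments or window_days <= 0:
        raise ValueError("need at least one deployment and a positive window")
    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    failures = [d for d in deployments if d.failed]
    restores = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        "median_lead_time_hours": median(lt.total_seconds() for lt in lead_times) / 3600,
        "deploys_per_day": len(deployments) / window_days,
        "change_failure_rate": len(failures) / len(deployments),
        # None when no failed deployment has a recorded restore time.
        "mean_time_to_restore_hours": (
            sum(r.total_seconds() for r in restores) / len(restores) / 3600
            if restores
            else None
        ),
    }


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 9, 0)
    hour, day = timedelta(hours=1), timedelta(days=1)
    sample = [
        Deployment(t0, t0 + 2 * hour),
        Deployment(t0, t0 + 4 * hour, failed=True, restored_at=t0 + 5 * hour),
        Deployment(t0 + day, t0 + day + 6 * hour),
        Deployment(t0 + 2 * day, t0 + 2 * day + 8 * hour),
    ]
    print(dora_metrics(sample, window_days=2))
```

Capturing a baseline before rolling out AI across the lifecycle makes the later comparison meaningful.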

Lloyds Banking Group has prioritized modernization so that AI can speed up every part of development, not just keystrokes in an IDE. Generate boundary and mutation tests on demand with AI, draft ADRs and architecture docs from code, and flag risky diffs before merge. When you are on call, have AI suggest runbooks and visualize alerts. The unofficial rule: AI drafts; humans have final say.

Create an AI-Ready Platform and Golden Paths

AI’s impact is limited by platform friction. Platform engineering, with curated “golden paths” for building, testing, deploying and observing software, gives AI tools consistent environments and reduces toil. Internal developer portals such as Backstage can publish these paths, expose reusable templates and surface AI-assisted automations where developers already work.

Modernization efforts such as Lloyds’ infrastructure overhaul, or Global Payments’ focus on building systems that handle greater volume with stronger security, illustrate the importance of consistent, approved stacks. Harden your base images, scale idle preview environments down to zero so they stop burning minutes, and provide managed access to model endpoints. Where paved roads exist, AI agents can orchestrate workflows end to end with fewer fragile edges.

Govern AI Code Generation Carefully and Consistently

AI can generate thousands of lines at a click; your security and compliance checks have to run just as fast. Use the NIST Secure Software Development Framework for baseline controls and apply the NIST AI Risk Management Framework to manage model use. Use the OWASP Top 10 for LLM Applications to mitigate prompt injection, sensitive information disclosure and insecure output handling.

Bake these controls into your pipeline: license checks on generated code, secret scanning, dependency and container scanning, and SBOMs (SPDX or CycloneDX). Use SLSA attestations to assert provenance and tamper-evidence. Log prompts and model responses for sensitive operations, and review them with security to harden prompts and tooling. SEB has described developers as “conductors of agents,” which underscores the need for human-in-the-loop policy approval and automatic rollback when AI-suggested changes fail guardrails.
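As one example of such a gate, a license check can read the SBOM your build already produces. The sketch below assumes a CycloneDX-style JSON document that has been loaded into a dict, where each entry in `components` carries a `licenses` list of `{"license": {"id": ...}}` objects. The allow-list and the `license_violations` function are hypothetical, and the sketch deliberately ignores license expressions and free-text license names, treating them as undeclared so that a human reviews them.

```python
# Hypothetical allow-list; your legal or compliance team would own the real one.
ALLOWED_LICENSES = {"MIT", "Apache-2.0", "BSD-3-Clause"}


def license_violations(sbom: dict, allowed=ALLOWED_LICENSES) -> list[str]:
    """Return one message per component whose license is missing or not allowed."""
    violations = []
    for comp in sbom.get("components", []):
        # Collect SPDX ids only; expressions and free-text names are not parsed here.
        ids = {
            entry.get("license", {}).get("id")
            for entry in comp.get("licenses", [])
        }
        ids.discard(None)
        label = f'{comp.get("name", "?")}@{comp.get("version", "?")}'
        if not ids:
            violations.append(f"{label}: no license declared")
        elif not ids <= allowed:
            violations.append(f"{label}: {', '.join(sorted(ids - allowed))}")
    return violations


if __name__ == "__main__":
    sbom = {
        "bomFormat": "CycloneDX",
        "components": [
            {"name": "requests", "version": "2.31.0",
             "licenses": [{"license": {"id": "Apache-2.0"}}]},
            {"name": "gpl-lib", "version": "1.0",
             "licenses": [{"license": {"id": "GPL-3.0-only"}}]},
            {"name": "mystery", "version": "0.1"},
        ],
    }
    for problem in license_violations(sbom):
        print(problem)
```

A pipeline step could fail the build when the returned list is non-empty, or downgrade it to a warning while teams clean up existing dependencies.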

Finally, add an “evals” layer: lightweight, reproducible tests that measure AI output quality in your own domain (your APIs, frameworks and standards). This guards against silent regressions as models or prompts change.
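An evals suite can start very small. The sketch below is one possible shape, assuming the model is wrapped in a plain `generate(prompt) -> str` callable; `EvalCase`, `run_evals`, the two sample cases and the canned `fake_model` stub are all hypothetical stand-ins so the example runs without any model endpoint.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class EvalCase:
    name: str
    prompt: str
    check: Callable[[str], bool]  # returns True when the output is acceptable


def run_evals(generate: Callable[[str], str], cases: list[EvalCase]) -> dict:
    """Run every case through the model wrapper and report the pass rate."""
    if not cases:
        raise ValueError("no eval cases defined")
    failed = [c.name for c in cases if not c.check(generate(c.prompt))]
    return {"pass_rate": (len(cases) - len(failed)) / len(cases), "failed": failed}


# Stub standing in for a real model call, so the sketch is deterministic.
def fake_model(prompt: str) -> str:
    canned = {
        "Write a SQL query by user id": "SELECT * FROM users WHERE id = %s",
        "Log a login event": "logger.info('login user=%s password=%s', user, password)",
    }
    return canned.get(prompt, "")


# Hypothetical domain checks: crude string tests, good enough to catch drift.
CASES = [
    EvalCase(
        name="parameterized-sql",
        prompt="Write a SQL query by user id",
        check=lambda out: "%s" in out and "+" not in out,
    ),
    EvalCase(
        name="no-secrets-in-logs",
        prompt="Log a login event",
        check=lambda out: "password" not in out.lower(),
    ),
]

if __name__ == "__main__":
    print(run_evals(fake_model, CASES))
```

Running the same cases whenever a model version or prompt template changes, and failing the change if the pass rate drops below an agreed threshold, is what turns these checks into a regression guard.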

Upskill Teams and Transform Engineering Culture

The fastest route to unlocking value is building your organization’s fluency in AI. Run internal demos, brown-bag sessions and prompt clinics. Global Payments’ “university” model of short, peer-led show-and-tells helps teams build momentum and spread practical patterns. AI literacy should be treated like test-driven development: a skill expected of every engineer, not something only specialists do.

Involve your security, audit and risk teams so they can “fight fire with fire,” employing AI to review logs, policies and evidence. Let teams experiment with “vibe coding” and agentic patterns, but keep quality high by insisting on testing and thorough code reviews, especially for newer developers. Track progress with developer-experience surveys, PR cycle time and escaped-defect rates, not with how many people are using your tools.

The lesson: AI pays off, but only when it’s embedded, governed and taught. Combine policy-as-code, lifecycle-wide automation, solid platforms and continuous upskilling, and you’ll turn AI from a promising helper into a compounding advantage.