Leaders of the House Oversight Committee have called on the chief executives of Discord, Twitch and Reddit to testify about how their platforms may be used to spread extremism that fuels real-world violence. Lawmakers say they want increasingly granular insight into content moderation, recommendation systems and cooperation with law enforcement when threats move from screens into the real world.

Why is Congress targeting Discord, Twitch and Reddit?

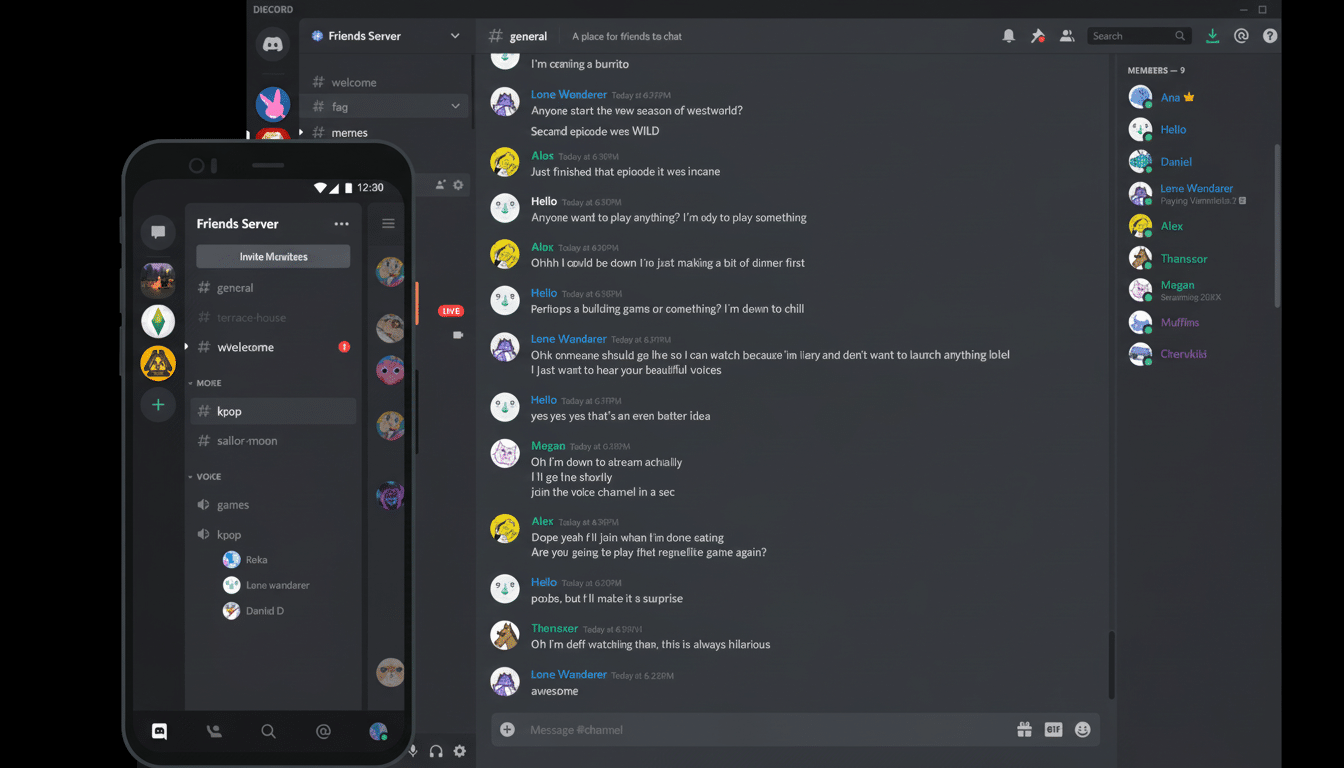

Each platform occupies a different choke point in the modern attention pipeline. Discord houses sprawling, semi-public networks of servers where moderation is decentralized and community norms vary wildly. Twitch broadcasts live video to huge audiences, which makes fast response a high-stakes requirement. Reddit organizes discussion into thousands of topic-specific communities, known as subreddits, where volunteer moderators apply local rules on top of overarching site-wide policies. The panel also contacted leaders at PC gaming marketplace Steam, signaling a focus that could extend to the rest of gaming and its chat ecosystems.

Lawmakers are reacting to a trend that security experts have long warned about: radicalization that brews in fringe spaces and spreads into mainstream feeds. Past incidents illustrate the stakes. The Buffalo supermarket shooter live-streamed his attack on Twitch; the stream was taken down within minutes, but copies rapidly spread to other sites. Discord servers used as hubs for extremist organizing were exposed after the Charlottesville rally, and journals belonging to the Buffalo shooter were later found on private Discord servers. Reddit, for its part, has banned communities that celebrated violence and used quarantines to reduce the reach of borderline content, but enforcement at scale remains a work in progress.

The catalyst and the controversy behind the hearing

Oversight Chair James Comer cited the killing of conservative activist Charlie Kirk, as well as other recent incidents of political violence, in calling for the hearing, insisting that Congress must investigate platforms used by violent actors. A 22-year-old suspect has been arrested in Kirk’s killing. Investigators are looking into reported messages left on Discord at the time of the incident, Channel NewsAsia reported, and some media outlets have reported that bullets used in the attack were engraved with meme-like references to video games.

The companies have begun staking out their positions. In a statement, a Discord spokesman said the company frequently works with safety-minded lawmakers and values ongoing conversation. Reddit said it is investigating whether there’s a connection between the incident and its site but has not found evidence the suspect was an active Reddit user, and it pointed to its rules against hateful content and content that incites violence. Twitch usually cites its layered approach (proactive detection, user reports and quick takedowns) while recognizing that real-time streams introduce special risks.

What lawmakers are seeking from tech platforms

- How recommendation algorithms, trending tools and notifications can speed the spread of extremist content, and what guardrails counter that growth.

- How trust-and-safety operations function: how many people staff them, how quickly they respond, what paths exist for escalating decisions and how often content decisions are reversed on appeal.

- Access for third-party researchers to study platform harms in ways that respect privacy and security.

- Protocols for sharing data with law enforcement to avert imminent threats, and how platforms weigh those requests against civil liberties and user privacy.

- Protections for minors, particularly on chat and live-video services where grooming, doxxing and other forms of intimidation can spiral rapidly.

What the evidence indicates so far about extremism

Homeland security officials and academic researchers have long warned that lone actors frequently radicalize online before they become violent. The Anti-Defamation League has released annual surveys showing that harassment is rampant in online gaming spaces, with many players reporting exposure to extremist content through voice and text chat. Government watchdogs have warned that performance-oriented algorithms can magnify sensational content, even if platforms do not mean to boost extremism. Those findings do not pin blame on any one service, but they do highlight how quickly dangerous material can cascade across closely interconnected platforms.

Meanwhile, companies point to measurable progress: automated detection of violent threats, broader bans on extremist symbols, stronger age gates and more frequent transparency reporting. In the wake of the Buffalo shooting, for instance, Twitch’s swift enforcement proved to be a success story and a cautionary tale at once: quick removal on one platform could not prevent the footage from re-emerging elsewhere.

Potential outcomes and policy risks for online platforms

The hearing could help shape legislative proposals for tightening reporting rules around imminent threats, establishing minimum standards for risk assessments or mandating more robust transparency regarding recommendation systems. Any effort that comes near Section 230 or encrypted communications is sure to provoke furious debate over speech, progress and privacy. For the platforms, the short-term risk is reputational and operational: tightened commitments to proactive moderation, more resources for human review and new product constraints designed to limit virality.

For users and developers, the outcome will hinge on a delicate balance. Tougher guardrails could curb harm but also sweep up legitimate political expression if rules are vague or overbroad. That’s why the specifics of how platforms define extremist content, measure success and open their systems to outside scrutiny will matter more than any individual headline moment. The Oversight Committee is seeking those details. And now the CEOs of Discord, Twitch and Reddit can expect to answer for them, on the record.