Lambda, the AI infrastructure company that’s perhaps best known for its on-demand GPU compute service, is preparing to launch its data training solution and has announced a pre-funding round that values the company at $2.2 billion. The company has hired Morgan Stanley, J.P. Morgan and Citi to advise it on a potential listing, which would make Lambda one of the few specialized GPU cloud players to explore the public markets since the debut of one of its competitors.

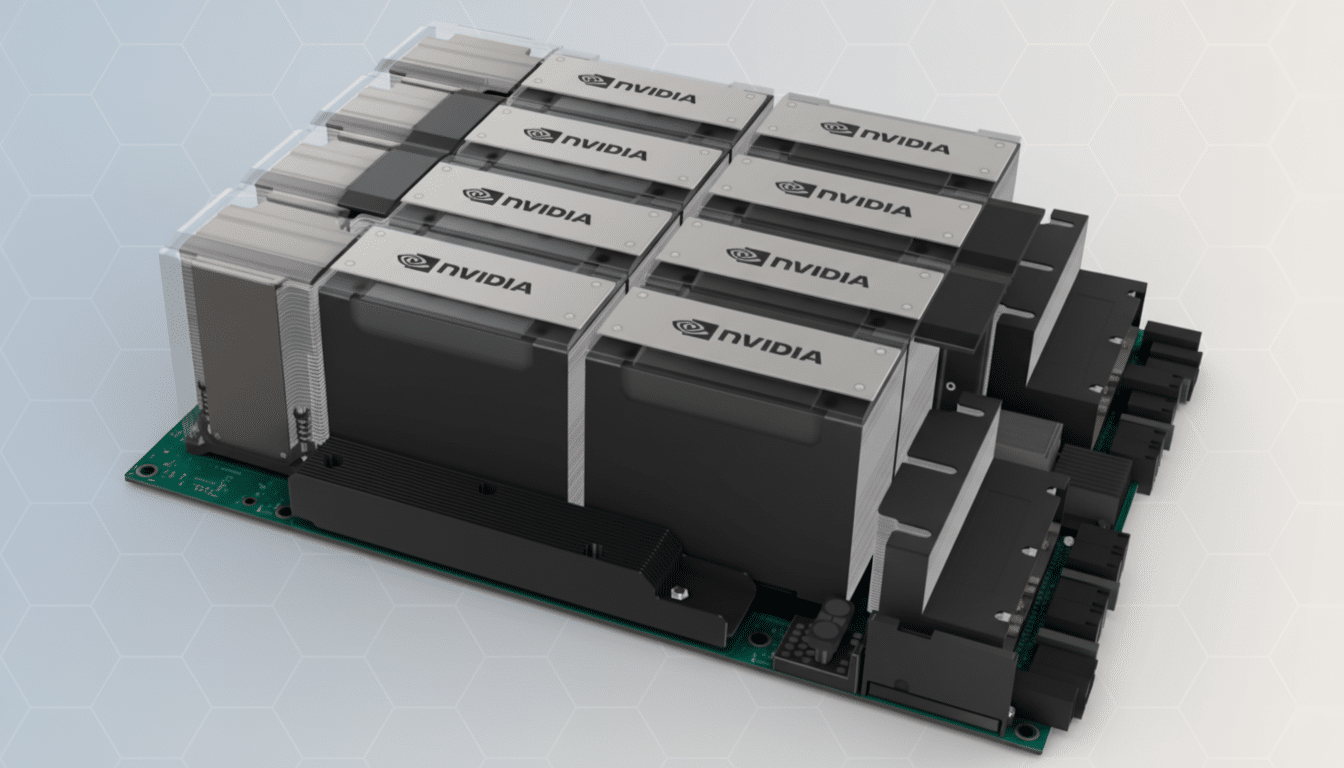

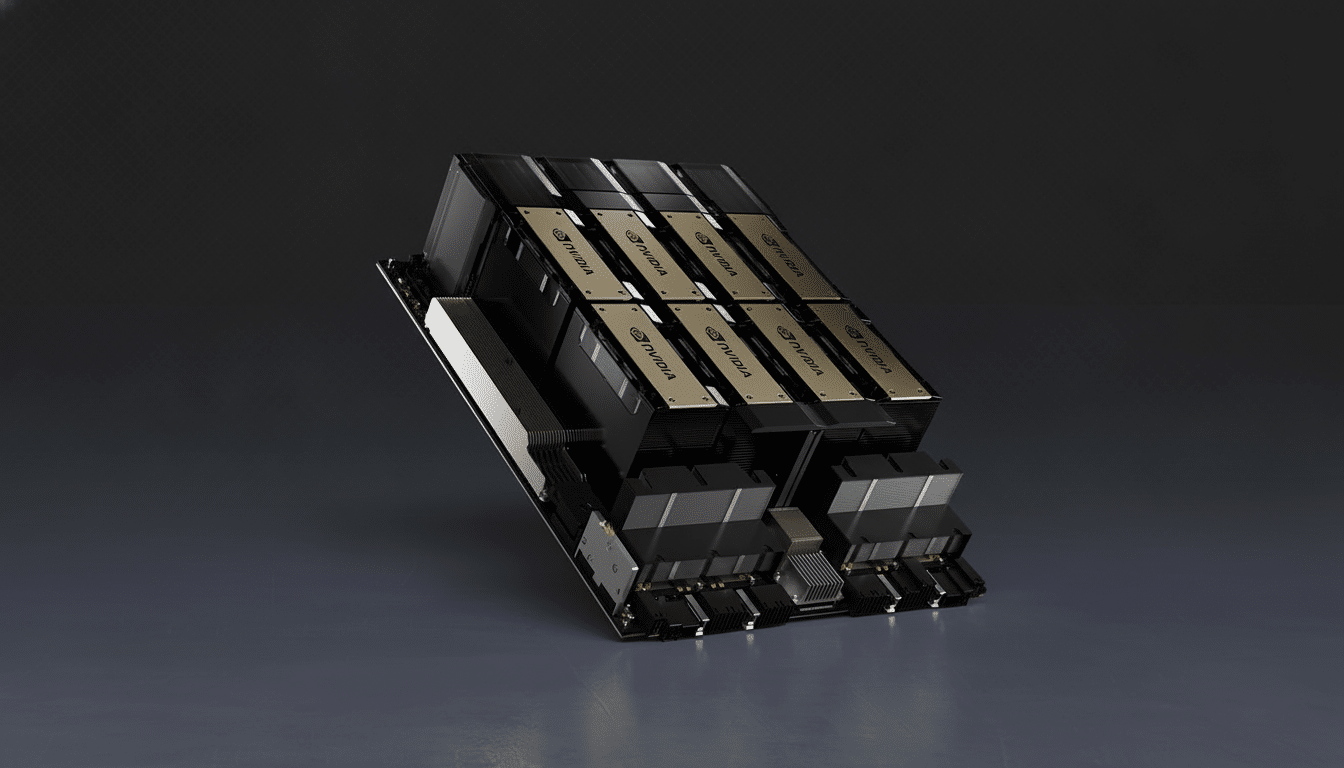

A listing would be a turning point for a company built on what remains a scarce resource: top-flight accelerators for training and serving large AI models. With demand for H100-class capacity still outpacing supply in several regions, and allocation problems possible in the future, a public offering would give Lambda the financial firepower and visibility it needs to secure long-term allocations of key chips, expand its data center footprint and push deeper into the enterprise.

Why a GPU cloud needs an IPO

AI infrastructure is brutally capital-intensive. Building clusters that can keep up with modern training workloads requires dense networking, specialized storage and multi-megawatt power, and the accelerators have to be committed to in advance. Raising equity in the public markets can reduce the cost of financing, support prepayments to chip vendors and underwrite rapid expansion into new markets where software developers and enterprises are lining up for capacity.

The market has also become receptive to specialty providers. CoreWeave's public market entry set a new comparable for investors looking at GPU clouds outside the hyperscaler trio. As organizations weigh faster access and more tailored pricing against the long list of services the hyperscalers offer, dedicated GPU clouds have found a niche serving the most compute-hungry workloads.

What Lambda provides (and how it’s different)

Lambda built an early fanbase with developer-friendly tooling and hardware, which it also built into its cloud: ready-to-train environments, popular frameworks preinstalled, and a choice of bare-metal or managed infrastructure for teams that need to go from prototype to large-scale runs with minimal stack wrestling. The company focuses on high-performance networking and storage for multi-GPU training, coupled with flexible purchasing options such as on-demand and reserved capacity.

That positioning has appealed to research labs and startups, especially those that prioritize time-to-train over the vast catalogs of general-purpose clouds. The pitch includes reliable access to current-generation GPUs, transparent pricing, and a stack built for AI workloads rather than one adapted from legacy enterprise compute.

Funding, backers and bankers

According to Crunchbase, Lambda has raised over $1.7 billion in equity and debt financing. Investors include Nvidia, Alumni Ventures and Andra Capital, among others. Its most recent round was a $480 million Series D, money that probably went toward expanding capacity and toward working capital to lock down accelerator supply.

The heavyweight lineup of lead underwriters (Morgan Stanley, J.P. Morgan and Citi) suggests Lambda is pursuing a traditional IPO rather than an alternative such as a direct listing. That lineup is standard for capital-intensive tech issuers and signals an intention to appeal to a wide base of institutional investors who are familiar with infrastructure economics.

Competitive pressures and risks

Competition is fierce on two fronts. Specialized competitors like CoreWeave, Crusoe Cloud and Voltage Park are racing to add capacity and lock in long-term customer commitments. Meanwhile, the hyperscalers (Amazon Web Services, Microsoft Azure, Google Cloud and Oracle Cloud) package GPUs with a range of platform services and enterprise relationships, a powerful combination for large buyers.

Dependence on one accelerator supplier is a structural risk for the industry. Nvidia remains the primary source of training-class GPUs, with a few new chips from AMD and custom silicon from the big AI labs expanding the options. Pricing pressure is another variable: spot rates for GPUs have moved with supply, and margins depend on utilization, power contracts, and the mix of reserved versus on-demand use. Customer concentration and customers' multi-cloud strategies may also affect how durable revenue is.

What to watch if Lambda files

An S-1 would answer questions such as how quickly revenue is growing, how gross margins trend as clusters mature, and how large the purchase obligations for accelerators and data center commitments are. Investors will also look for visibility into utilization, the percentage of capacity under contract, churn, and cohort behavior among AI-native startups versus incumbent enterprises.

Just as crucial will be disclosures on supply: any long-term deals with chip vendors, networking partners and colocation providers, as well as how Lambda plans to diversify across geographies and power sources. If the company can demonstrate predictable access to the latest GPUs and good unit economics at scale, it could become a durable public pure-play on AI infrastructure, one that supplements rather than directly challenges the hyperscale clouds.

Lambda declined to comment on the reported plans. The bank mandates were first reported by The Information; funding figures are based on Crunchbase data.