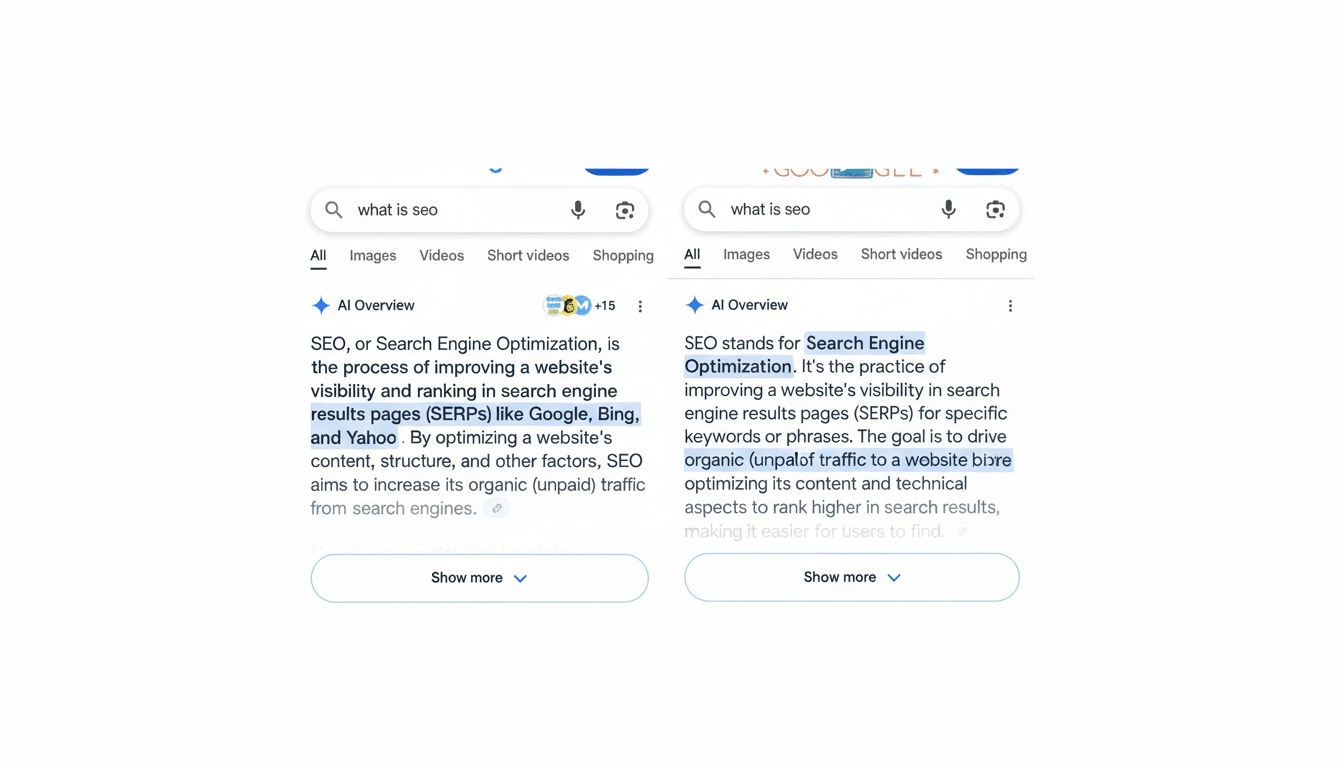

Fact-checking the summaries in Google’s AI Overviews just got noticeably easier. Google is rolling out on-hover source pop-ups in both AI Overviews and AI Mode, letting users jump straight to the underlying articles tied to each paragraph of the AI-generated answer. It’s a small interface change with big implications for trust, transparency, and traffic attribution across the web.

Why This Matters for Trust and Transparency Online

AI can synthesize information at speed, but it can also miss context or misinterpret a claim. After the debut of AI Overviews, widely shared screenshots of faulty guidance highlighted a central tension: convenience versus verifiability. By surfacing source links precisely where the AI draws on them, Google is reducing the friction of checking the originals—critical for topics where accuracy is non-negotiable, from health advice to financial guidance.

Google has previously shown a set of sources in a side panel, but those lists didn’t clarify which source informed which part of a summary. The new pop-ups address that gap by mapping citations to specific sentences or sections, creating a clearer audit trail for readers.

How the New Source Pop-Ups Work in AI Overviews

On desktop, run a search that triggers an AI Overview at the top of results. Within each paragraph or bullet of the overview, you’ll see inline links indicating citations. Hover over any of those links and a small window appears showing the original source with a short description. One click opens the source site for a deeper look. Switch to AI Mode and you’ll find the same behavior: hover on a citation inside the AI response to see the exact sources consulted for that section.

The shift from a static list to contextual pop-ups isn’t just cosmetic. According to Google’s Search leadership, early testing indicates the interface invites more interaction and makes it easier for people to reach high-quality content. In practice, that means fewer moments of blind trust and more direct engagement with the publishers whose work underpins the summaries.

What It Means for Search Users and Publishers Today

For searchers, the benefit is speed and confidence: it’s now simpler to check whether a medical tidbit came from a peer-reviewed health resource or a general-interest blog, or whether a technical tip cites an official developer doc. For publishers, clearer attributions should translate into better click-through opportunities when their reporting or research informs an AI answer.

This also dovetails with industry pressure for more transparent AI systems. Researchers and policy groups have urged stronger provenance signals so users can understand where information originates. While pop-ups don’t solve deeper issues like model hallucinations or outdated sources, they are a practical step toward accountable summarization.

Real-World Use Case: Verifying Sources at a Glance

Consider a search such as “how to safely clean a cast-iron pan.” An AI Overview might present a short set of steps followed by small citation links. Hovering on a link reveals whether the advice is drawn from a culinary school, a cookware manufacturer, or a community forum. If the answer seems off (say it recommends a harsh detergent), you can click through to the cited source to confirm the context or find more authoritative guidance.

Tips to Verify AI Overviews Faster and More Safely

Scan the pop-ups for authoritativeness first. Government portals, academic journals, medical associations, and original manufacturer documents typically carry more weight than anonymous posts or lightly sourced blogs. If multiple citations appear, compare a couple to see whether they agree on the key facts or if the AI has blended conflicting advice.

Check publication dates on the source pages to avoid acting on stale information. For fast-moving topics such as software updates, tax rules, and product recalls, outdated pages are a common source of AI misfires. When the stakes are high, consult multiple independent sources before following an AI-summarized recommendation.
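For readers who like to make a checklist explicit, the two checks above (source authority and freshness) can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the URLs and dates are typed in by hand from the pop-ups and source pages, the names `TRUSTED_SUFFIXES`, `is_authoritative`, `is_stale`, and `triage` are hypothetical, and treating a `.gov` or `.edu` domain as authoritative is a crude stand-in for real editorial judgment.

```python
from datetime import date
from urllib.parse import urlparse

# Assumption: a domain suffix is a rough proxy for authority. Adjust to taste.
TRUSTED_SUFFIXES = (".gov", ".edu")


def is_authoritative(url: str) -> bool:
    """Return True if the URL's host ends with one of the trusted suffixes."""
    host = urlparse(url).hostname or ""
    return host.endswith(TRUSTED_SUFFIXES)


def is_stale(published: date, today: date, max_age_days: int = 365) -> bool:
    """Return True if the page is older than max_age_days (an arbitrary cutoff)."""
    return (today - published).days > max_age_days


def triage(sources, today):
    """Given (url, published_date) pairs, keep the URLs that pass both checks."""
    return [
        url
        for url, published in sources
        if is_authoritative(url) and not is_stale(published, today)
    ]


# Hand-entered example data; these URLs are placeholders, not real citations.
cited = [
    ("https://www.example.gov/cookware-care", date(2025, 1, 1)),
    ("https://blog.example.com/cast-iron", date(2025, 1, 1)),
    ("https://www.example.edu/old-guide", date(2020, 1, 1)),
]
read_first = triage(cited, today=date(2025, 6, 1))
```

A source that fails this filter is not necessarily wrong; the sketch only suggests which citations to open first when time is short.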

Limitations to Note and Key Areas to Watch Next

Coverage depends on whether an AI Overview or AI Mode appears for your query, and the experience is currently designed around hovering on desktop. Some queries may still show generic source groupings where the tie between a sentence and a citation isn’t precise. And as with any generative system, summaries can compress nuance or drop caveats from the originals.

Still, the direction is clear: Google is pushing toward more attributable, inspectable AI answers. Expect further iterations, potentially including richer provenance cues, expanded mobile support, and clearer signals when sources disagree. Until then, these new source pop-ups give searchers a faster route to the truth—and give publishers a more visible seat in the AI-driven search experience.