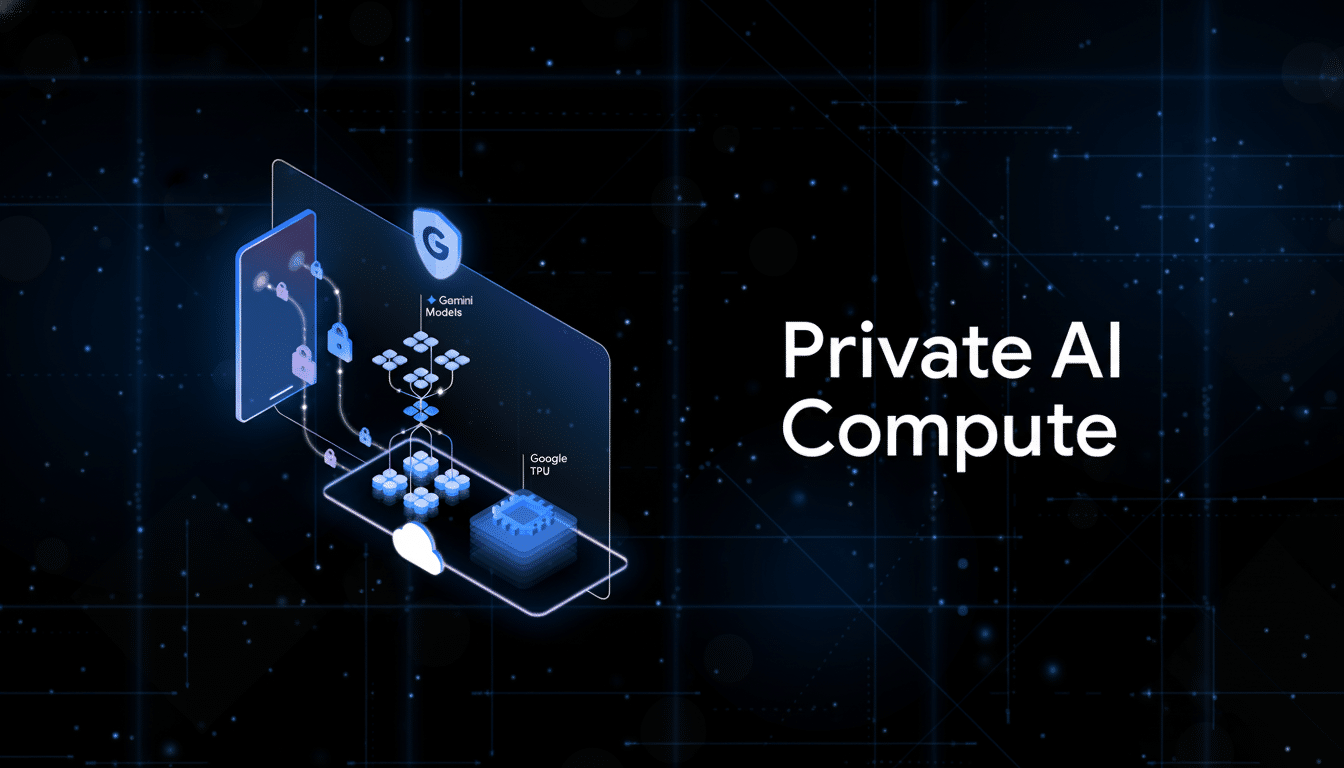

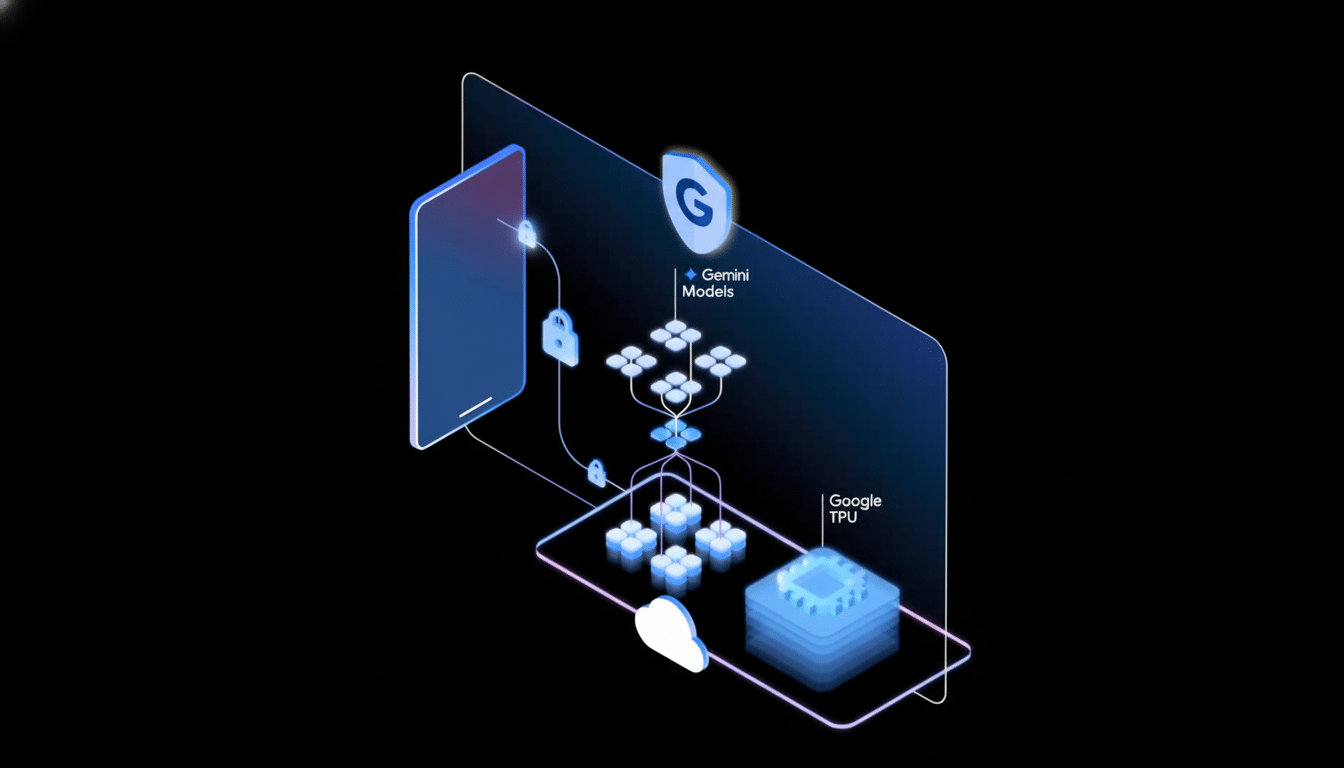

Google has unveiled Private AI Compute, a new way of running its most powerful Gemini models in the cloud while keeping sensitive data hidden from both cloud operators and outsiders.

The company claims the system combines cloud-scale AI with the privacy assurances users expect from on-device processing.

At its core, Private AI Compute routes requests through hardware-isolated enclaves that Google says are sealed, auditable, and end-to-end encrypted. The pitch is simple but powerful: your prompts, documents, and recordings can be processed by state-of-the-art models without Google engineers ever seeing their contents, let alone storing or sharing them.

How Private AI Compute works to protect sensitive data

Private AI Compute is built on the principles of confidential computing. Requests are encrypted on the device and sent to an attested enclave, whose code identity is verified before any data is decrypted. According to Google, the enclaves run with locked-down memory, ephemeral keys, and only narrow interfaces with no path for writing data out, so data cannot be written anywhere persistent or inspected by an operator.
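The attestation step is what separates this model from ordinary encryption in transit: the device releases data only once it has evidence of which code the enclave is running. The Python sketch below illustrates that gate under heavy simplifying assumptions. `AttestationReport`, `enclave_attest`, `verify_attestation`, and `HARDWARE_ROOT_KEY` are hypothetical names, not Google APIs, and a shared HMAC key stands in for the hardware-backed asymmetric signatures that real attestation relies on.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass

# Hypothetical stand-in for a hardware root of trust. Real attestation uses
# asymmetric signatures chained to a vendor certificate, not a shared HMAC key.
HARDWARE_ROOT_KEY = secrets.token_bytes(32)


@dataclass(frozen=True)
class AttestationReport:
    measurement: bytes  # SHA-256 digest of the enclave's software image
    nonce: bytes        # client-chosen challenge, so old reports can't be replayed
    signature: bytes    # simulated "hardware" signature over measurement + nonce


def measure(image: bytes) -> bytes:
    """Hash the software image the enclave is running."""
    return hashlib.sha256(image).digest()


def enclave_attest(image: bytes, nonce: bytes) -> AttestationReport:
    """Simulated enclave: report which code it runs, signed by the 'hardware'."""
    m = measure(image)
    sig = hmac.new(HARDWARE_ROOT_KEY, m + nonce, hashlib.sha256).digest()
    return AttestationReport(m, nonce, sig)


def verify_attestation(report: AttestationReport, nonce: bytes,
                       allowed: set[bytes]) -> bool:
    """Client side: trust the enclave only if every check passes."""
    expected = hmac.new(HARDWARE_ROOT_KEY, report.measurement + report.nonce,
                        hashlib.sha256).digest()
    return (
        hmac.compare_digest(expected, report.signature)  # signed by genuine hardware
        and hmac.compare_digest(report.nonce, nonce)      # fresh, not replayed
        and report.measurement in allowed                 # running approved code
    )


if __name__ == "__main__":
    approved_image = b"enclave-image-v1"
    allowlist = {measure(approved_image)}

    nonce = secrets.token_bytes(16)
    good = enclave_attest(approved_image, nonce)
    print(verify_attestation(good, nonce, allowlist))      # True

    modified = enclave_attest(b"enclave-image-v1-with-logging", nonce)
    print(verify_attestation(modified, nonce, allowlist))  # False
```

The nonce check matters because an attacker who could replay an old, valid report might otherwise pass off a swapped-out enclave as an approved one.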

Under the hood, Google cites its own Secure AI Framework and custom TPUs, along with new Titanium Intelligence Enclaves for isolation and attestation. This differs from traditional cloud inference, in which data may be exposed, even if only momentarily, to higher-level platform services. Here, the decrypted payload exists only inside the enclave's secure memory, and the output is re-encrypted before it leaves.

The flow might go something like this: a phone that needs a summary of a long recording encrypts the transcript locally and sends the ciphertext to an attested enclave. The enclave, whose software image has been verified, processes the request with a Gemini model and returns an encrypted response. Google says logging is kept to a minimum and no sensitive content is retained, mirroring the privacy profile of on-device processing.
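That round trip can be sketched end to end in Python. This is an illustration under stated assumptions, not Google's protocol: the session key is assumed to have already been agreed with an attested enclave, `summarize` is a placeholder standing in for Gemini inference, and the cipher is a toy built from the standard library (HMAC-SHA256 in counter mode as a keystream, authenticated with encrypt-then-MAC) rather than a vetted AEAD such as AES-GCM. All function names are hypothetical.

```python
import hashlib
import hmac
import secrets

# Toy authenticated encryption for illustration only; use a vetted AEAD in practice.


def _subkeys(key: bytes) -> tuple[bytes, bytes]:
    # Derive independent encryption and MAC keys from one session key.
    enc_key = hmac.new(key, b"enc", hashlib.sha256).digest()
    mac_key = hmac.new(key, b"mac", hashlib.sha256).digest()
    return enc_key, mac_key


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # HMAC-SHA256 over (nonce || counter) produces pseudorandom blocks.
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()
        counter += 1
    return bytes(out[:length])


def seal(key: bytes, plaintext: bytes) -> bytes:
    """Encrypt, then MAC. Output layout: nonce(16) || ciphertext || tag(32)."""
    enc_key, mac_key = _subkeys(key)
    nonce = secrets.token_bytes(16)
    ks = _keystream(enc_key, nonce, len(plaintext))
    ct = bytes(p ^ k for p, k in zip(plaintext, ks))
    tag = hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()
    return nonce + ct + tag


def open_sealed(key: bytes, blob: bytes) -> bytes:
    """Verify the tag before decrypting; reject anything that was modified."""
    if len(blob) < 48:
        raise ValueError("ciphertext too short")
    enc_key, mac_key = _subkeys(key)
    nonce, ct, tag = blob[:16], blob[16:-32], blob[-32:]
    expected = hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("ciphertext was tampered with")
    ks = _keystream(enc_key, nonce, len(ct))
    return bytes(c ^ k for c, k in zip(ct, ks))


def summarize(text: str) -> str:
    # Hypothetical placeholder for model inference: keep the first sentence.
    return text.split(". ")[0].rstrip(".") + "."


def enclave_handle(session_key: bytes, request: bytes) -> bytes:
    """Runs inside the enclave: plaintext exists only within this function."""
    transcript = open_sealed(session_key, request).decode()
    summary = summarize(transcript)
    return seal(session_key, summary.encode())  # re-encrypt before it leaves


if __name__ == "__main__":
    # Assumption: this key was agreed with the enclave after attestation.
    session_key = secrets.token_bytes(32)
    transcript = "Budget approved for Q3. Launch moved to October. Hiring paused."

    request = seal(session_key, transcript.encode())  # encrypted on the device
    reply = enclave_handle(session_key, request)      # only ciphertext in transit
    print(open_sealed(session_key, reply).decode())   # Budget approved for Q3.

    # Drop the reference to the ephemeral key; real enclaves zeroize key memory.
    del session_key
```

Everything outside `enclave_handle` (the network, the host, an operator) only ever sees `request` and `reply`, both ciphertext, and tampering with either makes `open_sealed` raise instead of returning altered data.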

Why it’s a big deal for privacy-conscious users

Enterprises and individuals have been reluctant to adopt cloud AI in part because the data supplied as prompts or context can be sensitive: legal filings, medical notes, or unreleased product plans. On-device AI offers strong privacy but often lacks the horsepower to run very large models. Private AI Compute is one attempt to close that gap, providing cloud-scale power without handing raw data over to the provider.

The move closely mirrors an industry shift led in part by Apple with its Private Cloud Compute, which relies on server-side enclaves and public attestation to restrict access to user data. Google's assertion that Private AI Compute offers the same security as on-device processing sets a high bar and will attract scrutiny from privacy groups, independent researchers, and compliance officers.

Availability and initial use cases across Google products

Google says Private AI Compute will first appear in a handful of products, including Magic Cue on the Pixel 10 and upgraded Recorder features that deliver smarter summaries and suggestions. The company stresses that these features will tap cloud models while keeping conversations and recordings confidential.

The most immediate beneficiaries are likely to be users with heavy workloads that strain mobile silicon: lengthy recordings, syntheses spanning multiple subjects, and context-heavy planning. In corporate settings, meeting minutes could be generated without ever leaving a secure enclave, and drafting help could draw on private documents without the platform provider seeing their content.

Security and compliance context for enclave-based AI

Private AI Compute is part of a larger trend toward privacy-preserving computation. Analyst firm Gartner has forecast that by 2025, 60% of large organizations will use privacy-enhancing computation techniques, including homomorphic encryption and secure multiparty computation, in analytics and data science. For teams working under the NIST AI Risk Management Framework or a zero-trust architecture, enclave-based processing offers a concrete control for reducing data exposure.

Pressure from regulators is building too. The EU's AI Act, GDPR's data-minimization requirements, and sector rules such as HIPAA are pushing enterprises to show that sensitive information stays protected when it is used for AI inference. Remotely attested enclaves combined with strict key management offer a pragmatic path to auditability and policy compliance.

What to watch next as confidential computing expands

Key details to watch include how much of its attestation Google makes public, how transparent it will be about enclave images, and what third-party auditing and red-team testing is performed. Adoption will also hinge on how Private AI Compute handles developer access, customer-managed keys, latency, and cost across consumer and enterprise tiers.

Competition is heating up as major providers bet on confidential computing and privacy-first AI. If Google can deliver real assurances and practical performance at scale, Private AI Compute could become the default way to run sensitive tasks on Gemini, bringing cloud-class intelligence to vast amounts of personal and corporate data without users losing control of it.