Google is quietly testing a smarter home for its viral Nano Banana image editor: the Gemini overlay that floats on top of other apps. Add a picture to the overlay and a new "Edit this image" button sends you straight into Nano Banana's prompt-based editing flow. It's a natural match, and one that feels overdue for a tool Google has been racing to put everywhere.

The experiment has been showing up for some users on recent builds of the Google app (most notably version 16.42.61), although availability appears limited and tightly gated by server-side flags. In other words, even if you are up to date, you may not see it yet.

How the Gemini overlay is changing to support image edits

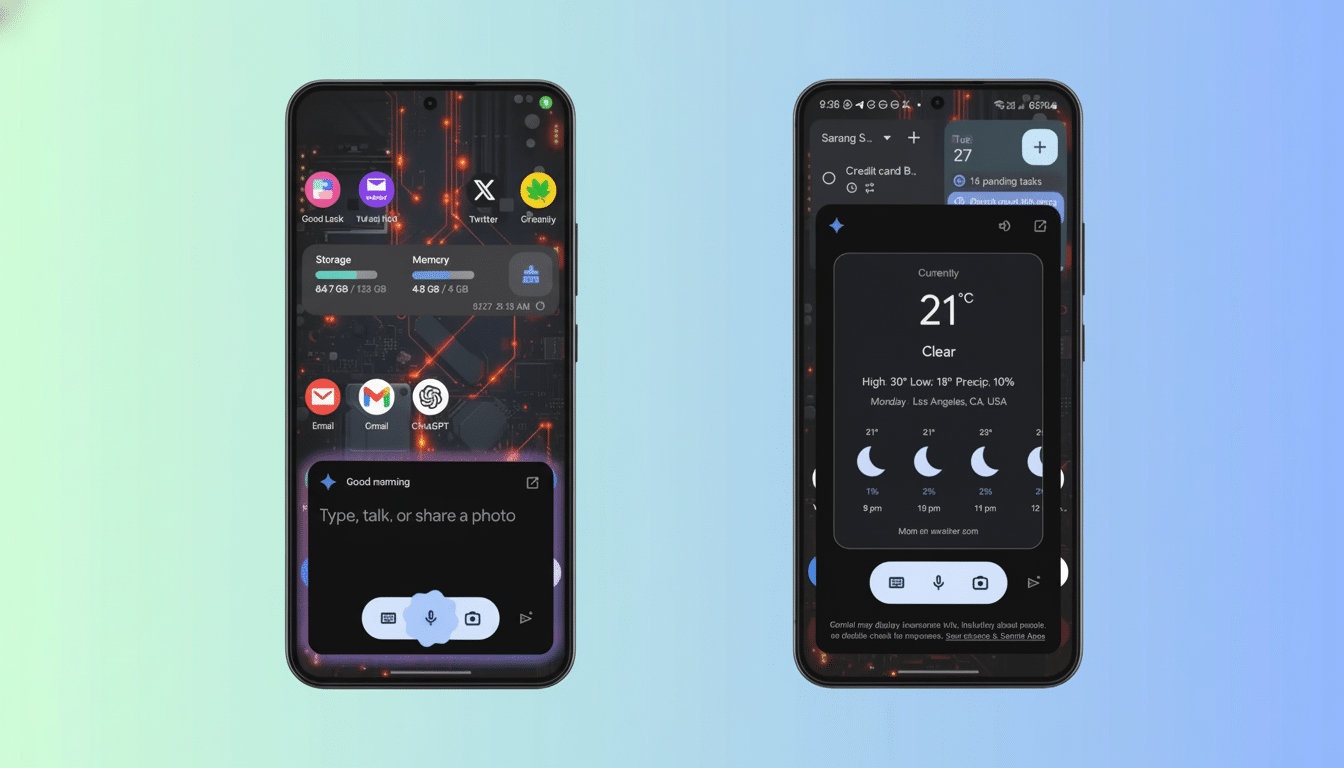

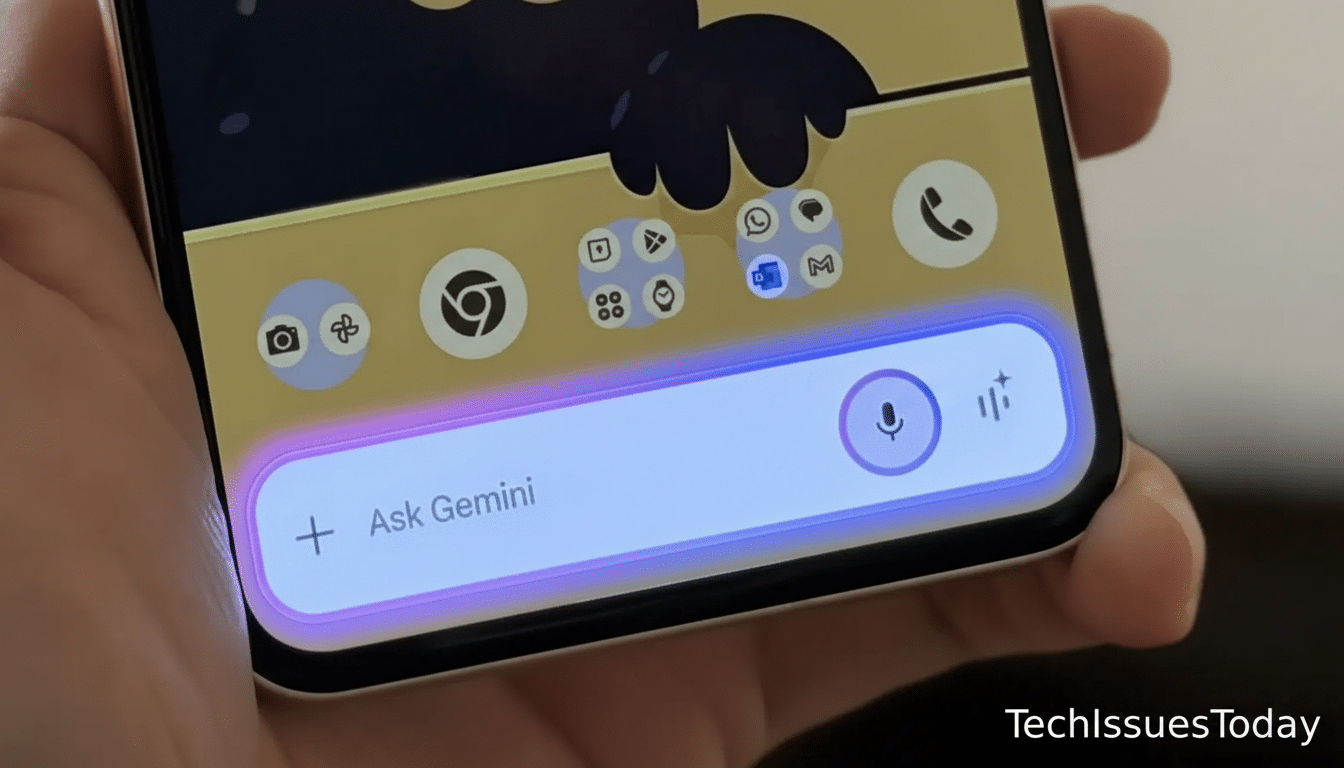

Previously, the Gemini overlay's approach to images leaned conversational: add a photo and you were encouraged to chat live about what's in it. The new option is a practical one, a one-tap route straight into Nano Banana editing. Think of it as collapsing the distance between discovery and creation.

After you tap "Edit this image," you describe the transformation you want (removing an object, restyling a background, adding an element, or remixing the aesthetic) and then iterate with further prompts. It's the full Nano Banana experience with fewer taps. For workflows like cleaning up a screenshot mid-chat or tweaking a product photo while browsing before you post it, shaving off a step or two can make a big difference.

Why the Gemini overlay took longer to add Nano Banana

On the face of it, this seems like the most natural place for Nano Banana. But the overlay is a system-wide surface, and routing images from any app into generative editing raises tough questions about permission handling, memory limits, on-device acceleration, and battery impact. Google has been steadily tuning the Gemini overlay to be more context-aware; layering heavy image generation on top of that requires careful guardrails to prevent slowdowns or disruptive bouncing between apps.

There's also a safety and provenance layer. Recent pushes to watermark AI imagery (Google DeepMind's SynthID) and to standardize provenance metadata (as promoted by industry groups such as the Coalition for Content Provenance and Authenticity) are clear signals of this shift. Building those policies into a feature that sits one tap away from any app probably required coordination across policy, UI, and back-end work so that edited images carry the right disclosures.

More homes for Nano Banana across Google apps and services

This overlay test is part of a broader expansion. Beyond its home base in Gemini, Nano Banana has appeared in NotebookLM and Messages, and it hooks into Google Lens and Search's AI Mode. The next obvious candidate is Google Photos itself, where the editor could handle quick fixes and collages and save results straight back to your library. There, expect more conservative edits suited to everyday personal use: quick touch-ups, camera-style filters, or playful mashups of party photos.

The potential reach is enormous. Google Photos serves well over a billion users globally, and Google has said that people run billions of visual searches with Lens every month. Bringing Nano Banana to those surfaces could make a generative edit as routine a tap as Auto Enhance. It also keeps pace with an ecosystem in which tools such as Adobe's Generative Fill, Microsoft Designer, and Canva's Magic Edit are normalizing AI-first image workflows.

The Feeling Lucky wildcard could add playful one-tap styles

Test builds also reference a Feeling Lucky option in Nano Banana. The label calls back to the classic I'm Feeling Lucky button in Search and hints at a one-tap surprise: perhaps a popular style, or a set of curated presets that give your photos a kaleidoscopic twist. If Google embraces this, you can imagine a safe, reversible sandbox that encourages exploration without demanding immediate mastery.

What users need to know about availability and rollouts

As with many Google features, this one will likely roll out cautiously, region by region, and be switched on remotely. Code sleuths, including independent Android reverse engineers such as AssembleDebug, often spot these hooks before they go live, though features can still change or disappear before public release. If you do see "Edit this image" in the overlay, that's a sign Google is comfortable trying out the experience at scale.

Bottom line: pulling Nano Banana into the Gemini overlay shortens the distance from idea to edit, and it fits Google's drive to make generative imaging ambient across its ecosystem. The surprise isn't that it's being tested there; it's that it took this long. Once the feature is more widely available, expect faster and broader use of AI edits straight from your phone on the go.