Google is preparing a new episode of The Android Show devoted entirely to Android XR, including “exciting” platform updates for smart glasses and mixed-reality headsets. A short teaser and a redesigned landing page point to work on making Android a first-class platform for spatial computing, with Gemini’s features getting top billing.

What Google Is Teasing for Its Android XR Event

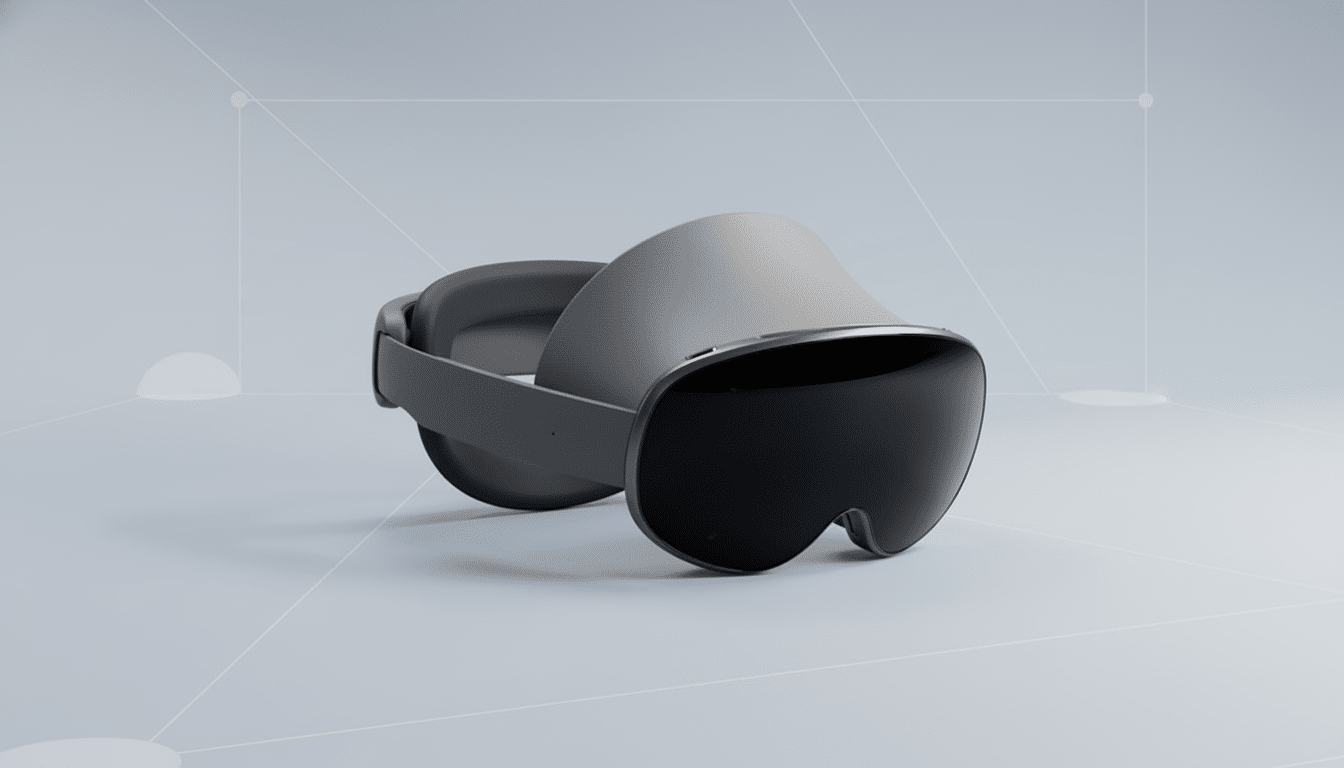

The company posted a brief teaser on a YouTube event page. In it, animated Android bots put on XR headsets and glasses, and the visual cues hint at both fully immersive VR and passthrough AR. The Android XR site now has a countdown, a topics preview, and easy reminders: tap “Notify me” on YouTube or add the event to Google Calendar.

In the show description, Google writes that the session will “cover all things XR across glasses, headsets and everything in between,” and specifically names Gemini. Expect demos where voice, gaze, and hand input work together with a conversational, context-aware assistant that understands the user’s surroundings, which is a must-have for real-world, heads-up computing.

The livestream is expected to last around 30 minutes, a format that usually combines developer guidance with glimpses of products. In the past, The Android Show has featured SDK updates, platform features, and best practices, all delivered with short code demos.

Why Android XR Is Relevant for Developers Today

Android’s XR ambitions have been building incrementally over successive hardware cycles. Google has been collaborating with Samsung on a Galaxy-branded headset, and it has partnered with Gentle Monster and Warby Parker on smart-glasses projects. Together, these hint that Android XR will aim to cover high-end mixed reality as well as everyday eyewear.

The competitive environment is heating up. Apple’s visionOS jump-started developer mindshare for spatial interfaces, and Meta’s mainstream push with Quest built a substantial content economy: Meta has said consumers have spent more than $2 billion on Quest apps and games to date. On the silicon side, Qualcomm’s Snapdragon XR2 Gen 2 claims up to 2.5x the GPU performance of the previous-generation chip, promising richer passthrough and more realistic mixed-reality scenes on Android-based devices.

For Google, the win would be a unified platform that handles input (hand, controller, eye, voice), spatial mapping, and distribution through Play, so developers can ship once and reach multiple hardware targets.

That’s the Android playbook, reworked for 3D. It depends on strong system services: spatial anchors, scene understanding, low-latency camera passthrough, and background permissions that preserve user privacy on always-sensing devices.

What To Watch For During The Android XR Livestream

- Gemini-first experiences: Look for APIs that allow apps to hand off tasks to Gemini with environmental context. That might involve scene-aware assistance, multimodal search tied to the user’s view, and generative overlays that enhance physical objects without obstructing the field of view.

- Glasses/headset parity: Look for guidance on developing for both see-through glasses and opaque headsets, with adaptive UI patterns that scale from glanceable tiles to room-scale canvases. Google might also spell out its requirements for input interoperability, so the same app can support hand tracking on one device and a controller on another.

- OpenXR and Android tooling: Developers will be looking for further alignment with OpenXR, new Android Studio templates for spatial applications, and additional Jetpack libraries optimized for 3D, sensors, and low-latency graphics. Runtime performance improvements, such as better frame pacing, reprojection, and GPU scheduling, would also go a long way toward smooth passthrough MR.

- Distribution and monetization: The Play Store could add categories or editorial surfaces for XR, along with clearer guidelines on safety, privacy, and age-appropriate content. Expect to hear about familiar monetization tools (subscriptions, in-app purchases, and trials) adapted for immersive experiences.

The Hardware Angle and Expected Device Partners

Samsung’s upcoming XR devices seem to dominate the ecosystem calculus, and Google’s eyewear partners, Gentle Monster and Warby Parker, suggest fashion-forward smart glasses are part of the roadmap. Google might demonstrate unbranded prototypes, highlight next-generation features of existing headsets, or make reference designs available to help manufacturers work through ergonomics, battery life, and thermal limits.

If Google can make the case that Android XR will provide a standard baseline for sensors, input, and app distribution across a family of devices, it reduces risk for developers and gives OEMs a reason to standardize.

That, more than any one demo, is what will determine whether XR on Android scales beyond a few hero products.

How To Watch Google’s Android XR Livestream Online

The Android Show XR Edition is already listed on YouTube with a preview and countdown. Viewers can tap “Notify me” on YouTube or add the event to their calendar from the Android XR landing page. The session is short, so expect a tight run of announcements and demos rather than a deep-dive keynote.

Teasers like this one don’t often overdeliver, but at the very least, Google looks ready to finally lay the groundwork for a unified Android XR stack and to show how Gemini could make spatial computing genuinely useful rather than just a novelty.