Google’s next wave of smart glasses just came into sharper focus after a newly surfaced phone companion app hinted at how the wearables will work in the real world. Strings and setup screens point to conversation-aware notification controls, an audio-only mode, camera privacy safeguards, and selective access to Gemini-powered features based on hardware capabilities.

Companion App Surfaces In Developer Tools

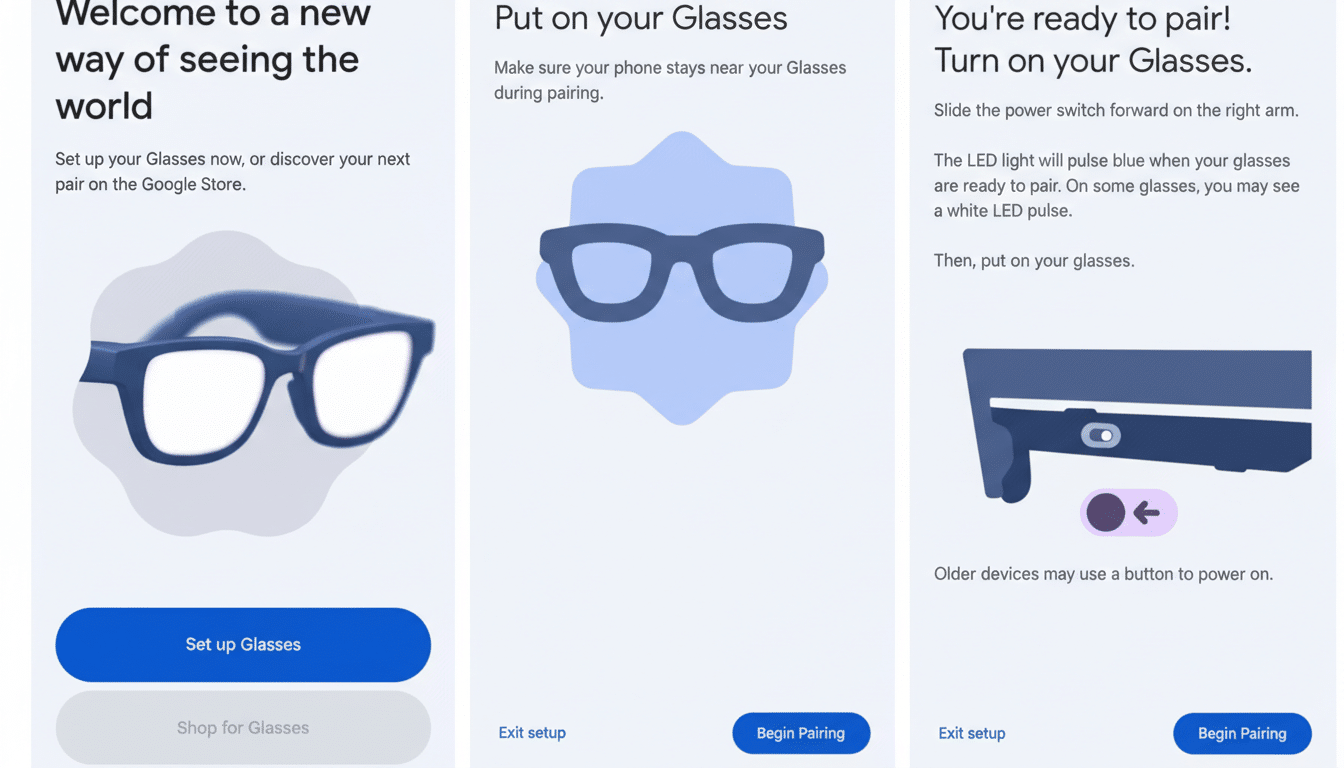

The companion app, identified by the package name com.google.android.glasses.companion, was extracted from an Android Studio canary system image by a community developer on Reddit’s Google Glass forum. While setup appears to require unreleased glasses hardware, the app’s resources and UI snippets align with terminology in Google’s own support documentation, providing a credible preview of the software experience that will pair the glasses to Android phones.

This discovery tracks with industry chatter that Google is preparing multiple smart glasses variants, including audio-first models and display-equipped versions, and is building Android-side tooling to unify pairing, permissions, updates, and AI features.

Conversation-Aware Alerts And On-Device Privacy

The clearest theme is attention management. App strings describe “conversation detection” that can automatically pause spoken notifications when you’re talking. They stress that this processing happens on the glasses and that no raw audio or images are shared with cloud services. That on-device approach mirrors how modern assistants handle hotword detection and voice activity detection, minimizing data exposure while keeping latency low.

For manual control, the app references a “Pause announcements” toggle with options to silence readouts for set durations (examples in the code suggest hour-based presets). It’s a small but crucial detail: social acceptability has historically been a stumbling block for face-worn tech, and context-aware muting addresses one of the biggest friction points—intrusive alerts in the middle of a conversation.

Audio-Only Mode And Display Controls For Glasses

The app also references an audio-only, or “displayless,” mode that explicitly shuts off the glasses’ display, plus a brightness slider for when visuals are enabled. This bifurcated design suggests Google will support both audio-first wearables (similar in spirit to screenless, camera-equipped models like the Ray-Ban Meta glasses) and later AR-capable variants, with a single app accommodating both profiles.

Audio-first experiences tend to deliver longer battery life and better comfort, which matters for all-day use. A quick mode switch also gives users a privacy lever: no display, no doubt about what the device is doing.

Camera And Recording Safeguards For Smart Glasses

On the imaging side, the app references video capture up to 1080p and an “experimental” 3K option, likely gated by model. There’s also evidence of policies preventing recording if the status LED is obscured, a clear nod to bystander awareness rules that public agencies and venues increasingly expect. Earlier consumer smart glasses faced criticism over covert capture; a mandatory recording indicator, enforced in software, is a practical response.

Expect nuanced defaults here. A prominent LED, audible shutter sounds, and clear privacy prompts, for example, can reduce real-world friction and align with best practices advocated by digital rights groups and workplace compliance teams.

Gemini Integration And Hardware Tiers For Glasses

Several strings reference Gemini features and a “hardware ineligible” state, implying that advanced AI capabilities will depend on specific silicon. That could mean on-device models akin to Gemini Nano, dedicated NPUs, or audio and camera pipelines optimized for real-time transcription and scene understanding. It also suggests a tiered lineup: all models get core connectivity and controls, while higher-end versions unlock richer multimodal features.

The broader ecosystem supports this trajectory. Chipsets like Qualcomm’s AR-focused platforms already power audio-first and camera-centric glasses, and a Gemini-forward stack would benefit from similar accelerators for local inference, reducing latency and preserving privacy.

Why This Matters For Android XR And Wearable AI

Smart glasses were a visible trend at recent industry shows, and the market is pivoting from novelty to utility: navigation prompts, hands-free capture, real-time translation, and quick AI lookups. Analysts have forecast renewed XR growth as lighter form factors arrive and phone-tethered designs lower cost and complexity. In that context, a polished companion app is not a side note—it’s the backbone that governs pairing, permissions, updates, and trust.

Nothing here is final, and features can change before launch. But the through-line is clear: Google is prioritizing ambient AI that stays out of your way, strong on-device privacy defaults, and safeguards to make camera use socially legible. If the hardware matches the software’s intent, Android’s smart glasses era could finally feel ready for everyday wear.