After trying three new Google models that run Android XR, the conclusion is easy: This is the most seamless vision of everyday smart glasses I’ve seen yet.

Google showed a puck-tethered set of glasses built with XREAL, a monocular pair that leans on your phone for compute, and a further-off binocular design that hints at depth-rich AR. The hardware looks promising, but the real step forward is Google’s approach to software and its deep ties to the Android ecosystem.

Why These Android XR Glasses Prototypes Matter Now

XR has so far failed to have a mainstream moment. Meta’s Ray-Ban Display glasses are more ambitious at $799, but they’re locked down to Meta’s apps and brand. High-end headsets have amazed in demos and fizzled at home. Google’s approach with Android XR is different: keep compute on the phone, layer in Gemini for natural interaction, and tap the vast base of Android apps that already send rich notifications across more than 3 billion devices. Analyst firms like IDC and CCS Insight have spent years saying that fulfillment, cost, and frictionless software are the levers for adoption; Google is pushing hard on all three.

Project Aura with XREAL aims for slim mixed reality

Project Aura is a partnership between Google and XREAL, and it plays like the slim, travel-friendly version of a mixed reality headset. You put on the glasses, which are tethered to a pocket puck holding the battery and compute. Under the hood is Qualcomm’s Snapdragon XR2 Plus Gen 2; the device runs Android XR and supports hand tracking in a more comfortable form factor. In practice, it felt far more like normal eyewear than a VR visor, which makes it plausible for flights, train rides, and guided experiences such as museum tours. The battery specs are not final, and the puck warmed up in my demo, which points to the usual trade-off between weight and endurance. Google is targeting a 2026 time frame.

Monocular glasses use phone-first design and voice

At the head of the lineup is the monocular prototype: light glasses with a single full-color display embedded in one lens, plus POV cameras. There’s a touchpad on the stem for taps and swipes, but voice is clearly the primary input. With Gemini Live, I could point a phone at a pantry and ask for a recipe based on what was in front of me, or even start an ad hoc Google Meet call in which the other participant saw what I saw. Sound comes from subtle stem speakers, and the fit feels significantly better than the larger camera glasses I’ve tested.

Software minimalism is the most striking feature. There is no clunky launcher; instead, the UI serves up only what you need in the moment, without cluttering itself with extraneous functionality. Navigation shows a hint of the next turn, with a tilt-down peek for a mini map. Music control appears as a tiny, legible strip with album art. En route to a ride pickup, core details from Uber appear. And there’s no new app store to wait on, because Google reuses Android’s ongoing notifications. If an app already sends a persistent notification (Maps, YouTube Music, ride-hailing, and fitness services are likely candidates), its developers can add a little Android XR glue and be “glasses-ready” on launch day. The result is an immediate ecosystem, without making everyone reinstall or rediscover things.

Most important, the glasses offload compute to your phone. That keeps the frames slim, keeps heat away from your face, and acknowledges the reality that almost no one leaves home without a handset. Google has also teased Wear OS-supported gestures, with no odd wristband or extra controller required. Max Spear, the Android XR product lead at Google, said he had cooked a holiday meal with the glasses while his dad looked on from inside the house over Meet: a sweet illustration of hands-free remote help that maps squarely onto real life.

Display-free model and retail partnerships detailed

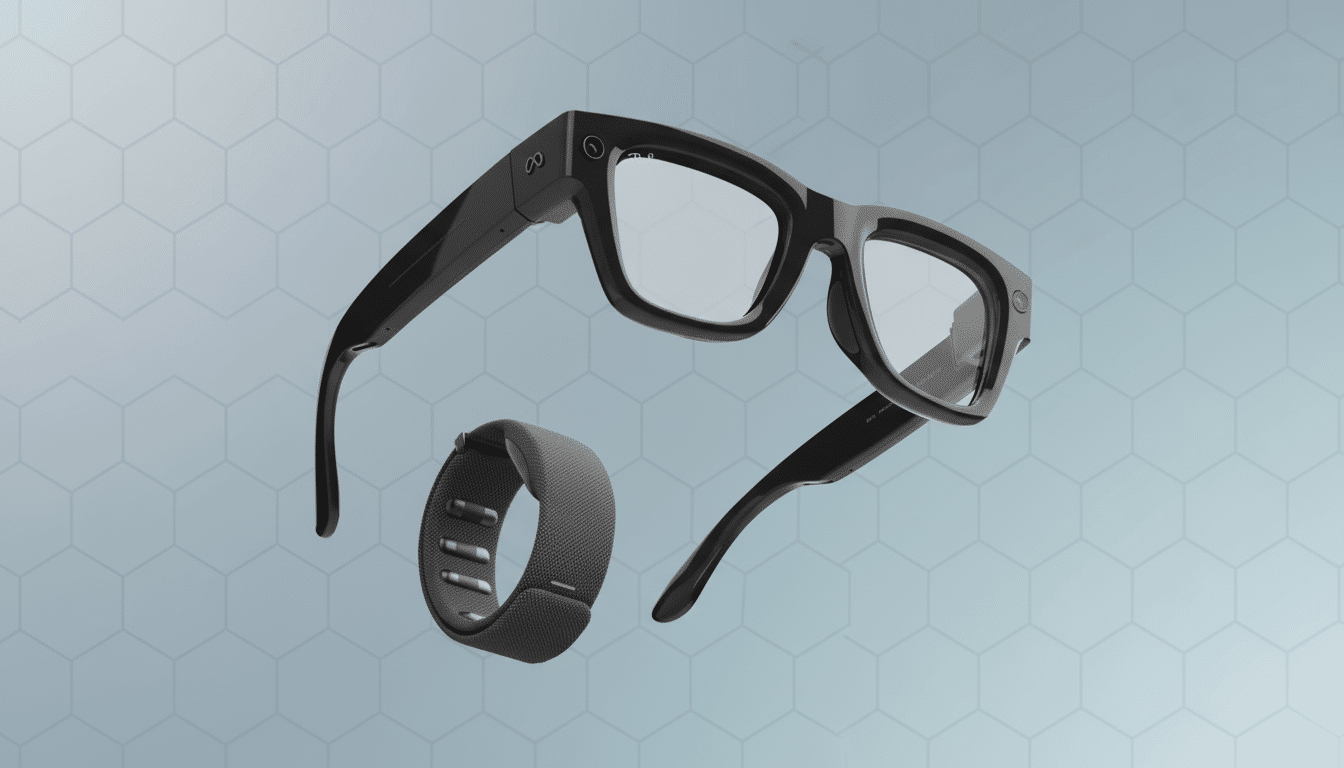

Google also has an audio-only model (no display, but the same cameras, voice, and Gemini) for people who want ambient assistance without the visual experience. On the fashion and fit end, the retail versions are being developed in collaboration with Samsung, Warby Parker, and Gentle Monster. That matters: Glasses succeed or fail on ergonomics and social acceptance as much as on silicon.

Binocular prototype hints at a deeper 3D AR future

The binocular demo, which is still early, puts a display in each lens for a wider field of view and subtle depth cues. In a brief navigation test, pins and overlays “floated” more convincingly, and a YouTube clip of Pink Floyd’s Comfortably Numb looked and sounded better than I expected. You’re not going to see this any time in 2026, Google says, which suggests a staggered roadmap: monocular and audio-first models first, depth-rich binocular models later.

Privacy safeguards and building cultural trust for XR

Google is building visible privacy signals: a prominent physical on/off switch that shows red when it’s off; an active recording LED that you can’t cover without stopping the recording; and clearer, user-forward data policies. Those are table stakes given the lessons of early smart glasses. The bigger test is cultural trust: persuading people that face-worn cameras and AI won’t overstep. That will involve transparency, default-private settings, and some limits on data collection.

The takeaway for XR smart glasses and 2026 launch

This playbook is practical in a way XR usually isn’t. By leaning on phones for compute, Android notifications for instant utility, and Gemini for natural interaction, Google reduces friction where it matters. If the 2026 launch lands anywhere near the mark of these demos (comfort, ease of use, and a pre-existing app story), Google could reset expectations for smart glasses and ratchet up the pressure on competitors that have been struggling to catch up with closed ecosystems. For the first time, smart glasses look much less like a science project and much more like something you’d actually want to wear.