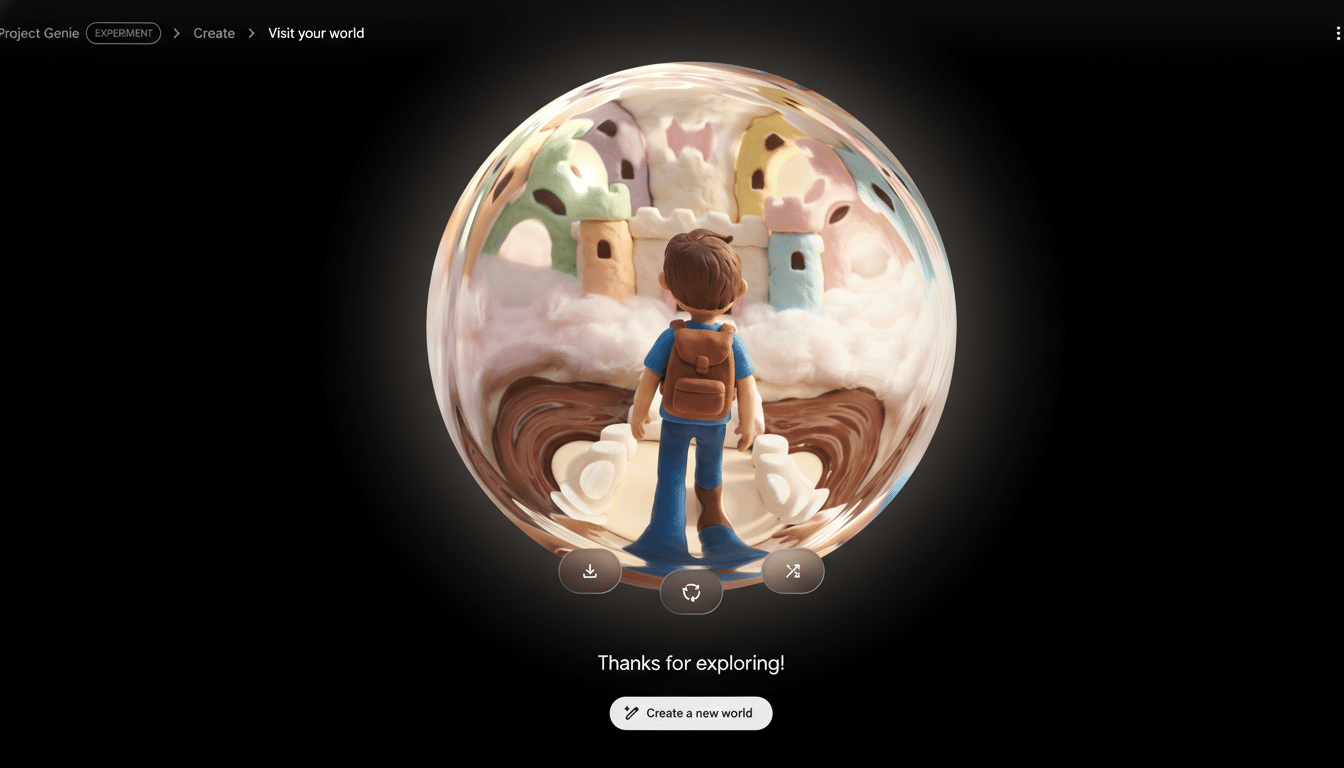

Google DeepMind has begun opening access to Project Genie, an experimental AI tool that turns text prompts or images into explorable game worlds. As part of a limited rollout to Google AI Ultra subscribers in the U.S., I took it for a spin—and yes, I built a marshmallow castle with a chocolate river, claymation and all. The result felt like a sneak peek at where world models are headed: dazzling, imperfect, and undeniably new.

What Project Genie Actually Builds and How It Works

Genie begins with a “world sketch.” You describe an environment and a main character, choose first- or third-person view, and let the system synthesize a scene you can navigate. Under the hood, it stitches together DeepMind’s world model Genie 3, the image generator Nano Banana Pro, and Gemini for orchestration.

The workflow is fast. Within seconds of confirming an image—either AI-created or a real photo used as a baseline—Genie produces an interactive space. You can remix others’ worlds by editing the underlying prompts, browse a curated gallery, hit a randomizer for inspiration, and export short video clips of your explorations.

I Built a Marshmallow Castle With a Chocolate River

My test was a childhood fever dream: a cloud-top castle sculpted from marshmallows, a glossy chocolate moat, trees made of candy, the whole thing rendered in claymation. Genie nailed the vibe. Puffy white spires with pastel trim, glinting chocolate water, and a playable character bouncing through the courtyard gave it the tactile, stop-motion feel I asked for.

Stylistically driven prompts—watercolors, anime, classic cartoons—tend to shine. Where Genie stumbles is photorealism. Attempts to conjure cinematic, lifelike scenes felt more like a mid-era video game than reality. And while Nano Banana Pro lets you tweak details before a world is generated, it occasionally misfires on specifics (in one case, hair color repeatedly skewed off-prompt).

Using real photos as a foundation produced mixed results. A snapshot of my office yielded a space with familiar props—wood desk, plants, gray couch—but rearranged and noticeably synthetic. A desk photo featuring a plush toy, however, came to life charmingly: Genie animated the toy as the avatar and had nearby objects subtly react as it moved past.

Genie 3 is auto-regressive: it generates each new frame conditioned on what it has already produced, so the system effectively “remembers” the world it has built. In practice, revisiting previously seen areas usually preserves what was there. I caught only one continuity hiccup: on a second pass by a desk, a duplicate mug had appeared.

Limits You Will Notice While Exploring Genie Worlds

Sessions are capped at 60 seconds. DeepMind says the limit reflects the heavy compute cost of auto-regressive world generation and the need to give more people time with the prototype. It plays like a timed sandbox: enough time to explore and iterate, not enough to get lost.

Interactivity is Genie’s most exciting promise—and its most obvious constraint. Characters sometimes clip through walls. Environmental physics feel early-stage. Navigation relies on WASD plus arrow keys and spacebar; on my machine, controls occasionally lagged or sent me off course, turning a straightforward walk into a zigzag sprint.

Safety systems are strict. Prompts for anything suggestive, or for anything that might evoke major copyrighted franchises, are blocked. That posture tracks with mounting legal pressure around AI training data; for example, Disney recently sent Google a cease-and-desist letter accusing its AI models of infringing on Disney's IP. In Genie, even innocuous prompts that merely hint at famous characters or trademarks get steered away from those references.

Why World Models Matter Now for Generative AI Systems

World models build an internal representation of environments and use it to predict future states and plan actions. Many AI labs view them as a stepping stone to more general reasoning systems—and a pragmatic path to train embodied agents (robots) in simulation before deployment in the real world.
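Real world models are vastly more complex than anything that fits in a few lines, but the core loop described above (learn how an environment responds to actions, then plan by simulating in that learned model rather than in the real world) can be illustrated with a deliberately tiny sketch. The Python below is hypothetical and is not how Genie 3 or any lab's system works: the `TabularWorldModel` class, the 1D corridor environment `step`, and the breadth-first planner are all assumptions chosen for clarity. Production world models learn neural predictors over pixels or latent states, not a lookup table.

```python
from collections import deque


class TabularWorldModel:
    """Hypothetical toy world model: a lookup table learned from observed
    (state, action, next_state) transitions. Illustration only."""

    def __init__(self):
        self.transitions = {}  # (state, action) -> predicted next state

    def observe(self, state, action, next_state):
        """Record one real transition from the environment."""
        self.transitions[(state, action)] = next_state

    def predict(self, state, action):
        """Predict the next state, or None if this transition was never seen."""
        return self.transitions.get((state, action))

    def plan(self, start, goal, actions):
        """Breadth-first search over imagined rollouts inside the model.
        Returns the shortest action list reaching `goal`, or None."""
        frontier = deque([(start, [])])
        visited = {start}
        while frontier:
            state, path = frontier.popleft()
            if state == goal:
                return path
            for action in actions:
                nxt = self.predict(state, action)
                if nxt is not None and nxt not in visited:
                    visited.add(nxt)
                    frontier.append((nxt, path + [action]))
        return None


def step(pos, action):
    """Hypothetical 'real' environment: a 1D corridor with positions 0..4."""
    delta = 1 if action == "right" else -1
    return max(0, min(4, pos + delta))


# Learn the model by observing every transition once in the real environment.
model = TabularWorldModel()
for pos in range(5):
    for action in ("left", "right"):
        model.observe(pos, action, step(pos, action))

# Plan purely inside the learned model; the real environment is not queried.
print(model.plan(0, 3, ["left", "right"]))
```

The planner never calls `step` while searching; it only queries the learned table. That is the same idea, at toy scale, behind training agents in simulation before deploying them in the real world.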

DeepMind’s move lands amid a broader race. World Labs, co-founded by Fei-Fei Li, recently unveiled Marble, a commercial world-model product. Runway has introduced its own world-model approach for generative video. AMI Labs, started by former Meta AI chief scientist Yann LeCun, is also prioritizing world models. Genie’s consumer-facing test bed, even in prototype form, is a bid for feedback loops—and data—that could accelerate progress.

Early Verdict on Genie After Hands-On Testing

When you stay inside stylistic lanes, Genie can be magical. The marshmallow fortress worked because claymation forgives imperfections and embraces exaggeration. Push into photorealism, and the seams show. The engine’s memory is promising, but collisions, physics, and controls need polish. The 60-second clock pressures you to iterate rather than wander, which, for now, feels like the right compromise.

DeepMind frames Genie as a research prototype, not a daily-use app. The roadmap is clear enough: longer, richer interactions; stronger physics; more precise control; better handling of real-world images. If those improvements arrive, the tool could move from novelty to utility, becoming useful for rapid game prototyping, education, or previsualization. More importantly, it could serve as a proving ground for the world-models approach itself.