Google Maps is announcing a new wave of AI-powered features designed to let people create and share interactive, map-based stories in just a few minutes. Built on Gemini models, the update includes a natural-language “builder agent,” a styling assistant for branded cartography (with additional customization available for an extra fee), a low-code visualization component, and an MCP server that turns Google’s technical documentation into a real-time coding copilot.

What the builder agent can build using Maps data and UI

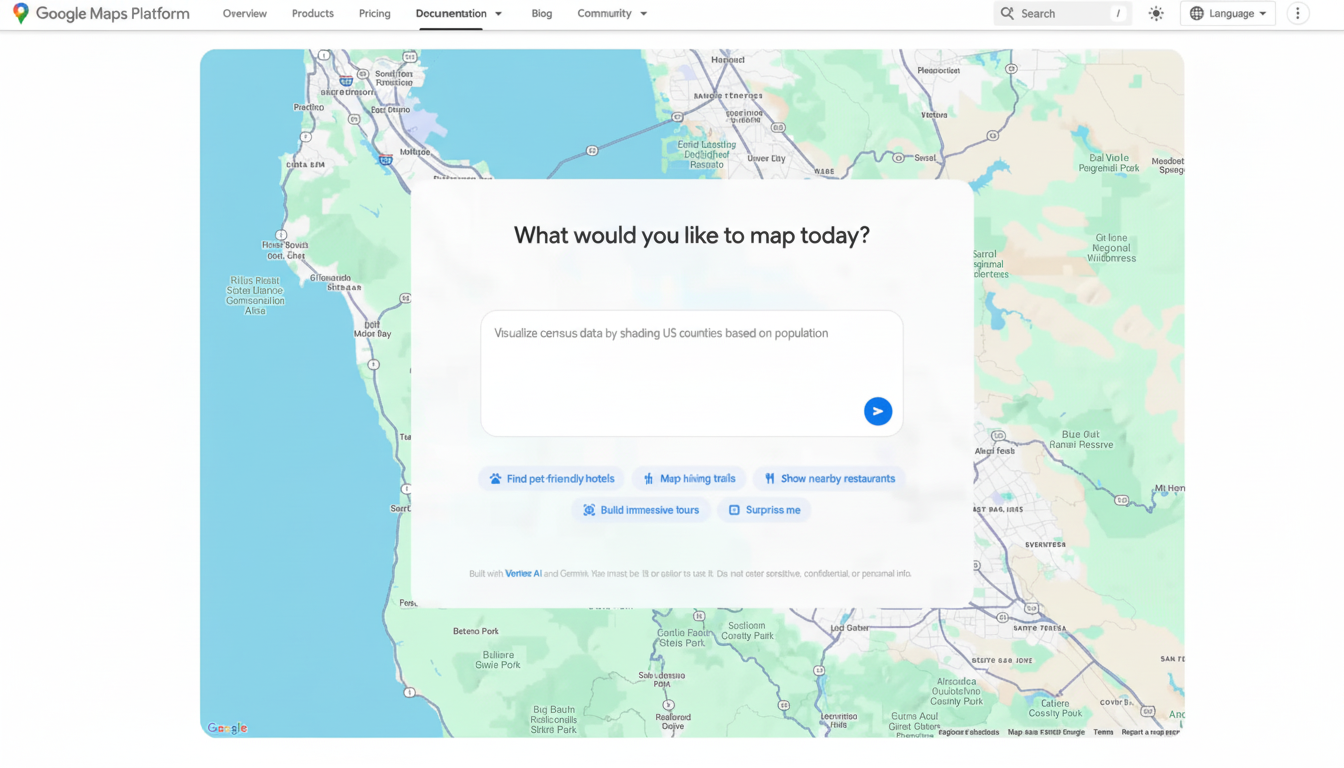

The builder agent turns plain-English prompts into working prototypes that draw on Maps data, logic, and UI. Ask for a Street View city tour, a live weather overlay by county, or a pet-friendly hotel finder, and it assembles a scaffolded project on its own.

Developers can then export the code, add their own API keys, and iterate in Firebase Studio. A companion styling tool applies brand palettes, typography, and iconography to maps, producing on-brand maps without hand-tuned CSS or map style JSON. That is useful for retailers, event companies, and tourism boards that need a consistent look.
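Google has not described how the styling assistant works internally. As a rough illustration of the hand-tuned work it is meant to replace, the sketch below maps a hypothetical brand palette onto rules in the `featureType` / `elementType` / `stylers` shape used by classic JSON map styling. The palette values and the choice of which map features get which brand color are assumptions made for this example.

```python
import json

# Hypothetical brand palette; names and hex values are illustrative.
BRAND = {
    "primary": "#0B3D91",
    "background": "#F4F1EA",
    "accent": "#E4572E",
}


def brand_style(palette: dict) -> list:
    """Map a brand palette onto styled-map rules.

    Each rule uses the featureType / elementType / stylers shape of
    classic JSON map styling. Which feature gets which brand color is
    an assumption made for this sketch.
    """
    return [
        # Land surfaces take the background color.
        {"featureType": "landscape", "elementType": "geometry",
         "stylers": [{"color": palette["background"]}]},
        # Water takes the primary brand color.
        {"featureType": "water", "elementType": "geometry",
         "stylers": [{"color": palette["primary"]}]},
        # Highways pick up the accent color.
        {"featureType": "road.highway", "elementType": "geometry",
         "stylers": [{"color": palette["accent"]}]},
        # Hide business POIs so the brand's own markers stand out.
        {"featureType": "poi.business",
         "stylers": [{"visibility": "off"}]},
    ]


if __name__ == "__main__":
    print(json.dumps(brand_style(BRAND), indent=2))
```

Keeping the palette in one dictionary means a rebrand changes three values rather than every rule, which is the kind of bookkeeping a styling assistant would take off a team's plate.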

For example, a city’s tourism board could ask for a 3D walking tour that jumps between curated Street View scenes, overlaid with accessible routes and local business data from Places. A utility could prototype an outage dashboard that buckets incidents, layers service territories, and prioritizes repair work.
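The article does not say what code the builder agent would generate for such a dashboard. As a hypothetical sketch of the “bucket and prioritize” step only, the example below snaps incident coordinates to a fixed latitude/longitude grid and ranks cells by customers affected. The grid size, the `Incident` fields, and the ranking rule are all assumptions; a production tool would more likely bucket by the utility’s own service-territory polygons.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Incident:
    lat: float
    lng: float
    customers_out: int


def bucket_key(lat: float, lng: float, cell_deg: float = 0.05) -> tuple:
    """Snap a coordinate to a coarse equal-angle lat/lng grid cell."""
    return (int(lat // cell_deg), int(lng // cell_deg))


def prioritize(incidents: list, cell_deg: float = 0.05) -> list:
    """Group incidents by grid cell and rank cells by customer impact.

    Returns (cell, incident_count, customers_out) tuples, worst first.
    """
    cells = defaultdict(list)
    for inc in incidents:
        cells[bucket_key(inc.lat, inc.lng, cell_deg)].append(inc)
    ranked = [
        (cell, len(items), sum(i.customers_out for i in items))
        for cell, items in cells.items()
    ]
    # Most customers affected first; ties go to the cell with more incidents.
    ranked.sort(key=lambda r: (r[2], r[1]), reverse=True)
    return ranked


if __name__ == "__main__":
    sample = [
        Incident(40.012, -74.012, 100),
        Incident(40.013, -74.013, 50),
        Incident(40.21, -74.21, 300),
    ]
    for cell, count, customers in prioritize(sample):
        print(cell, count, customers)
```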

Grounding Lite and MCP add real-world context

To reduce hallucinations and improve situational awareness, Google is introducing Grounding Lite, which lets developers ground their own AI models in Maps data using the Model Context Protocol (MCP). MCP is an emerging open standard for connecting AI assistants to external data sources, tools, and documentation.

This means a grounded model can answer questions like “How far away is the nearest grocery store?” or “Which neighborhoods are within a 20-minute transit ride?”, with results tied to the underlying map. For businesses, that grounding is critical for use cases like store placement, delivery promise windows, or safety geofencing.
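Grounding means the answer is computed from map data rather than generated freely. A minimal sketch of the final step for “How far away is the nearest grocery store?”, assuming candidate stores (name plus coordinates) have already been retrieved from a places source: rank them by great-circle (haversine) distance. The `Place` type and sample coordinates are hypothetical, and straight-line distance is a simplification; a real answer, and any “20-minute transit ride” question, would need routed travel time instead.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


@dataclass
class Place:
    name: str
    lat: float
    lng: float


def haversine_km(lat1: float, lng1: float, lat2: float, lng2: float) -> float:
    """Great-circle distance between two lat/lng points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lng2 - lng1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest(lat: float, lng: float, places: list) -> Optional[Tuple[Place, float]]:
    """Return (closest place, distance in km), or None if there are no candidates."""
    if not places:
        return None
    best = min(places, key=lambda p: haversine_km(lat, lng, p.lat, p.lng))
    return best, haversine_km(lat, lng, best.lat, best.lng)


if __name__ == "__main__":
    stores = [Place("Market A", 40.7150, -74.0020), Place("Market B", 40.7306, -73.9866)]
    place, km = nearest(40.7128, -74.0060, stores)
    print(f"Nearest: {place.name}, {km:.2f} km away")
```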

Picture the answer: contextual views in Maps apps

In preview, a low-code contextual view component can turn a text answer into visual output (a ranked list, an interactive map, or even a 3D scene) without custom rendering code, making ideas easy to explore.

Ask for “coffee shops where I can sit outside,” and users can immediately toggle between a text summary and an interactive, filterable map.
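The component itself is a Google-provided widget; this sketch only illustrates the underlying idea of one filtered result set rendered two ways. It assumes each place carries a hypothetical `outdoor_seating` attribute tag, and it produces a ranked text list plus a GeoJSON FeatureCollection that a web map layer could draw.

```python
from dataclasses import dataclass, field


@dataclass
class Cafe:
    name: str
    lat: float
    lng: float
    rating: float
    attributes: set = field(default_factory=set)


def outdoor_cafes(cafes: list, min_rating: float = 0.0) -> list:
    """Keep cafes tagged with outdoor seating, best-rated first."""
    hits = [c for c in cafes if "outdoor_seating" in c.attributes and c.rating >= min_rating]
    return sorted(hits, key=lambda c: c.rating, reverse=True)


def as_text(cafes: list) -> str:
    """Text view: a numbered, ranked list."""
    return "\n".join(f"{i}. {c.name} ({c.rating:.1f})" for i, c in enumerate(cafes, 1))


def as_geojson(cafes: list) -> dict:
    """Map view: a GeoJSON FeatureCollection (coordinates are [lng, lat])."""
    return {
        "type": "FeatureCollection",
        "features": [
            {
                "type": "Feature",
                "geometry": {"type": "Point", "coordinates": [c.lng, c.lat]},
                "properties": {"name": c.name, "rating": c.rating},
            }
            for c in cafes
        ],
    }
```

Because both views are derived from the same filtered list, switching between the summary and the map does not require a second query; a filter such as `min_rating` simply recomputes the list once.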

A copilot for Maps builders: documentation and MCP

Google is also releasing an MCP server that connects AI assistants directly to Google Maps Platform documentation. It answers implementation questions like “What are the required fields for Places Details?” and flags pitfalls such as quota limits or the billing impact of high-volume routing matrices.
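Billing surprises with routing matrices come from multiplication: each origin-destination pair is one element. The back-of-the-envelope sketch below shows the kind of check such a documentation assistant is meant to surface. It assumes per-element billing and takes the per-request element cap as a parameter; the 625 used in the example is illustrative only, so consult the current Routes API documentation for actual limits and prices.

```python
import math


def matrix_elements(origins: int, destinations: int) -> int:
    """A route matrix returns one element per origin-destination pair."""
    return origins * destinations


def requests_needed(origins: int, destinations: int, max_elements: int) -> int:
    """Requests needed when each request carries whole origin rows and at
    most max_elements elements (the cap is an input, not a known limit)."""
    if destinations > max_elements:
        raise ValueError("a single origin row exceeds the per-request cap")
    rows_per_request = max_elements // destinations
    return math.ceil(origins / rows_per_request)


def monthly_elements(origins: int, destinations: int, runs_per_day: int, days: int = 30) -> int:
    """Billable elements per month, assuming billing is per element."""
    return matrix_elements(origins, destinations) * runs_per_day * days


if __name__ == "__main__":
    # 50 depots x 40 stops, refreshed hourly; 625 is an example cap only.
    print(matrix_elements(50, 40))        # elements per refresh
    print(requests_needed(50, 40, 625))   # requests per refresh
    print(monthly_elements(50, 40, 24))   # elements per 30-day month
```

For 50 depots and 40 stops refreshed hourly, that is 2,000 elements and 4 requests per refresh, or 1,440,000 billable elements over a 30-day month: a modest-looking job that scales quickly.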

For teams new to the platform, the server shortens time to first prototype and cuts support tickets. It builds on Gemini CLI extensions, which already bring Maps data to developers, and turns static documentation into something interactive and context-aware.
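Under the hood, MCP messages are JSON-RPC 2.0 requests: a client typically calls `tools/list` to discover what a server offers and `tools/call` to invoke a tool. The sketch below only builds those two messages. The tool name `search_documentation` and its `query` argument are hypothetical placeholders, since the documentation server’s actual tools are not described here, and the transport and the initialization handshake that precedes these calls are omitted.

```python
import json
from itertools import count
from typing import Optional

_next_id = count(1)


def mcp_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object of the kind MCP uses."""
    msg = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def call_tool(name: str, arguments: dict) -> dict:
    """Build an MCP tools/call request for the named tool."""
    return mcp_request("tools/call", {"name": name, "arguments": arguments})


# Discover the server's tools, then call one.
# "search_documentation" and its "query" argument are hypothetical;
# real names come from the server's tools/list response.
list_msg = mcp_request("tools/list")
call_msg = call_tool(
    "search_documentation",
    {"query": "What are the required fields for Places Details?"},
)

if __name__ == "__main__":
    print(json.dumps(list_msg))
    print(json.dumps(call_msg))
```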

Why this release matters for geospatial applications

Google Maps powers products used by more than a billion people and contains information on over 200 million places worldwide, according to the company’s public figures. Combining that coverage with agentic AI and low-code tooling lowers the barrier to serious geospatial apps (in travel, real estate, logistics, and public safety) by shifting effort from boilerplate code and setup to problem design.

The timing fits broader industry trends: analyst firms have estimated that about 70% of new applications will be developed on low-code platforms. Geospatial workloads are a rich hunting ground, from route optimization to catchment analysis and curb-level guidance, because they sit at the intersection of data ingestion (structured and visual) and user intent that maps can capture and interpret.

Privacy and cost considerations for AI mapping tools

As with any AI-based developer tool, governance is key. Projects will still depend on API keys and usage-based billing, so teams need guardrails around quota limits, data residency, and access control. Grounding Lite’s MCP approach is promising because it lets organizations keep sensitive context in their own environment while still drawing on Maps data, but architects should be prepared to verify data flow diagrams and replication policies.
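Provider-side quotas and budget alerts remain the primary control; a client-side guard is a complementary layer. The sketch below, with a hypothetical `Guardrail` class and example field names, caps calls per day and forwards only allowlisted fields, which is one way to keep sensitive context inside the organization before a request reaches an external tool.

```python
from datetime import date
from typing import Optional


class QuotaExceeded(RuntimeError):
    """Raised when the local daily call budget is used up."""


class Guardrail:
    """Hypothetical client-side guard: caps calls per day and forwards only
    allowlisted fields, so other context stays inside the organization."""

    def __init__(self, daily_limit: int, allowed_fields: set):
        self.daily_limit = daily_limit
        self.allowed_fields = set(allowed_fields)
        self._day = date.today()
        self._count = 0

    def check(self, payload: dict, today: Optional[date] = None) -> dict:
        """Count one call against today's budget and return the filtered payload."""
        today = today or date.today()
        if today != self._day:  # a new day resets the counter
            self._day, self._count = today, 0
        if self._count >= self.daily_limit:
            raise QuotaExceeded(f"daily limit of {self.daily_limit} calls reached")
        self._count += 1
        return {k: v for k, v in payload.items() if k in self.allowed_fields}


if __name__ == "__main__":
    guard = Guardrail(daily_limit=1000, allowed_fields={"query", "lat", "lng"})
    outgoing = guard.check({"query": "nearest depot", "lat": 40.71, "lng": -74.0, "customer_id": "C-123"})
    print(outgoing)  # customer_id is dropped before the request leaves
```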

What we’re watching for early use cases and pilots

- Retail: conversational store locators that factor in inventory and live foot traffic.

- Mobility: explainable overlays and delivery ETAs that can adapt to weather and road closures.

- Real estate: neighborhood scouts that combine schools, transit, and price trends.

- Media: interactive news maps created from a reporter’s notes and public data sets.
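None of these pilots is specified in detail. For the real-estate case, a minimal hypothetical sketch of how a neighborhood scout might combine the three signals: min-max normalize each one and take a weighted sum. The `Neighborhood` fields, the weights, and the choice to treat slower price growth as better are all assumptions; the data would come from whatever school, transit, and pricing sources a team uses.

```python
from dataclasses import dataclass


@dataclass
class Neighborhood:
    name: str
    school_rating: float     # e.g. 0-10 from some school-rating source
    transit_minutes: float   # typical transit time to a chosen destination
    price_change_pct: float  # year-over-year price change


# Illustrative weights; a real scout would let users tune them.
WEIGHTS = {"schools": 0.4, "transit": 0.4, "price": 0.2}


def normalize(values: list, invert: bool = False) -> list:
    """Min-max scale to [0, 1]; invert when lower raw values are better."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0] * len(values)
    scaled = [(v - lo) / (hi - lo) for v in values]
    return [1.0 - s for s in scaled] if invert else scaled


def rank(neighborhoods: list) -> list:
    """Return neighborhood names ordered from best to worst weighted score."""
    schools = normalize([n.school_rating for n in neighborhoods])
    transit = normalize([n.transit_minutes for n in neighborhoods], invert=True)
    # Assumption: slower price growth is better (a buyer's point of view).
    price = normalize([n.price_change_pct for n in neighborhoods], invert=True)
    scored = [
        (WEIGHTS["schools"] * s + WEIGHTS["transit"] * t + WEIGHTS["price"] * p, n.name)
        for n, s, t, p in zip(neighborhoods, schools, transit, price)
    ]
    return [name for _, name in sorted(scored, reverse=True)]
```

Min-max scaling keeps signals with different units (ratings, minutes, percentages) on a common 0 to 1 scale, so the weights express relative importance directly.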

On the consumer side, Google is also embedding Gemini further into navigation with hands-free help, and piloting incident alerts and speed limit information in parts of India. These are signs that the same AI stack is threading through both developer and end-user experiences.

The result: with a natural-language builder, grounded reasoning, visual outputs, and an AI-readable documentation stack, Google Maps is turning the map itself into a programmable canvas. Where specialized geospatial work once took weeks, teams can now move from idea to interactive prototype in a single working session.